Self-Directed and Acted

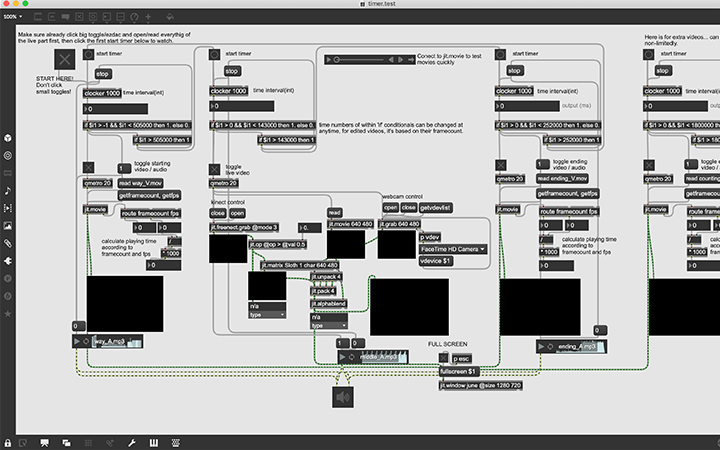

Self-Directed and Acted is a 15-minute film about the relationship among the screen, viewers and the self. I coded its cinematic-play system myself; the system plays edited videos and live videos automatically and in sequence.

Produced by: Baqi Ba

Link to full film: https://vimeo.com/359036523

Password: sdaa

Computational things

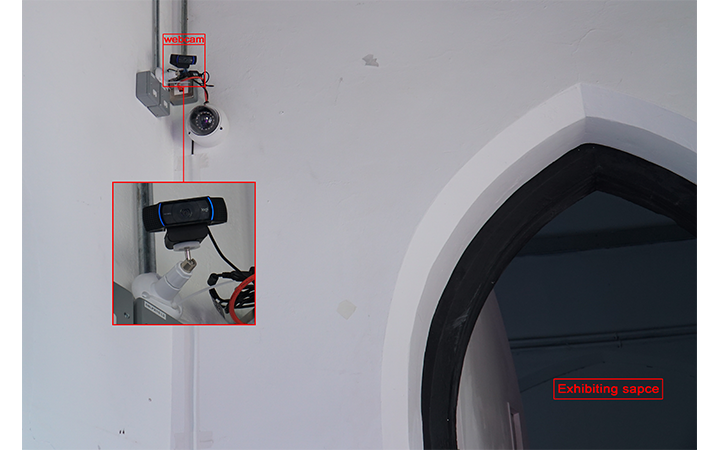

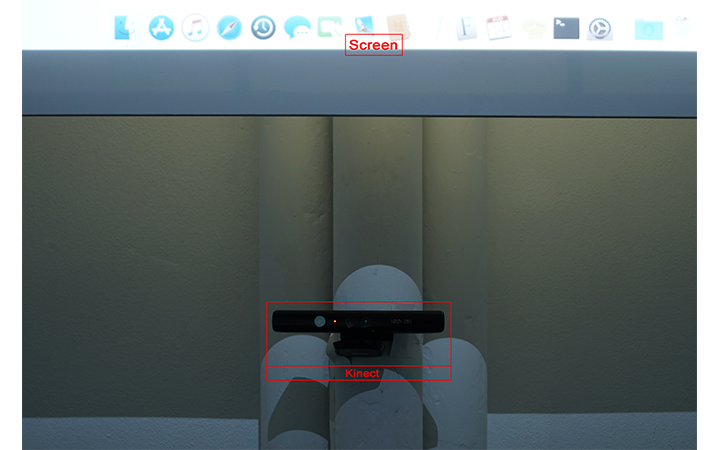

Using Max/MSP, I created a cinematic-play system that plays edited videos and live videos automatically and in sequence. A webcam in the outer exhibition space mimics a CCTV camera capturing images. In the inner exhibition space, a Kinect faces the audience and captures their movements.
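The Max/MSP patch itself is not reproduced here. The following is a minimal Python sketch of the sequencing idea only. It assumes a fixed running order in which every segment has a known duration, and it decides whether an edited clip or a live feed should be on screen at a given moment. `Segment`, `segment_at` and the segment names and durations are hypothetical illustrations, not the actual patch.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Segment:
    name: str        # hypothetical label for a part of the film
    source: str      # "edited" (pre-cut video file) or "live" (webcam/Kinect feed)
    duration: float  # seconds the segment stays on screen


def segment_at(playlist: List[Segment], t: float) -> Optional[Segment]:
    """Return the segment that should be on screen t seconds into the screening."""
    start = 0.0
    for seg in playlist:
        if start <= t < start + seg.duration:
            return seg
        start += seg.duration
    return None  # t is past the end of the playlist


# Hypothetical running order mixing edited and live segments.
playlist = [
    Segment("dream", "edited", 60.0),
    Segment("cctv_webcam", "live", 30.0),
    Segment("ending_light", "edited", 45.0),
]

for t in (0.0, 70.0, 100.0, 200.0):
    seg = segment_at(playlist, t)
    print(t, seg.name if seg else "end of film")
```

A real patch would switch between a movie player and a camera input whenever `segment_at` returns a different segment. Keeping the running order as data makes it easy to re-edit the sequence without rewiring the playback logic.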

Photo of the exhibition space - 01

Photo of the exhibition space - 02

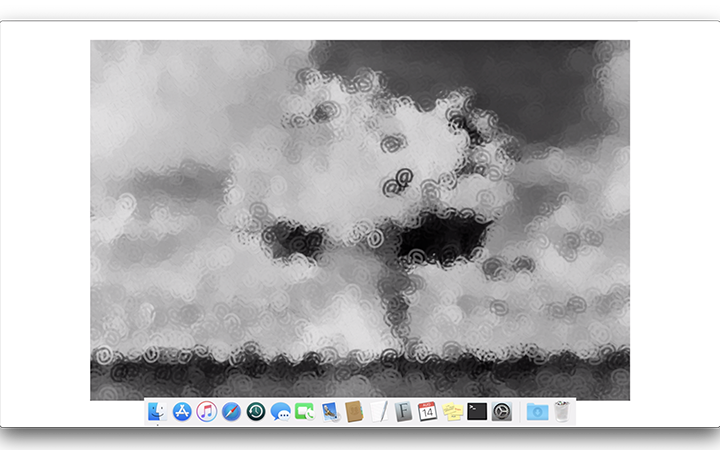

This animation was generated with Processing, using the character @ as a brush to draw a picture of a nuclear bomb at Bikini Atoll.
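The Processing sketch is not included in this write-up. As a rough Python illustration of the "@ as a brush" idea, assume the source picture is a small grid of grayscale values. The brush below visits pixels row by row and stamps "@" wherever a visited pixel is dark, so each frame reveals a little more of the image. The threshold, the raster order and the names `at_brush_frame` and `image` are assumptions for illustration only.

```python
def at_brush_frame(gray, frame, threshold=128):
    """Return the drawing after `frame` brush steps.

    `gray` is a 2D list of brightness values (0 = black, 255 = white).
    The brush visits pixels row by row; each visited dark pixel becomes '@'.
    """
    width = len(gray[0])
    lines = []
    for y, row in enumerate(gray):
        chars = []
        for x, value in enumerate(row):
            visited = y * width + x < frame
            chars.append("@" if visited and value < threshold else " ")
        lines.append("".join(chars))
    return "\n".join(lines)


# Tiny hypothetical "image": a dark mushroom-cloud-like shape.
image = [
    [255, 0, 0, 0, 255],
    [0, 0, 0, 0, 0],
    [255, 255, 0, 255, 255],
    [255, 255, 0, 255, 255],
]

total_steps = len(image) * len(image[0])
for frame in range(0, total_steps + 1, 5):
    print(at_brush_frame(image, frame))
    print("-" * 5)
```

In Processing, the same loop would typically draw the "@" glyph at each dark pixel's screen position rather than building strings.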

This color was generated from a piece of music in Max/MSP and then added onto footage I filmed myself.
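The write-up does not say which musical feature drives the color. The Python sketch below assumes one simple possibility: the loudness (RMS) of each block of audio is mapped to a hue, from blue when quiet to red when loud. The resulting color is then blended onto a footage pixel. The mapping, the blend factor and the function names are hypothetical and stand in for whatever the actual Max/MSP patch does.

```python
import colorsys
import math


def block_rms(samples):
    """Root-mean-square loudness of one block of audio samples in [-1, 1]."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def loudness_to_rgb(rms, max_rms=1.0):
    """Map loudness to a hue: quiet -> blue, loud -> red (an arbitrary choice)."""
    level = min(rms / max_rms, 1.0)
    hue = 0.66 * (1.0 - level)
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, 1.0)
    return int(r * 255), int(g * 255), int(b * 255)


def tint(pixel, color, alpha=0.5):
    """Blend a footage pixel (R, G, B) towards the generated color."""
    return tuple(round((1 - alpha) * p + alpha * c) for p, c in zip(pixel, color))


# Hypothetical audio: a quiet block followed by a loud one.
quiet = [0.05 * math.sin(i / 10) for i in range(512)]
loud = [0.9 * math.sin(i / 10) for i in range(512)]
for block in (quiet, loud):
    color = loudness_to_rgb(block_rms(block), max_rms=0.7)
    print(color, tint((120, 120, 120), color))
```

Applying `tint` to every pixel of each video frame, with a new color per audio block, would give footage whose color shifts with the music.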

Where did my initial idea come from?

1. For me, "computational" means "live".

2. I thought about the art forms that came after photography. Both photography and film can be copied easily, and both are based on time and space.

3. Multi-layer interactions.

Combining the ideas above with my own background, I decided to program a cinematic-play system. It plays edited videos and live videos in sequence, and it transfers the actual interactors (real people, space and cameras) into digital images. I also hoped the interactions could become part of the film's narrative. Screened together with the edited footage, they could then release their own power.

Content and cinematic language

The main topic of this work is the relationship among the screen, viewers and the self. All of the content comes from my own experience of using screens.

1. Screen as a boundary between reality and myself

2. Screen as another boundary between the virtual/data world and reality

3. Screen as a source of physical light

4. Screen as an interface while viewers watch the film

All of these features of the screen are present at the same time whenever a screen is on.

The different cinematic languages in this film reflect the changing relationship among the screen, viewers and me. Below is a description of the main footage, following the timeline.

1. Starting from a dream: there are many kinds of dreams, and this one foreshadows a chaotic relationship. There are two camera viewpoints in this footage. One is a screen recording. The other is an unknown camera, which appears again later in the folder sent by that emoji.

2. POV (point of view): viewers share the same perspective as my camera. The editing follows the vlog form, which belongs to the internet/YouTube stage.

3. After the internet is disconnected, the screen recording appears again, and the relationship between viewers and the image becomes roughly reversed. The sounds I used come from protests. All of the visual animation is made from internet material and the computer desktop. Some videos were recorded in real life, but they were sent through the internet/Bluetooth/AirDrop… Thus, I become part of the inner screen. The screen-recording viewpoint is objective for everybody. It lacks the emotion of traditional cinematic visual language, which keeps a distance between viewers and the screen.

4. Live footage from the webcam: the camera angle mimics a CCTV angle. The low-quality webcam image aims to show the power relationship between viewers and the screen. It also shows the power relationship between the space inside the screen and the space outside it. The delay from the unsmart Kinect is aiming to show

5. At the end, the screen becomes purely physical light with changing colors. This light affects the whole exhibition space outside the screen, and ideally viewers ignore the content of the image. This reflects my attitude towards the development of technology: "we cannot control it, but we can dance with it". The video game footage, the 8-bit game music and the amusement park footage I filmed are also related to this attitude.

Screenshot of the code

Further development

1. In the code, the real-time clock count and the frame count are currently separate. This means that if I want to play videos faster, I need to change both counts separately.

2. The film contains too much information, so as a whole it feels unfocused. I still have no clear idea how to fix this.
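For the first point, one common approach is to keep a single master clock and derive the frame index from it, so that one speed parameter changes both at once. The Python sketch below assumes that approach and a fixed frame rate. `PlaybackClock`, `film_time` and the 25 fps default are hypothetical names and values, not taken from the existing Max/MSP patch.

```python
class PlaybackClock:
    """One master clock: frame numbers are derived from film time, never counted separately."""

    def __init__(self, fps=25.0, speed=1.0):
        self.fps = fps            # frame rate of the source videos (assumed)
        self.speed = speed        # 1.0 = normal speed, 2.0 = twice as fast
        self.film_time = 0.0      # seconds of film time elapsed

    def tick(self, wall_dt):
        """Advance by `wall_dt` real seconds and return the current frame index."""
        self.film_time += wall_dt * self.speed
        return self.frame()

    def frame(self):
        return int(self.film_time * self.fps)


clock = PlaybackClock(fps=25.0, speed=2.0)
for _ in range(3):
    frame = clock.tick(0.5)
    print(clock.film_time, frame)
```

If segment cue points are also expressed in `film_time` seconds, changing `speed` alone would make both the videos and the cues run faster. This avoids editing two counters by hand.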

References

Cycling74.com. (2019). Tutorial: Jitter Recipes: Book 1, Recipes 0-12 | Cycling '74. [online] Available at: https://cycling74.com/tutorials/jitter-recipes-book-1 [Accessed 15 Sep. 2019].

Dmsp.digital.eca.ed.ac.uk. (2019). Audio Visual Instrument/Control System – Colour Tracking | AudioVisual Ensemble. [online] Available at: https://dmsp.digital.eca.ed.ac.uk/blog/ave2014/2014/02/28/audio-visual-instrumentcontrol-system-colour-tracking/ [Accessed 15 Sep. 2019].

The Academy for Systems Change. (2019). Dancing With Systems. [online] Available at: http://donellameadows.org/archives/dancing-with-systems/ [Accessed 15 Sep. 2019].

En.wikipedia.org. (2019). Cybernetics. [online] Available at: https://en.wikipedia.org/wiki/Cybernetics [Accessed 15 Sep. 2019].