If You Could Never Forget a Dream, Would You Still Remember Me?

Does the limitless scope of contemporary archives create a culture in which all history is subjected to the now? Are networked images subject to latent memory once they have been dispossessed? In this artwork, I create a theoretical archive to explore these questions and more

produced by: Alexander Velderman

Introduction

In this work, I explored how archives have shifted from being limited, selective resources to being infinite troves of passively obtained information. Therein, I considered how datafied archives consisting of largely meaningless images can be reinterpreted to generate additional value - an afterlife for the archive. Using the idea that light itself holds an image’s memory, I explored if this idea is transferable in a computational setting by creating meshes and, by doing so, re-interpreting images into a new form.

Concept and background research

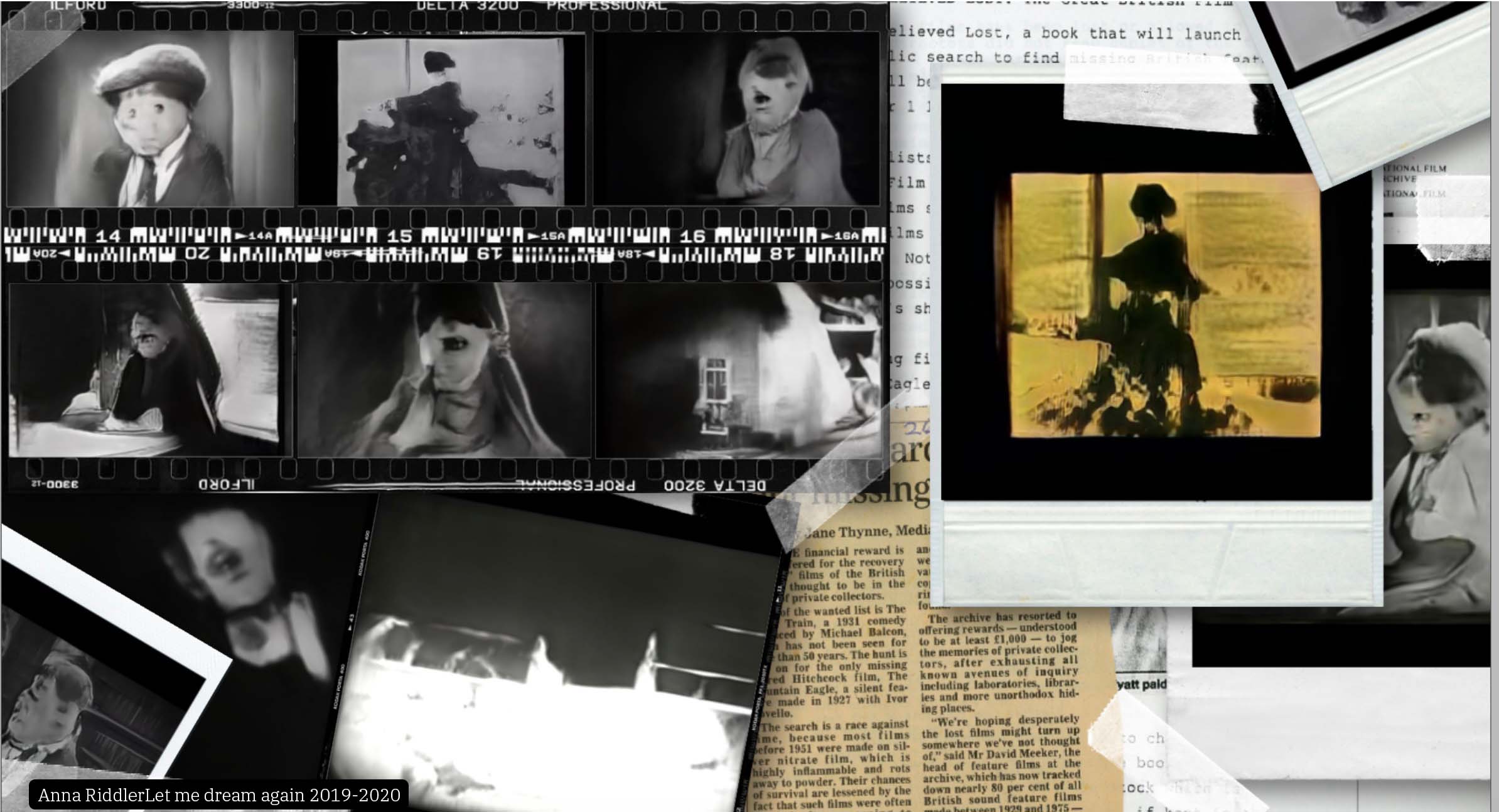

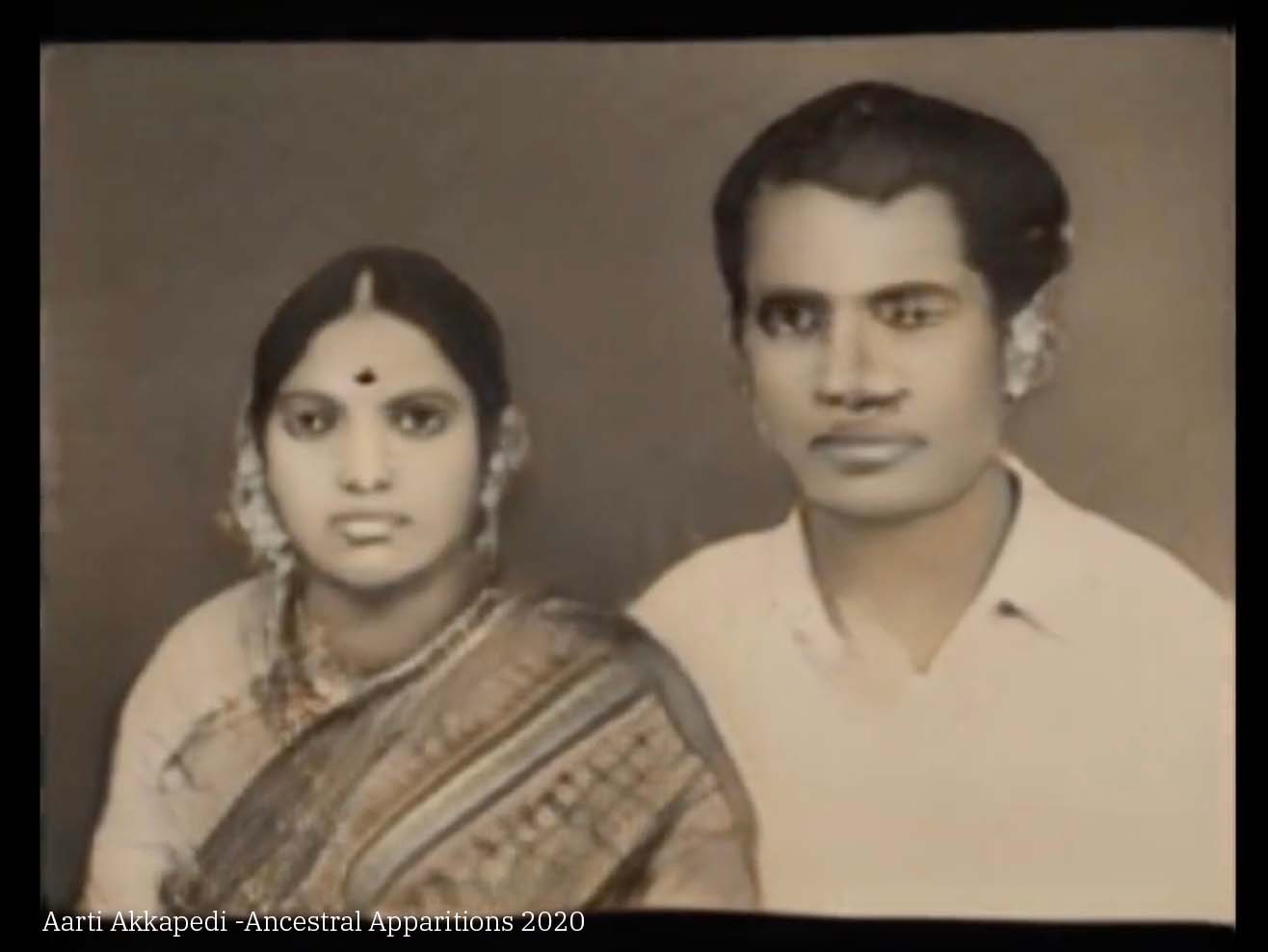

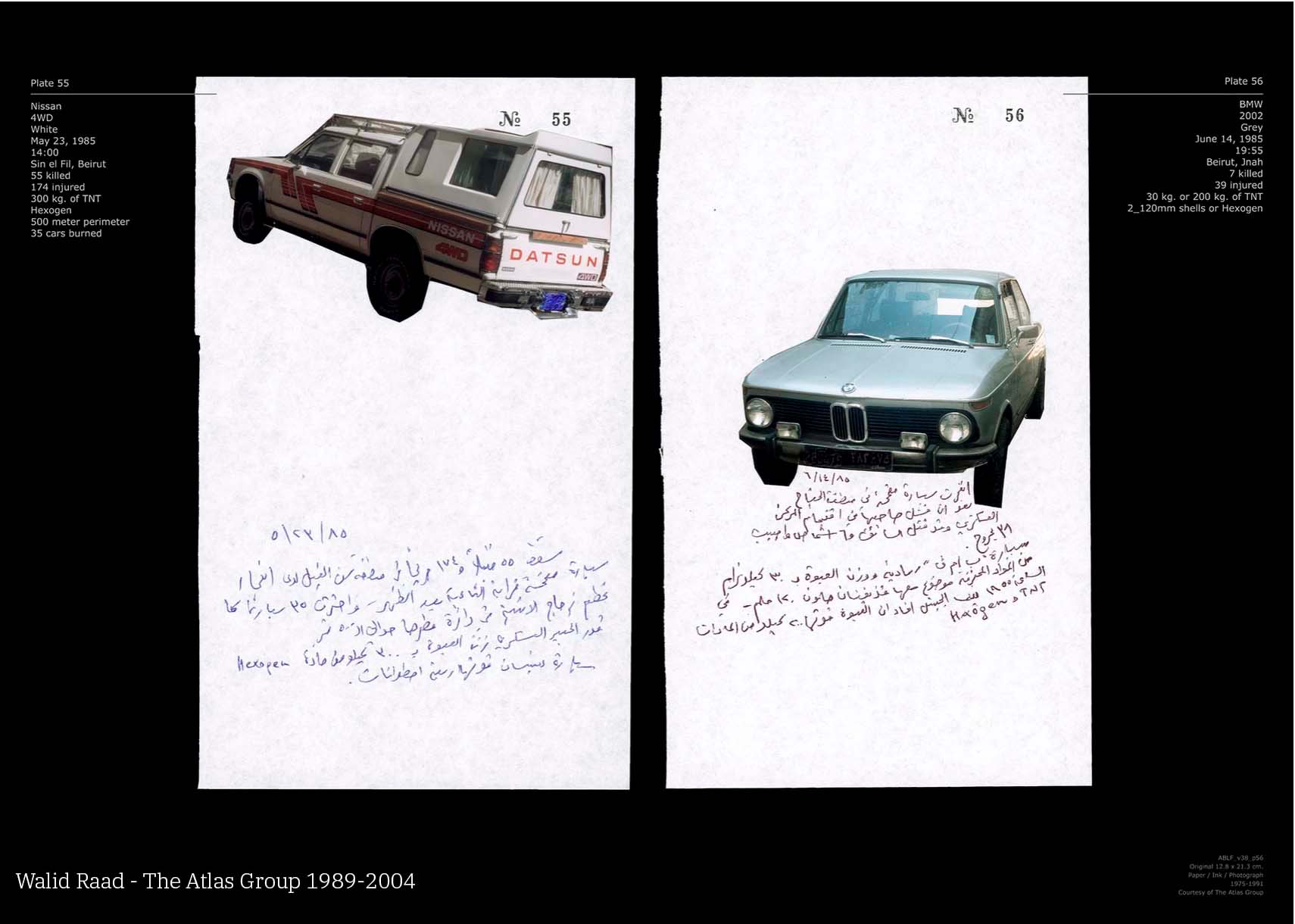

Archives have been well explored in relation to art. In creating these pieces, I was inspired by Aarti Akkapedi’s Ancestral Apparitions, Anna Riddler’s Let me Dream Again, Suzy Lake’s book Performing the Archive, and Walid Raad’s The Atlas Group. As a whole, like many other works dealing with archives and creating fictional archives, each of these works deal with loss, latent memories, and a negotiation of the space between an official record and our divergent perspectives. In my work, I wanted to set out a different goal: to examine the ontological nature of a digital archive. In doing so, I chose to limit the scope of the archive in order to juxtapose the tension between what unlimited archives entail for images and their effects on individuals and society. Both highly malleable in substance but often misrepresented as objective, archives and data have become synonymous concepts, as the cost of data storage has become increasingly inexpensive as Viktor Mayer-Schönberger points out in his book Delete: The Virtue of Forgetting in the Digital Age. Additionally, through my research, I considered the relationships that we have with digital images. Specifically, drawing from Stacey Pitsillides et al.’s Museums of the Self and Digital Death: An Emerging Curatorial Dilemma for Digital Heritage, I was interested in examining how memories are embedded in images through their loose relationship with indexicality . From this foundation, my work considers how we should handle archives of the past, present, and future. I contrasted this with Hal Foster’s An Archival Impulse to examine how artists have dealt with works of the archive in their work through subject and materiality. Therein, I compared this to how the landscape of the archive and what is conveyed through archives has changed in artwork since data-driven archives have become the dominant form of information storage.

What happens to a satellite image of oil fields once the necessary data is extracted? What about the hundreds of thousands of drawings with unattributed origins locked away in the deep corners of museums? Ultimately, although their point of origin is different, they are eventually added to the endless stream of vaguely categorized archives of things we can’t quite decide to throw out yet.

In creating this project, I thought that it was initially important to use vernacular images, the kind of images that really are representative of the massive unforgetting archives that I talk about. Initially drawn to surveillance as a topic within my scope of research, I decided to record and try to make my own datasets. Quickly in my experiments, I realized that the data that I draw from must be unique, generative, and nearly infinitely producible, while at the same time being unidentifiable.

This led me to work with artificial intelligence, and generative means. For my datasets, I thought that it was important to use images that were not your everyday labeled gun detection dataset or labelled traffic. I ended up deciding on images that had little to no value other than their function at the time of being archived. In this end, this included X-Rays rays of COVID-19 lungs, satellite data of oil tanks for monitoring global oil supply, images produced by the curiosity of the Curiosity Mars rover, Anonymous Figure Studies, drawings and sketches from the Rijksmuseum, Archival Portraits from early photography from the Rijksmuseum, and early Architectural photos for either documenting architecture or as entertainment from the Rijksmuseum.

From the datasets used in my work, it is clear that I used both contemporary and historical archives. This was a purposeful decision on my part, as I thought it would be important to show continuity between historical and contemporary images with regards to our archival practices. For example, despite being collected well before the digital era, the historical image datasets used in this work hold little value other than their sheer mass and have been made available expressly for their use in new contexts. Collectively, this promotes the idea that data is infinitely extractable, malleable, and transformational and that this hoarding mentality has predated data itself.

Technical

For this project, since I generated my own, unique images, I used Nvidia’s StyleGan2 Ada Pytorch implementation. I chose this implementation of an image generating GAN and StyleGan, as it is far faster and requires smaller datasets. On Average, I trained each dataset for approximately 24 hours or approximately 1000 epochs. The datasets that were smaller in size it allowed me to be more selective in what I chose to use as my training data. For example, the generated satellite imagery of oil tanks was trained on a dataset of only about 2000 images, and the figures on a dataset of approximately 1500 images that I selected out of wider datasets. When Generating the images, I opted to generate images within a seed range from 1-2000 and curate from there, rather than using latent space, as I was not making any kind of transformative a ->b image types or using video. For each dataset, I adjusted the truncation. In essence, this means that I adjusted how much the AI model interprets what it is generating versus what it was trained on. For highly repetitive datasets, such as the Mars dataset, which primarily contained images of the rover’s wheel, I opted to set the truncation highest to give the model more space to generate novel imagery. In generating the images used in my piece, I followed the Nvidia Labs guide on their GitHub.

One thing to note here is that a lot of my time was spent troubleshooting incompatibilities between different versions of helpers and programs needed to complete my work, which eventually led me to learning to use Anaconda and virtual environments in python or venv to appropriately up or downgrade different packages as needed.

When it came to generating my metadata, I used the Metropolitan Museum of Art’s open database CSV index as a way to train an AI model on names, titles and item numbers. I originally looked at using Open AI’s GPT-2 model. However, upon experimentation, I found that it often overfit results or was repetitive despite using appropriately sized datasets. After trying charRNN and then building a very basic generator with Pytorch I landed on Markov chains. I felt that they were appropriate as a kind of proto machine learning just based off of next character probability. The results felt a lot more generative, playful and less directly like names or titles of pieces. For the chains I used Daniel Shiffmans p5js markov chain example plugging in my own values as needed to achieve the result I wanted.

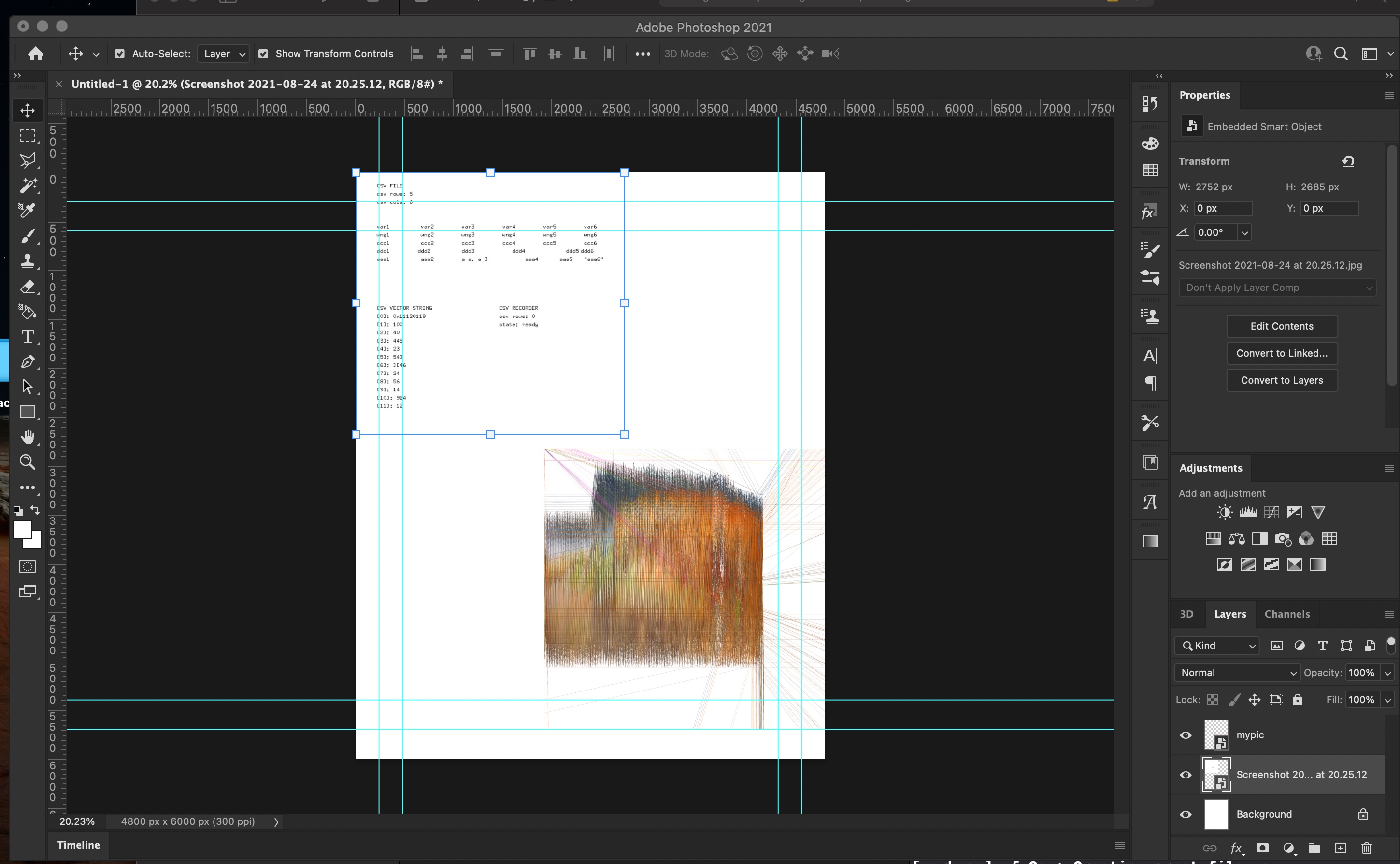

The mesh (or streaky image) was generated in OpenFrameworks by modifying the mesh luminance example and “breaking it” inorder to continue to extrapolate data from it. Because my project was about a possible re-interpretation or extraction of data, I played around with a few ways to re-represent my images that I had generated. This included making depth maps; labeling images via object detection, such as YOLO; using readouts from computer vision; and using slitscanning via FFMPEG. In the end, the simplest mesh that did not stop when the pixel data stopped ended up producing the most satisfying result. Formally, the mesh was the most interesting visual result. This also produced the most conceptually interesting result, as the mesh was unintentionally generating artifacts which was the point of my re-interpretation of my images.

I then used the ofxQuantizer addon to draw the color bar below each image. I played around with the addon slightly to get the result I wanted as doing it outside of the addon tended to break things- there are a few versions of the addon, I used the original as it worked the best/most consistently.

Below: Design process and snapshots of dataset

Future development

For me, it was important that my work consisted of physical artifacts that I could use to create a curated archive. In terms of development, this is a project that I actively would explore further if I were to do the MFA. Outside of academia, I could see myself exploring this topic further in my artistic practise over the next few years. I would break down my expansion into two parts: the creation of the artifacts and the generation. I think for the artifact creation, I would explore creating these prints with various, traditional printmaking methods making toned photogravure prints or platinum/darkroom printing as a way to incorporate more historical and archival elements into my work.

Technically, I think I could have developed the project further by modifying object labeling, such as Open AI’s clip image labeling to read generated images and label them in order to connect my titles or descriptions a bit more closely to my metadata and expand upon my categories of metadata. Additionally, I think that I could iterate on what data is pulled from images after they are generated as meshes.

To further develop my work, I think potentially adding more subjects and creating a book or volume for each dataset might lead to additional, promising results that build upon those achieved in this project.

Self evaluation

For me, it was important that my work consisted of physical artifacts that I could use to create a curated archive. In terms of development, this is a project that I actively would explore further if I were to do the MFA. Outside of academia, I could see myself exploring this topic further in my artistic practise over the next few years. I would break down my expansion into two parts: the creation of the artifacts and the generation. I think for the artifact creation, I would explore creating these prints with various, traditional printmaking methods making toned photogravure prints or platinum/darkroom printing as a way to incorporate more historical and archival elements into my work.

Technically, I think I could have developed the project further by modifying object labeling, such as Open AI’s clip image labeling to read generated images and label them in order to connect my titles or descriptions a bit more closely to my metadata and expand upon my categories of metadata. Additionally, I think that I could iterate on what data is pulled from images after they are generated as meshes.

To further develop my work, I think potentially adding more subjects and creating a book or volume for each dataset might lead to additional, promising results that build upon those achieved in this project.

References

Aarti Akkapedi: https://aarati.me/#aa

Anna Riddler: http://annaridler.com/let-me-dream-again

Suzy Lake: https://www.suzylake.ca/performing-an-archive#1

Walid Raad: https://www.theatlasgroup1989.org/

Foster, Hal. "An Archival Impulse." October, vol. 110, 2004, pp. 3-22. JSTOR, www.jstor.org/stable/3397555.

Mayer-Schönberger Viktor. (2011). Delete: The virtue of forgetting in the digital age. Princeton University Press.

Pitsillides, Stacey, et al. “Museums of the Self and Digital Death: An Emerging Curatorial Dilemma for Digital Heritage.” Goldsmiths Design Department, 2012. 10.4324/9780203112984.

Markov Chain: https://editor.p5js.org/codingtrain/sketches/AAgqWiJAW

StyleGan2: https://github.com/NVlabs/stylegan2-ada-pytorch

Openframeworks Book: https://openframeworks.cc/ofBook/chapters/generativemesh.html

Color Quantizer: https://github.com/mantissa/ofxColorQuantizer

Datasets:

Rijksmuseum (Portraits,Buidlings and Figures): https://www.kaggle.com/lgmoneda/rijksmuseum?fbclid=IwAR1gFt5aHApOA_OODPrXuQogjZruSIoeS5qLryHj4GxtDz0pN1c6xP9Hpsk

Oil Storage tanks: https://www.kaggle.com/towardsentropy/oil-storage-tanks?fbclid=IwAR3NIjfiMVy4pgNqS4uZlmeFzFUX1uaNvsTtzq99PaTzxcpeZ8AZbBoXhBI

Nasa Mars Rover: https://zenodo.org/record/1049137#.YT2xKp5uc-R