Methylphenidate is the name of the medication I take for ADHD. I began taking it last year and I’m still getting used to its effects. Overall it’s had a really positive effect on my life, but it has also changed the way I feel in my body.

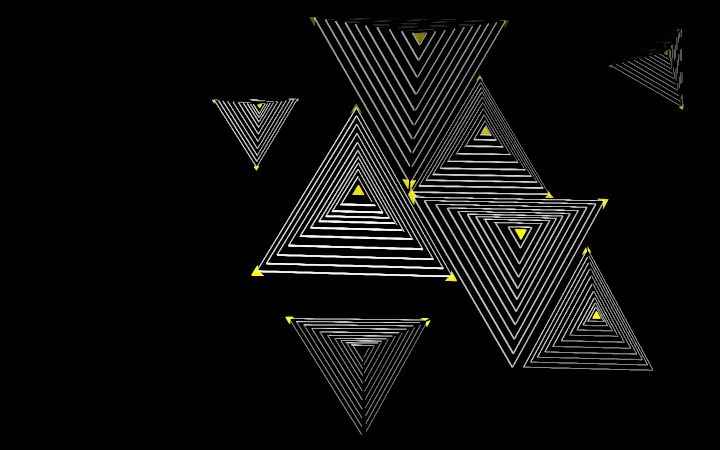

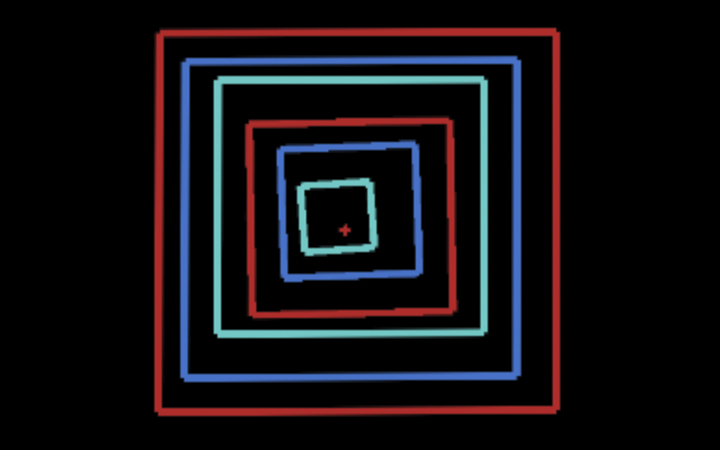

During the semester we covered techniques for manipulating video and image input, and I wanted to use these methods to illustrate these feelings of hyper-awareness and loss of self-control.

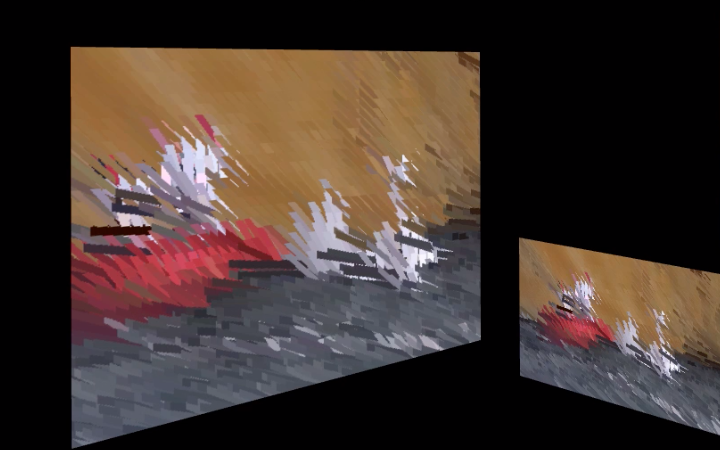

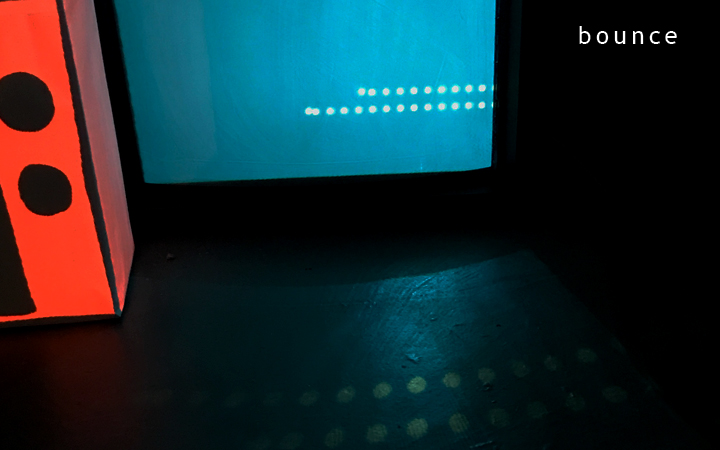

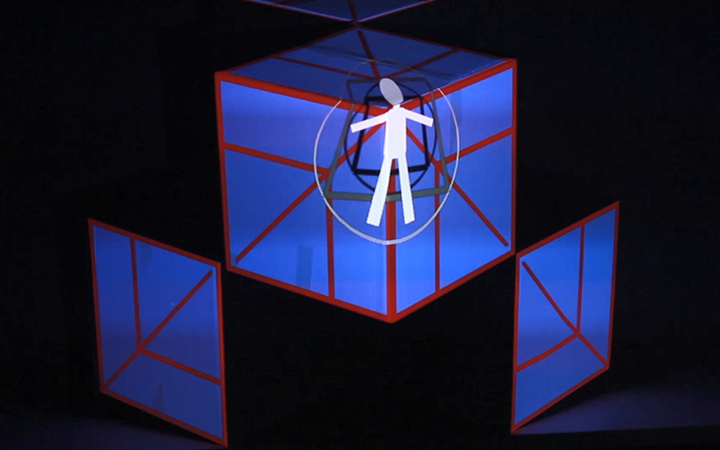

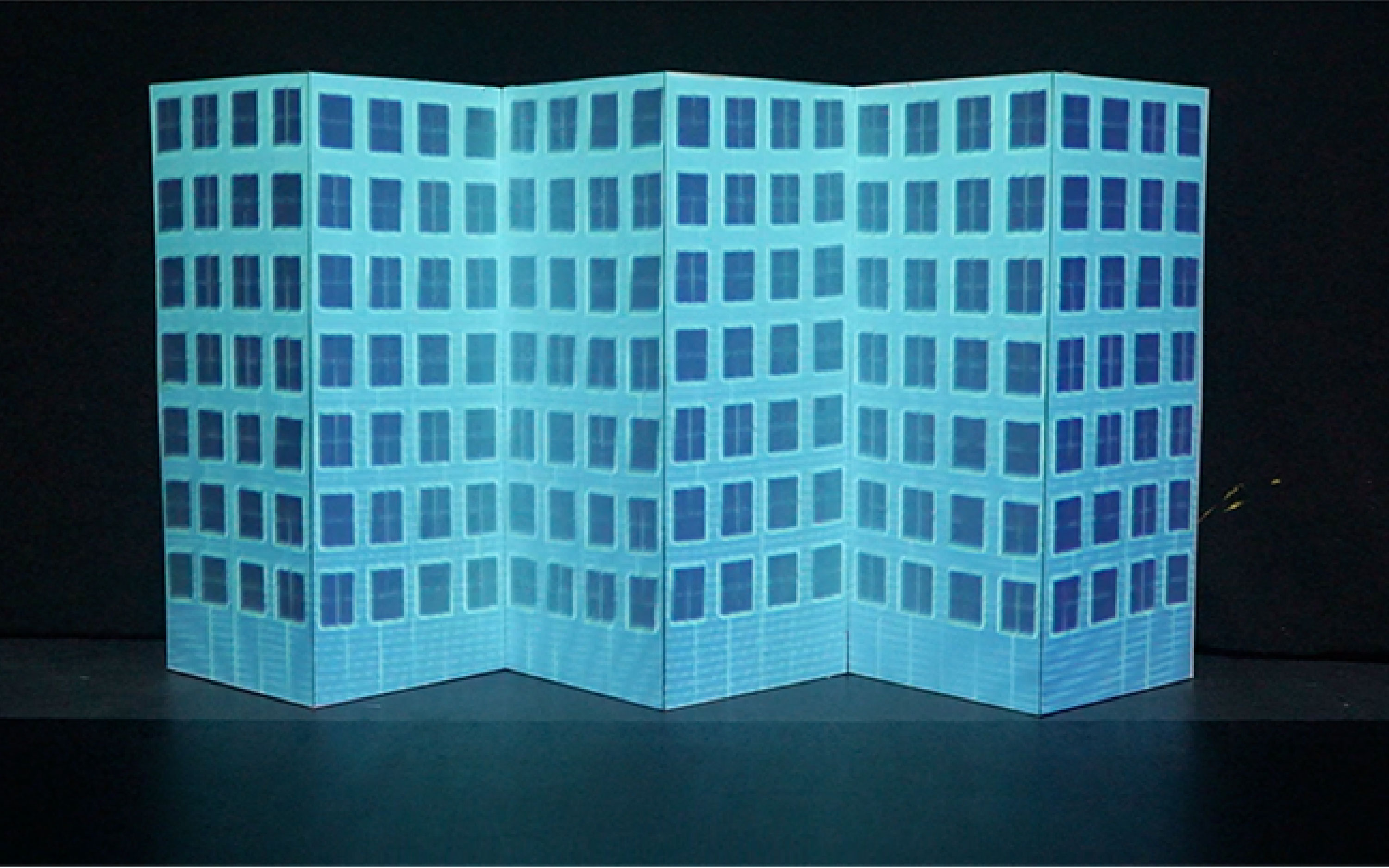

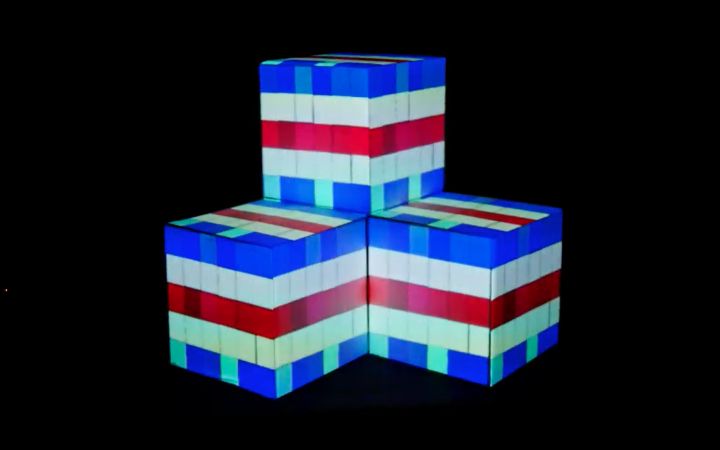

I filmed my source footage before I began experimenting with code. From the outset I thought I might want to work with identifying pixel colour and brightness values, so I filmed myself in a blue, a red and a black outfit against a white background, to create clear contrast for pixel sorting.

-- Scenes --

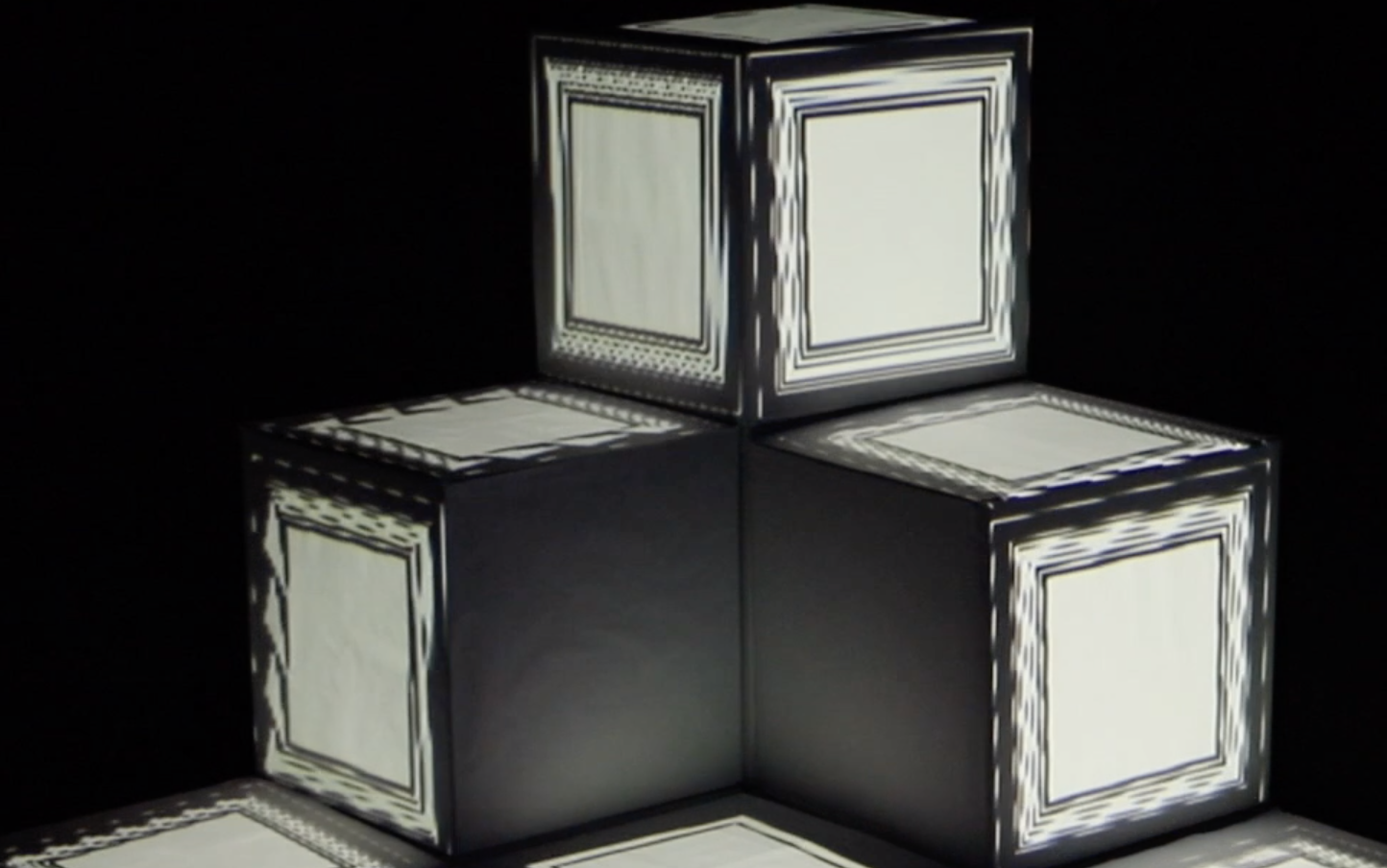

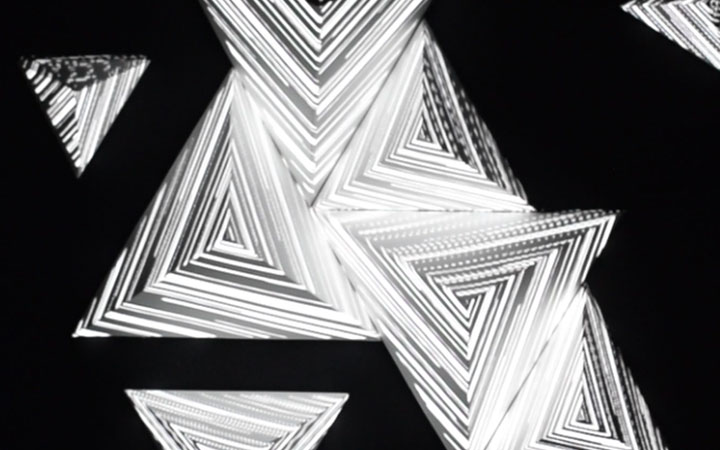

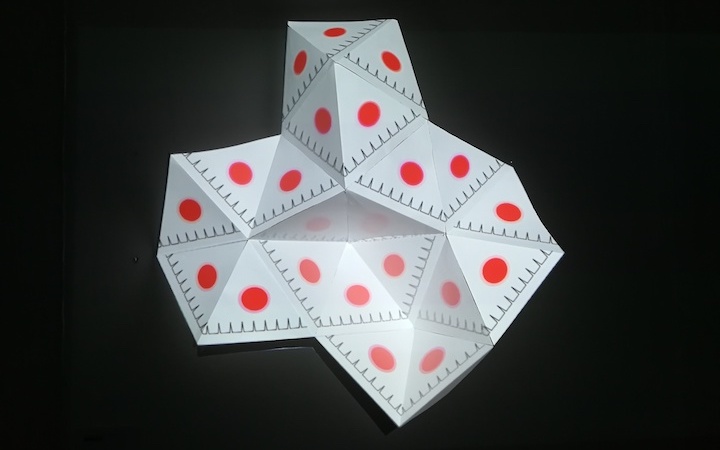

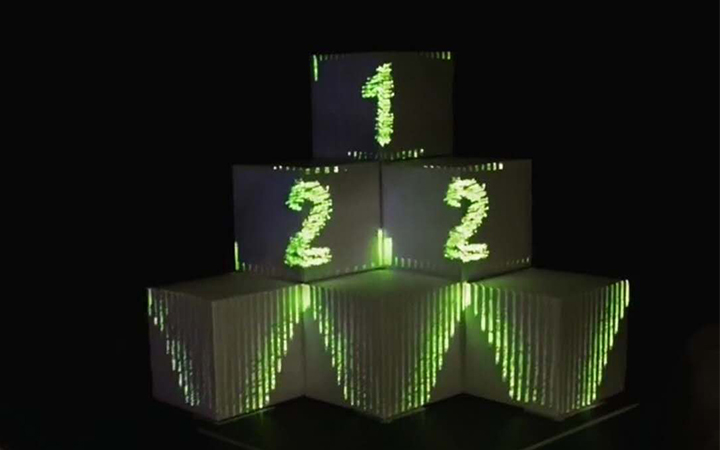

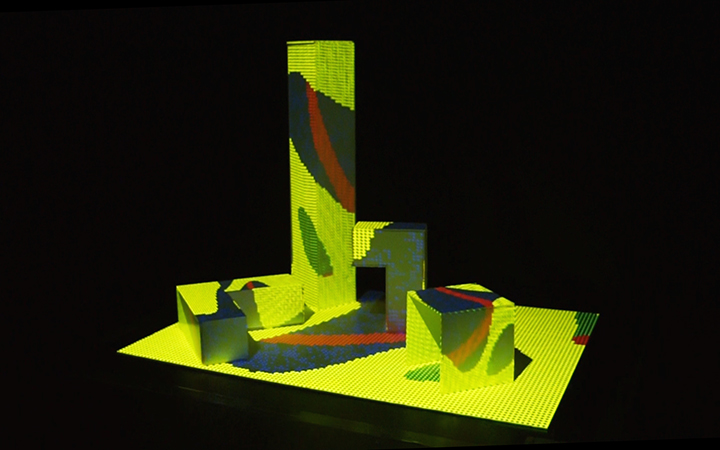

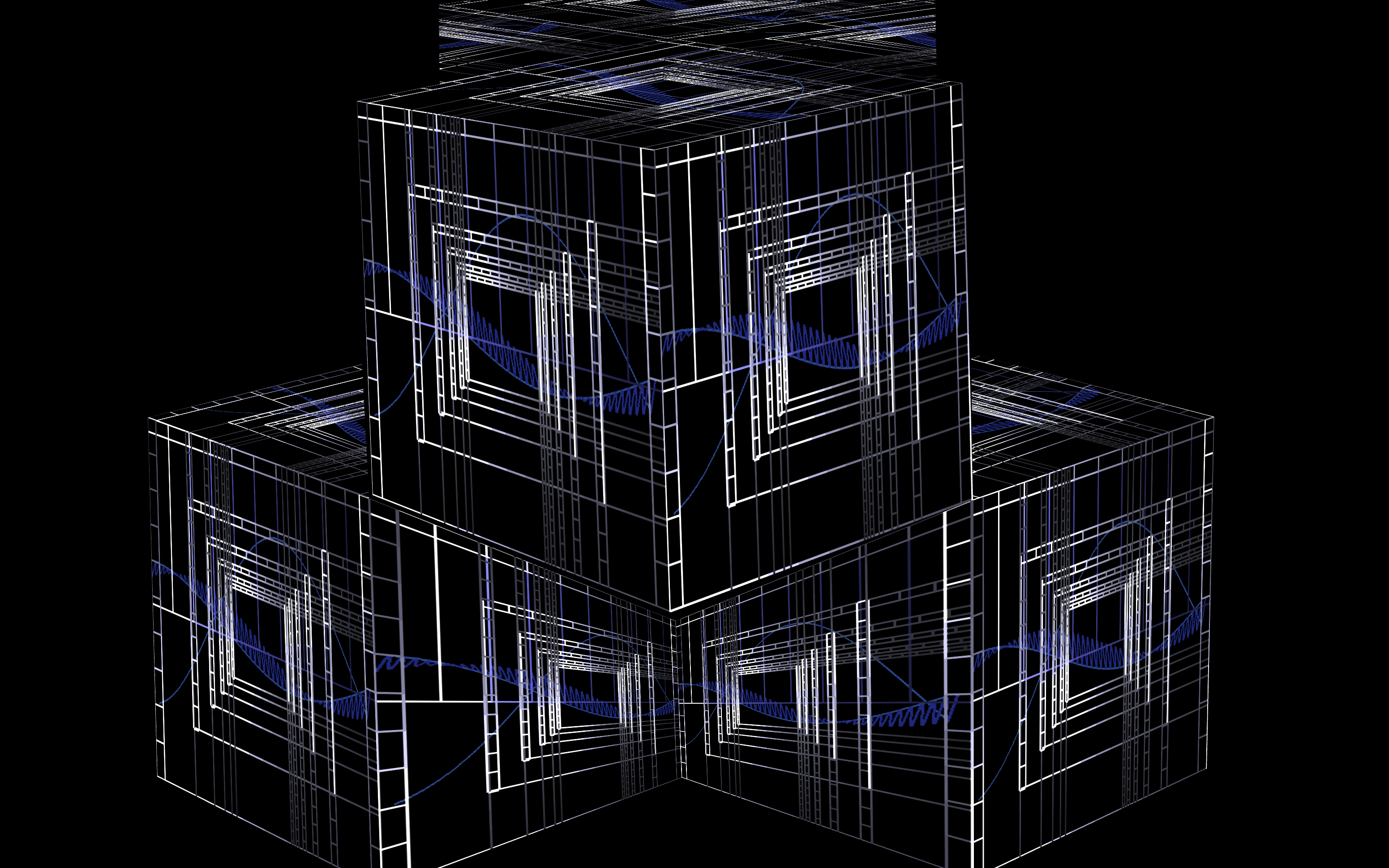

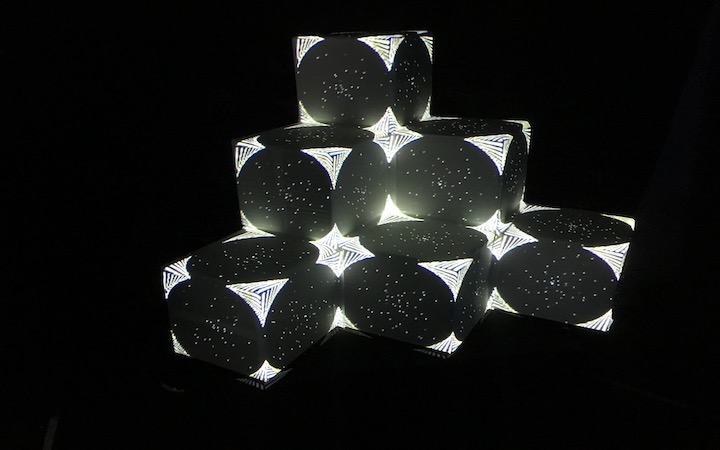

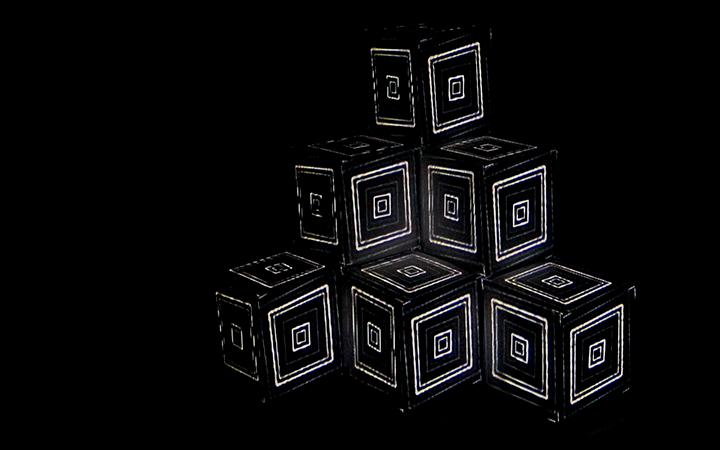

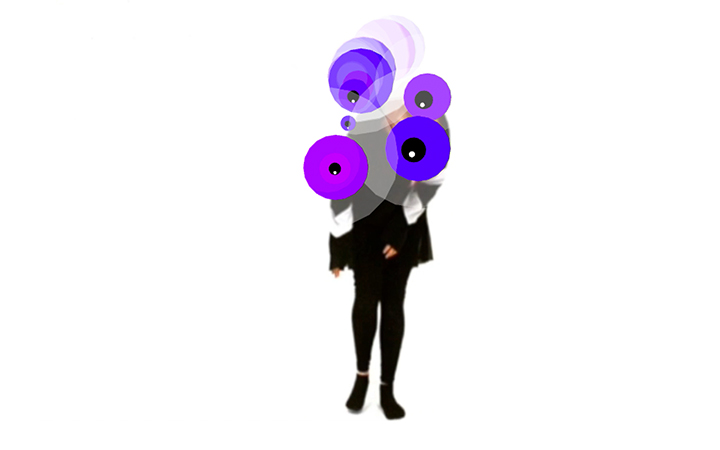

Two scenes are based on in-class examples. The first is the pixel grid, which draws a grid of circles, each taking its colour from the pixel it sits on top of, with its size set by a scale variable controlled by that pixel's brightness. I adapted this example to run from a piece of video instead of webcam footage, and instead of scaling circle size by brightness, I tied it to a variable that increases steadily in the update function. The figure is bouncing around excitedly, and as they become more and more active, their form becomes more and more pixelated. I liked this effect because it looks like a cross between a video game sprite and something that is boiling.
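The grid sampling described above can be sketched in plain C++. This is a minimal sketch under stated assumptions, not the code used in the piece. A hypothetical `Frame` struct stands in for openFrameworks' pixel buffer, so the sampling logic can be read on its own. The grid step, the growth rate, and the use of the largest channel as "brightness" are illustrative choices. Drawing is left out: in an openFrameworks app each `Circle` would be passed to something like `ofSetColor` and `ofDrawCircle`.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for one video frame's pixels (like ofPixels):
// tightly packed 8-bit RGB, row-major, width * height * 3 bytes.
struct Frame {
    int width = 0;
    int height = 0;
    std::vector<std::uint8_t> rgb;
};

// One circle to draw: centre, radius and the colour sampled beneath it.
struct Circle {
    float x, y, radius;
    std::uint8_t r, g, b;
};

// Sample the frame on a regular grid, one circle per cell.
// sizeByBrightness = true mimics the in-class version (radius scaled by the
// brightness of the sampled pixel, here taken as its largest channel);
// false uses `scale` directly, as in the adapted scene.
std::vector<Circle> pixelGrid(const Frame& frame, int step, float scale,
                              bool sizeByBrightness) {
    assert(step > 0);
    assert(frame.rgb.size() ==
           static_cast<std::size_t>(frame.width) * frame.height * 3);
    std::vector<Circle> circles;
    for (int y = step / 2; y < frame.height; y += step) {
        for (int x = step / 2; x < frame.width; x += step) {
            std::size_t i = (static_cast<std::size_t>(y) * frame.width + x) * 3;
            std::uint8_t r = frame.rgb[i], g = frame.rgb[i + 1], b = frame.rgb[i + 2];
            float radius = scale;
            if (sizeByBrightness) {
                float brightness = std::max({r, g, b}); // 0..255
                radius = scale * brightness / 255.0f;
            }
            circles.push_back({static_cast<float>(x), static_cast<float>(y),
                               radius, r, g, b});
        }
    }
    return circles;
}

// Stand-in for the sketch's update(): the circle scale grows a little
// every frame (the rate is a hypothetical value).
struct GridState {
    float scale = 1.0f;
    float growthPerFrame = 0.05f;
    void update() { scale += growthPerFrame; }
};
```

Each frame, the app would call `update()` and then `pixelGrid(frame, step, state.scale, false)`. The grid spacing stays fixed while the circles keep growing, so each cell's colour spreads over a larger and larger area.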

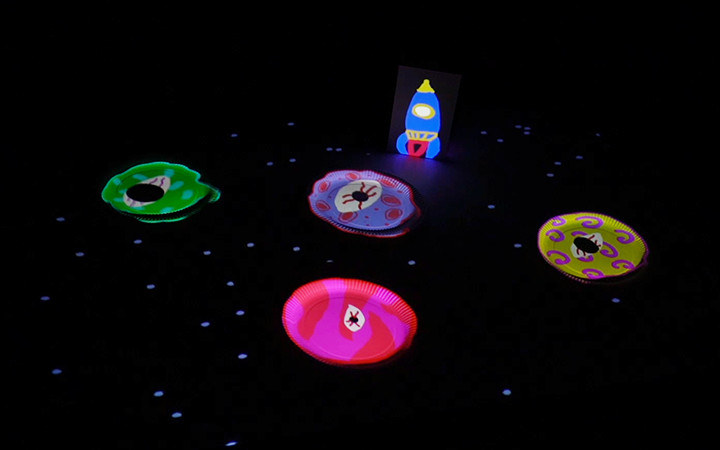

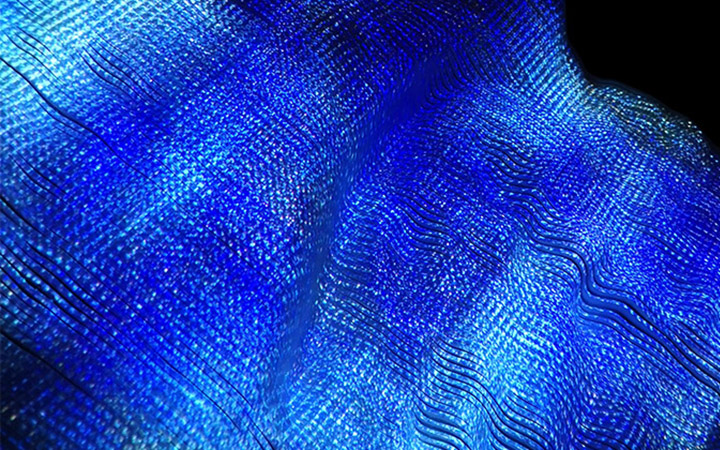

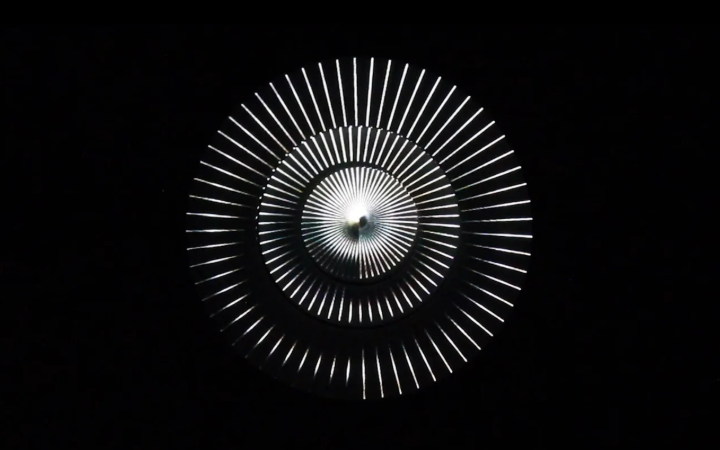

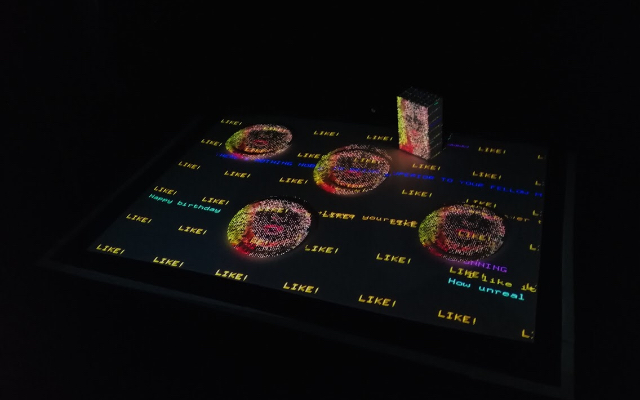

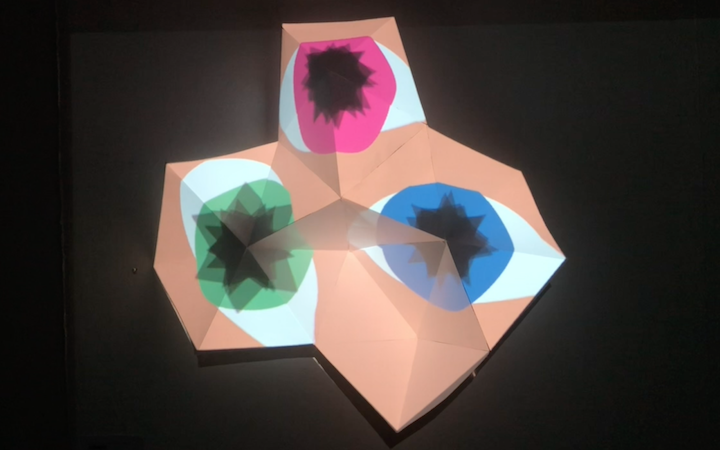

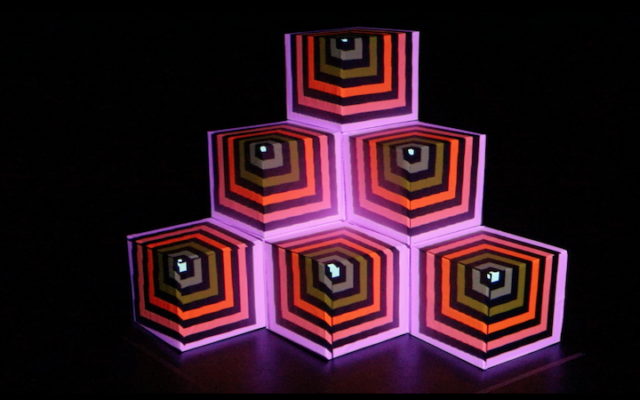

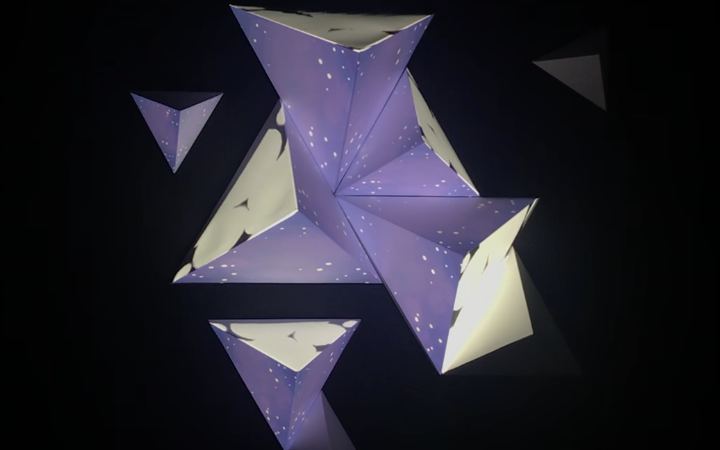

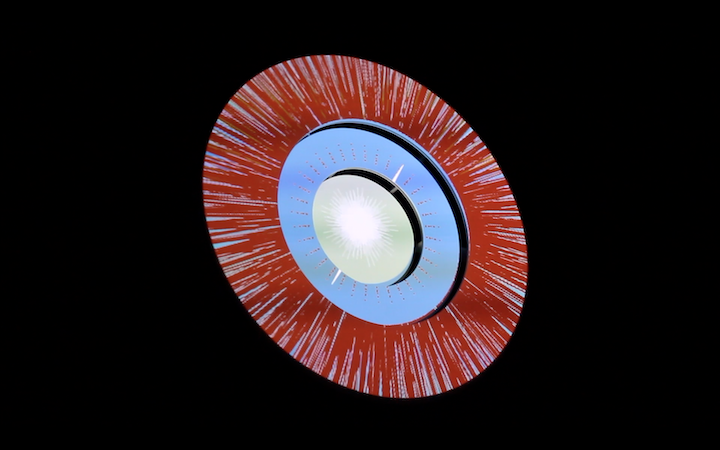

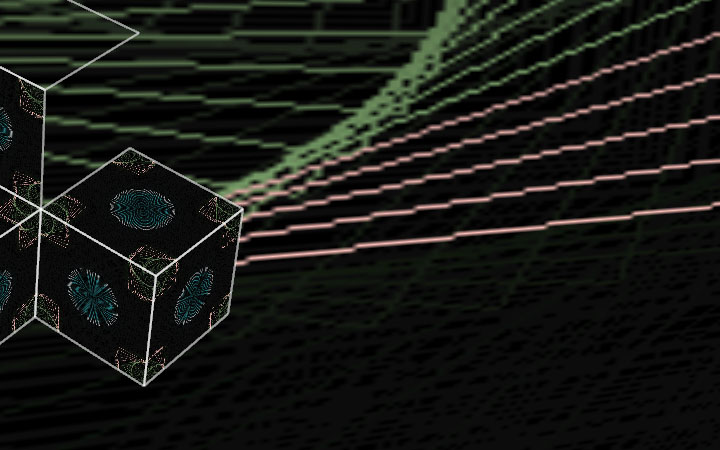

The second is the slit scanner, which loads frames taken from video into a buffer (in this case holding at most 360 frames, in order to draw a full 360-degree circle) and draws a line taken from a subsection of each frame. In this version of the slit scanner, each line is then rotated around a centre point so that together the lines create the effect of a rotating disk. I thought the visuals output by the slit scanner were beautiful. They reminded me of the iris of an eye, so I used this approach with the blue footage to create a calmer, sleepy eye. The slit scanner draws the iris, and the pupil and the whites of the eye are ellipses. The opening and closing eyelid is a PNG with alpha transparency, and its position oscillates using a variable updated by a sine function.
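The buffering and rotation described above can be roughly sketched in plain C++. The types `GreyFrame` and `SlitScanner` are hypothetical and stand in for openFrameworks images. The sketch makes three assumptions. The slit is one pixel column of each frame, laid out as a radius of the disk. Buffered frame *i* is drawn at *i* degrees. The eyelid helper uses a sine-driven offset with made-up amplitude and speed. These choices are for illustration only and are not the project's actual code.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <utility>
#include <vector>

// Hypothetical stand-in for one buffered video frame (8-bit grey for brevity).
struct GreyFrame {
    int width = 0;
    int height = 0;
    std::vector<std::uint8_t> px; // row-major, width * height
};

struct Point { double x, y; };

constexpr std::size_t kMaxFrames = 360; // one buffered frame per degree

class SlitScanner {
public:
    // Append the newest frame; once 360 are held, drop the oldest.
    void push(GreyFrame f) {
        frames_.push_back(std::move(f));
        if (frames_.size() > kMaxFrames) frames_.pop_front();
    }

    std::size_t size() const { return frames_.size(); }

    // The "slit": one pixel column of a buffered frame (an assumed choice).
    std::vector<std::uint8_t> slit(std::size_t frameIndex, int column) const {
        const GreyFrame& f = frames_.at(frameIndex);
        assert(column >= 0 && column < f.width);
        std::vector<std::uint8_t> line;
        line.reserve(static_cast<std::size_t>(f.height));
        for (int y = 0; y < f.height; ++y) {
            line.push_back(f.px[static_cast<std::size_t>(y) * f.width + column]);
        }
        return line;
    }

    // Angle, in degrees, at which the slit of buffered frame i is drawn.
    static double angleFor(std::size_t frameIndex) {
        return 360.0 * static_cast<double>(frameIndex) / kMaxFrames;
    }

private:
    std::deque<GreyFrame> frames_;
};

// Where pixel k of a slit lands when the slit is laid out as a radius
// starting at the disk centre (cx, cy) and rotated by angleDeg.
Point slitPixelPosition(double cx, double cy, double angleDeg, int k) {
    const double kPi = 3.14159265358979323846;
    const double a = angleDeg * kPi / 180.0;
    return {cx + k * std::cos(a), cy + k * std::sin(a)};
}

// Eyelid offset driven by a sine wave; amplitude and speed are hypothetical.
double eyelidOffset(double timeSeconds, double amplitude, double speed) {
    return amplitude * std::sin(timeSeconds * speed);
}
```

The oldest frame is always drawn at 0°, and each new frame enters at the end of the buffer. As a result, every stored slit shifts round by one degree per update, which is one way the disk could appear to rotate.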

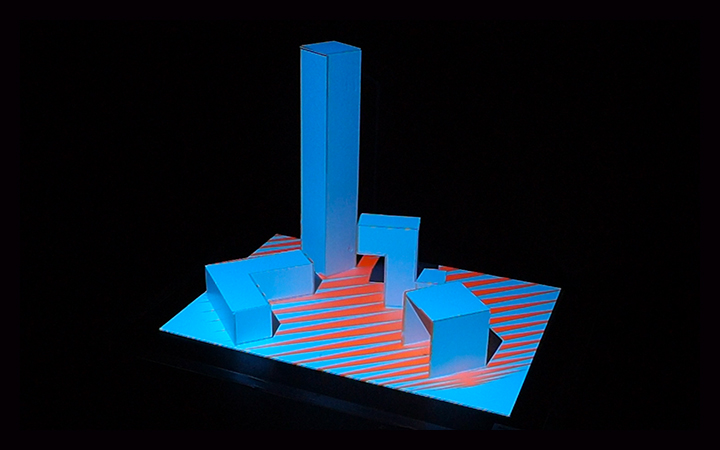

The third scene is based on an exercise by Golan Levin from ofBook. It works by setting a target colour, with an individual variable for its red, green and blue values. We loop through the pixel array of each frame, reading the red, green and blue values of each pixel as we go. We take the difference between each of these values and the matching red, green or blue value of the target colour. Then, using the Pythagorean theorem, we combine the three differences into a single colour-distance value: the square root of the sum of their squares. The pixel with the smallest distance is the one whose colour is closest to the target.
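Written out, the distance described above between a pixel $(r, g, b)$ and the target colour $(r_T, g_T, b_T)$ is the usual Euclidean distance in RGB space:

$$
d = \sqrt{(r - r_T)^2 + (g - g_T)^2 + (b - b_T)^2}
$$

Here is a worked example with illustrative values. A pixel of $(200, 150, 150)$ compared against LightPink $(255, 182, 193)$ gives $d = \sqrt{(-55)^2 + (-32)^2 + (-43)^2} = \sqrt{3025 + 1024 + 1849} = \sqrt{5898} \approx 76.8$. The smaller $d$ is, the closer the pixel's colour is to the target.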

```cpp
// Code fragment for tracking a spot with a certain target color.
// Intended to run once per frame inside an openFrameworks app.
// Our target color is CSS LightPink: #FFB6C1 or (255, 182, 193)
float rTarget = 255;
float gTarget = 182;
float bTarget = 193;

// these are used in the search for the location of the pixel
// whose color is the closest to our target color.
// Note: the largest possible RGB distance is about 441.7, so starting at 255
// means that if no pixel is within 255 of the target, the location stays (0,0).
float leastDistanceSoFar = 255;
int xOfPixelWithClosestColor = 0;
int yOfPixelWithClosestColor = 0;

// Visit every pixel of the current frame, row by row.
int w = myVideoGrabber.getWidth();
int h = myVideoGrabber.getHeight();
for (int y=0; y<h; y++) {
    for (int x=0; x<w; x++) {

        // Extract the color of the pixel at (x,y)
        // from myVideoGrabber (or some other image source)
        ofColor colorAtXY = myVideoGrabber.getPixels().getColor(x, y);
        float rAtXY = colorAtXY.r;
        float gAtXY = colorAtXY.g;
        float bAtXY = colorAtXY.b;

        // Compute the difference between those (r,g,b) values
        // and the (r,g,b) values of our target color
        float rDif = rAtXY - rTarget; // difference in reds
        float gDif = gAtXY - gTarget; // difference in greens
        float bDif = bAtXY - bTarget; // difference in blues

        // The Pythagorean theorem gives us the Euclidean distance.
        float colorDistance =
            sqrt(rDif*rDif + gDif*gDif + bDif*bDif);

        if (colorDistance < leastDistanceSoFar) {
            leastDistanceSoFar = colorDistance;
            xOfPixelWithClosestColor = x;
            yOfPixelWithClosestColor = y;
        }
    }
}

// At this point, we now know the location of the pixel
// whose color is closest to our target color:
// (xOfPixelWithClosestColor, yOfPixelWithClosestColor)
```
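The ofBook fragment depends on openFrameworks (`ofColor` and a video grabber). Below is a self-contained sketch of the same nearest-colour search over a plain RGB buffer, using hypothetical `RGB` and `Match` types. It differs from the fragment in two deliberate ways. It compares squared distances and takes a single square root at the end. It also starts from the largest float rather than 255, so a closest pixel is always found in a non-empty image.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

struct RGB { std::uint8_t r, g, b; };

// Result of the search: pixel position (-1 if the image is empty) and distance.
struct Match {
    int x = -1;
    int y = -1;
    float distance = 0.0f;
};

// Nearest-colour search over a plain row-major RGB buffer
// (a hypothetical stand-in for the grabber's pixels).
Match closestToTarget(const std::vector<RGB>& pixels, int width, int height,
                      RGB target) {
    assert(pixels.size() == static_cast<std::size_t>(width) * height);
    // Start at the largest float so every pixel is a candidate.
    float bestSq = std::numeric_limits<float>::max();
    Match best;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const RGB& p = pixels[static_cast<std::size_t>(y) * width + x];
            float dr = static_cast<float>(p.r) - target.r;
            float dg = static_cast<float>(p.g) - target.g;
            float db = static_cast<float>(p.b) - target.b;
            // Squared distance ranks pixels the same way as the distance
            // itself, so the square root is taken only once, at the end.
            float distSq = dr * dr + dg * dg + db * db;
            if (distSq < bestSq) {
                bestSq = distSq;
                best.x = x;
                best.y = y;
            }
        }
    }
    if (best.x >= 0) best.distance = std::sqrt(bestSq);
    return best;
}
```

As in the fragment, ties go to the first pixel found in row-major order, because the comparison is a strict `<`.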

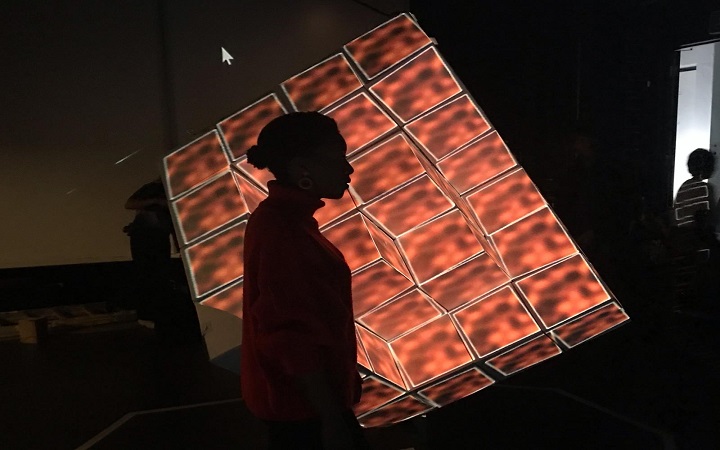

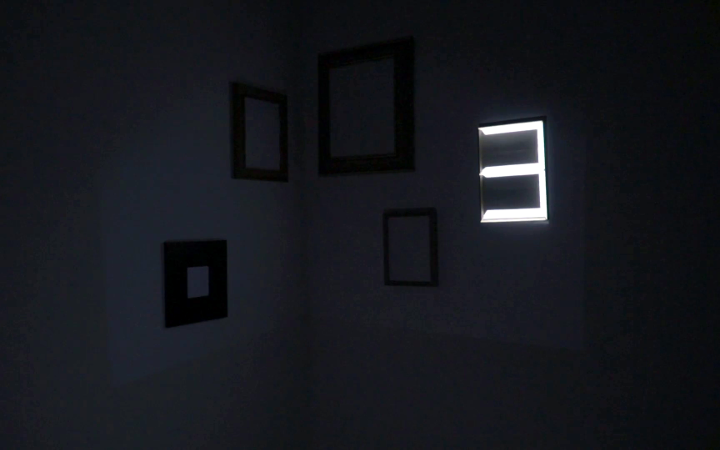

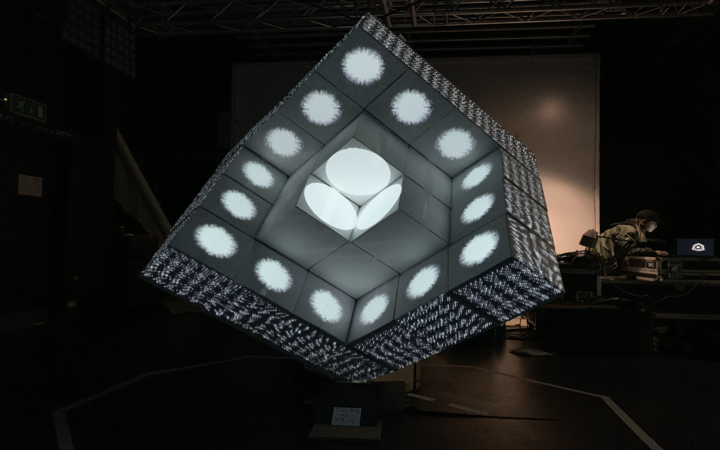

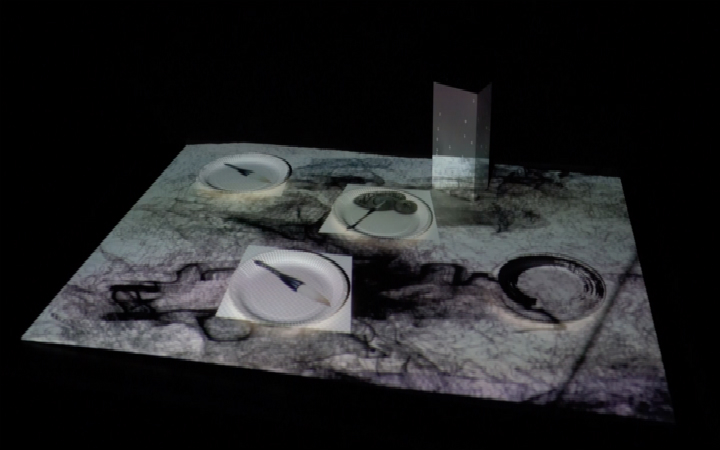

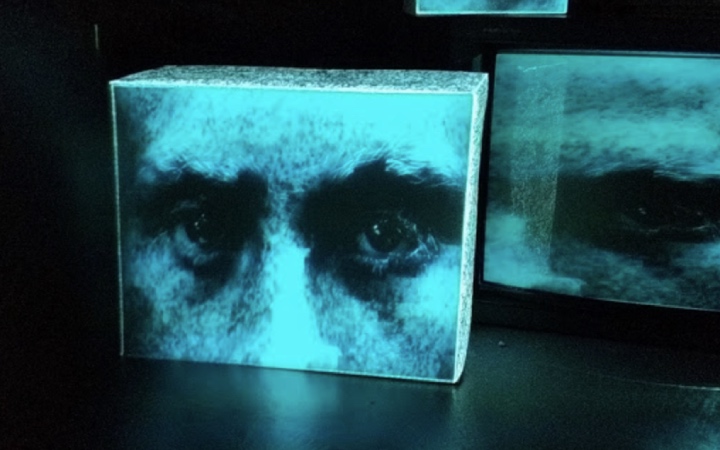

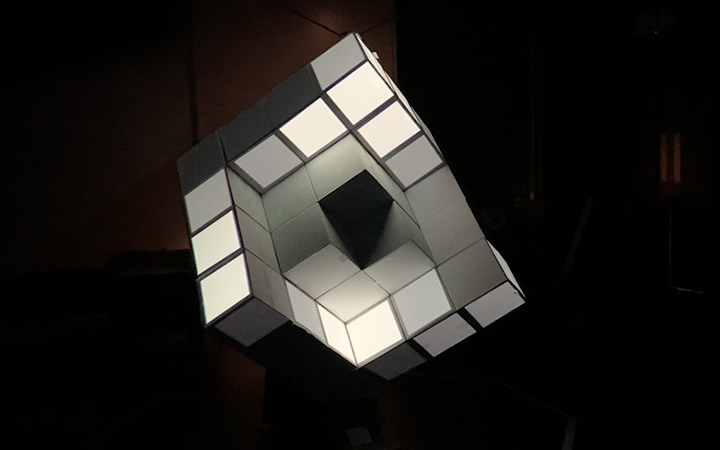

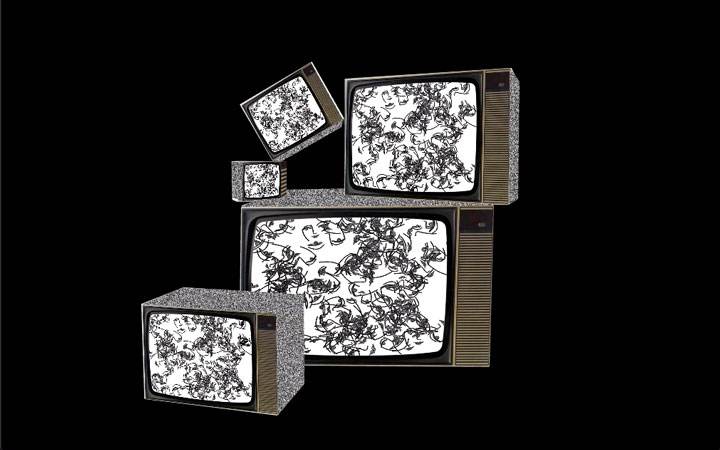

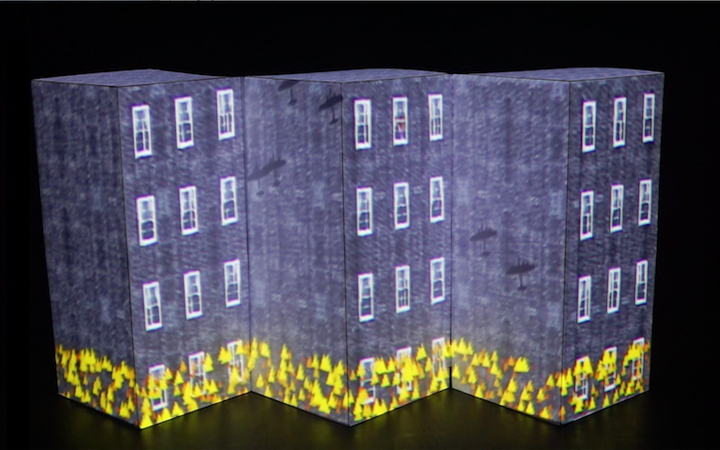

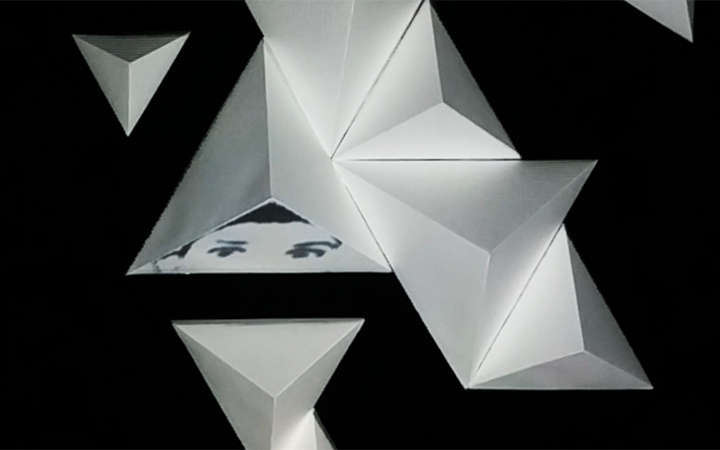

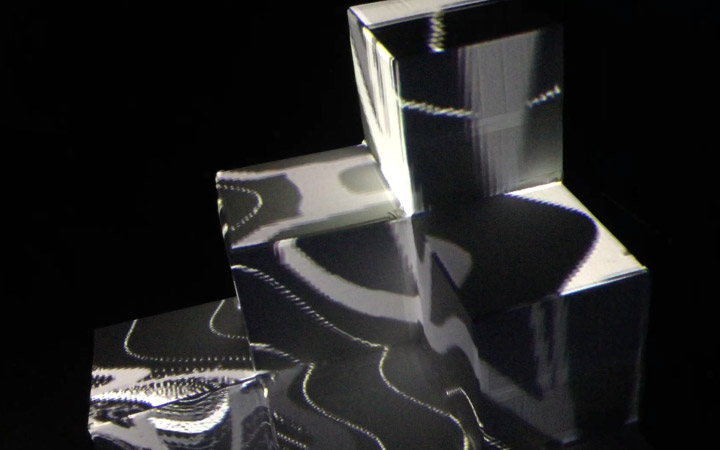

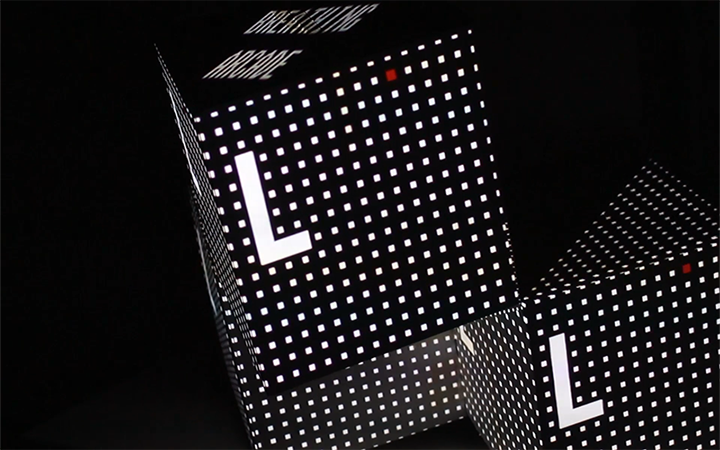

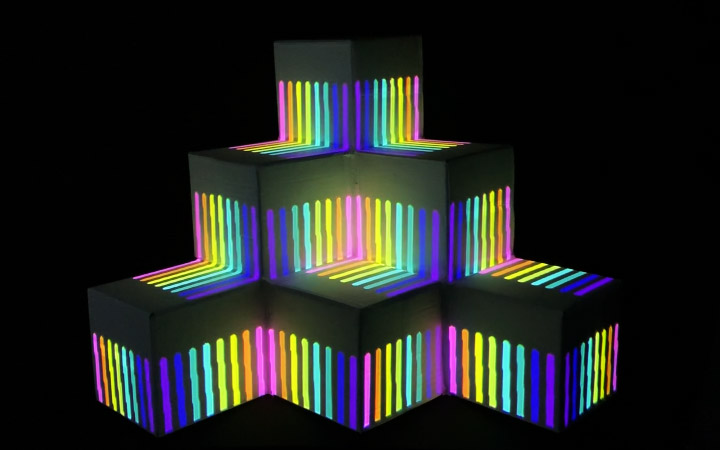

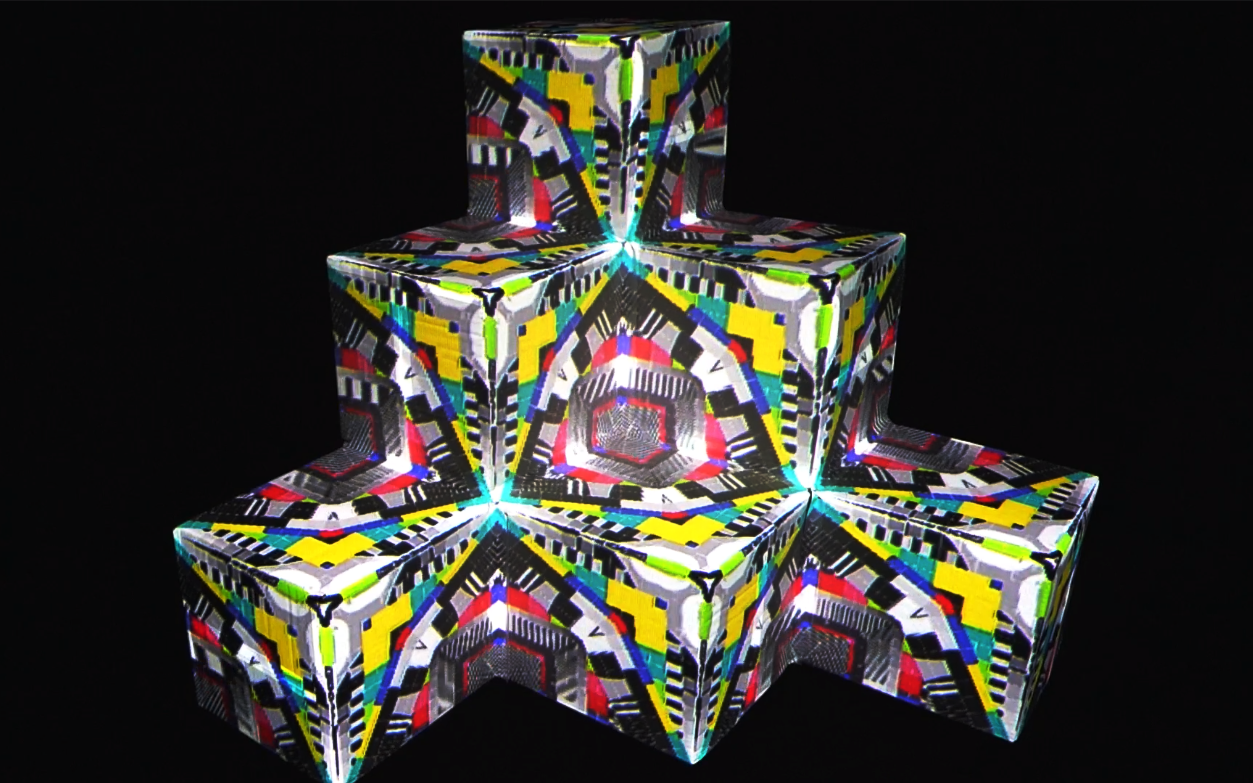

-- Exhibition --

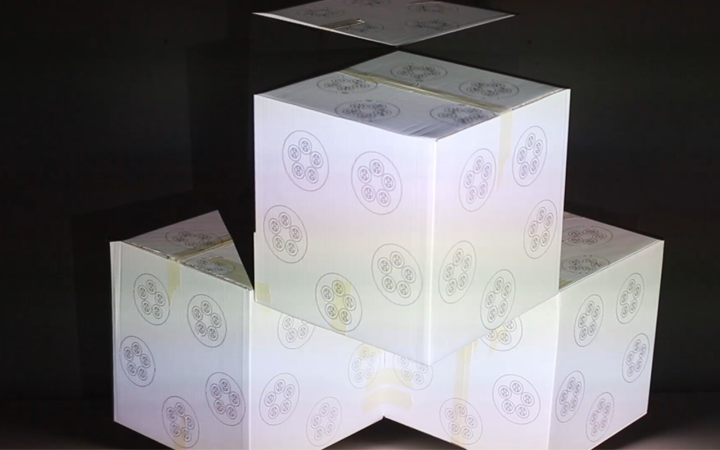

I chose the frames as my projection surface because I thought picture frames might add another level of context, as though the figures were living portraits. There was a slight technical hitch at the last moment: I had to drop the slit scanner from the projection because of interdependencies between the image buffer (the video frames) and the FBO windows. I've included a clip of it in the video below.