iGenerate

An exploration into supervised artistic machine learning practices, deploying sophisticated audio tools developed for live performers and DJs.

produced by: Theo Vlagkas

Introduction

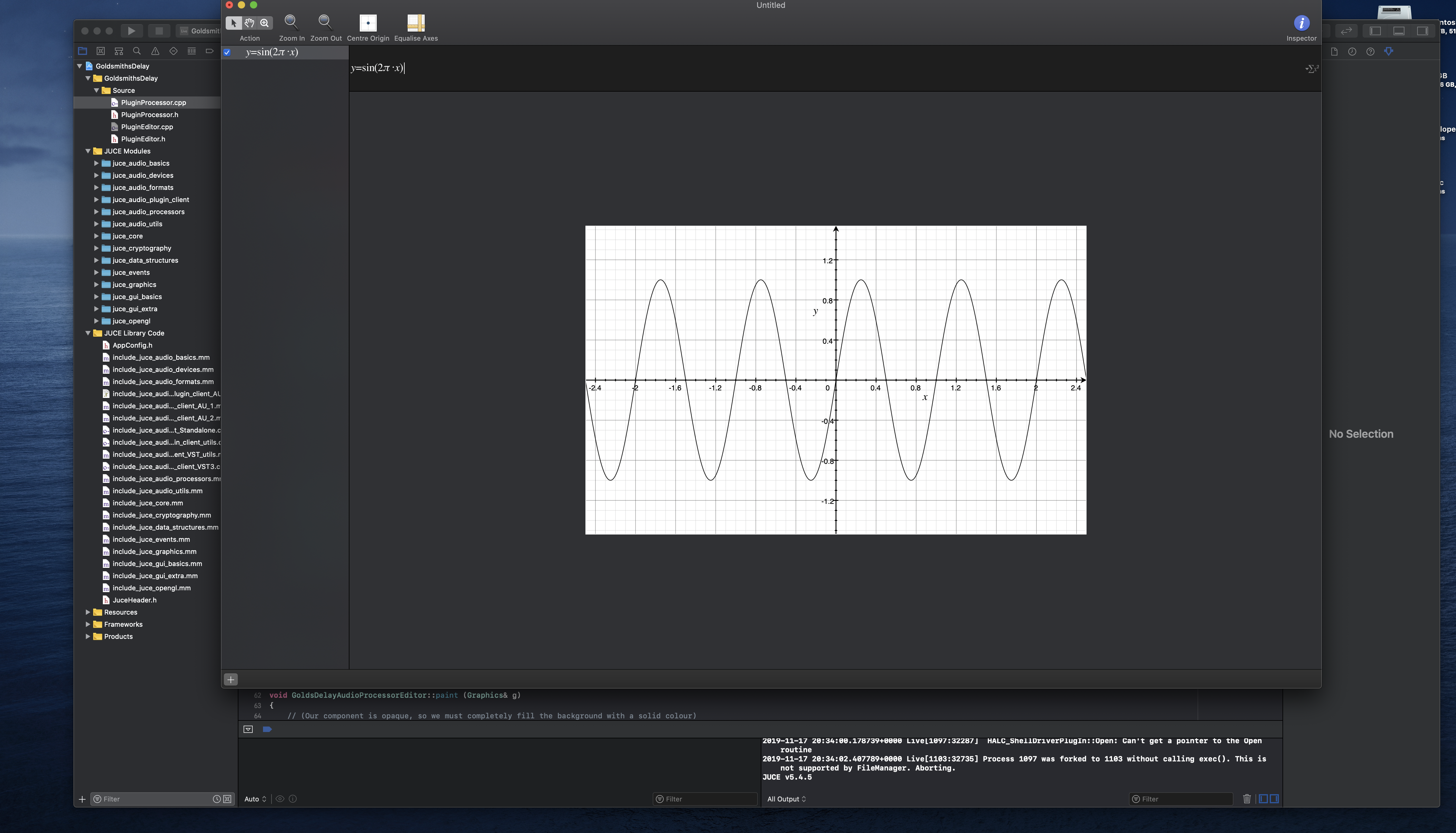

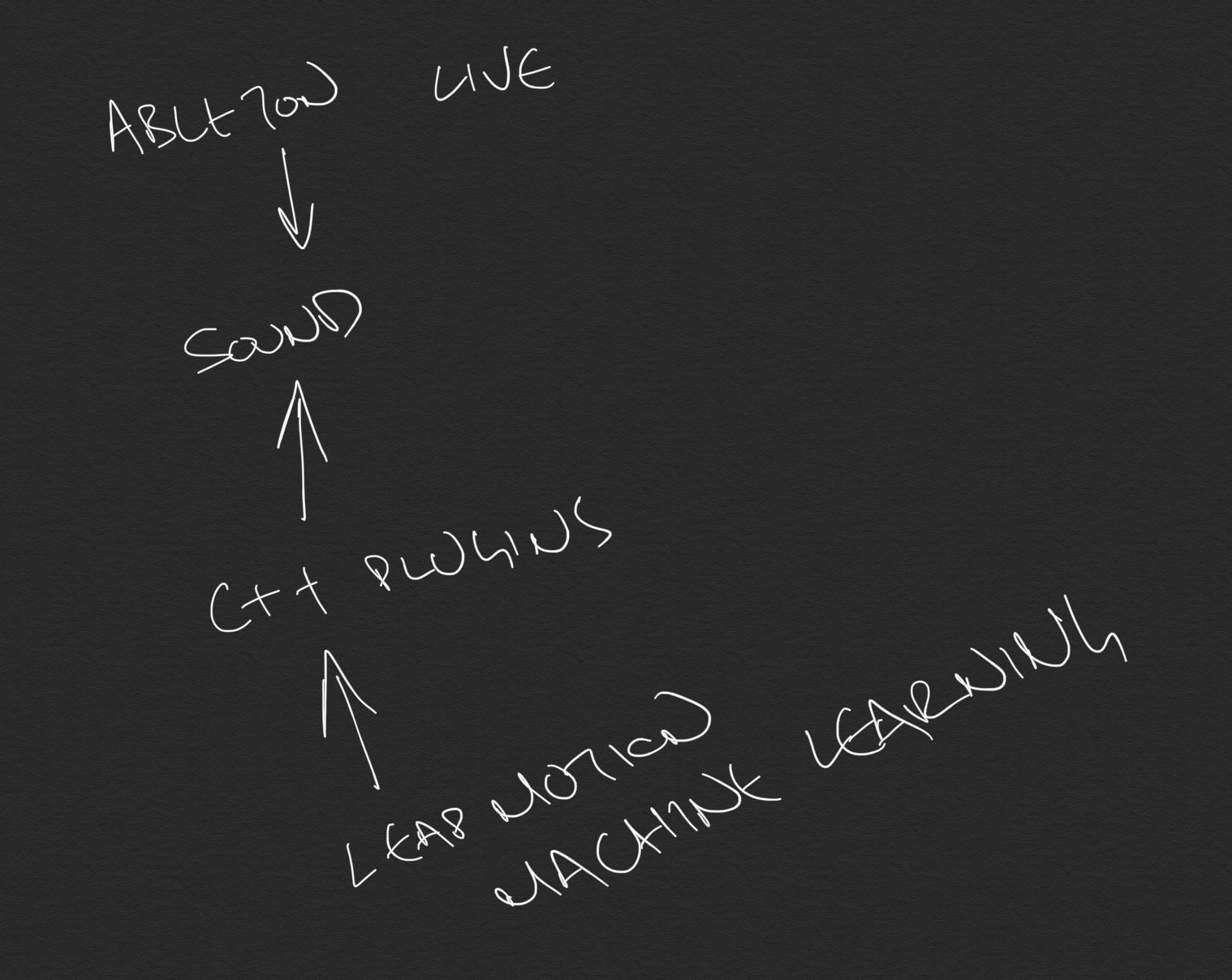

The technical part of my project provides two audio plugins built purely in Juce, a powerful C++ application framework. For the artistic part, I used Rebecca Fiebrink’s free, open-source Wekinator software to apply machine learning practices, and by using a Leap Motion controller the project removes MIDI-mapping limitations for live performers and DJs.

Concept and background research

Coming from a sound design and music technology background, I always find myself exploring and discovering audio technologies, new and old. I find C++ audio programming truly fascinating, and I also find intuitive programming environments like Max/MSP extremely interesting; I often use Max/MSP for internal patching and communication among my audio-visual software, and for artistic creativity.

One of my favourite audio tools in that context is the ability to easily map software buttons, sliders, knobs, etc. to external MIDI controllers. Most DAWs nowadays provide intuitive ways of mapping, and many MIDI controllers adapt easily to any digital audio workstation. While one-to-one or one-to-many mappings are easy to control by moving a physical knob, button or slider, it gets tricky to control many-to-many mappings from the physical world to the digital workstation simultaneously, as humans are naturally limited to two hands.

Hence, my purpose for this project is, on the one hand, to use my C++ plugins creatively and, on the other hand, to deploy machine learning in order to achieve the following:

1. To give a DJ, a live music performer or a producer the ability to map many-to-many effectively and to control those mappings simultaneously

2. For the act of ‘controlling’ to also become a novel and different way of composing music

With these two objectives achieved, machine learning serves both as an expressive tool for creativity and as a means of successfully controlling many-to-many mappings.

Technical

To recreate the installation, you need all of the following installed on your machine:

1. Ableton Live 10

2. Max 8

3. M4L - Max for Live

4. Processing

5. Wekinator-Kadenze

6. Xcode

7. Juce & Projucer

All the material for my project can be found here.

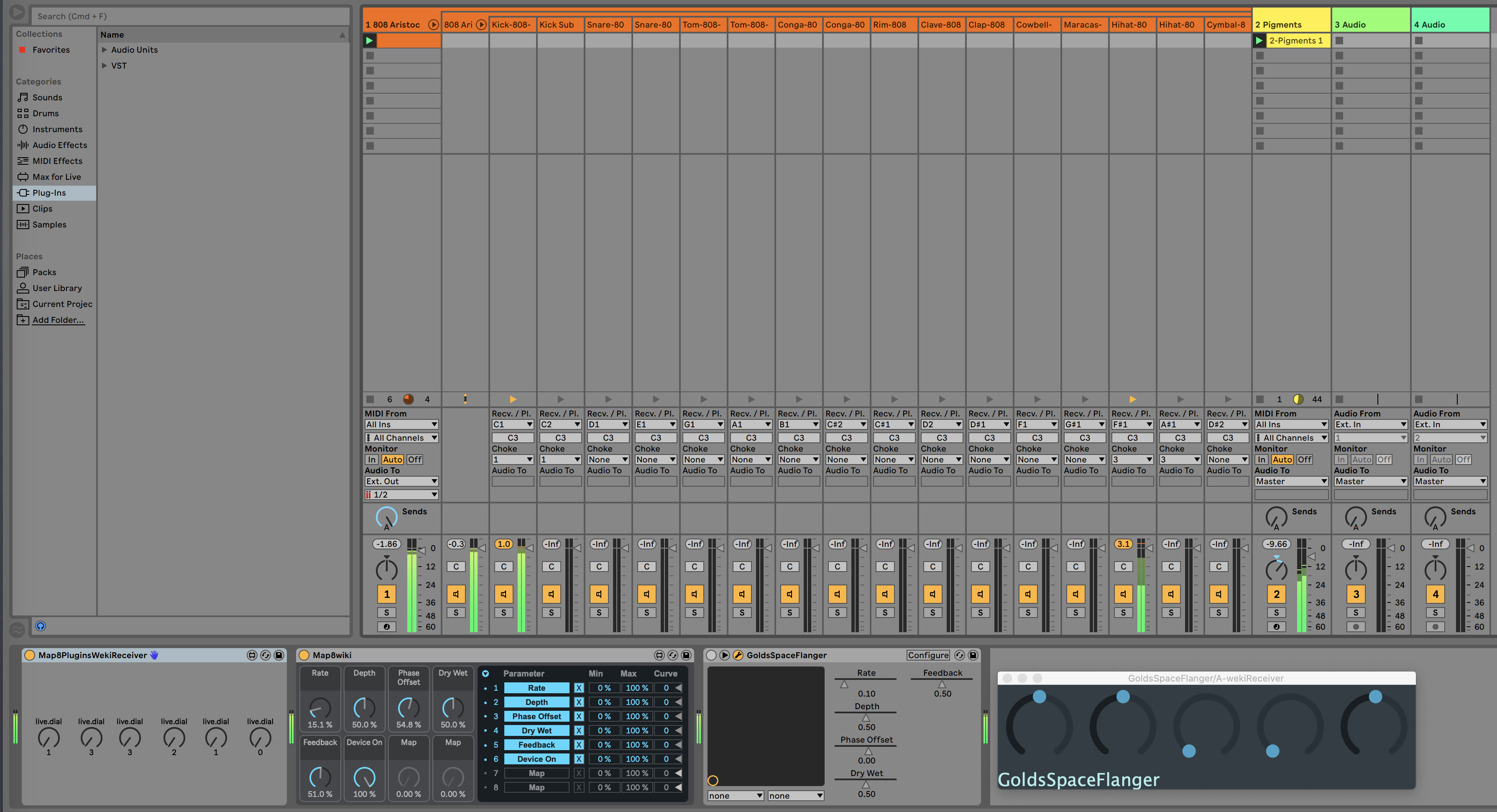

After downloading the material, copy the M4L audio effects into the correct folder on your machine so that they load in Ableton Live's Max devices folder. Then open the C++ plugins with Juce & Projucer, build them locally as AU plugins, and set debugging to launch Ableton so that they show up in the Audio Plugins folder, under the My Company folder.

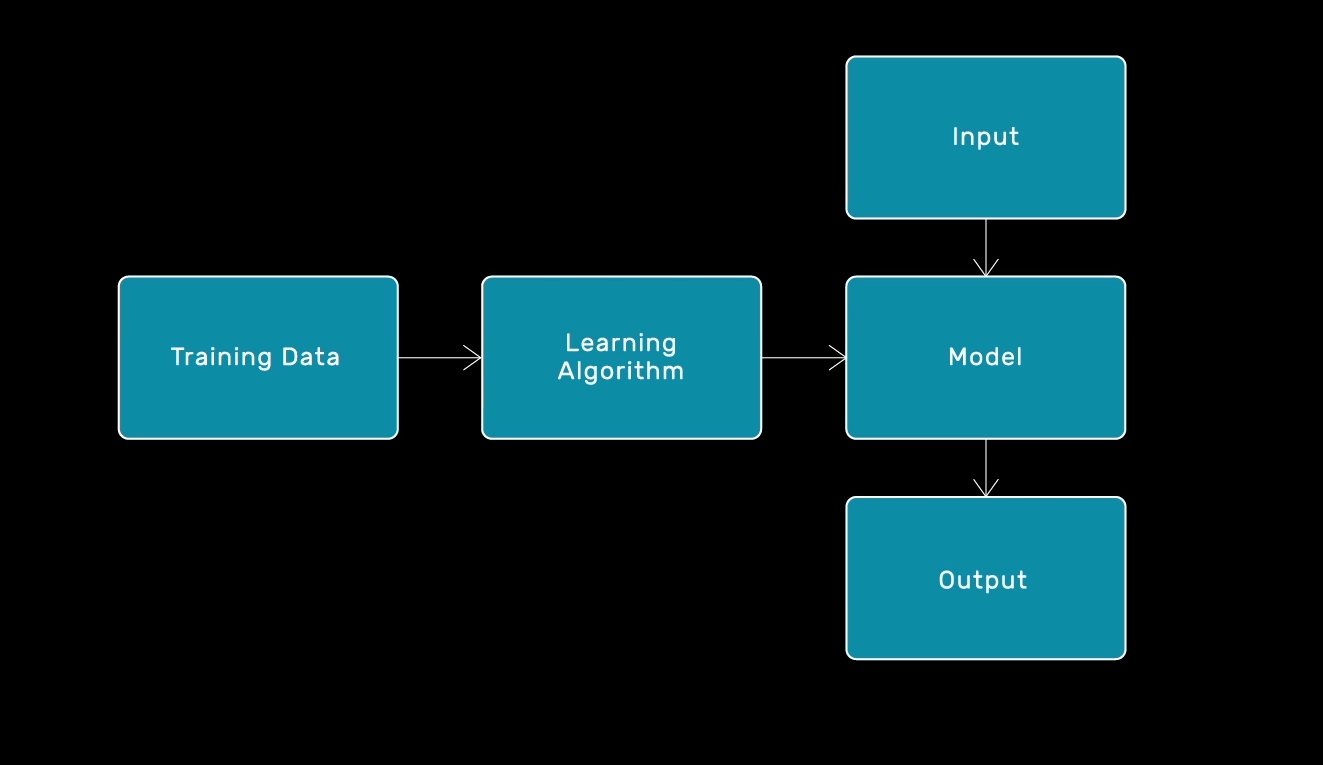

In both of my examples I used regression only, as I found it to be the most appropriate form of supervised machine learning for achieving my artistic and creative goals.
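To make the idea of regression concrete in this context: a regression model turns a few continuous input features (for example hand-position values) into several continuous outputs at once, which is what makes simultaneous many-to-many control possible. The following is only a minimal C++ sketch of that idea, assuming a small network with one hidden layer and sigmoid units. It is not Wekinator's implementation, and the names `RegressionNet`, `affine` and `predict` are hypothetical; in practice the weights come from training on recorded examples.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Vector = std::vector<double>;
using Matrix = std::vector<Vector>; // one row of weights per output unit

// Computes weights * input + bias (hypothetical helper for this sketch).
Vector affine(const Matrix& weights, const Vector& bias, const Vector& input)
{
    assert(weights.size() == bias.size());
    Vector out(bias);
    for (std::size_t row = 0; row < weights.size(); ++row)
    {
        assert(weights[row].size() == input.size());
        for (std::size_t col = 0; col < input.size(); ++col)
            out[row] += weights[row][col] * input[col];
    }
    return out;
}

// Assumed structure: one sigmoid hidden layer followed by a linear output layer.
struct RegressionNet
{
    Matrix hiddenWeights;
    Vector hiddenBias;
    Matrix outputWeights;
    Vector outputBias;

    // Many inputs in, many continuous outputs out.
    Vector predict(const Vector& features) const
    {
        Vector hidden = affine(hiddenWeights, hiddenBias, features);
        for (double& h : hidden)
            h = 1.0 / (1.0 + std::exp(-h)); // sigmoid activation
        return affine(outputWeights, outputBias, hidden);
    }
};
```

Each element of the returned vector could drive a different plugin parameter, so a single gesture moves several controls together.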

I started experimenting with Ableton Live and M4L, as I am familiar with their capabilities and usage. My purpose was both to push their potential by using ML to solve the problem of simultaneous many-to-many control, and to create a cool new way of interacting and composing musically.

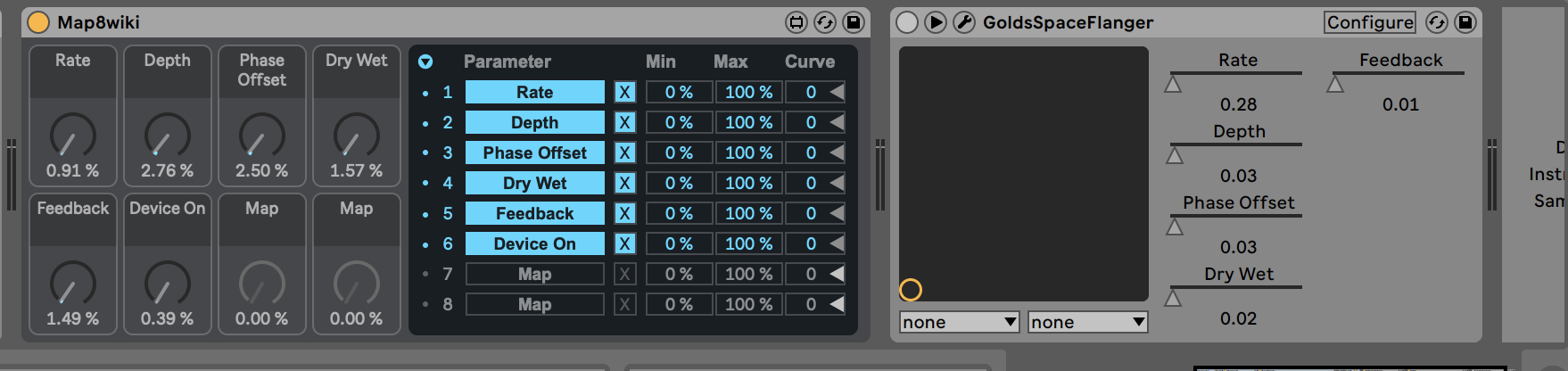

For the data input I used the Leap Motion Processing example provided with the Wekinator examples. I trained my regression models using only one hand with the Leap Motion, as I expected the second hand to be busy with something else in the context I envisioned for my project: a DJ would need the other hand to search for music, a live performer to control other aspects of their performance, and a studio composer/producer possibly to control a DAW or computer. For the Wekinator model I used a one-layer neural network, as anything above that made the training more complicated and, as I found by experimenting, wasn’t needed for this project. I also used hard limits, as the mapping is intended to match MIDI knobs. In Max for Live I created a fairly simple patch that receives the hard-limited numbers and scales them to either 1 to 127 or 0 to 1 (on/off). The patch then sends the numbers to a slightly modified version of Map8 (included in the M4L essentials pack). Map8 allows the user to easily MIDI-map anything in the Live environment, so it seemed a logical solution for my mapping and project.
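The scaling step described above is simple arithmetic, and it can be sketched in C++ as follows. This is only an illustration, not the actual Max for Live patch: it assumes the regression outputs are meant to lie between 0 and 1 before scaling, and the function names and the 0.5 on/off threshold are hypothetical choices made for the example.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hard limit: keep a regression output inside the assumed [0, 1] range.
inline double hardLimit(double value)
{
    return std::max(0.0, std::min(1.0, value));
}

// Continuous control: spread [0, 1] over the 1..127 knob range.
inline int toKnobValue(double modelOutput)
{
    const double limited = hardLimit(modelOutput);
    return 1 + static_cast<int>(std::lround(limited * 126.0));
}

// Switch control: reduce the output to 0 (off) or 1 (on).
// The default threshold of 0.5 is an assumption, not taken from the patch.
inline int toSwitchValue(double modelOutput, double threshold = 0.5)
{
    assert(threshold > 0.0 && threshold < 1.0);
    return hardLimit(modelOutput) >= threshold ? 1 : 0;
}
```

Clamping before scaling means that even if a model overshoots during a fast gesture, the mapped control never receives a value outside the range a physical knob could send.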

As you can see and hear, the models respond very nicely with both my Flanger and my Delay, producing some ‘generative mistakes’, as intended for artistic and creative purposes.

Regarding Juce and Projucer, I have provided the video above, as the process is fairly complicated and it wouldn’t make sense to write a long text without visual material; hence the choice of video documentation. While I used Ableton to load my C++ plugins, any DAW can be used if you only want to load the plugins. I tried them in Logic as well and they worked exactly the same, as seen in the photo material further below. The main reason I used Ableton is that it communicates instantly with Max & M4L, which was essential for the creative purposes of my final project.

Future development

Developing my coding skills and my creative coding practice further will require more integration between programming languages and skills. I want to be able to use APIs effectively and efficiently, and to become more sophisticated in software application design. I also wish to gain more experience with audio frameworks and audio-unit plugins and to start creating software synthesisers. Furthermore, I wish to combine the skills I have acquired, such as solid C++, to develop audio software applications, gain more experience with the Juce framework, and blend audio coding with advanced user interfaces. Lastly, I want to continue developing my computational artistic skills by practising and continually discovering new technologies.

Self evaluation

I feel that my project has successfully met my aims. To begin with, I managed to deliver two powerful C++ plugin applications and improved my coding abilities in the process. From a machine learning point of view, the gestural control and analysis of hand movement worked the way I intended, and it can be more engaging for a performer and an audience (in a future, relevant environment). Also, by using ML I managed to personalise the tool to my needs (a user could easily do the same by providing different training data), and it was fairly straightforward to design a tool that controls many-to-many mappings simultaneously without the user being forced to learn how to code, so my machine learning techniques could also serve non-programmer artists. In retrospect, I am therefore very pleased that my project has fulfilled both my artistic and technical intentions.

References

1. Workshops in Creative Coding video tutorials & lectures material by Dr. Theo Papatheodorou - https://learn.gold.ac.uk/course/view.php?id=6852

2. Advanced Audio-Visual Processing video tutorials & lectures material by Prof Mick Grierson - https://learn.gold.ac.uk/course/view.php?id=11573

3. Data and Machine Learning for Artistic Practice video tutorials & lectures material by Dr. Rebecca Fiebrink - https://www.kadenze.com/courses/machine-learning-for-musicians-and-artists-v

4. Udemy C++ Tutorials by John Purcell - https://www.udemy.com/course/free-learn-c-tutorial-beginners/

5. Juce Framework Tutorials, Audio Programmer YouTube channel - https://www.youtube.com/playlist?list=PLLgJJsrdwhPxa6-02-CeHW8ocwSwl2jnu

6. Juce Learn Page (tutorials and documentation) - https://juce.com/learn