Taureau VR

Taureau VR is a virtual reality mise-en-scène prototype for Yawar Fiesta, an electroacoustic opera composed by Annette Vande Gorne.

Produced by: Raphael Theolade

Introduction

In an age when the value of reality is being questioned and the boundary between the digital and the human is blurring ever faster, the composer Annette Vande Gorne wrote Yawar Fiesta, an electroacoustic opera built on contemporary dualities. The opera takes its name from a traditional Peruvian ceremony that uses two animals, the condor and the bull (taureau in French), to symbolically commemorate Peru's independence from colonial oppression. Yawar Fiesta conceptually opposes tradition and modernity, having and being, interiority and surroundings, oral and visual culture. This prototype is a 5-minute VR extract of Taureau, the second of the five acts of Yawar Fiesta. The project was born from a dialogue between a composer and visual artists, and it brings together computational art, musique concrète, and contemporary painting.

Concept and background research

The Yawar Fiesta takes place every year in some villages of the Peruvian Andes. The festival pits a condor, captured in the mountains by Indigenous villagers, against a bull raised by the villagers in the European tradition. It commemorates Peru's independence by appropriating a rite imported by the Spanish settlers. Werner Lambersy, a French-speaking Flemish poet, chose this traditional ceremony to symbolise humanity's existential, spiritual, social and political struggles.

The Yawar Fiesta in Peru

I am passionate about the notion of illusory space (audio and visual), both for its essential place in the human imagination and for its attempt to represent a fictional place. These questions made me want to combine computational techniques with contemporary painting and musique concrète, conceiving VR as a medium that allows an immersive journey beyond the academic framework of painting, through animated pictorial layers and musical composition.

The challenge of exploring the potential of VR as an expressive medium has been the driving force behind my commitment to this project. I met Annette Vande Gorne in 2016 during a musique concrète workshop at her Musiques & Recherches centre in Belgium, and we soon started a discussion about her opera Yawar Fiesta. Like musique concrète or architecture, VR is an artistic practice whose expressivity rests on the manipulation of space. It is also an up-and-coming, affordable technique, which I have been eager to explore theoretically since 2017 and practically during this MA.

This project is in fact what drove me to apply to this programme at Goldsmiths. When I began my research, I was planning to work with traditional 3D techniques. I quickly understood that taking advantage of interactivity and real-time rendering would make the project more interesting and better suited to the intended artistic direction, since the search for a spatial transcription does not require highly detailed graphics so much as immersive qualities: parallax and interactivity. Moreover, a real-time Yawar Fiesta makes me independent of production companies: I can now work without heavy rendering machines and with mostly open-source tools.

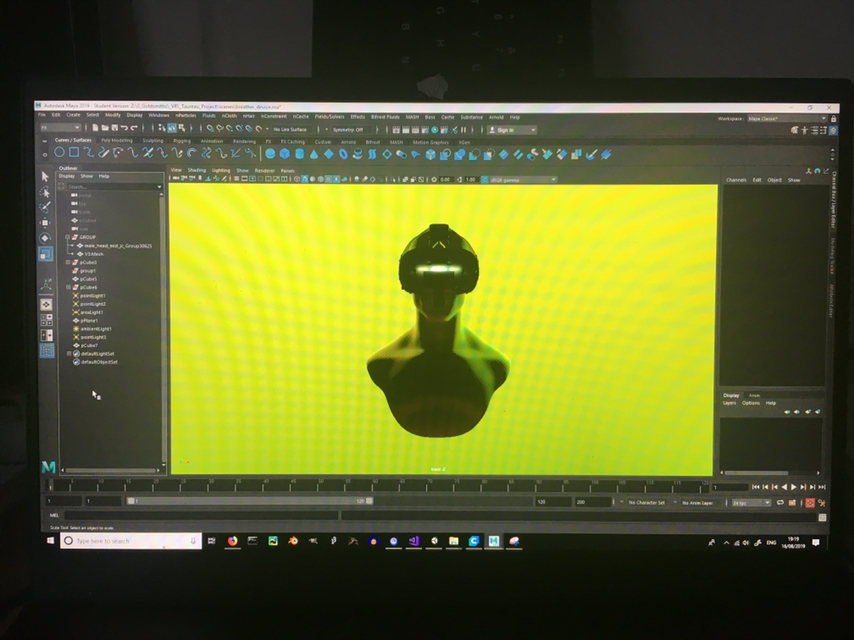

My installation for the MA final show creates a welcoming environment that acts as a liminal and mystical place, where the spectator is invited to sit before entering the digital world, which is invisible at first sight when you walk into the space. Inspired by James Turrell's work, I used two LED wall-washer bars to reproduce the warm green-yellow colour palette of the digital scene and to transform the spatial impression of the physical room. The backlighting silhouettes the person sitting on the transparent chair and emphasises the bright green LEDs on the black HMD.

For the opera seat, I bought a reproduction of the well-known Louis Ghost armchair designed by Philippe Starck for Kartell. This sci-fi-looking object is representative of the Baroque aesthetic but also of contemporary consumerism. Its transparency gives a sense of immateriality that resonates with the 3D surfaces of the VR world, and it fits neatly into the booth next to the column in G08. I was specifically looking for a balance between a dystopian vision and academic Western features.

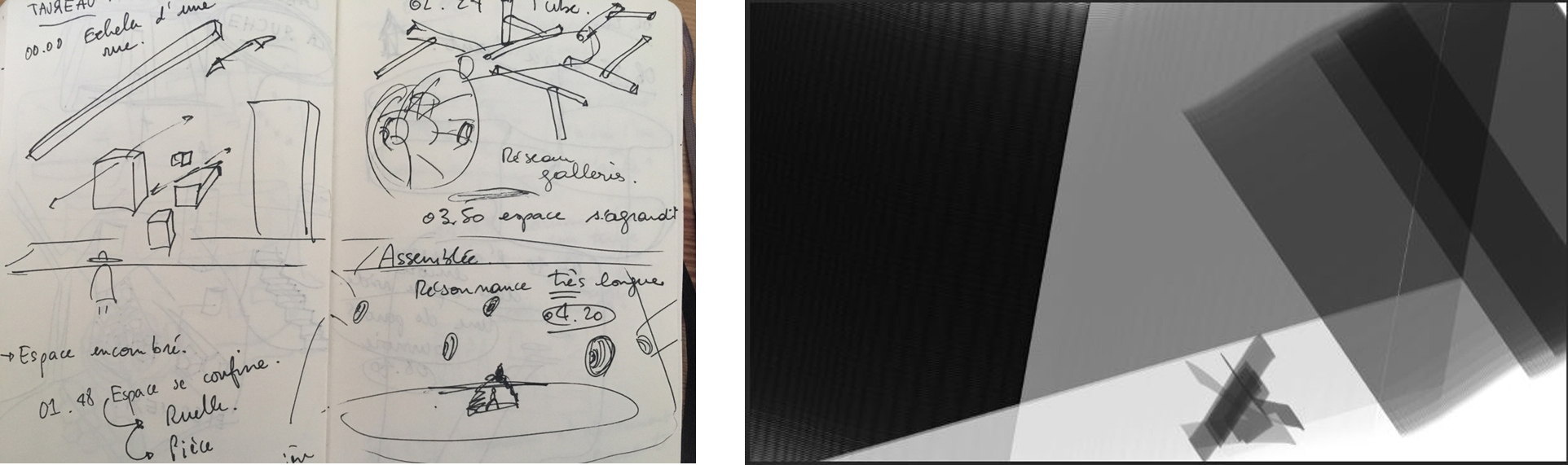

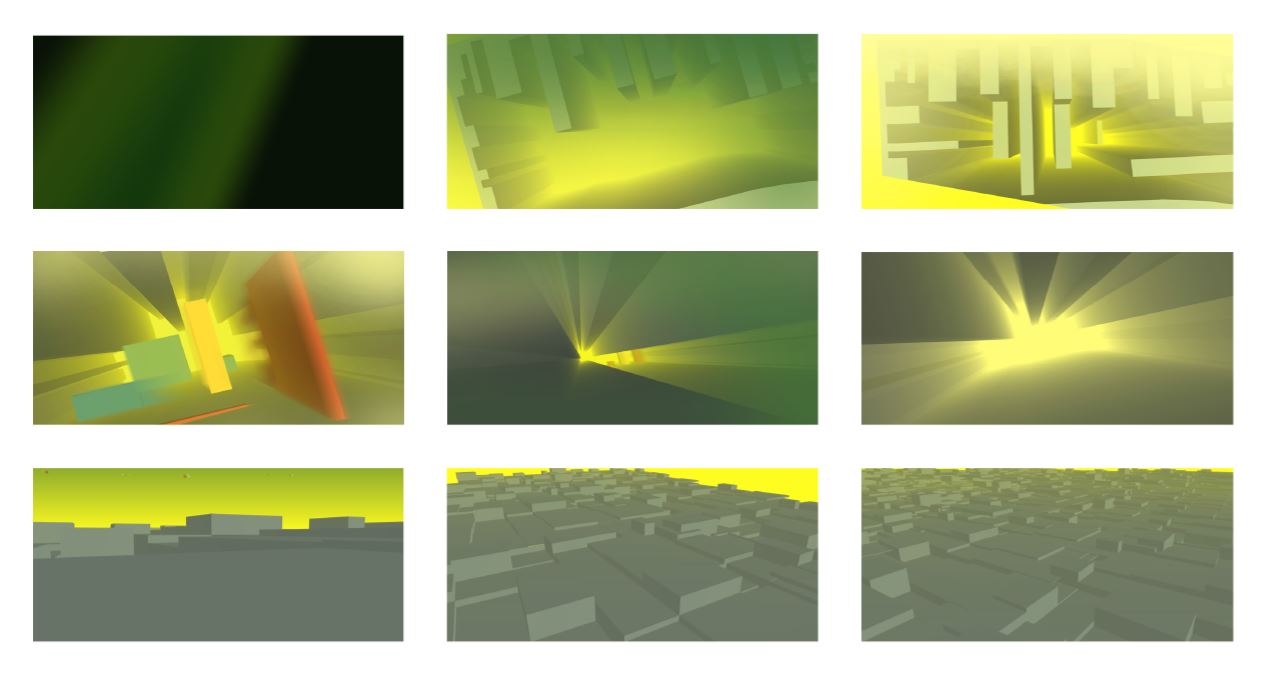

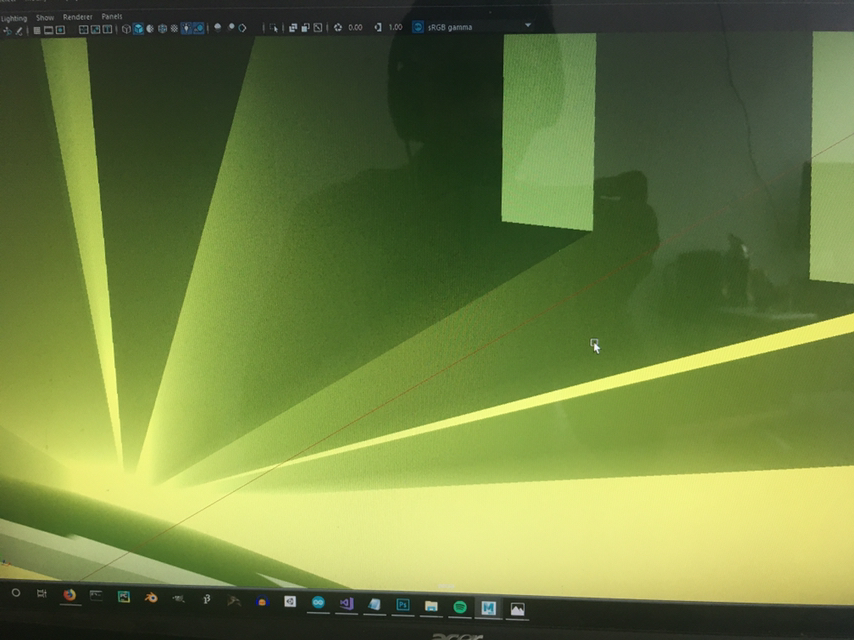

Scenography, 3D design research, and final setup

The digital graphic design is a collaboration with the painter Charlotte Develter, with whom I shared the look development. I was in charge of sketching the successive spaces of the music piece, while she painted reinterpreted, coloured versions of these sketches. I would then translate these 2D Photoshop images into 3D environments ready for VR. We designed 20 different key pictures in total. I used two of them for this project and imagined two transitional scenes in between, adding movement and variation over the 5 minutes. The colour palette, the cubes, and the perspectives of Taureau were chosen to symbolise contemporary society (density, pollution, speed, gigantism, colonialism).

Below: a selection of design research steps, from early sketches and ideas to 3D look development.

Technical

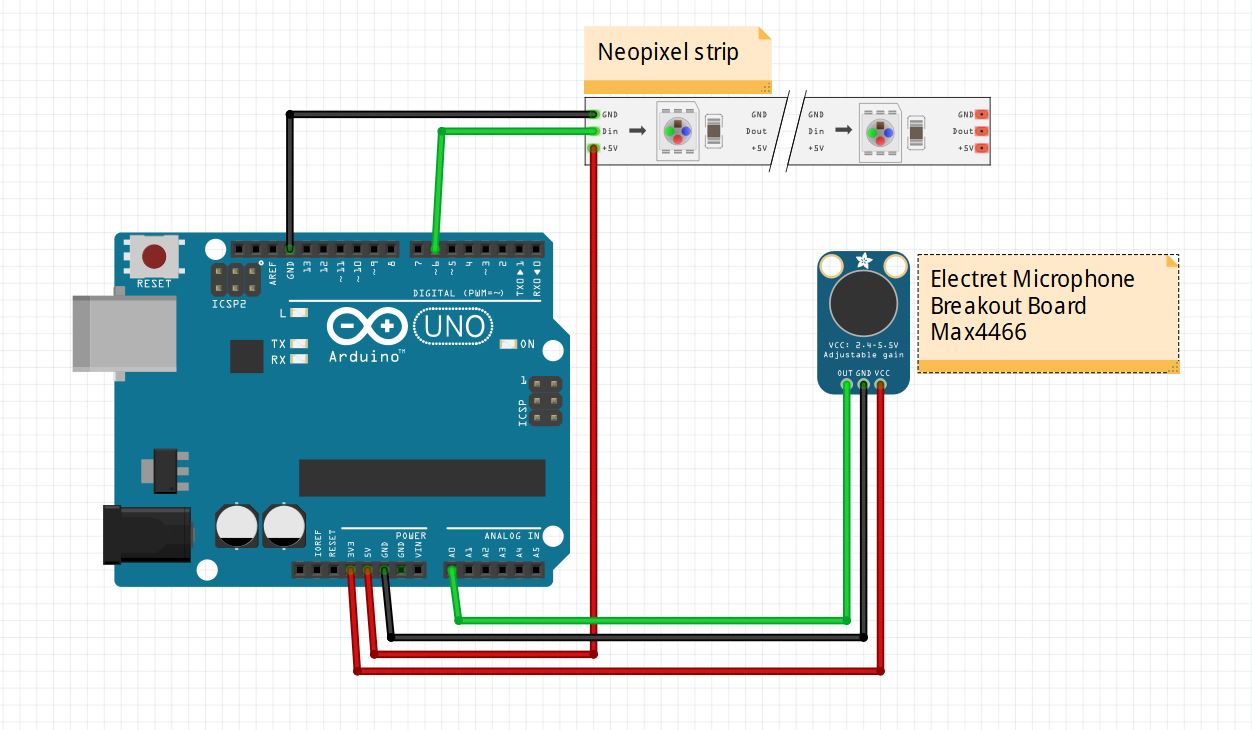

Interaction in this VR experience is deliberately subtle and organic. As the full opera lasts 90 minutes, it made more sense to establish a sensory connection with the environment through which the spectator travels than to offer a playful experience. A breath sensor drives the light and fog intensity in the CG scene and interacts with simple background animations: cubes rotate or pulse as the user breathes while immersed in the digital landscape. Interacting through breath gives a feeling of embodiment close to a meditation session. I also chose to make the breathing visible on an LED strip attached to the HMD, connecting the virtual world and the real environment, and to some extent the human and the digital. The sun and lens flare in the scene are audio-reactive: a script links them to the amplitude of the music, which creates a sensitive lighting effect.
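The audio-reactive sun can be sketched as an amplitude follower. This is a minimal Python sketch, not the project's Unity script: it assumes the music arrives in blocks of samples in [-1, 1] (in Unity such a block could come from `AudioSource.GetOutputData`), computes their RMS amplitude, smooths it with separate attack and release rates, and maps the result onto an intensity range. The class name, coefficients, and intensity range are hypothetical.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of one block of samples in [-1, 1]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


class AmplitudeFollower:
    """Hypothetical helper: smoothed music amplitude -> light/flare intensity."""

    def __init__(self, attack=0.5, release=0.05, min_intensity=0.2, max_intensity=1.5):
        self.attack = attack            # share of the gap closed per block when getting louder
        self.release = release          # share of the gap closed per block when getting quieter
        self.min_intensity = min_intensity
        self.max_intensity = max_intensity
        self.level = 0.0

    def update(self, samples):
        target = rms(samples)
        coeff = self.attack if target > self.level else self.release
        self.level += coeff * (target - self.level)
        # Clamp the smoothed level to [0, 1], then map it onto the intensity range.
        t = min(max(self.level, 0.0), 1.0)
        return self.min_intensity + t * (self.max_intensity - self.min_intensity)
```

Called once per frame, `update` returns a value that could be assigned to the sun light's intensity and the flare brightness. A fast attack with a slow release is a common choice: the light follows musical onsets without flickering when the sound decays.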

LED tube and breath sensor schematic

I also added virtual hands tracked with a Leap Motion sensor. I realised that having a bodily presence in the environment helps viewers grasp the scale of the surrounding elements. This embodiment also makes the digital décor feel tangible: the spectator is immersed in the CG world rather than travelling through a mental dream vision.

Finally, the motion parallax afforded by a 6DoF HMD (a head-mounted display with six degrees of freedom) enhances the sensation of space, unlike a stereoscopic 360° video, in which the head can only rotate around a fixed point.
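The difference between 6DoF and a 360° video can be made concrete with a little geometry. In this Python sketch (an illustration, not code from the project), a sideways head movement of `head_offset_m` metres shifts a point at distance `distance_m` by an angle of atan(offset / distance), so near cubes slide across the view much more than distant ones; with rotation-only tracking, head translation is ignored and the shift is always zero. The 10 cm head movement in the usage note is an arbitrary assumption.

```python
import math


def parallax_shift_deg(head_offset_m, distance_m, tracks_position=True):
    """Angular shift (degrees) of a point straight ahead after a sideways head move.

    With rotation-only tracking (3DoF, as in a stereoscopic 360° video), head
    translation is ignored, so the rendered view does not shift at all.
    """
    if not tracks_position:
        return 0.0
    return math.degrees(math.atan2(head_offset_m, distance_m))
```

For a 10 cm head movement, `parallax_shift_deg(0.1, 1.0)` is about 5.7°, while `parallax_shift_deg(0.1, 20.0)` is only about 0.29°. This difference in shift between near and far elements is what the visual system reads as depth.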

In addition, I had to design and 3D-print various small pieces to hold the breath sensor on the HMD and to close the silicone tube that contains the LED strip.

Custom devices for VIVE HMD

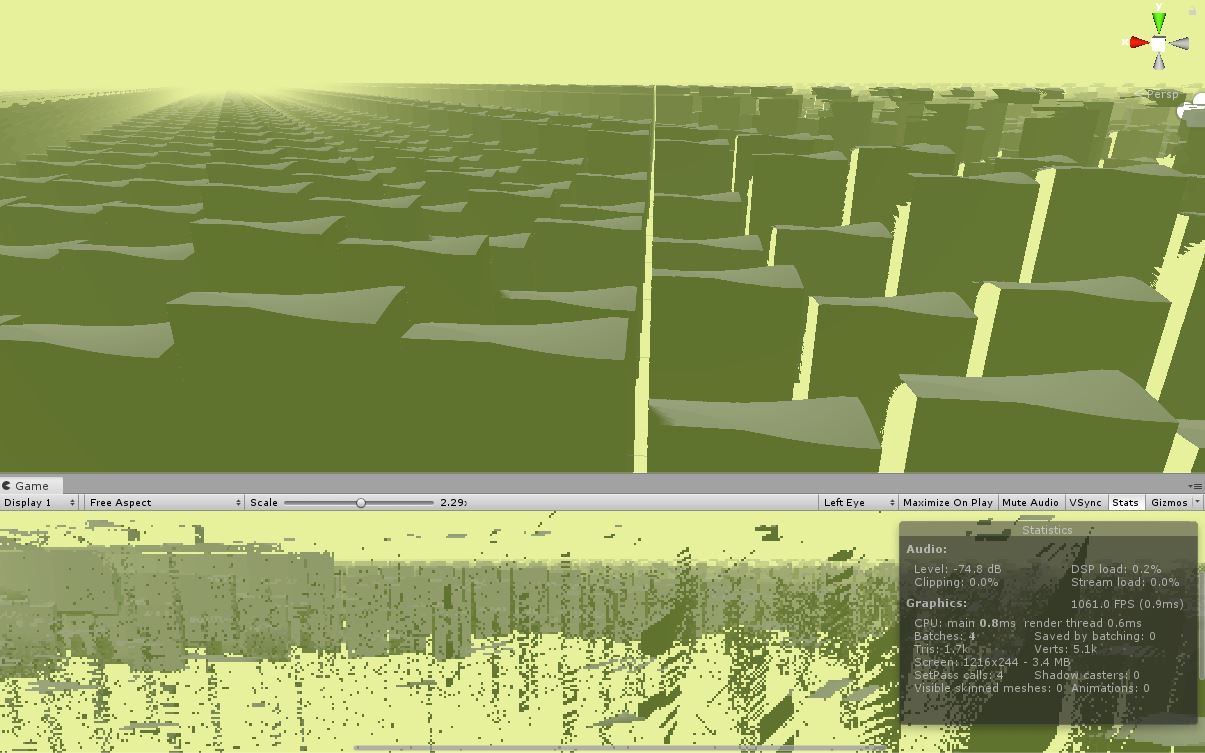

On the coding side, the core of the project is built with the Unity API in C#. I mostly wrote custom scripts, tools, and shaders to generate and animate graphics made of primitive cubes and planes within Unity. Building the CG environment this way made it ready for interaction and flexible for further development and modification.
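Building the scenery from primitives in code is what makes it easy to animate and connect to interaction later. The Python sketch below is only a language-neutral illustration of that idea, not the project's C# code: it lays out a centred grid of cubes with seeded random heights and a random per-cube phase, then computes a breath-driven pulse scale for each cube. In Unity, each entry would typically become a `GameObject.CreatePrimitive(PrimitiveType.Cube)` instance whose transform is updated every frame; the function names, grid sizes, and pulse parameters here are hypothetical.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Cube:
    x: float       # position on the ground plane, in metres
    z: float
    height: float  # vertical scale of the cube
    phase: float   # per-cube offset so the cubes do not pulse in unison


def build_cube_grid(cols, rows, spacing=2.0, max_height=10.0, seed=0):
    """Lay out a grid of cubes centred on the origin, with seeded random heights."""
    rng = random.Random(seed)
    cubes = []
    for i in range(cols):
        for j in range(rows):
            cubes.append(Cube(
                x=(i - (cols - 1) / 2) * spacing,
                z=(j - (rows - 1) / 2) * spacing,
                height=rng.uniform(1.0, max_height),
                phase=rng.uniform(0.0, 2.0 * math.pi),
            ))
    return cubes


def pulse_scale(cube, time_s, breath, amount=0.3, speed=1.5):
    """Uniform scale factor: 1.0 at rest, up to 1 + amount when breath (0..1) is strong."""
    wave = 0.5 + 0.5 * math.sin(speed * time_s + cube.phase)  # oscillates in [0, 1]
    return 1.0 + amount * breath * wave
```

Seeding the random generator keeps the layout identical between runs, which matters when the scene must match the painted key pictures.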

I originally considered generating the graphics entirely with shaders, and spent a few weeks experimenting with GLSL and HLSL. I also tried raymarching, but unfortunately I struggled too much with these advanced techniques to achieve a satisfying result: raymarching produced many artifacts, which made it too hard to combine with the classic rasterization techniques I needed for the Leap Motion hands.
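For readers unfamiliar with raymarching, its core loop (sphere tracing) can be sketched in a few lines of Python; this is a generic illustration, not the shader that was tested. A signed distance function (SDF) returns how far a point is from the nearest surface, so the ray can safely advance by that amount until it lands on a surface. The box SDF and the step, distance, and epsilon limits are illustrative assumptions.

```python
import math


def sdf_box(p, half_size):
    """Signed distance from point p to an axis-aligned box centred at the origin."""
    q = [abs(c) - h for c, h in zip(p, half_size)]
    outside = math.sqrt(sum(max(c, 0.0) ** 2 for c in q))
    inside = min(max(q), 0.0)
    return outside + inside


def sphere_trace(origin, direction, sdf, max_steps=128, max_dist=100.0, eps=1e-4):
    """March along a normalised ray; return the hit distance t, or None on a miss."""
    t = 0.0
    for _ in range(max_steps):
        p = [o + t * d for o, d in zip(origin, direction)]
        dist = sdf(p)
        if dist < eps:
            return t          # close enough to the surface: count it as a hit
        t += dist             # safe step: no surface is nearer than dist
        if t > max_dist:
            break
    return None
```

The loop only yields a distance along the ray for each pixel. To mix such a shader with rasterised geometry like the Leap Motion hands, that distance has to be converted into a depth-buffer value so both can occlude each other correctly, which is one common source of compositing difficulties.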

Raymarching test in Unity

I also spent a lot of time finding a way to implement fog in the scenes while keeping real-time lighting, without compromising the frame rate needed for a smooth VR experience. This led me to use the Aura 2 plugin and to dig into real-time rendering optimisation, a completely different paradigm from traditional ray-traced 3D animation rendering.
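The frame-rate constraint is easy to quantify. The HTC VIVE display refreshes at 90 Hz, so everything, including the volumetric fog and the real-time lights, has to be rendered in roughly 11 ms per frame; a frame that misses this deadline causes visible judder, which is particularly uncomfortable in VR. The small Python helper below, an illustration rather than part of the project, computes that budget and flags frames that exceed it; the sample frame times in the usage note are made up.

```python
def frame_budget_ms(refresh_hz):
    """Time available to render one frame at a given display refresh rate."""
    return 1000.0 / refresh_hz


def frames_over_budget(frame_times_ms, refresh_hz=90.0):
    """Return the measured frame times (ms) that miss the display deadline."""
    budget = frame_budget_ms(refresh_hz)
    return [t for t in frame_times_ms if t > budget]
```

For example, `frame_budget_ms(90)` is about 11.1 ms, compared with about 16.7 ms on a 60 Hz monitor, and `frames_over_budget([10.0, 12.5, 11.0])` returns `[12.5]`.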

Besides this CG exploration, I built the breath sensor and the LEDs with an Arduino, a MAX4466 electret microphone amplifier, and NeoPixels. Although the circuit is technically very simple, fine-tuning the setup so that it works well and fits neatly on the HMD is rather complex. I briefly experimented with Wekinator and FFT to recognise breathing patterns and filter the signal, but I eventually kept the simplest and most efficient solution: averaging and filtering the analog input.
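The averaging-and-filtering step can be sketched as follows. This Python version mirrors the kind of logic that would run in the Arduino loop, but it is an illustrative reconstruction, not the project's code. It assumes 10-bit `analogRead` values (0 to 1023) and that the MAX4466 output sits around half the supply voltage, i.e. about 512; it removes that offset, rectifies, takes a moving average, smooths the result exponentially, and maps it to an 8-bit NeoPixel brightness. The window length, smoothing factor, and `full_scale` value are hypothetical and would need tuning against the room's noise.

```python
from collections import deque


class BreathEnvelope:
    """Turns raw 10-bit ADC readings from an electret mic into a smooth 0..1 breath level."""

    def __init__(self, window=32, smoothing=0.1, mid=512, full_scale=200):
        self.samples = deque(maxlen=window)  # moving-average window of rectified values
        self.smoothing = smoothing           # exponential smoothing factor (0..1)
        self.mid = mid                       # assumed DC bias: half of the 0..1023 range
        self.full_scale = full_scale         # hypothetical deviation treated as "full breath"
        self.level = 0.0

    def update(self, adc_value):
        # 1. Remove the DC bias and rectify, so both halves of the waveform contribute.
        self.samples.append(abs(adc_value - self.mid))
        # 2. Moving average over the window gives a rough amplitude envelope.
        avg = sum(self.samples) / len(self.samples)
        # 3. Normalise and clamp to 0..1.
        target = min(avg / self.full_scale, 1.0)
        # 4. Exponential smoothing removes the remaining jitter.
        self.level += self.smoothing * (target - self.level)
        return self.level


def led_brightness(level):
    """Map a 0..1 breath level to an 8-bit NeoPixel brightness value."""
    return int(round(max(0.0, min(level, 1.0)) * 255))
```

The moving average and the exponential smoothing play different roles: the first turns an oscillating audio signal into an envelope, the second trades a little latency for a calmer light and animation response.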

Once filtered, the breathing signal is sent to Processing over serial. In Processing, when the signal crosses a defined threshold, two simple behaviours (rotation and expansion) are triggered and sent to Unity over OSC to animate the graphics. I also wrote a small application to tweak parameters live and launch the Unity build: from it, the threshold can be adjusted to the noise level of the exhibition room and the speed of the animations can be changed. Thanks to this app, the VR installation could be started in two clicks during the show.
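The threshold-and-OSC stage could look like the Python sketch below; the project itself did this in Processing, so this illustrates the idea rather than reproducing that code. The trigger adds hysteresis (separate on and off thresholds), an assumption not stated in the text, so that a noisy signal hovering around the threshold does not fire repeatedly. The messages follow the OSC 1.0 binary format: a null-terminated address string padded to a multiple of four bytes, a type-tag string such as `,f`, then big-endian 32-bit floats. The addresses `/taureau/rotate` and `/taureau/expand` and the threshold values are hypothetical.

```python
import struct


def osc_string(text):
    """OSC-string: ASCII bytes, at least one null terminator, padded to a multiple of 4."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, *floats):
    """Encode an OSC 1.0 message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(floats)
    args = b"".join(struct.pack(">f", value) for value in floats)
    return osc_string(address) + osc_string(type_tags) + args


class ThresholdTrigger:
    """Fires once when the level rises past `on`; re-arms only after it drops below `off`."""

    def __init__(self, on=0.4, off=0.3):
        self.on = on
        self.off = off
        self.armed = True

    def update(self, level):
        if self.armed and level >= self.on:
            self.armed = False
            return True
        if not self.armed and level < self.off:
            self.armed = True
        return False


def messages_for(level, trigger):
    """Hypothetical mapping: one breath onset triggers both rotation and expansion."""
    if trigger.update(level):
        return [osc_message("/taureau/rotate", level), osc_message("/taureau/expand", level)]
    return []
```

Each packet could then be sent to the Unity build over UDP with Python's `socket` module (`sock.sendto(packet, (host, port))`), where an OSC receiver script dispatches on the address; the host and port would be whatever that receiver listens on. Exposing `on` and `off` in a control app is one way to adapt the trigger to the noise level of the room.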

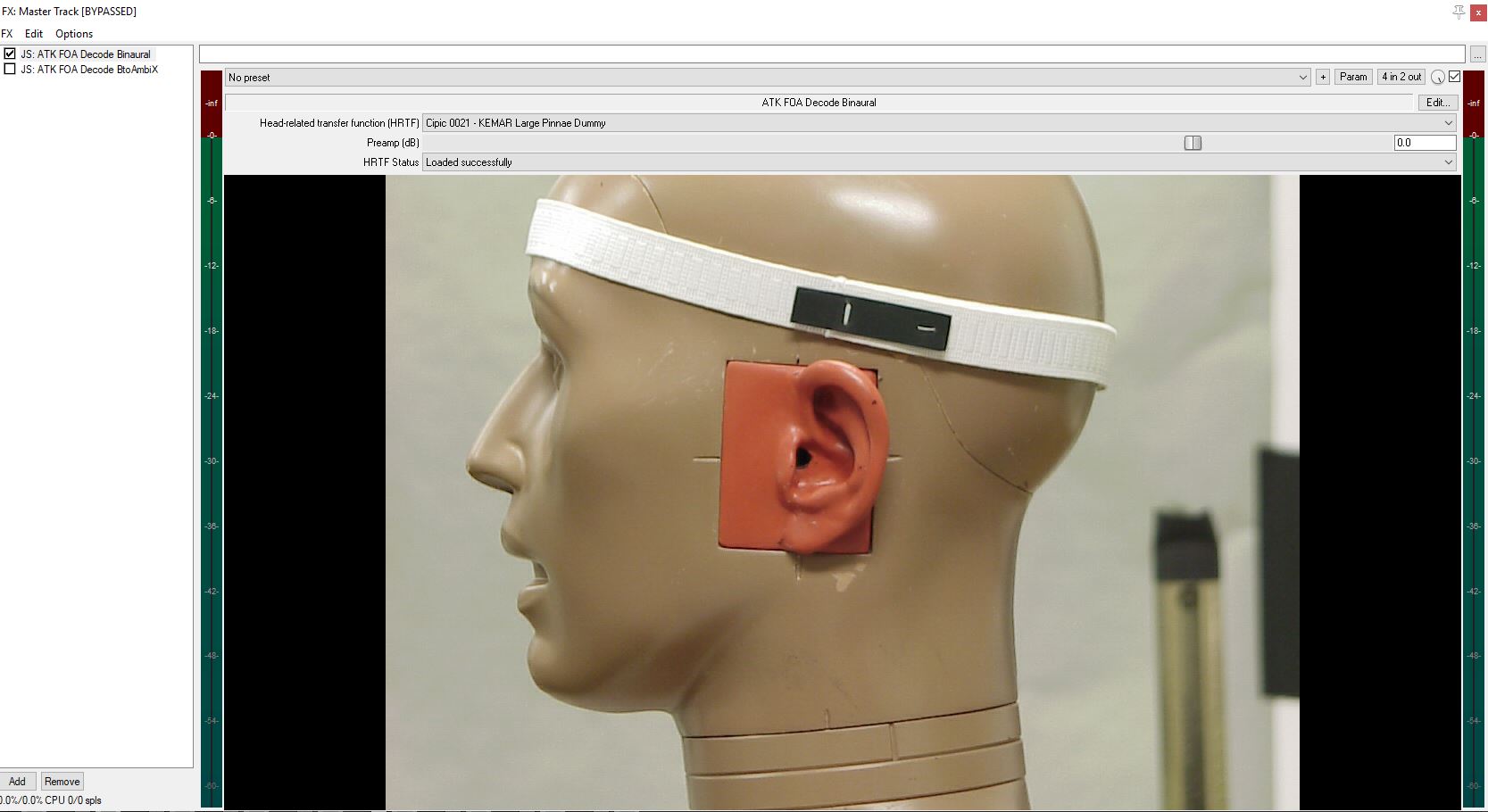

Finally, I converted the 7.1 music mix into an Ambisonic file for binaural playback (using the Ambisonic Toolkit), a format suited to VR applications because it can render a spatial experience. As the spectator moves their head, the sound appears anchored to the virtual space instead of being attached to their head. This simulates a 7.1 speaker system inside the virtual world and gives a stronger sense of presence in the space.
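Conceptually, the conversion places each 7.1 channel as a virtual source around the listener and encodes it into a first-order Ambisonic sound field; head tracking then counter-rotates that field before binaural decoding, which is why sounds stay anchored to the virtual space. The Python sketch below illustrates those two steps in simplified form; it is not the Ambisonic Toolkit's own implementation. It assumes traditional B-format (W scaled by 1/√2, X front, Y left, Z up, azimuth positive to the left) and illustrative speaker angles, ignores the LFE channel, and leaves the final HRTF-based binaural decode to the playback engine.

```python
import math

# Illustrative 7.1 layout: azimuth in degrees, positive = to the listener's left.
# These angles are assumptions; the LFE channel is not spatialised.
SPEAKER_AZIMUTHS_DEG = {
    "L": 30.0, "R": -30.0, "C": 0.0,
    "Lss": 90.0, "Rss": -90.0,
    "Lrs": 150.0, "Rrs": -150.0,
}


def encode_foa(sample, azimuth_deg, elevation_deg=0.0):
    """Encode a mono sample as a plane wave in first-order B-format (W, X, Y, Z)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (
        sample / math.sqrt(2.0),               # W: omnidirectional, -3 dB
        sample * math.cos(az) * math.cos(el),  # X: front/back
        sample * math.sin(az) * math.cos(el),  # Y: left/right
        sample * math.sin(el),                 # Z: up/down
    )


def encode_71_frame(frame):
    """Sum the B-format contributions of one frame of speaker feeds (name -> sample)."""
    total = [0.0, 0.0, 0.0, 0.0]
    for name, azimuth in SPEAKER_AZIMUTHS_DEG.items():
        for k, value in enumerate(encode_foa(frame.get(name, 0.0), azimuth)):
            total[k] += value
    return tuple(total)


def rotate_yaw(bformat, head_yaw_deg):
    """Counter-rotate the field so sources stay fixed in the world when the head turns."""
    w, x, y, z = bformat
    theta = math.radians(-head_yaw_deg)
    return (
        w,
        x * math.cos(theta) - y * math.sin(theta),
        x * math.sin(theta) + y * math.cos(theta),
        z,
    )
```

With this convention, turning the head 90° to the left moves the centre channel to the listener's right (Y close to -1 after `rotate_yaw(encode_71_frame({"C": 1.0}), 90.0)`), which is what a world-anchored scene requires.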

Binaural encoding in Reaper

Future development

I consider this 5-minute prototype to mark the completion of the development stage of the opera project (supported by CNC-Dicream in France). The next step will be to find funding and, hopefully, hire a few people to produce the remaining 85 minutes. I plan to develop three versions of the piece: one for gallery spaces, very similar to the prototype presented for this final project; a lightweight WebVR version; and, hopefully, one for larger audiences, with live music, dancers, and projections. The project was also submitted to the Rencontres Internationales Paris-Berlin a few days ago and will hopefully gain some exposure at the event.

Self-evaluation

Learning Unity, C#, and the real-time rendering paradigm was difficult. I struggled to reconcile the artistic constraints of this particular project and the technical complexity of VR with the requirements of a final school project. Although tempted by more complex graphics generation, I was constantly limited by what VR and 3D allow, and by my limited skills in a field closer to 3D game development than to 3D animation. I spent a lot of time solving basic issues specific to this medium, such as driver and plugin compatibility, shader and rendering problems, and optimisation. VR is a new tool and its workflows are not yet well documented, so I often felt caught in bleeding-edge technical problems. Because of my lack of experience, I also did not find the Unity C# API straightforward. I wish I could have gone further with the creative coding, but I think that would have been very hard without a deeper understanding of HLSL and advanced rendering techniques.

On the physical computing side, the breath sensor needs refinement: the microphone-based approach requires better filtering and more elegant animation algorithms.

Even though I wanted the interactions to be organic and subtle, I think they would benefit from being slightly more obvious to the viewer, and richer. Finally, I needed a neater wiring system for the VIVE and a better audio strap with integrated headphones: visitors were sometimes confused about how to put on the HMD and the headphones.

Nevertheless, I am happy with the overall result: I received positive feedback from visitors and from experienced professionals in the game and animation industries. I am finishing this MA year with a decent prototype for this project and enough knowledge and confidence to pursue this exciting ongoing research.

References

- ‘Adafruit NeoPixel Überguide’. Adafruit Learning System, https://learn.adafruit.com/adafruit-neopixel-uberguide/arduino-library-use. Accessed 16 Sept. 2019.

- Anderson, Elizabeth. ‘An Interview with Annette Vande Gorne, Part One’. Computer Music Journal, vol. 36, no. 1, Mar. 2012, pp. 10–22, doi:10.1162/COMJ_a_00102.

- Arduino - Processing: Serial Data. YouTube, https://www.youtube.com/watch?v=NhyB00J6PiM. Accessed 16 Sept. 2019.

- Audio Visualization - Unity/C# Tutorial [Part 2 - GetSpectrumData in Unity]. YouTube, https://www.youtube.com/watch?v=0KqwmcQqg0s. Accessed 16 Sept. 2019.

- Aura 2 - Volumetric Lighting & Fog - Asset Store. https://assetstore.unity.com/packages/tools/particles-effects/aura-2-volumetric-lighting-fog-137148. Accessed 16 Sept. 2019.

- Charlotte Develter, http://charlottedevelter.com. Accessed 16 Sept. 2019.

- CNC | Dicream. https://www.cnc.fr/web/en. Accessed 16 Sept. 2019.

- C# SDK Documentation — Leap Motion C# SDK v3.2 Beta Documentation. https://developer-archive.leapmotion.com/documentation/csharp/index.html. Accessed 16 Sept. 2019.

- David Lively’s Blog | Coding, Graphics, Math and Other Misconceptions. http://davidlively.com/. Accessed 16 Sept. 2019.

- Kevin Watters. https://kev.town/. Accessed 16 Sept. 2019.

- Musiques & Recherches. http://www.musiques-recherches.be/. Accessed 16 Sept. 2019.

- Simple Minecraft Voxel Terrain in Unity. YouTube, https://www.youtube.com/watch?v=r77vL_bmg-o. Accessed 16 Sept. 2019.

- ‘Simplex Noise Shader for Unity’. Gist, https://gist.github.com/nukeop/9877f5dc39f6ef57716a75e0aa0ffd27. Accessed 16 Sept. 2019.

- Unity Technologies. Unity - Manual: Unity User Manual (2019.2). https://docs.unity3d.com/Manual/index.html. Accessed 16 Sept. 2019.

- The Ambisonic Toolkit - Tools for Soundfield-Kernel Composition. http://www.ambisonictoolkit.net/. Accessed 16 Sept. 2019.

- Werner Lambersy. http://evazine.com/wlam/wlam.htm. Accessed 16 Sept. 2019.