The Picture and the Portrait

This work is a self-portrait created using machine learning trained on my own photographs, writing, and drawings.

produced by: James Lawton

Introduction

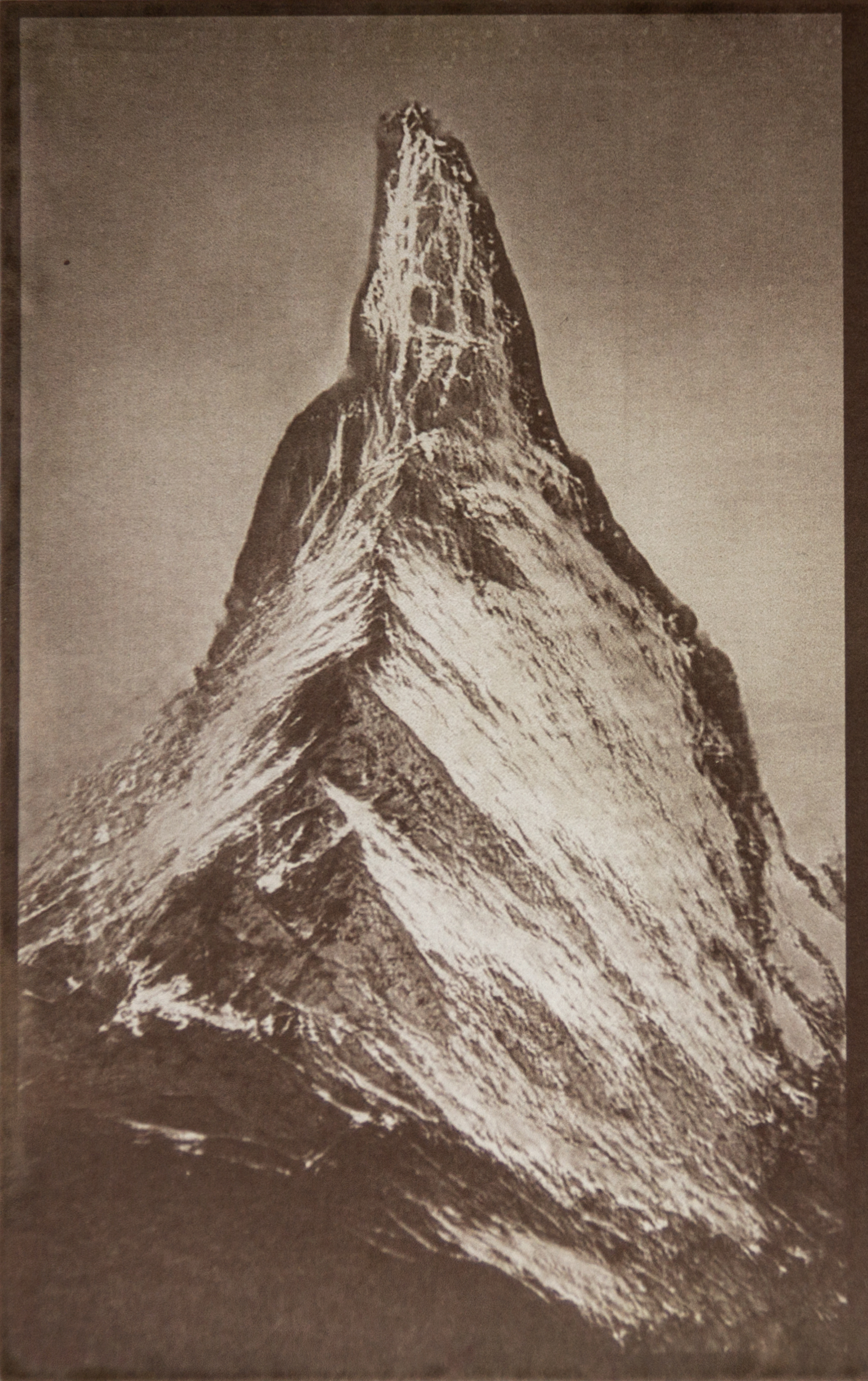

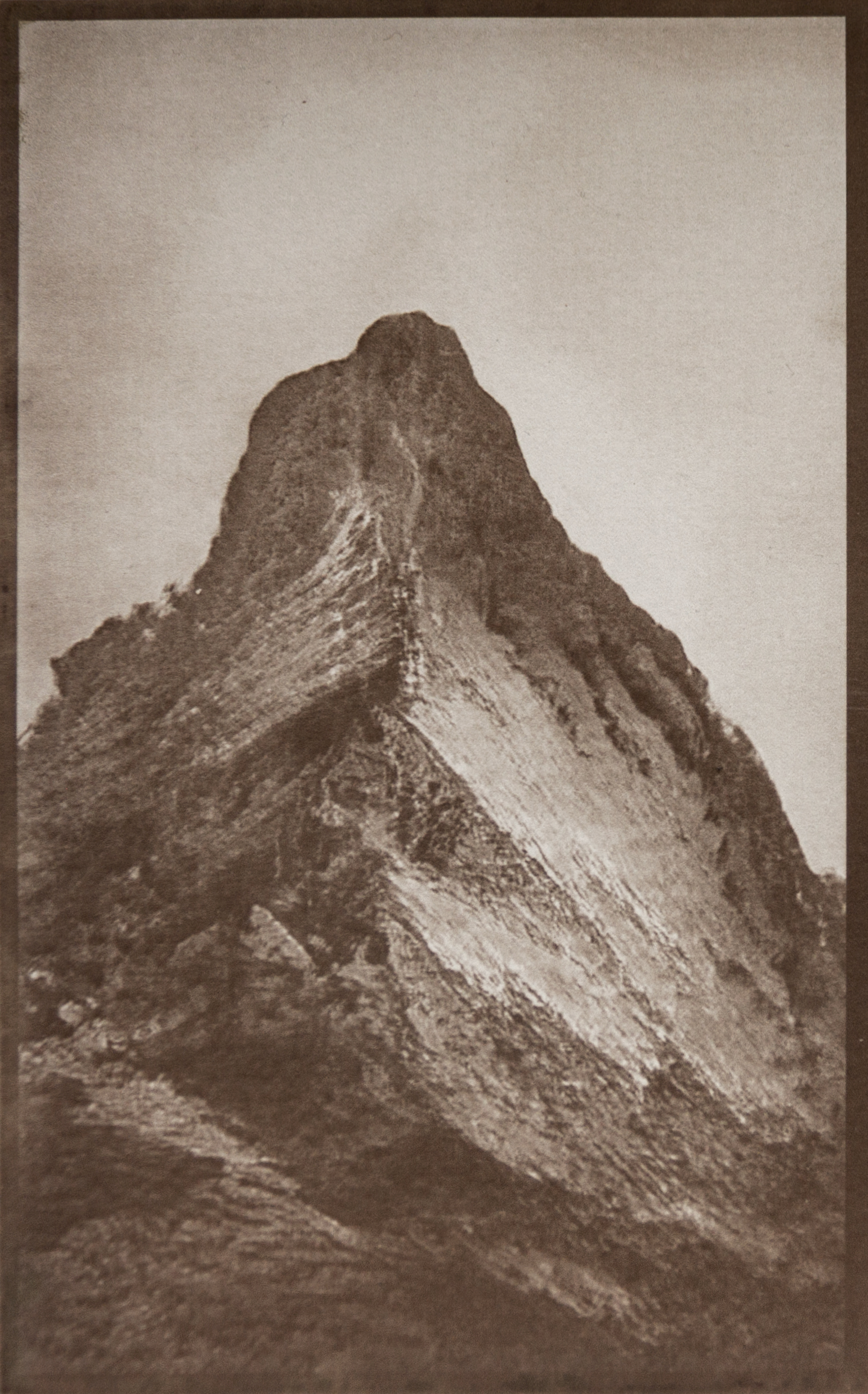

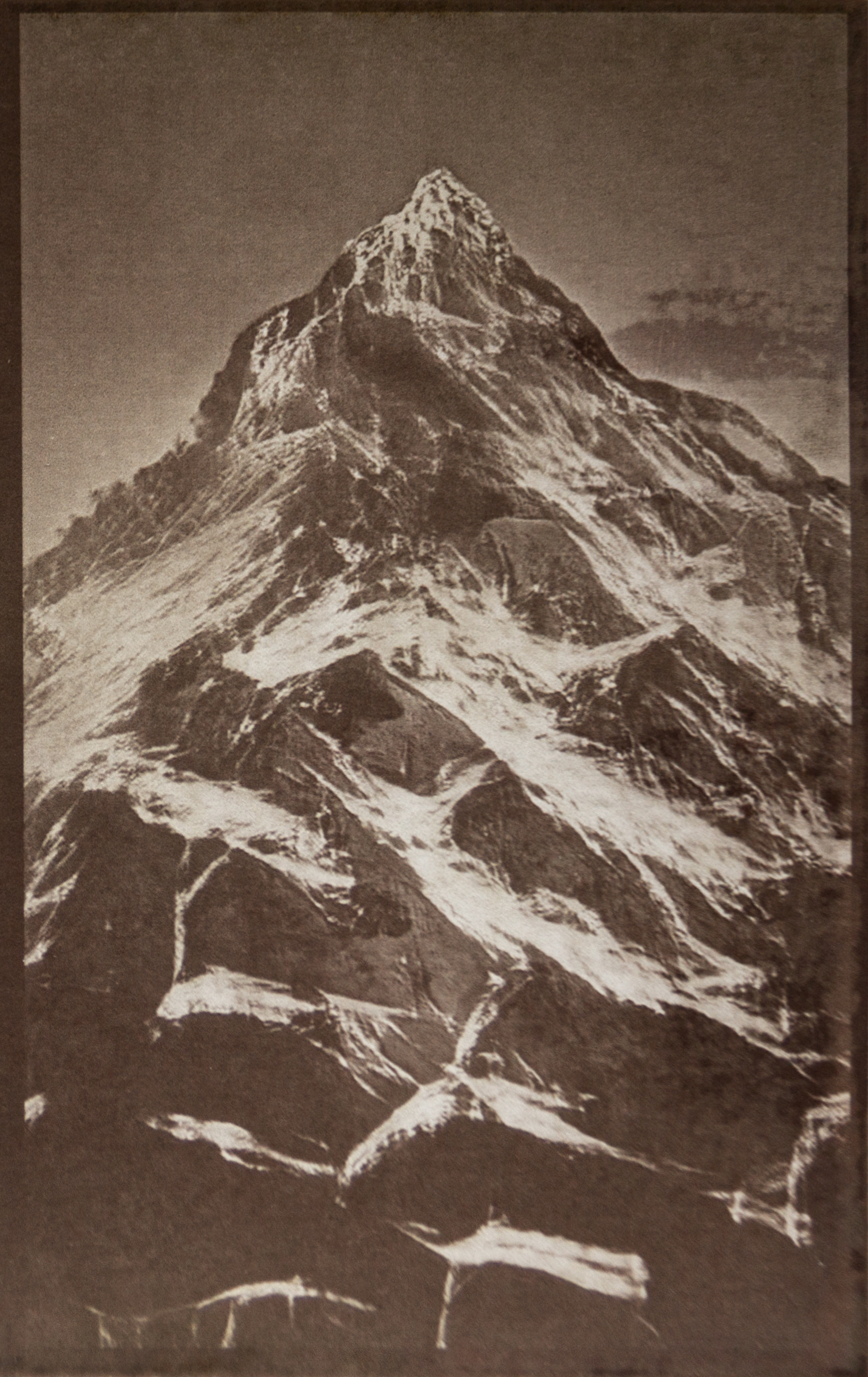

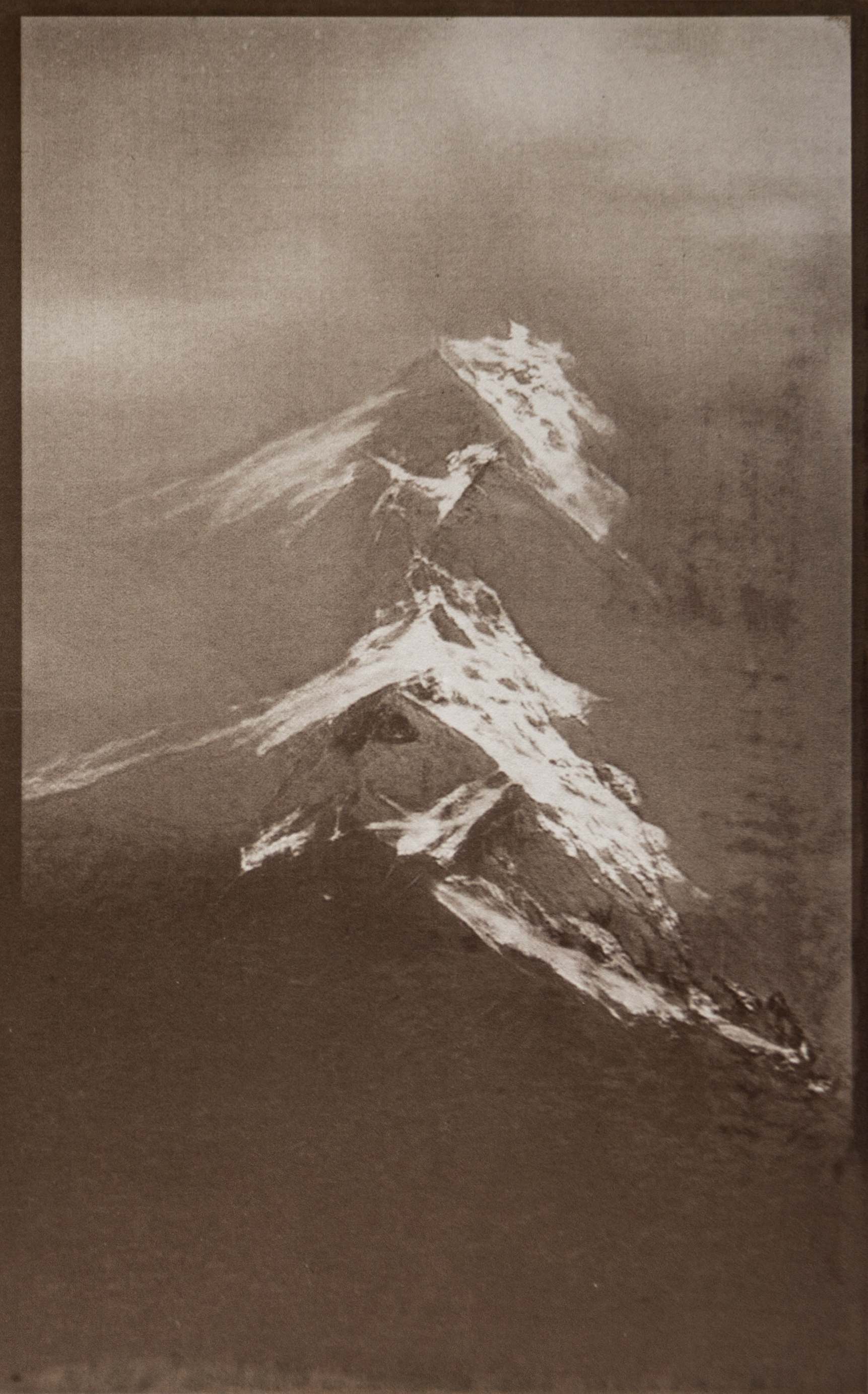

This work is a self-portrait made with machine learning trained on my own photography, drawings, and writing. I trained multiple datasets based mostly on my own photography and writing to see what the AI would learn and reflect back to me. I wanted to see what it would see about me; how it would make me. There are many questions on this subject one could consider: algorithmic bias, user honesty, creator bias, questions of agency, the accuracy of our digital selves, how we build our image of self, how others see us, and many more. The viewer is an important part of the work's life; it lives through their perception and participation.

Concept and Background Research

To be brutally honest, much of the inspiration for the project came from a frustration with previous projects and the evaluation of them, particularly my project in last year's degree show, as well as difficult events in the recent year. These included the pandemic but also things like my dog of 17 years dying, my father being diagnosed with ALS, and frustrations with what seemed to be my inability to create anything of any real artistic value. Things were no longer rewarding and educational; it was all demoralizing. While this seems like a harsh comment it was also my honest starting point: I only had my photographic library, my camera, some experience in illustration, and some writings I had done through the years. That was my independent creative output and I decided to harness those things as they were the only things I knew to any respectable degree. I needed to make something with these things and I was naturally in a very self-reflective mood due to the events around me.

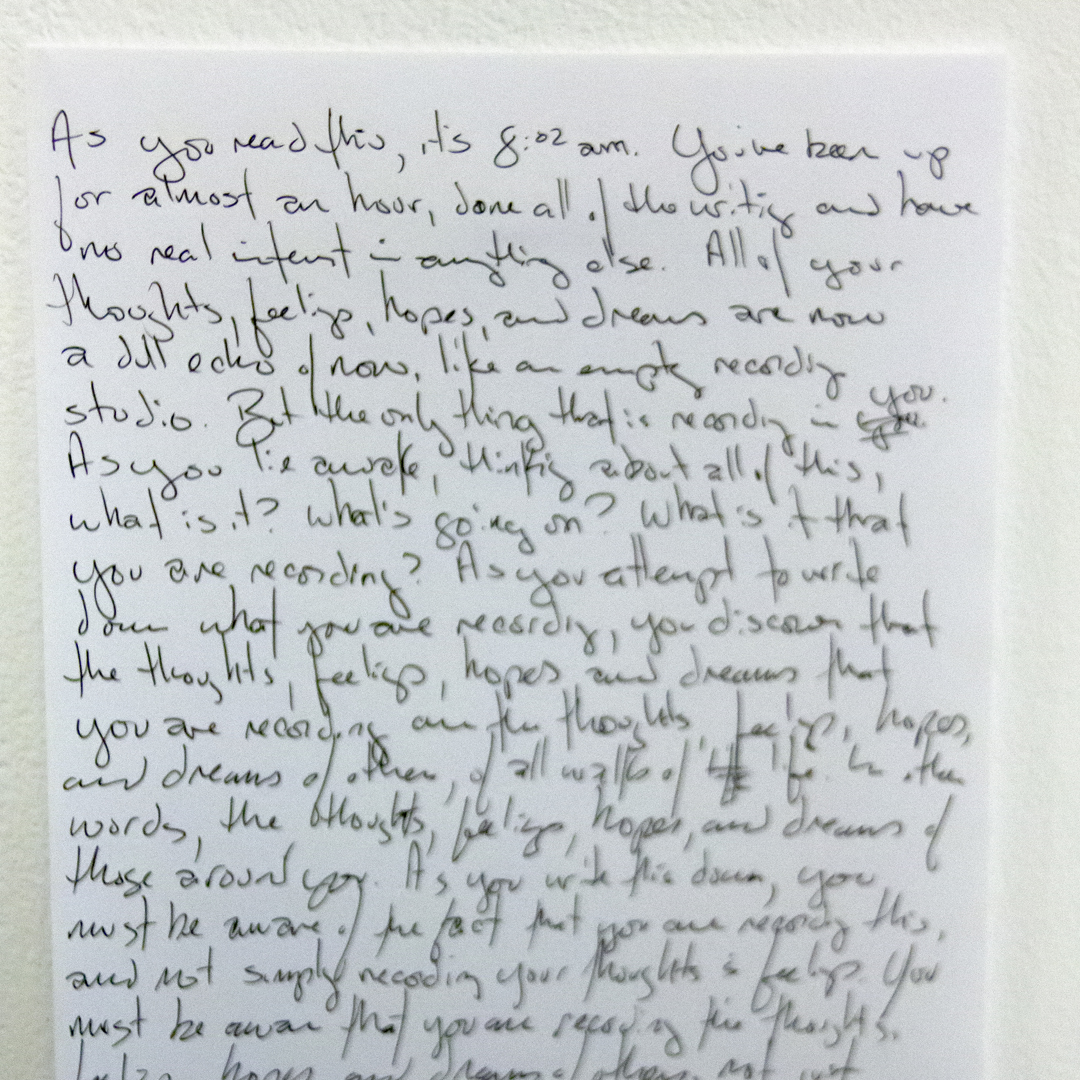

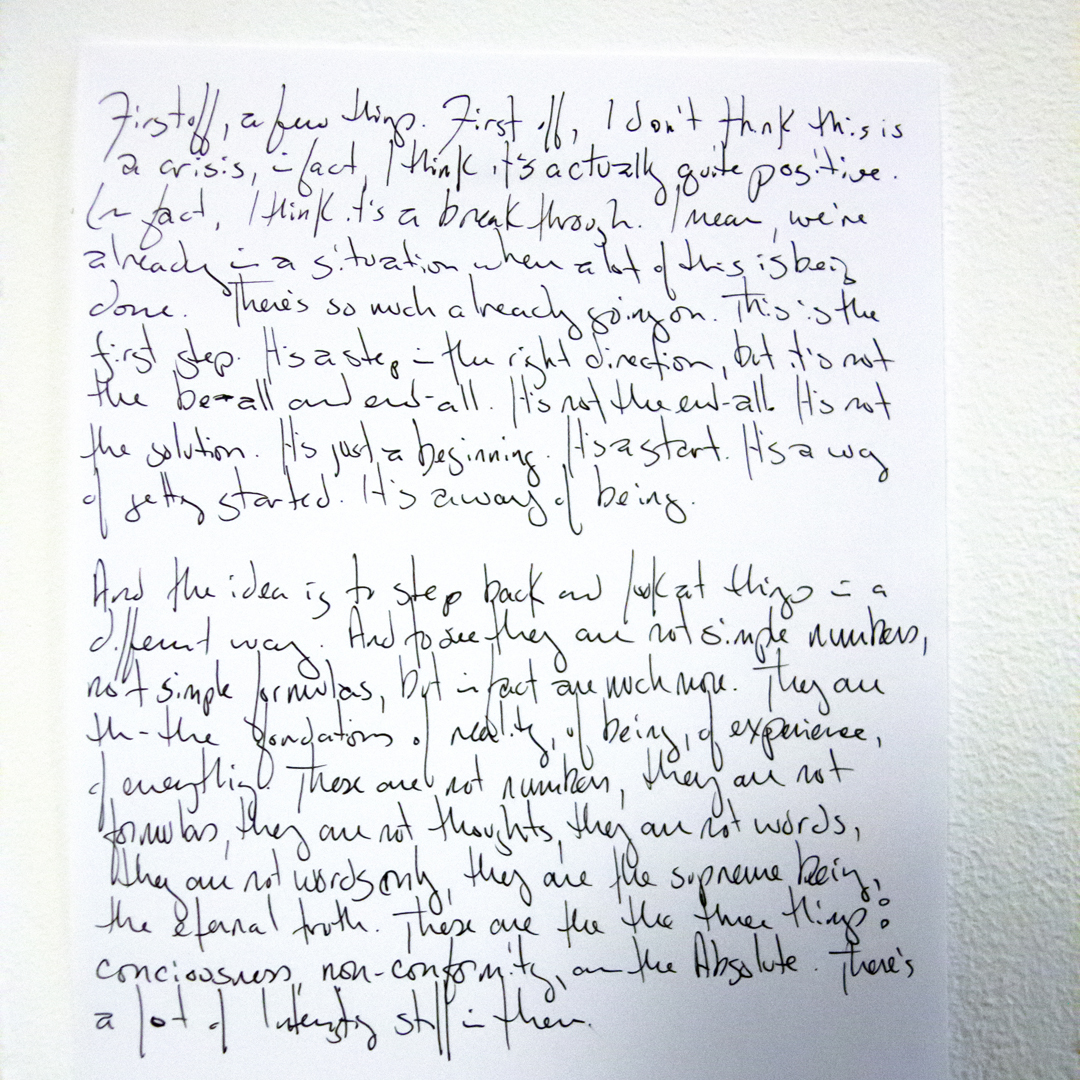

My concept was simple: use my own artwork to train machine learning models in order to see what the AI would give back to me. I wrote a research paper this year as part of the MFA that focused on how humans perceive text and images generated by artificial intelligence. This was partially inspired by my direction with my final project but it also influenced the work through a more nuanced understanding of how we see ourselves and others see something of us. This paper particularly influenced the decision to remove many of the results from their original context, such as salt printing the images like old photos, or hand-writing the text instead of printing it. The viewer often contextualizes things very different this way. My first step was to go through my old work that I had on hand in London, this was mostly very large databases of images I had taken through the years as well as some writing. Next, I needed more photos, particularly of myself, along with new drawings and keeping a diary for the vast text necessary for training. Finally, I had to try things. This was where most of the work came in. There are many resources for this type of work but much of it isn't easy. I tried simple things like RunwayML but also audited the computer department's AI class and read two well know books, following examples and generating what seemed like a lifetime of content -- much of it not that good.

Technical

Runway was the start and finish for the images but not the middle. There was an entire Keras/TensorFlow/Collab phase of learning and experimentation that melted my brain. Ultimately though the images seemed to be much more about the input vs any changes to core code. StyleGAN2 and A LOT of CAREFUL, LABORIOUS curation of the input and output resulted in some amazing images.

The text was slightly different as I both really enjoy natural language programming and found GPT2 to be a bit problematic. GPT2 can produce lengthy, somewhat coherent text but it's initial training model is very problematic. It is well known that it used text scrapped from the internet and it can produce some shocking and inappropriate content. Feed it more text and it does learn more, but the results swing wildly from subject jumping to getting stuck generating text from one input. I used some of the results but spent a lot of time laughing and frowning at much of the results. I had been working with NLP since last year and wrote a few different versions of my own text generator using the Natural Language Toolkit. It can't generate lengthy, accurate text but it can generate interesting and quirky bits of short text and the python outside of NLTK is *almost all mine.

Finally an important analogue portion of this project revolves around context. Early images were printed with salt-printing techniques, the method of many early photographs. Much of the text was written out by hand, instead of neatly printing it, in order to remove that generic "type" look. At the encouragement of my classmates some of the original drawings were displayed in the show, and finally, visitors were encouraged to interact with the project by writing and drawing for themselves displaying how they felt or what they took away from meeting this digital simulacra of myself.

Future development

If I had time and an amazing external GPU, the sky's the limit. I was only held back by time and money. Even with the code, I could do *SO much more with just a bit more experimentation as well GPU power. I really think I could produce some amazing things. I have so many files, folders, datasets, and notes on new experiments for new results. I have a lot. On the professional side becoming comfortable with Python as well getting some experience with machine learning, NLP, Keras, and TensorFlow may prove very beneficial.

Self Evaluation

I think this project looks good. I played catch-up to some very famous works already out there and sometimes like my results better. As mentioned above, if only I had a bit more time and A LOT more GPU power I could do SO MUCH. People responded well also, both my classmates and visitors. I hope it lives on.

References

Bird, Steven, Ewan Klein, and Edward Loper. Natural Language Processing with Python. 1st ed. Beijing ; Cambridge [Mass.]: O’Reilly, 2009.

Chollet, François. Deep Learning with Python. Shelter Island, New York: Manning Publications Co, 2018.

Dillon, Sarah. “The Eliza Effect and Its Dangers: From Demystification to Gender Critique.” Journal for Cultural Research 24, no. 1 (January 2, 2020): 1–15. https://doi.org/10.1080/14797585.2020.1754642.

OpenAI. “GPT-2: 1.5B Release,” November 5, 2019. https://openai.com/blog/gpt-2-1-5b-release/.

Jentsch, Ernst. “On the Psychology of the Uncanny (1906).” Angelaki 2, no. 1 (January 1997): 7–16. https://doi.org/10.1080/09697259708571910.

Lippmann, Walter. Public Opinion. New Brunswick, N.J., U.S.A: Transaction Publishers, 1997.

MacDorman, Karl F., and Debaleena Chattopadhyay. “Reducing Consistency in Human Realism Increases the Uncanny Valley Effect; Increasing Category Uncertainty Does Not.” Cognition 146 (January 2016): 190–205. https://doi.org/10.1016/j.cognition.2015.09.019.

McLuhan, Marshall. The Gutenberg Galaxy. London: Routledge & Kegan Paul, 1967.

McLuhan, Marshall, and Quentin Fiore. The Medium Is the Massage. London: Penguin, 2008.

Mori, Masahiro, Karl MacDorman, and Norri Kageki. “The Uncanny Valley [From the Field].” IEEE Robotics & Automation Magazine 19, no. 2 (June 2012): 98–100. https://doi.org/10.1109/MRA.2012.2192811.

NVlabs/Stylegan2. Python. 2019. Reprint, NVIDIA Research Projects, 2021. https://github.com/NVlabs/stylegan2.

Rogers, Carl R., Elaine Dorfman, Thomas Gordon, and Nicholas Hobbs. Client Centered Therapy: Its Current Practice, Implications and Theory. Reprinted. London: Robinson, 2015.

Shelley, Mary Wollstonecraft, and J. Paul Hunter. Frankenstein: The 1818 Text, Contexts, Criticism. 2nd ed. A Norton Critical Edition. New York: W.W. Norton & Co, 2012.

Weizenbaum, Joseph. “ELIZA—a Computer Program for the Study of Natural Language Communication between Man and Machine.” Communications of the ACM 9, no. 1 (January 1966): 36–45. https://doi.org/10.1145/365153.365168.