Acoustic Fields

Acoustic Fields (2019) is a site-specific installation in lecture theatre RHB 144 that attempts to enhance a participant's awareness of the technological devices and systems that are present in the space, constantly working, even when the lecture theatre is not in use. Acoustic Fields explores human-technology relations by isolating and amplifying the sound of each object in relation to a person's position in the space. By combining machine vision (to track a person's position in space) with amplified technological sounds, it seeks to explore how these sounds mediate the audience's perception of space and of the objects themselves.

produced by: Jonny Fuller-Rowell

Introduction

This practice-based research investigates how the sounds of functioning technological objects mediate our experience of an environment. Using openFrameworks running on a Raspberry Pi combined with a webcam, the device tracks a person's position in space, calculates the person's distance from each object and maps that distance to the amplitude of the object's sound. The installation seeks to explore how these technological sounds mediate the audience's perception of space and of the objects themselves by investigating how objects transition from functioning unconsciously as 'background relations' to conscious 'alterity relations'. The intention of situating this artwork in a lecture theatre, as opposed to an art gallery, is to achieve a more lasting perceptual change as the viewer questions their own experience of everyday space.

Research questions:

- What happens when you amplify and isolate sounds from within their environment?

- How does our understanding of human-technology relations change when objects which function as background relations move into our conscious awareness?

- Can we develop a different understanding of functioning technological objects through sound?

- Can our sensitivity to these sounds / objects continue beyond this experience, leading to a heightened awareness in everyday environments?

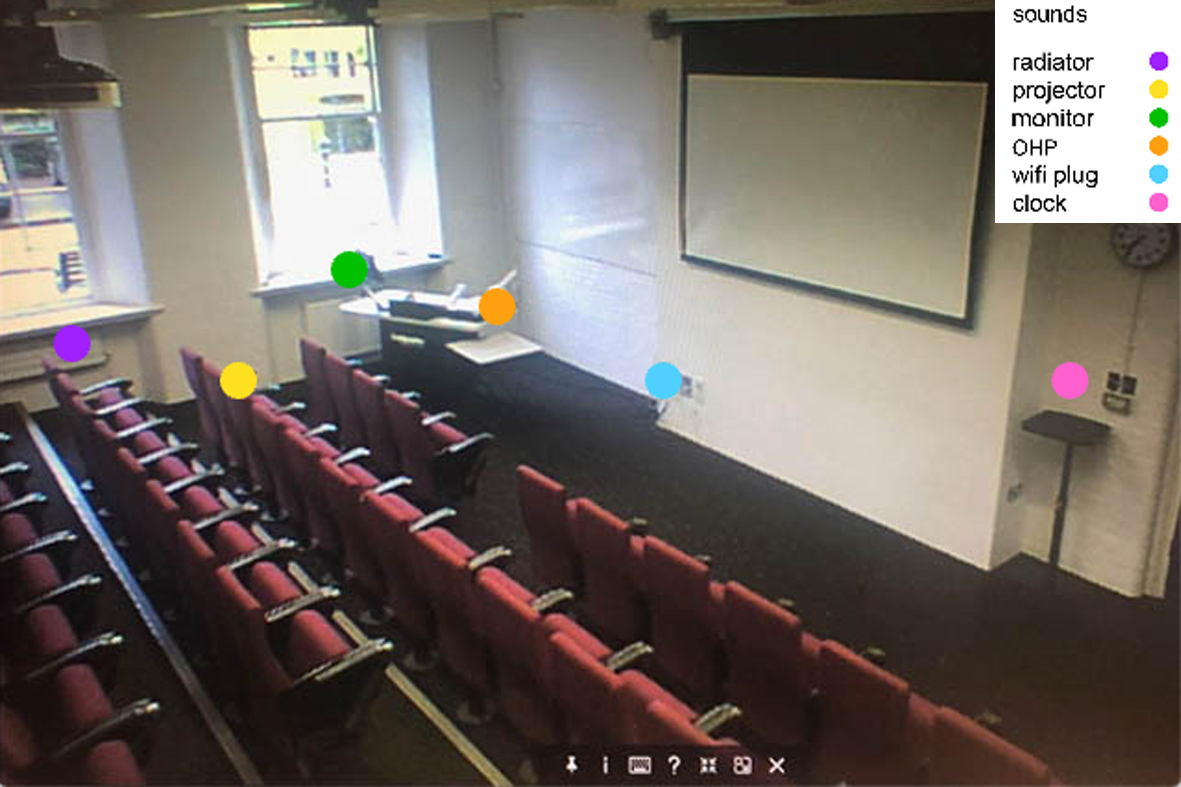

PS3 Eye, Raspberry Pi and battery in a glass-lidded biscuit tin mounted on the wall / PS3 Eye connected to a Raspberry Pi 3 and a battery / Guide image of RHB 144 with plotted points of objects in the space.

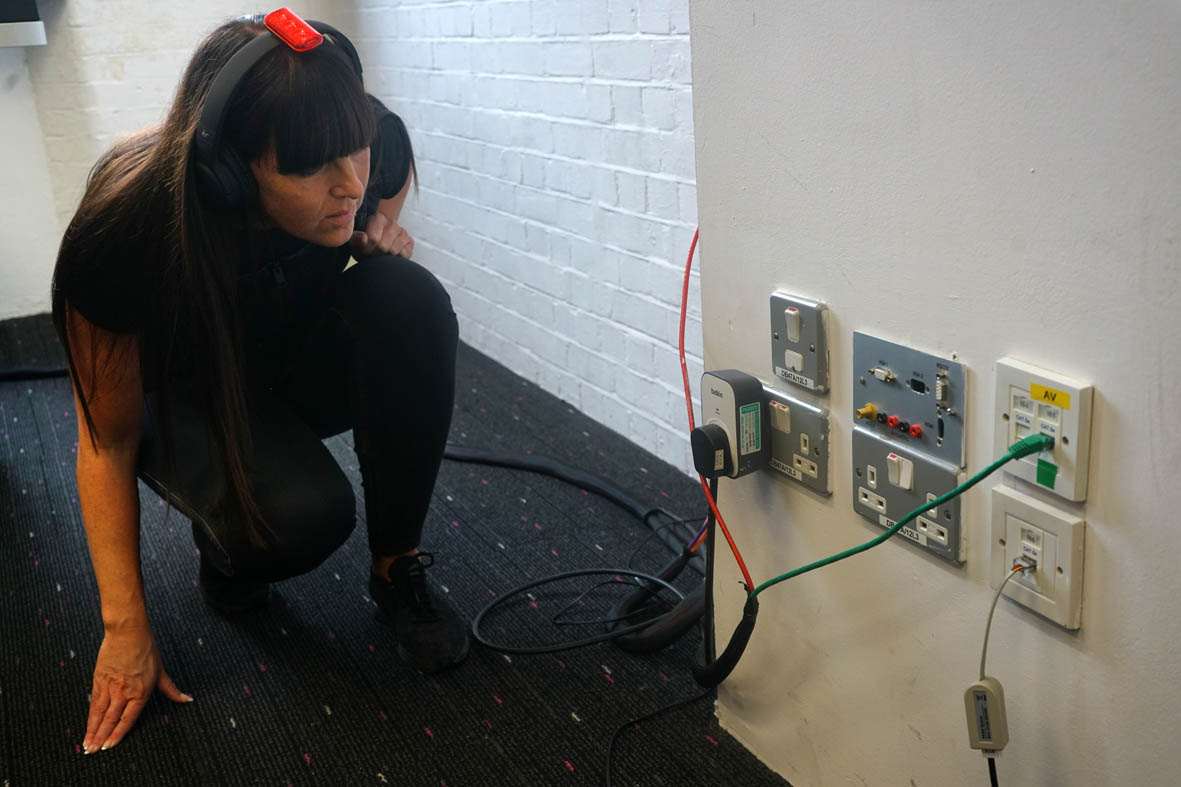

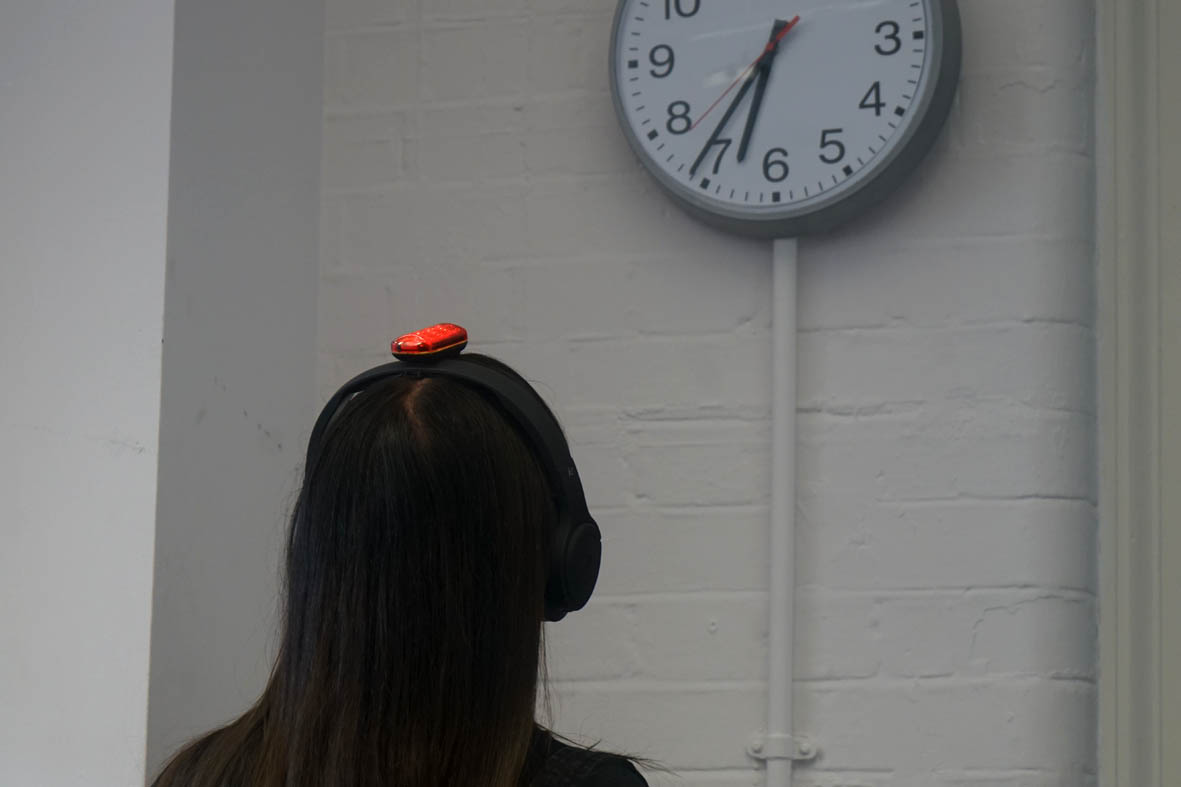

Documentation of Acoustic Fields in lecture theatre RHB 144, participant listening to the sound of technology in the room.

Concept and background research

I was inspired by Don Ihde's post-phenomenological theory, which explores our developing relationship with technology. I was most interested in the interplay between his terms 'background relations', where technologies are the context for human experiences and actions, and 'alterity relations', where humans interact directly with technologies with the world in the background. I wanted to use sound as a way of toggling our perception of these objects from background to foreground. I was also influenced by Martin Heidegger's 'tool analysis', which argues that for the most part we do not encounter things as 'present in our minds'. How much of the world do we experience unconsciously, and what effect are these unconscious technological mediations having on us? Graham Harman's 'Object-Oriented Philosophy' was also a major influence. He theorises that objects exist independently of human perception, and he considers the relationship between a human being and an object to be the same as that between two or more objects.

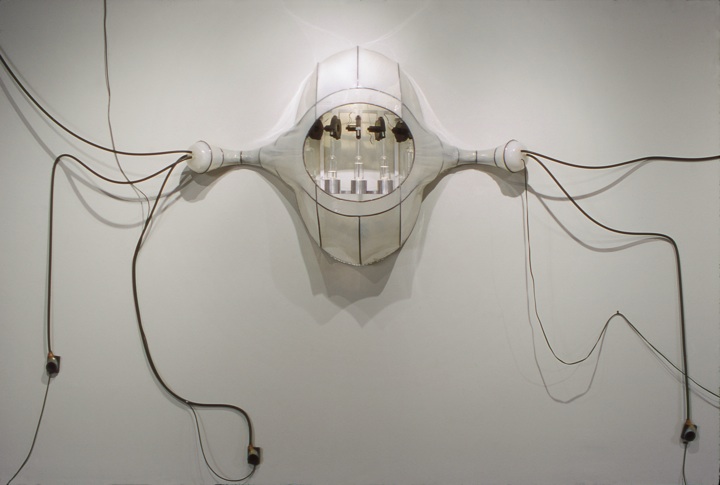

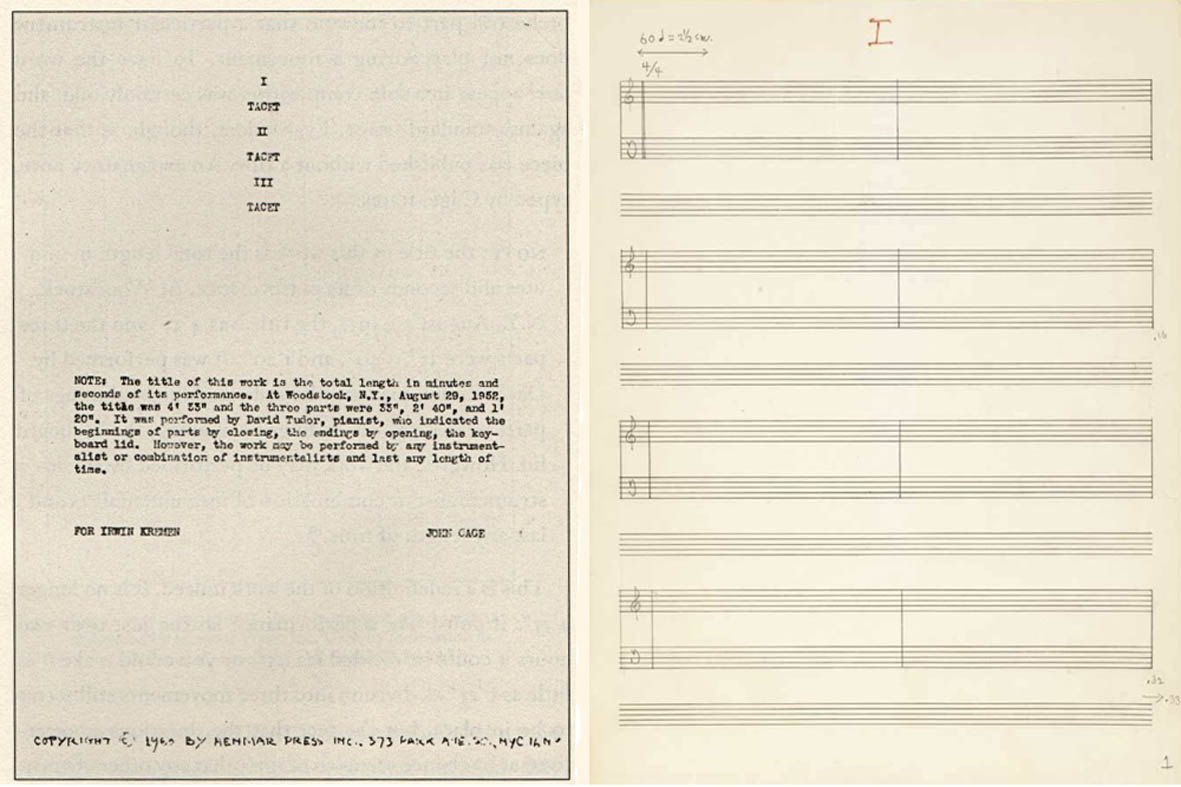

I was also inspired by the sound artists David Cunningham, Sabrina Raaf and John Cage. David Cunningham's The Listening Room (2003) attempts to make the audience more conscious of what they are 'listening' to, exposing the space itself by magnifying the sounds of the room. His work allows the resonant frequencies of a space to become audible. However, instead of isolating these sounds, Cunningham's system merges them into one ambient cacophony, escalating in amplitude until it triggers a noise gate and begins again. Sabrina Raaf's Unstoppable Hum (2000) generates its own humming through a set of “fans” that blow air into corresponding glass jars partially filled with water. She is interested in the interplay between background and foreground relations; however, by appropriating these sounds they become something new, separated from their original source. Acoustic Fields amplifies rather than alters, investigating how objects transition in and out of consciousness and ultimately how that affects our perception of space. John Cage's 4'33” (1952) is also an important influence: each 'performer' quietly listens with full awareness to the sounds audible at that moment, making the distinction between hearing (passive) and listening (active).

Sabrina Raaf, Unstoppable Hum (2000) Media: Steel, Rubber, Aluminum, Glass, Custom Electronics, Dimensions: 84” x 70” x 12” / John Cage, 4'33'' (1952) score, 11 x 8 1/2" (27.9 x 21.6 cm) sheet paper. / David Cunningham, The Listening Room (2003) Installation view in Chisenhale Gallery, London.

Technical

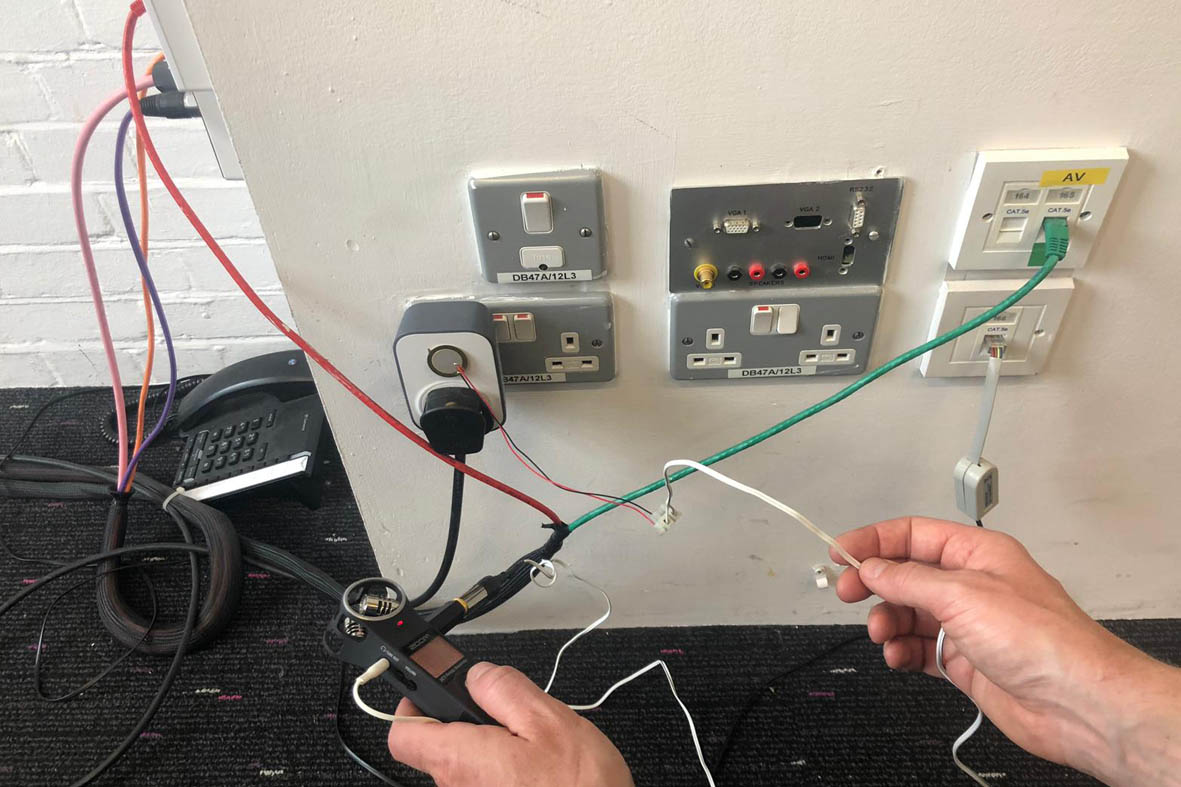

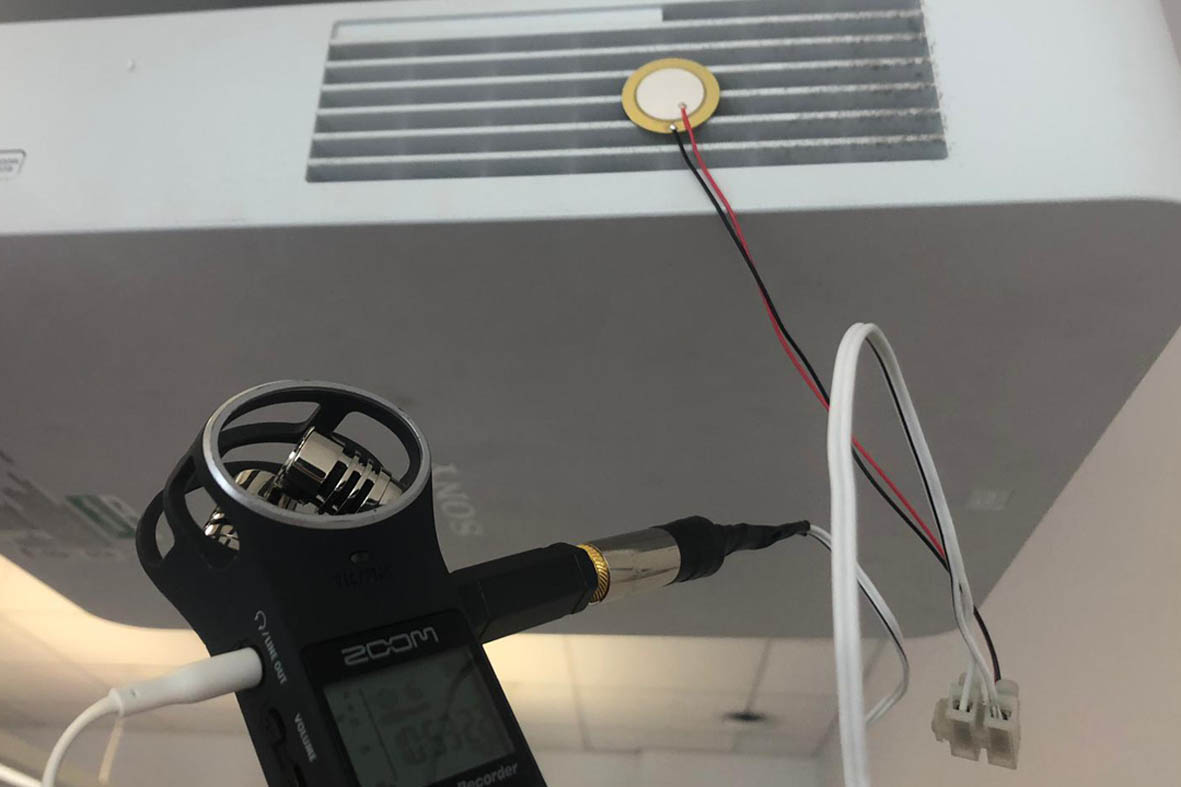

I wanted a way to track a person's position in space even if the space had more than one person in it. To do this I decided to use colour tracking instead of motion tracking, with a red light mounted onto a pair of Bluetooth headphones. With the camera mounted on the ceiling, it would be able to single out the person wearing the red light and track that person's position. Objects were plotted onto the scene with prerecorded sounds. By using distBetween I could then work out the person's distance from each object and map that distance to the amplitude of each object's sound, so that as the participant walks closer to an object its sound gets louder.

I used a piezo contact microphone to record each object's sound because, unlike normal air microphones, contact microphones are almost completely insensitive to airborne sound. They transduce only structure-borne vibrations, which heightens the material quality of the recordings.

Making this work practically was the main challenge. I installed openFrameworks onto a Raspberry Pi 3 and used a PS3 Eye webcam with a small mobile phone battery for power. I housed the device in a biscuit tin with a glass lid and made a bracket to mount it onto the ceiling. I used VNC to control the Raspberry Pi from my computer, which made it easier to set up the program while a test participant walked around the space so I could find the best points of activation. The points were not necessarily positioned on the actual objects but at the person's position in space when closest to an object. Once the work was installed, participants were invited to experience the space with no introduction. Unguided written feedback from participants was collected and used in the findings.
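The tracking and mapping steps described above can be sketched in plain C++. This is a minimal sketch rather than the installation's actual code: the `Point2` and `SoundPoint` types, the colour thresholds, the linear falloff and the signature given to `distBetween` are all assumptions made for illustration. It takes the centroid of the "red enough" pixels in an RGB frame as the person's position, then turns the distance to an activation point into a volume between 0 and 1.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Illustrative names throughout; none of these come from the original project code.
struct Point2 { float x; float y; };

struct SoundPoint {
    std::string name;  // e.g. "projector"
    Point2 pos;        // activation point in camera-image pixels
    float radius;      // activation radius in pixels
};

// Colour tracking: centroid of "red enough" pixels in an interleaved RGB frame.
// Returns false when the frame is too small or too few pixels match.
bool findRedCentroid(const std::vector<std::uint8_t>& rgb, int width, int height,
                     Point2& out, int minPixels = 4) {
    if (width <= 0 || height <= 0) return false;
    const std::size_t needed = static_cast<std::size_t>(width) * height * 3;
    if (rgb.size() < needed) return false;

    long sumX = 0, sumY = 0;
    int count = 0;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const std::size_t i = 3 * (static_cast<std::size_t>(y) * width + x);
            const int r = rgb[i], g = rgb[i + 1], b = rgb[i + 2];
            // Assumed thresholds: strong red channel, weak green and blue.
            if (r > 200 && g < 80 && b < 80) { sumX += x; sumY += y; ++count; }
        }
    }
    if (count < minPixels) return false;
    out.x = static_cast<float>(sumX) / count;
    out.y = static_cast<float>(sumY) / count;
    return true;
}

// Euclidean distance between two image points (assumed signature).
float distBetween(Point2 a, Point2 b) {
    const float dx = a.x - b.x, dy = a.y - b.y;
    return std::sqrt(dx * dx + dy * dy);
}

// Linear mapping: volume 1.0 at the point, falling to 0.0 at the activation radius.
float volumeFor(const SoundPoint& p, Point2 person) {
    if (p.radius <= 0.0f) return 0.0f;
    const float v = 1.0f - distBetween(p.pos, person) / p.radius;
    return std::max(0.0f, std::min(1.0f, v));
}
```

In an openFrameworks app, the value returned by `volumeFor` would be applied to each object's sound on every frame. Fixed RGB thresholds like these are sensitive to ambient light, so they would need tuning for the room.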

Object recordings with a piezo contact microphone.

Future development

“The work made me more aware of the workings of objects, the isolation worked but I would have liked to hear them all at the same time” (audience feedback)

Vision objectifies the world into objects, and though sound also has this capacity, it can also merge the sounds of objects into one. I would like to experiment by activating more than one object at a time, perhaps having a point where all the sounds merge so that no one object is distinguishable, to see how that affects the experience of the site and a person's relationship to the objects in it. I would also like to set it up in different locations to see if the results are more or less effective. Perhaps experiencing the amplified sounds in more familiar locations (where participants have more personal associations with objects) would produce different results.
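One possible way to let several objects sound at once is sketched below. This is an assumption about how such a version might work, not something that was built: the `Emitter` type and the `mixGains` function are hypothetical. Every object gets a gain from the same linear falloff, and where activation radii overlap the gains are scaled so that their sum never exceeds 1, which keeps the combined level bounded.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Hypothetical activation point: position and radius in camera-image pixels.
struct Emitter { float x; float y; float radius; };

// Returns one gain per emitter for a person standing at (px, py).
// Overlapping radii produce several non-zero gains at the same time.
std::vector<float> mixGains(const std::vector<Emitter>& emitters, float px, float py) {
    std::vector<float> gains;
    gains.reserve(emitters.size());
    float total = 0.0f;
    for (const Emitter& e : emitters) {
        float g = 0.0f;
        if (e.radius > 0.0f) {
            const float dx = e.x - px, dy = e.y - py;
            const float d = std::sqrt(dx * dx + dy * dy);
            g = std::max(0.0f, 1.0f - d / e.radius);  // linear falloff
        }
        gains.push_back(g);
        total += g;
    }
    // Scale down only when the summed gain would exceed full volume.
    if (total > 1.0f) {
        for (float& g : gains) g /= total;
    }
    return gains;
}
```

With this scheme, a person standing midway between two objects with equal radii hears both at the same level, which is one way of approaching the point where no single object is distinguishable.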

Self evaluation

“This artwork made me pay attention to hidden sounds and made me feel sensitive to objects that are usually invisible to me” (audience feedback)

“This is the first time I have experienced an artwork that focuses on sound and it was effective. It made me reconsider the sound vibrations that objects make and made me realise that so many objects are still working/functioning even in an empty auditorium” (audience feedback)

“After experiencing this piece, my perception was heightened for sure, I could hear the hum of the projector and the click of the radiators when I sat in a different lecture space the following day” (audience feedback)

I think the idea was quite successful, and the feedback was positive. I did feel a different relationship to the technological objects, and it made me think about how I physically experience a space. My main challenge was practically setting up the installation. My initial idea was to set the webcam up on the ceiling facing directly down so it would always have a direct line of sight to the red light on the headphones; however, the ceiling was not high enough. The device had to be mounted high up in one corner of the room in order to see the whole scene. This meant that the webcam was seeing the space horizontally, not vertically, and therefore defining the objects was more challenging. I had to reduce the 'activation' radius around the objects which triggered the sounds, so there was less variation in the amplitude of the objects' sounds.

The red light used to track a person's position was not always reliable. The sunlight from the windows in the room affected how well the webcam could see the red light mounted on the headphones: it worked with the lights off on a cloudy day, but with sun coming through the windows it did not work. If the device had been mounted on a high ceiling, this would have eradicated the light interference problems and given it a direct line of sight to the person. I have learnt to always start with a specific space in mind and work from there. This was the first time I have used openFrameworks on a different system (Raspberry Pi), which was a big learning curve. Installing the software and getting the webcam to work was a challenge; however, making something work in the 'real world', even though the code was relatively simple, was a rewarding experience.

References

CODE, week 12 – color tracking

HARMAN, G. (2002). Tool-Being: Heidegger and the Metaphysics of Objects.

HEIDEGGER, M. (1962) (first published in 1927). Being and Time. Translated by J. Macquarrie and E. Robinson. Basil Blackwell, Oxford.

IHDE, D. (1990). Technology and the Lifeworld: From Garden to Earth. Indiana University Press.

LABELLE, B. (2015). Background Noise, Second Edition: Perspectives on Sound Art. Bloomsbury Academic, London.

PRITCHARD, H. (2018). 'At the border of self and other'. Computational Arts-Based Research and Theory exercise, Goldsmiths, University of London.

OOHASHI, T. (2000). Inaudible High-Frequency Sounds Affect Brain Activity: Hypersonic Effect. Journal of Neurophysiology.

CUNNINGHAM, D. (2003). The Listening Room.

CAGE, J. (1952). 4'33”.

RAAF, S. (2000). Unstoppable Hum.