The Unbearable Lightness Of Mixed Signals

A balloon-drone light installation that uses face-detection to engage with its audience: an agent of pre-cognitive dissonance that explores the tension between beauty and fear.

“There was never a time when human agency was anything other than an interfolding network of humanity and non-humanity”

Jane Bennett, Vibrant Matter.

produced by: Owen Planchart

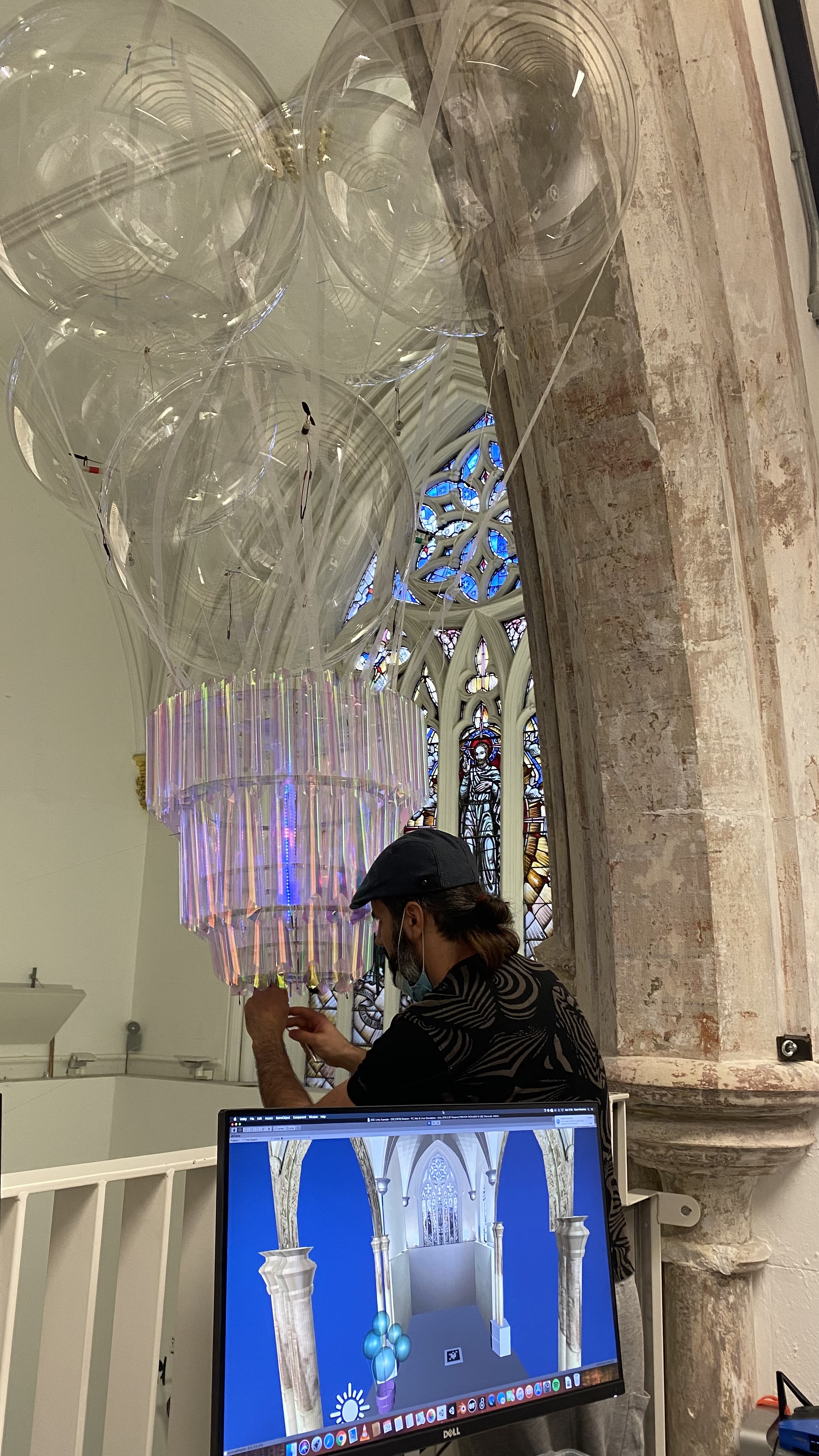

The Unbearable Lightness Of Mixed Signals is an affectively disobedient object that inspects the limbic friction between joy and fear. Conceived as a balloon-drone-floating-chandelier, it uses face-detection in a predatory way. Its agency is derived by increasing the volatility within its material properties: light-emission, refraction and suspension are all waiting to be stirred by the presence of a witness.

Affective Disobedience

Through no fault of their own, chandeliers are disobedient objects: by design they are attractive, bright and translucent, but by default they are suspended precariously from a great height, which means we are biologically hardwired to avoid them, even when we know them to be harmless. This piece is an investigation of what happens when an object with a bi-directional emotional signature becomes animate, creating an intricate space of conflicting affects in order to engage with its viewer.

From a technical perspective, the idea is to unhinge the chandelier from its static coordinates without letting it precipitate to the ground, by using helium balloons and motors. When a spectator gazes at it, it responds by changing its blue-flame lighting pattern to a red-flame one and by propelling itself vertically. Before it reaches the ceiling, the propellers stop spinning and the chandelier falls; once it is just above head-height, the flame turns green and the propellers break its fall.

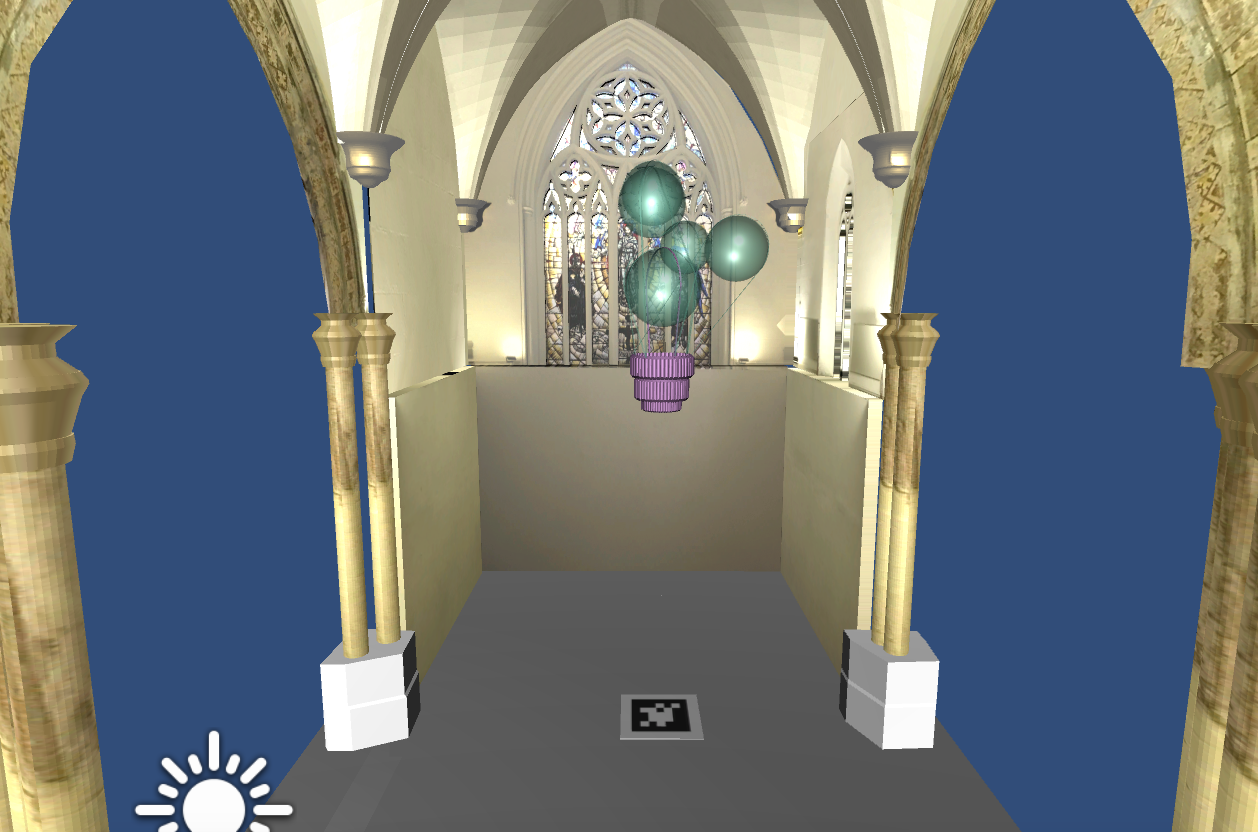

From a conceptual standpoint, the aim was to let the chandelier's full affective spectrum play against cultural, social and scientific expectations, and to welcome a new kind of reading, a new kind of "thing-poetry": to explore the hauntological chasm of a medieval ornament performing a new pattern of lighting and movement in relation to an audience, with the help of modern technologies.

Whilst reading the Odyssey's myth of the Sirens (the tale of mythological creatures that lure you with beautiful song only to tear you to pieces on their island made out of the skulls and bones of sailors), it occurred to me that the assemblage we call the chandelier had the same net affective connotations as this epic poem: it is both beautiful and potentially lethal, fragile yet imposing; it seduces us with all its opulent charm but instills fear at the sheer impossibility of its suspension from a great height. The narrative tool which Homer uses to connect us to Odysseus' plight is to combine seduction with threat; he uses this tension to latch onto our awareness and steer it into the heart of his story. The chandelier is a compressed affective space that distills the array of its properties into an emotionally incongruous experience similar to that of the Greek poem. The purpose of The Unbearable Lightness of Mixed Signals is to animate the affective disobedience of the chandelier in relation to its audience; to enunciate the non-linguistic expressivity of its "thingness" as it plays out the friction of its built-in luxury and violence.

Objects often communicate affect more clearly by virtue of their silence. Jane Bennett also speaks of the "porosity" of things and their ability to trespass the membrane of otherness, enabling a dynamic flow of forces: they are "susceptible to infusion, invasion and collaboration by and with other bodies". Accentuating, elevating and animating this intercorporeal dissonance embedded in objects is the space this installation aims to probe.

It is here that I would like to bring attention to the work of the Creative Design duo Studio Drift. Their piece 'Materialism', whilst not exactly computational, perfectly illustrates the affective vibrancy of objects by reverse engineering them into their most basic constituents. We are thrown into a space of contemplation as the familiarity with these common objects gets obliterated without physically adding or subtracting anything. The work rearranges our affective response to the everyday artefact and elicits a complex cognitive process similar to a timelapse of someone piecing a puzzle together.

Technical

The biggest challenge was always going to be the loss of helium from the balloons, but as this is not computational I will not go into the painstaking details. Suffice it to say that most floating installations of this kind only perform for 30 minutes at a time; when all was said and done, this piece was up for more than 4 days.

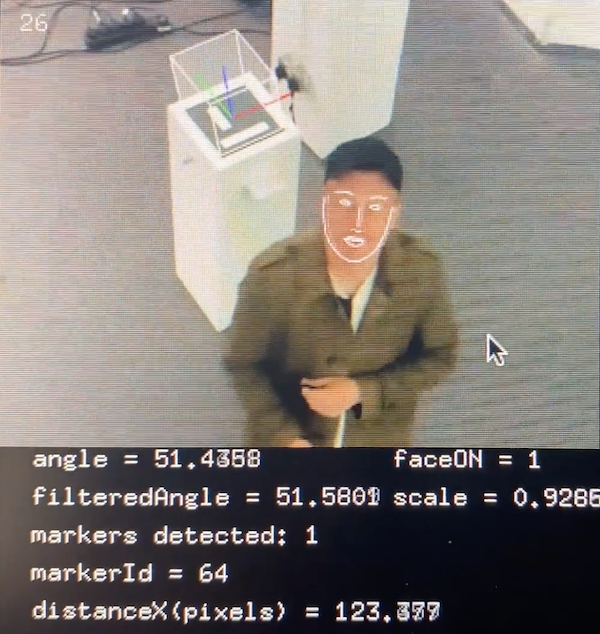

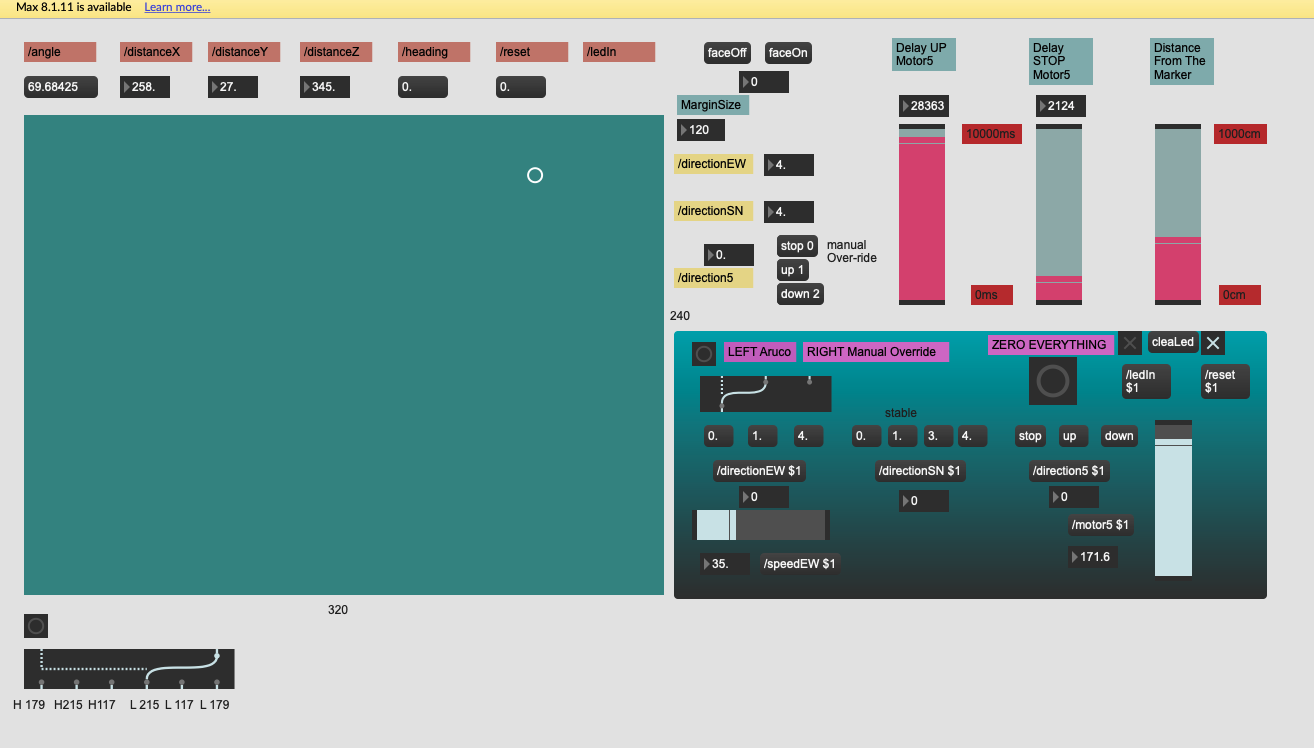

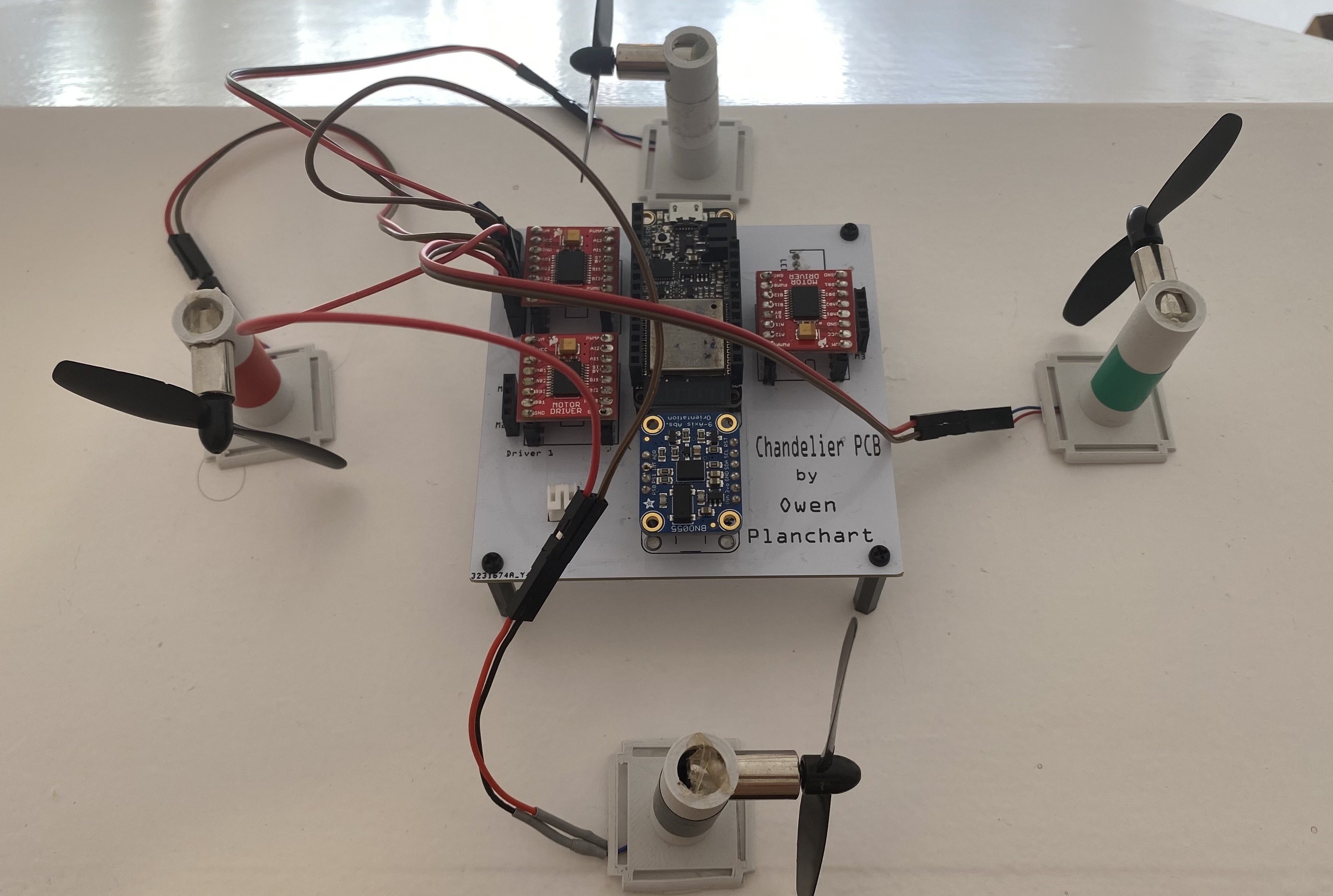

Using a Raspberry Pi single-board computer, the installation sends a video stream to an off-board computer that runs the face-detection algorithms. Their output is converted into motor information and sent to an ESP32 micro-controller, driving the balloons away from the onlooker below. As the viewer gazes up at the chandelier, it turns from a blue-flame into a red-flame lighting pattern. All this information is parsed by Max MSP. The camera also detects an ArUco marker behind the viewer that gives information about the chandelier's location and rotation; this information is sent by openFrameworks to Unity to simulate them inside a virtual 3D model of the Church space.

HARDWARE:

- RaspberryPiZero and Camera Module V.2.1

- ESP32 Huzzah Feather Board

- WS2812 Addressable LEDS

- BNO055 Inertial Measurement Unit

- TB6612FNG motor drivers

SOFTWARE:

- GStreamer 1.0

- Arduino 1.8.10

- Max 8

- openFrameworks 0.10.1

- Unity 2019.3.3

- TouchOSC

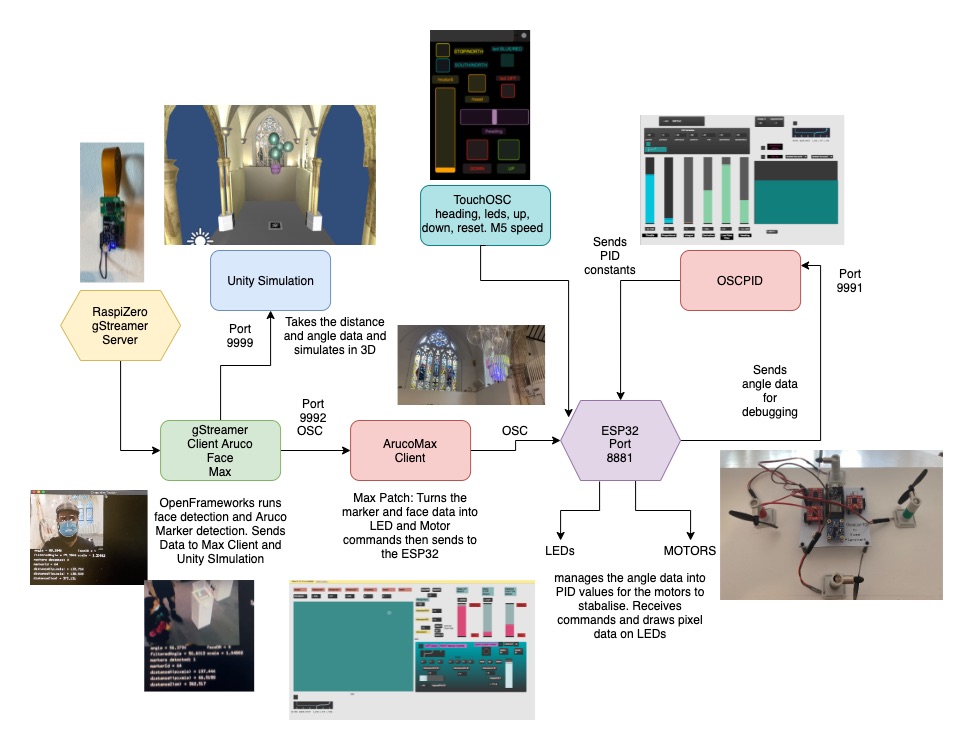

This is an image of the overall pipeline:

The three most important libraries for the interaction in this project are ofxGStreamer, ofxAruco and ofxFaceTracker. Weaving them together into a single sketch was no easy task. Once I had the stream up and running, it became apparent that it was far more efficient to do all the detecting and calculating off-board. Face-tracking was by far the most difficult to integrate, as it kept dragging down the frame-rate of the sketch. It also had to deal with face-masks (which Kyle McDonald's addon managed very well). Whilst automation would have been ideal, it was paramount that I could monitor what the chandelier was seeing. GStreamer is a multimedia framework that allowed the Raspberry Pi to send video over a wifi network. After a lot of fine-tuning of resolutions and protocols, the system had very little latency. I did, however, have to compromise on the resolution of the feed in order to reduce lagging and screen-tearing. I also used a confidence score (more on this later) to filter out false positives.
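As a rough illustration of the confidence gating mentioned above, here is a minimal Python sketch. It is not the openFrameworks code used in the piece: the `Detection` structure, the 0 to 1 score range and the threshold values are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    """One candidate face reported by a detector (hypothetical structure)."""
    x: float           # centre of the face in the frame, normalised 0..1
    y: float
    confidence: float  # detector score, assumed here to lie in 0..1


def accepted_faces(detections: List[Detection], threshold: float) -> List[Detection]:
    """Keep only the candidates whose score reaches the threshold."""
    return [d for d in detections if d.confidence >= threshold]


def face_present(detections: List[Detection], threshold: float) -> bool:
    """True when at least one candidate survives the confidence gate."""
    return len(accepted_faces(detections, threshold)) > 0


# Two candidates in one frame: a clear face and a doubtful one.
example_frame = [Detection(0.5, 0.4, 0.92), Detection(0.1, 0.9, 0.35)]
```

With a strict threshold such as 0.8, only the first candidate in `example_frame` is accepted; lowering it to 0.3 lets the doubtful one through as well, which trades accuracy for a more responsive object.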

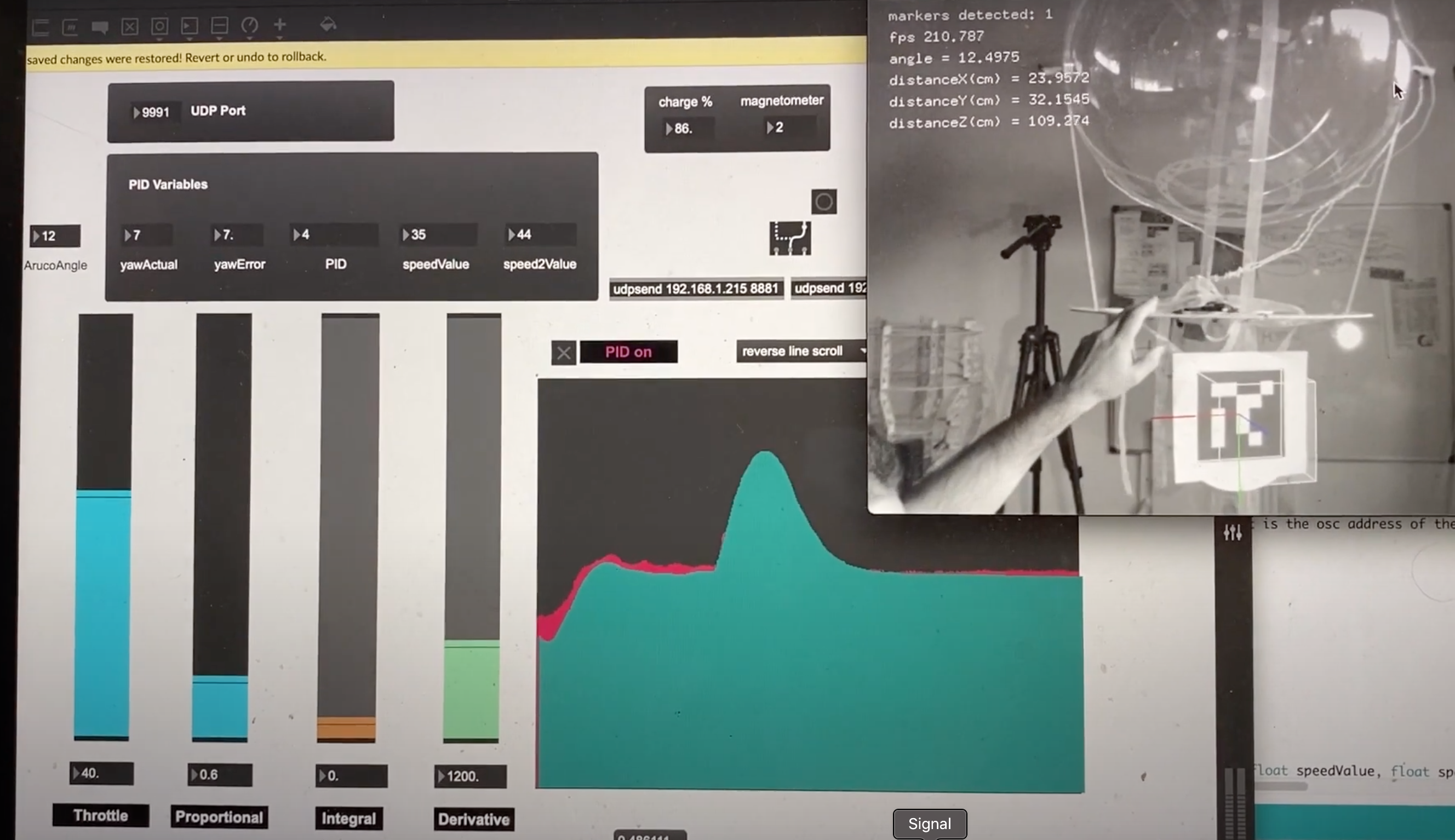

On the Arduino side, the most complicated problem to solve was the absolute rotation of the drone. The magnetometer (compass) within the IMU would lose its calibration as a result of the EMF from the wires and motors. I tried to solve this problem by adding computer vision and ArUco markers, and by using a complementary filter that listens to the gyroscope for small changes in rotation but trusts the marker over extended periods. This worked well to reduce drift and give an accurate angle that a PID system could use to correct the heading of the drone.
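The idea of fusing the gyroscope with the marker and feeding the result to a PID loop can be sketched as follows. This is a generic Python illustration rather than the firmware that ran on the board: the class names, the blend weight `alpha`, the gains and the output limit are all assumed values chosen for the example.

```python
def wrap_deg(angle: float) -> float:
    """Wrap an angle in degrees into the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


class HeadingFilter:
    """Complementary filter: gyro for short-term change, marker for long-term truth."""

    def __init__(self, alpha: float = 0.98, heading: float = 0.0):
        self.alpha = alpha      # weight given to the gyro prediction (assumed value)
        self.heading = heading  # current heading estimate in degrees

    def update(self, gyro_rate_dps: float, dt: float, marker_heading=None) -> float:
        # Short term: integrate the gyro yaw rate (degrees per second).
        predicted = wrap_deg(self.heading + gyro_rate_dps * dt)
        if marker_heading is None:
            # Marker out of frame or occluded: rely on the gyro alone.
            self.heading = predicted
        else:
            # Long term: pull the estimate a small step towards the marker.
            error = wrap_deg(marker_heading - predicted)
            self.heading = wrap_deg(predicted + (1.0 - self.alpha) * error)
        return self.heading


class HeadingPID:
    """Textbook PID on the heading error, with the output clamped."""

    def __init__(self, kp: float, ki: float, kd: float, out_limit: float = 1.0):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_limit = out_limit
        self.integral = 0.0
        self.prev_error = None

    def update(self, target: float, measured: float, dt: float) -> float:
        # Wrapping the error makes the controller turn the short way round.
        error = wrap_deg(target - measured)
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = wrap_deg(error - self.prev_error) / dt
        self.prev_error = error
        output = self.kp * error + self.ki * self.integral + self.kd * derivative
        return max(-self.out_limit, min(self.out_limit, output))
```

A value of `alpha` close to 1 keeps the estimate smooth between marker sightings while still letting the marker cancel gyro drift over many updates. The clamped output could then be mapped to a differential motor speed.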

The Unity simulation proved to be one of the most useful tools for debugging what would otherwise have been unintelligible numbers. Angles and distances in a scaled model of the Church allowed any odd readings to be monitored and corrected manually. TouchOSC was also very useful when dealing with the motors: it helped me understand speeds and LED behaviour, provided a manual override and let me reset the ESP32 remotely.
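For readers unfamiliar with OSC, the kind of message a tool like TouchOSC sends is a small binary packet, usually carried over UDP. The sketch below builds one by hand with the Python standard library, following the OSC 1.0 layout (padded address string, padded type-tag string, big-endian arguments). The address `/motor/1`, and any host or port passed to `send_osc`, are hypothetical and not taken from the project.

```python
import socket
import struct


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * ((-len(data)) % 4)


def osc_message(address: str, *args) -> bytes:
    """Build an OSC 1.0 message with float, int and string arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)   # 32-bit big-endian float
        elif isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)   # 32-bit big-endian integer
        elif isinstance(arg, str):
            tags += "s"
            payload += osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return osc_string(address) + osc_string(tags) + payload


def send_osc(host: str, port: int, address: str, *args) -> None:
    """Send one OSC message over UDP (host and port are supplied by the caller)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, *args), (host, port))
```

For example, `osc_message("/motor/1", 0.5)` yields a 20-byte packet: 12 bytes of padded address, 4 bytes for the `,f` type tag and 4 bytes for the float.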

Finally, Max MSP was the perfect "middle-man" between openFrameworks and the ESP32, allowing me to experiment with different systems that would dictate the behaviour of the drone. The two main modes I used were: 1) setting timers for the drone's behaviour and 2) using the information from the ArUco marker to create thresholds regarding speed and motor direction. The first worked well all the time; the second worked well only when there were few people in the space, because people would occlude the marker.
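The threshold mode can be pictured as a small state machine driven by an altitude estimate and the face detector. The following Python sketch is an illustration only (the actual logic lived in a Max patch): the state names, the two altitude thresholds, the thrust values, the colour shown while falling and the rule for returning to idle are all assumptions.

```python
from enum import Enum


class State(Enum):
    IDLE = "idle"      # blue flame, hovering
    ASCEND = "ascend"  # red flame, propellers push upwards
    FALL = "fall"      # propellers off, the chandelier drops
    BRAKE = "brake"    # green flame, propellers break the fall


# Hypothetical thresholds in metres; the real values are not given in the text.
CEILING_CUTOFF_M = 6.0
HEAD_HEIGHT_M = 2.2

# (LED colour, upward thrust from 0 to 1) for each state; assumed values.
OUTPUTS = {
    State.IDLE: ("blue", 0.0),
    State.ASCEND: ("red", 1.0),
    State.FALL: ("red", 0.0),
    State.BRAKE: ("green", 1.0),
}


def next_state(state: State, altitude_m: float, face_seen: bool) -> State:
    """Advance the behaviour by one step from the latest sensor readings."""
    if state is State.IDLE and face_seen:
        return State.ASCEND
    if state is State.ASCEND and altitude_m >= CEILING_CUTOFF_M:
        return State.FALL
    if state is State.FALL and altitude_m <= HEAD_HEIGHT_M:
        return State.BRAKE
    if state is State.BRAKE and not face_seen:
        # Assumption: the cycle resets once nobody is looking any more.
        return State.IDLE
    return state
```

A timer-based mode would have the same shape, with elapsed time replacing `altitude_m` in the transitions, which is one reason it keeps working when the marker is hidden.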

The positioning of the camera played a big role in all interactions. In order for it to see both the marker and people's faces, I placed it at a 45-degree angle from the base of the chandelier. This meant that at times the marker would be out of the frame, but this was fine, as the marker was only used to cut off the motors when the balloons were at high altitude.

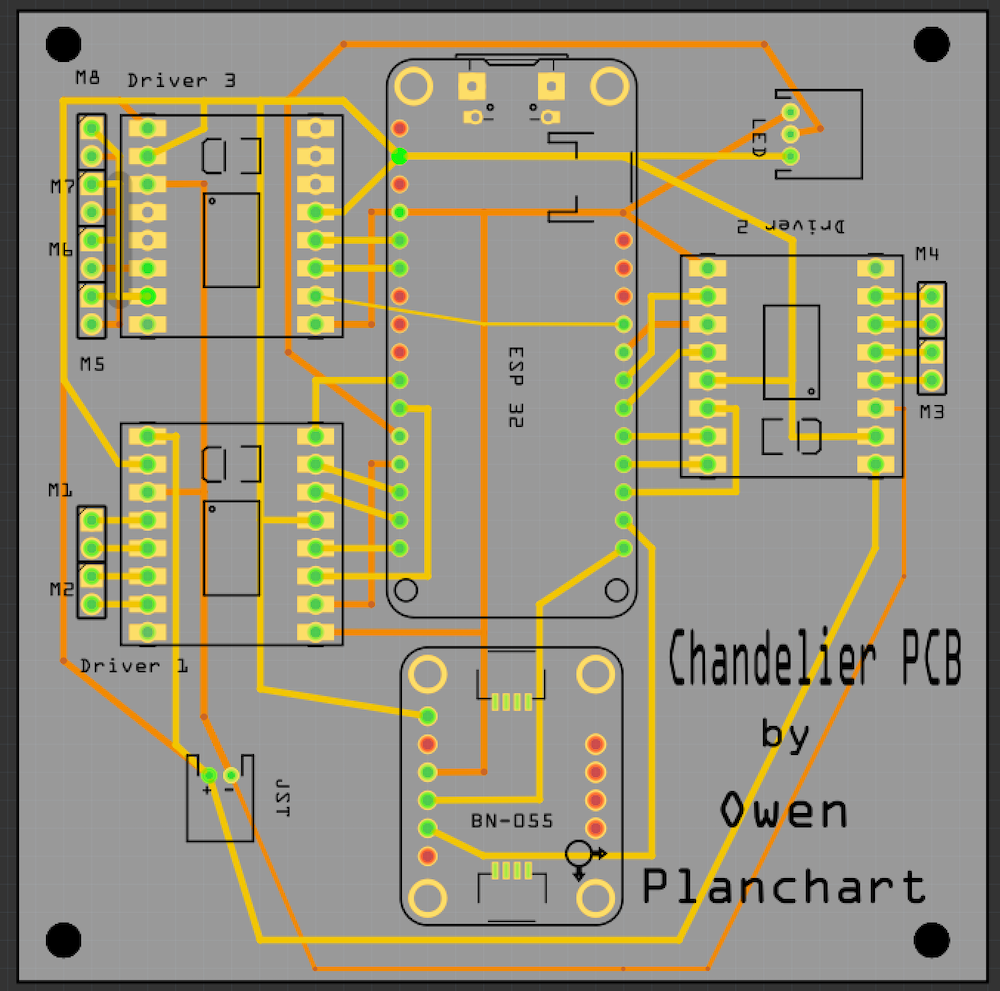

Designing my own shield for the ESP32 was a key step in this project. Not only did it reduce the number of potential cabling errors, it also reduced the payload, which is the biggest challenge when working with helium. I routed the connections using PCB software and then sent the design to be manufactured by JLCPCB. It housed an ESP32, an IMU, 3 motor-shields with a capacity for 8 motors, two 3.7V battery inputs and an LED JST connector. Having a custom-made PCB is the core reason why this project worked at all.

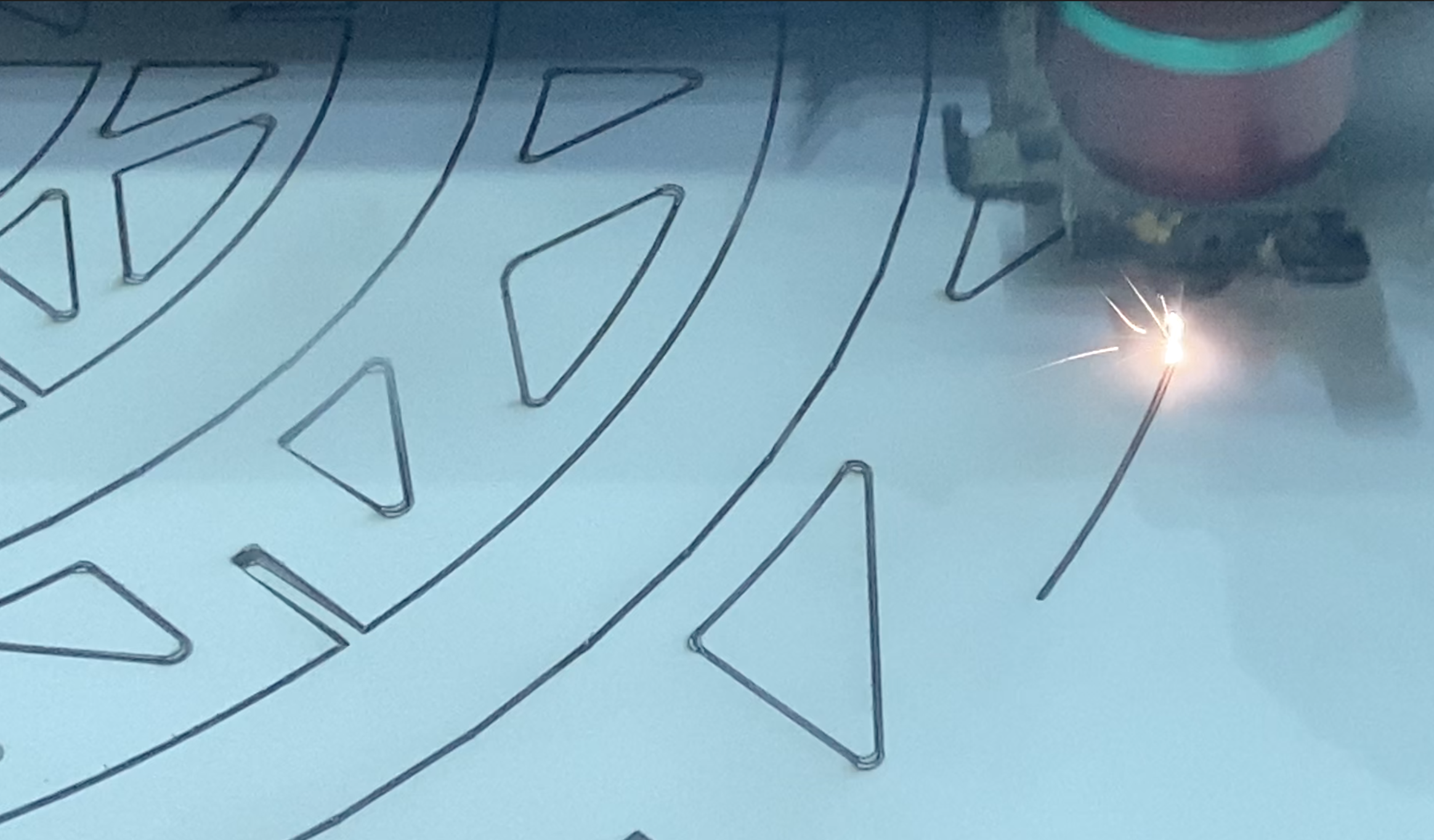

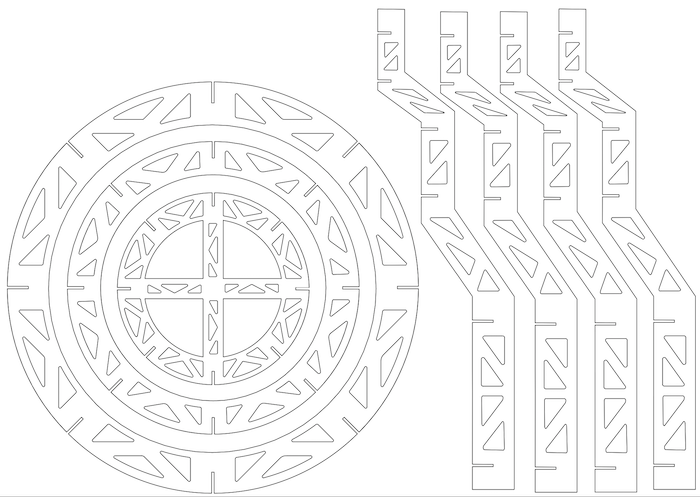

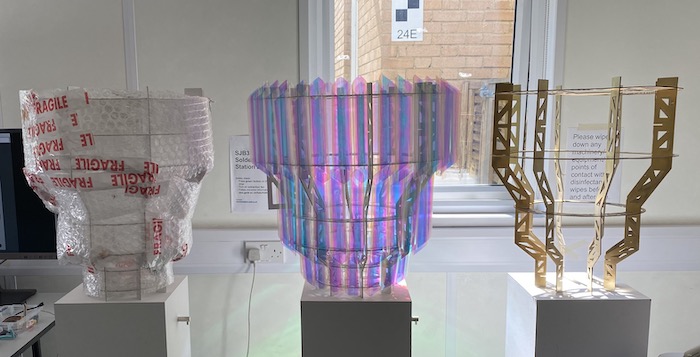

The structure of the chandelier was another key component: it had to be solid, but light. I used foam-board as a material and generated my own frame using the Slicer add-on inside Fusion 360. I also designed bevelled triangles that let me remove more material with a laser-cutter without losing strength.

Future Developments:

There are a great many improvements that could be made to this project in future iterations:

1. The face-tracking could use a new machine-learning model that recognises the face as seen from different angles around the z-axis of the camera. PoseNet seems to be slightly better at tracking eyes as the face turns, but it would have meant moving away from openFrameworks, where the rest of my tracking was taking place. I ended up writing the word “Maybe” in fairly small letters under the chandelier to get people to read it in the same orientation as the camera. The word came from the question most often asked during the install: “Is it going to fall on me?”

2. Scaling the video feed in an efficient way without cropping the image is definitely a necessary next step to improve this project. It will require looking further under the hood of the framework, but it will be an excellent tool. Overall, I was incredibly impressed with my final solution for an ultra-lightweight computer-vision system coming in under 45g (including battery). The RaspberryPiZero and the Camera Module combined with GStreamer are, by a significant margin, the best combination for streaming video wirelessly that I have found.

3. The use of ArUco markers for distance and rotation of robotic systems is definitely an area I would keep exploring. The accuracy is second to none as long as the marker is visible. However, when it came down to the exhibition, my inability to account for inertia in my PID system meant that I had no lateral control of the drone, which left it less freedom to move and eventually required a fishing-line tether. Controlling a helium balloon is less like controlling a drone and more like driving a car on ice: turning is never quite sharp enough. The lack of friction, combined with momentum, increases the margin of error ten-fold. The problem was amplified by the existence of a motion sensor right in the middle of the space that would trigger an alarm if crossed.

4. Although more CPU-intensive, Python seems like the obvious next step to try to solve some of these problems on-board. openFrameworks has a lot of clashes with the Raspberry Pi ecosystem. In future, I would like to learn the Gazebo robotics toolkit in order to simulate physical computing systems, as it lets you introduce not only components but forces as well.

Self evaluation

Although it became incredibly convoluted at times, I feel that my choices for all the different pieces of this project came with a lot of careful attention to computational detail. In the end, each tool was the best at doing its task, and whenever I tried to compress the work into fewer tools, I ran into problems. Of course openFrameworks would have been able to do all the Max functions, but it would not have allowed for as much experimentation; Unity could have done a lot of the computer-vision calculations, but the OSC messaging is less intuitive; the Raspberry Pi could have done the motor functions, but not as cleanly as the ESP32.

This piece was always intended as an impressionistic sculpture (it is very hard to make an actual Chandelier fly!). The challenge was to retain the threat element of a large body suspended above head-height without having any of the weight. Although there was little visual threat left by the time all the balloons and the lace holding it were put together, inadvertently the motors added a disquieting and eerie sonic layer. Some viewers also spoke of the movement increasing the fragility of the chandelier and that it was somewhat scary that it could potentially crash into the ceiling. Either way, the conceptual point about mixed emotions remained within the piece.

The confidence score was something that I gradually reduced as the show went on to have a little more generative behaviour. It dawned on me that the agency of the chandelier came exclusively from trying to find faces so why prevent it so much from seeing them for the sake of accuracy? It became more itself by seeing faces on the floor and on the walls.

In the end, my purpose was not to anthropomorphize the chandelier. As a student of Bennett’s, I did not treat this as an exercise in techno-animism; I was simply observing the characteristics of the object and leaning into the primitive forces of the materials.

The following is a video tracking most of the encounters with materials and process (software and hardware) in trying to achieve the volume, look and feel of this agent-artifact:

References

1. Jane Bennett. Vibrant Matter (2010). Duke University Press. NC 27708-0660.

2. Karen Simek. Affect Theory (2016). Critical and Cultural Theory, 25. 10.1093.

3. Albu, Cristina. Mirror Affect: Seeing Self, Observing Others in Contemporary Art. UMinnesotaP.

4. Donovan Conley & Benjamin Burroughs (2019) Black Mirror, mediated affect and the political, Culture, Theory and Critique.

5. Phil Emmerson. Thinking About Laughter Beyond Humour (2017).

Code:

https://github.com/arturoc/ofxAruco/blob/master/src/ofxAruco.h

https://github.com/kylemcdonald/ofxFaceTracker

https://github.com/arturoc/ofxGStreamer

https://github.com/davepl/DavesGarageLEDSeries/tree/master/LED%20Episode%2010

https://gitlab.doc.gold.ac.uk/special-topics-performance/oscimu/-/tree/master/OSCIMU