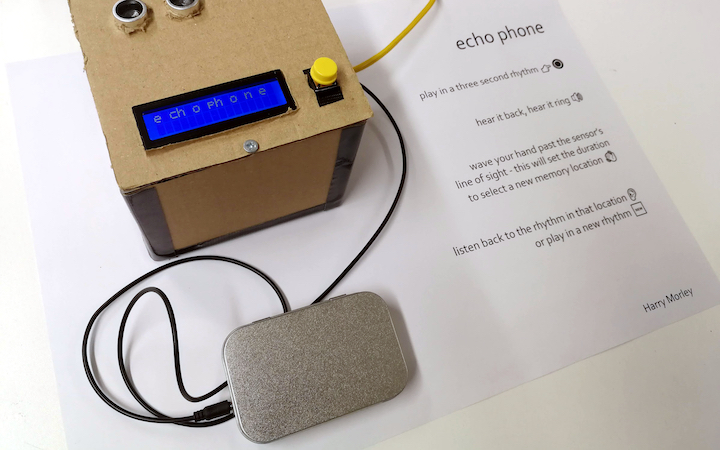

echo phone

This interactive artwork explores call and response, and the imitation of rhythm, through gesture-guided chance.

Produced by: Harry Morley

Description

The system senses button presses, interprets them as rhythms and stores them in memory. It remembers up to 16 rhythms of 3 seconds each, which the user can recall by waving a hand over the ultrasonic sensor. The wave sets off a carousel-like picker that rotates and jitters through the memory locations. The distance of the wave gesture sets how long the picker takes to choose a new rhythm: the closer the hand, the longer it takes, and vice versa.
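The memory and carousel picker described above can be sketched in C++, the language Arduino sketches are written in. This is a minimal sketch under stated assumptions, not the project's code: the helper names `mapRange()`, `pickDurationMs()` and `spinCarousel()`, the 5 to 100 cm distance range, the 500 to 4000 ms spin times, the 100 ms step and the 1-in-4 jitter chance are all hypothetical. It also shows why a negative-safe `mod()` matters here: a backward jitter from slot 0 must wrap to slot 15, not to -1.

```cpp
#include <cassert>
#include <random>

const int kNumSlots = 16;  // rhythm memory slots, as in the description

// Modulo that always lands in [0, b) for b > 0. Plain % keeps the sign of
// the dividend in C++, so -1 % 16 is -1 rather than 15.
int mod(int a, int b) {
  assert(b > 0);
  int r = a % b;
  return r < 0 ? r + b : r;
}

// Linear rescale in the style of Arduino's map(), with the input clamped
// to [inLo, inHi] first (like Arduino's constrain()).
long mapRange(long x, long inLo, long inHi, long outLo, long outHi) {
  assert(inHi > inLo);
  if (x < inLo) x = inLo;
  if (x > inHi) x = inHi;
  return (x - inLo) * (outHi - outLo) / (inHi - inLo) + outLo;
}

// Hypothetical ranges: a hand 5..100 cm away maps to 4000..500 ms of
// spinning, so a closer hand gives a longer spin.
long pickDurationMs(long distanceCm) {
  return mapRange(distanceCm, 5, 100, 4000, 500);
}

// Spin the carousel from startSlot for durationMs. Each tick moves one slot
// forward, with a hypothetical 1-in-4 chance of jittering one slot back
// instead. Returns the slot the picker stops on.
int spinCarousel(int startSlot, long durationMs, std::mt19937& rng) {
  const long kTickMs = 100;  // hypothetical time between steps
  std::uniform_int_distribution<int> roll(0, 3);
  int slot = mod(startSlot, kNumSlots);
  for (long t = 0; t < durationMs; t += kTickMs) {
    int step = (roll(rng) == 0) ? -1 : 1;
    slot = mod(slot + step, kNumSlots);
  }
  return slot;
}
```

In an Arduino sketch, `pickDurationMs()` would be fed the distance measured during the wave, and Arduino's `random()` could stand in for `std::mt19937`. The `mod()` shown here serves the same purpose as the one credited in the references, but may differ in detail from that answer.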

I was influenced by various forms of echo, which a dictionary defines as “the repetition of sound caused by reflection of sound waves”. In nature, the lyrebird can imitate the songs of other birds and other sounds in its habitat. In music, call and response (antiphony) is a ubiquitous device found in many musical traditions, particularly African, African-American and Western folk traditions. I am interested in participation and dialogue, particularly when such dialogues drive culture forward.

The concept of echoes is evoked in the following ways:

- Input: the ultrasonic sensor measures distance by timing how long its ping takes to echo back.

- Memory: the device remembers and recalls rhythms, guided by the ultrasonic sensor.

- Output: the piezo emits a noise burst and vibrates the spring, causing an analog resonance that rings on after the initial burst.
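The Input bullet relies on the standard ultrasonic ranging calculation: the sensor's echo pulse lasts as long as the ping's round trip, so the one-way distance is half the round-trip time multiplied by the speed of sound. The following is a minimal sketch, assuming the pulse width is already measured in microseconds (for example with Arduino's `pulseIn()`). The function names and the linear temperature approximation (331.3 + 0.606 × T m/s) are illustrative assumptions, not taken from the project.

```cpp
#include <cassert>

// Approximate speed of sound in air, in cm per microsecond, for a given
// air temperature (assumed linear approximation: 331.3 + 0.606 * T m/s).
double soundCmPerUs(double celsius) {
  // The linear formula is only a good fit for everyday air temperatures.
  assert(celsius > -40.0 && celsius < 60.0);
  return (331.3 + 0.606 * celsius) / 10000.0;  // m/s -> cm/us
}

// Convert an echo pulse width (the ping's round-trip time, in microseconds)
// into a one-way distance in centimetres. A width of 0 (no echo, as
// pulseIn() reports on timeout) is returned as -1.
double echoToCm(unsigned long echoUs, double celsius = 20.0) {
  if (echoUs == 0) return -1.0;
  // Halve the round trip: the sound travels to the hand and back.
  return echoUs * soundCmPerUs(celsius) / 2.0;
}
```

At 20 °C, a 583 µs echo works out to roughly 10 cm, which matches the commonly quoted rule of thumb of about 58 µs of round trip per centimetre.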

The habitat for this project can be viewed in two ways, one real and one virtual. There is the immediate physical environment around the device, where user and device are in intimate contact (discrete button presses, gestures and sound). The device also inhabits and traverses a 2D landscape of durations: the stored rhythms, held as arrays of time intervals. The rhythms are translated into visual feedback for interest and accessibility: the display shows each note as a small character at a random position on the screen.

I would develop this project further by making rhythm processing generative, combining or mutating stored rhythms to create new ones, or changing the way the device sounds. Noise bursts get quite fatiguing after a while!
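The rhythms in this project are stored as arrays of time intervals. The sketch below shows one minimal way to build that representation. It assumes that presses arrive as millisecond timestamps (as `millis()` would give) and that the 3-second window opens at the first press. `Rhythm`, `recordRhythm()`, `onsetsFromRhythm()` and the 31-interval cap are hypothetical names and limits, not the project's.

```cpp
#include <cassert>
#include <cstddef>

constexpr unsigned long kWindowMs = 3000;  // each rhythm spans 3 seconds
constexpr std::size_t kMaxIntervals = 31;  // hypothetical cap (32 notes)

// One stored rhythm: the gaps, in ms, between successive button presses.
struct Rhythm {
  unsigned long intervals[kMaxIntervals] = {};
  std::size_t count = 0;  // number of intervals in use
};

// Turn ascending press timestamps (e.g. millis() values) into intervals,
// keeping only presses inside the window that opens at the first press.
Rhythm recordRhythm(const unsigned long* pressMs, std::size_t n) {
  assert(n > 0);  // a rhythm starts with its first press
  Rhythm r;
  for (std::size_t i = 1; i < n && r.count < kMaxIntervals; ++i) {
    assert(pressMs[i] >= pressMs[i - 1]);  // timestamps must be ascending
    if (pressMs[i] - pressMs[0] > kWindowMs) break;  // past the window
    r.intervals[r.count++] = pressMs[i] - pressMs[i - 1];
  }
  return r;
}

// Rebuild note onsets (ms after the first note) for playback or display.
// `onsets` must have room for kMaxIntervals + 1 values. Returns the note count.
std::size_t onsetsFromRhythm(const Rhythm& r, unsigned long* onsets) {
  unsigned long t = 0;
  onsets[0] = 0;  // the first note sounds immediately
  for (std::size_t i = 0; i < r.count; ++i) {
    t += r.intervals[i];
    onsets[i + 1] = t;
  }
  return r.count + 1;
}
```

Under these assumptions, a hypothetical `Rhythm memory[16]` array would be the 2D landscape of durations: 16 rows, each a list of intervals. Storing intervals rather than absolute times makes it easy to replay a rhythm from any starting moment.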

Source Code

View and download the code on GitHub

References

- echo_check(): modified from the "NewPingEventTimer" example by Tim Eckel.

- debounceButton(): uses code from the Arduino Debounce example (Examples > Digital > Debounce).

- mod() (a modulo that works with negative numbers): taken from https://stackoverflow.com/a/4467559

- Definition of "echo": Merriam-Webster.

- Lyrebird video: BBC Studios.
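The Debounce example cited above rests on one idea: accept a new button state only after the raw reading has stayed unchanged for a short delay, so contact bounce is ignored. The following is a minimal sketch of that technique, not the project's `debounceButton()`. The `Debouncer` struct is hypothetical, and it takes the current time as a parameter rather than calling `millis()` so it can be tested off the board. The 50 ms delay used in the usage note is an assumed value.

```cpp
#include <cassert>

// Hypothetical time-based debouncer in the spirit of the Arduino Debounce
// example: a reading is accepted only once it has been stable for delayMs.
struct Debouncer {
  unsigned long delayMs;
  bool stableState = false;        // last accepted (debounced) state
  bool lastReading = false;        // raw reading from the previous call
  unsigned long lastChangeMs = 0;  // when the raw reading last changed

  explicit Debouncer(unsigned long ms) : delayMs(ms) {
    assert(ms > 0);  // a zero delay would barely filter bounces
  }

  // Feed a raw reading (true = pressed) and the current time in ms.
  // Returns true exactly once per debounced press (released -> pressed).
  bool update(bool reading, unsigned long nowMs) {
    if (reading != lastReading) lastChangeMs = nowMs;  // restart the timer
    lastReading = reading;
    if (nowMs - lastChangeMs > delayMs && reading != stableState) {
      stableState = reading;
      return stableState;  // report only the press edge, not the release
    }
    return false;
  }
};
```

Assuming the button reads HIGH when pressed, calling `update(digitalRead(buttonPin) == HIGH, millis())` on a `Debouncer d(50)` from `loop()` would return true once per press. That single press event is what a rhythm recorder needs.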