Project " + "

This project depicts the relationship between humans and machines. As the conductor of machines, a person shares a dynamic relationship with them. This shared experience is open to different possibilities, as expressed through this project, in which visual interaction between human and machine takes place.

Produced by: Nadia Rahat

Idea:

“When you change the way you look at things, the things you look at change”

Commonly attributed to Max Planck, Nobel Prize-winning physicist

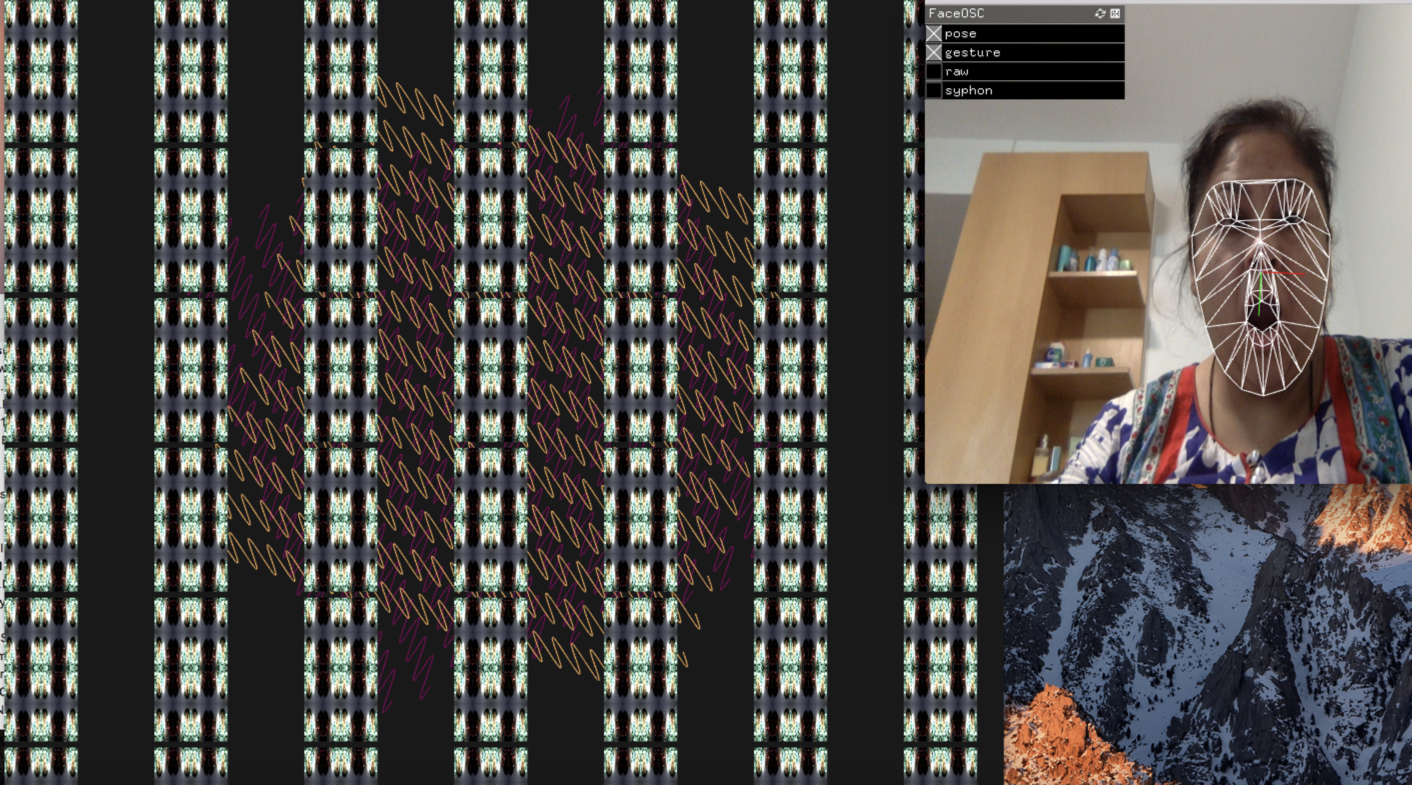

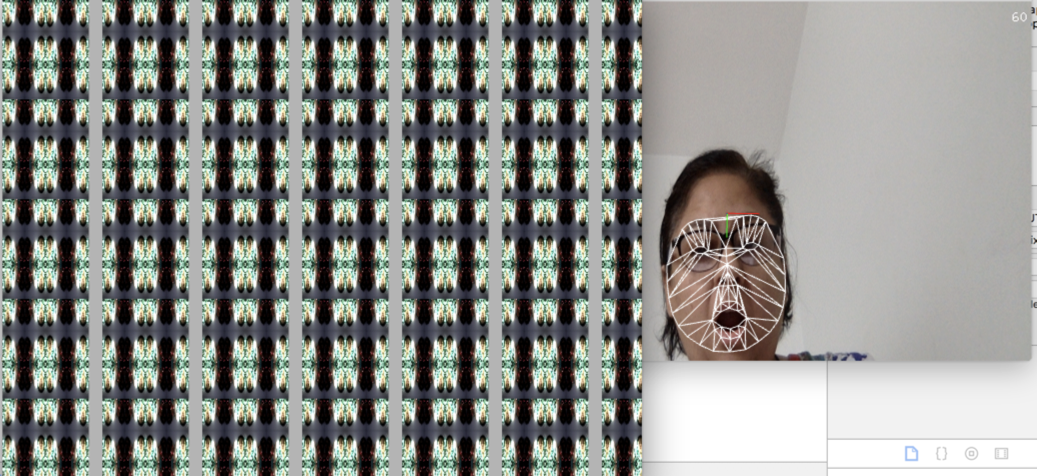

Continuing my art practice of exploring patterns of human behaviour, this project explores and depicts the human relationship with machines. As we live in a digital age, it has become imperative to investigate and engage with this relationship. It is dynamic and changing at a fast pace, constantly posing challenges while also rewarding us with new possibilities for sustaining it. What I try to show in this project is that humans, as the conductors of machines, continuously influence them, and that machines depend on human input for their realization and evolution. Seen this way, the existence of machines becomes less threatening to us.

For the visual representation, the project went through several development stages. I needed to show a dynamic interaction between human and machine, so I chose FaceOSC, as it provided the tool to create face-based interaction. Using FaceOSC, I developed code in which images and a grid of lines respond to the expressions of my face, giving the feel of a live dialogue between viewer and computer. The development stages are explained below.

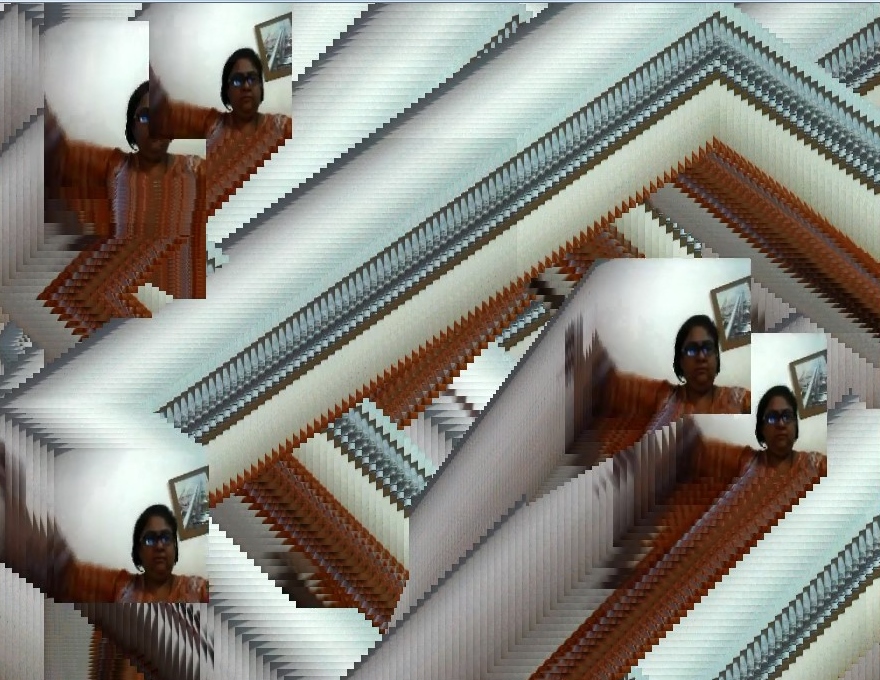

Stage 1:

The images used in the code are inspired by the effect of a kaleidoscope. I also wanted to use images generated by code, so I developed a number of sketches that turn human input from a webcam into different imagery, to be used as images in my final code. These sketches gave me a diverse range of imagery. To get the right image, I experimented with adding multiple views of the human input, a trail of five frames leaving reflections, a frame buffer, and finally inverted frames, which produced the image I wanted to use. I developed one unit and experimented with it extensively before forming a grid of mirror reflections. The experiments can be viewed at the following links:

Stage 1, experiment 1: https://vimeo.com/211749575

Stage 1, experiment 2: https://vimeo.com/211751665

Stage 1, experiment 3: https://vimeo.com/212165870

Stage 1, experiment 4: https://vimeo.com/211757145

Stage 1, experiment 5: https://youtu.be/rC_EGo1gof0
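The Stage 1 processing steps (mirrored views, a five-frame trail, inverted frames, and a grid of mirror reflections) can be sketched in plain C++ on grayscale pixel arrays. This is a minimal sketch under stated assumptions, not the code used for the project: the `Frame` type and all function names are hypothetical, the trail is made by averaging the last five frames (one simple way to get lingering reflections; an openFrameworks sketch might instead draw into an `ofFbo`), and the 2x2 mirror unit is one possible reading of "one unit".

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <vector>

// A grayscale frame stored row by row (stands in for a webcam image).
struct Frame {
    int w, h;
    std::vector<std::uint8_t> px;
    Frame(int width, int height, std::uint8_t fill = 0)
        : w(width), h(height), px(static_cast<std::size_t>(width) * height, fill) {}
    std::uint8_t& at(int x, int y) { return px[static_cast<std::size_t>(y) * w + x]; }
    std::uint8_t at(int x, int y) const { return px[static_cast<std::size_t>(y) * w + x]; }
};

// Horizontal mirror, as used for a "mirror view" of a live webcam feed.
Frame mirrorX(const Frame& f) {
    Frame out(f.w, f.h);
    for (int y = 0; y < f.h; ++y)
        for (int x = 0; x < f.w; ++x)
            out.at(x, y) = f.at(f.w - 1 - x, y);
    return out;
}

// Inverted frame: every brightness value flipped (0 <-> 255).
Frame invert(const Frame& f) {
    Frame out(f.w, f.h);
    for (std::size_t i = 0; i < f.px.size(); ++i)
        out.px[i] = static_cast<std::uint8_t>(255 - f.px[i]);
    return out;
}

// Hypothetical trail: keeps the last N frames; averaging them leaves fading reflections.
class FrameTrail {
public:
    explicit FrameTrail(std::size_t n) : n_(n) { assert(n > 0); }
    void push(const Frame& f) {
        frames_.push_back(f);
        if (frames_.size() > n_) frames_.pop_front();  // drop the oldest frame
    }
    Frame blend() const {
        assert(!frames_.empty());
        Frame out(frames_.front().w, frames_.front().h);
        for (std::size_t i = 0; i < out.px.size(); ++i) {
            unsigned sum = 0;
            for (const Frame& f : frames_) sum += f.px[i];
            out.px[i] = static_cast<std::uint8_t>(sum / frames_.size());
        }
        return out;
    }
private:
    std::size_t n_;
    std::deque<Frame> frames_;
};

// One kaleidoscope unit: the frame plus its three mirror images in a 2x2 block.
Frame mirrorUnit(const Frame& f) {
    Frame out(f.w * 2, f.h * 2);
    const int W = 2 * f.w - 1, H = 2 * f.h - 1;
    for (int y = 0; y < f.h; ++y)
        for (int x = 0; x < f.w; ++x) {
            const std::uint8_t v = f.at(x, y);
            out.at(x, y) = v;          // top-left: original
            out.at(W - x, y) = v;      // top-right: mirrored left-right
            out.at(x, H - y) = v;      // bottom-left: mirrored top-bottom
            out.at(W - x, H - y) = v;  // bottom-right: mirrored both ways
        }
    return out;
}

// Repeats the unit cols x rows times; because the unit is mirror-symmetric,
// neighbouring tiles meet seamlessly, forming a grid of reflections.
Frame tile(const Frame& unit, int cols, int rows) {
    Frame out(unit.w * cols, unit.h * rows);
    for (int y = 0; y < out.h; ++y)
        for (int x = 0; x < out.w; ++x)
            out.at(x, y) = unit.at(x % unit.w, y % unit.h);
    return out;
}
```

Per frame, a pipeline in this spirit might run `trail.push(mirrorX(camFrame));` and then `tile(mirrorUnit(invert(trail.blend())), 3, 3)`, where `FrameTrail trail(5);` holds the five-frame trail and the 3x3 grid size is an arbitrary choice.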

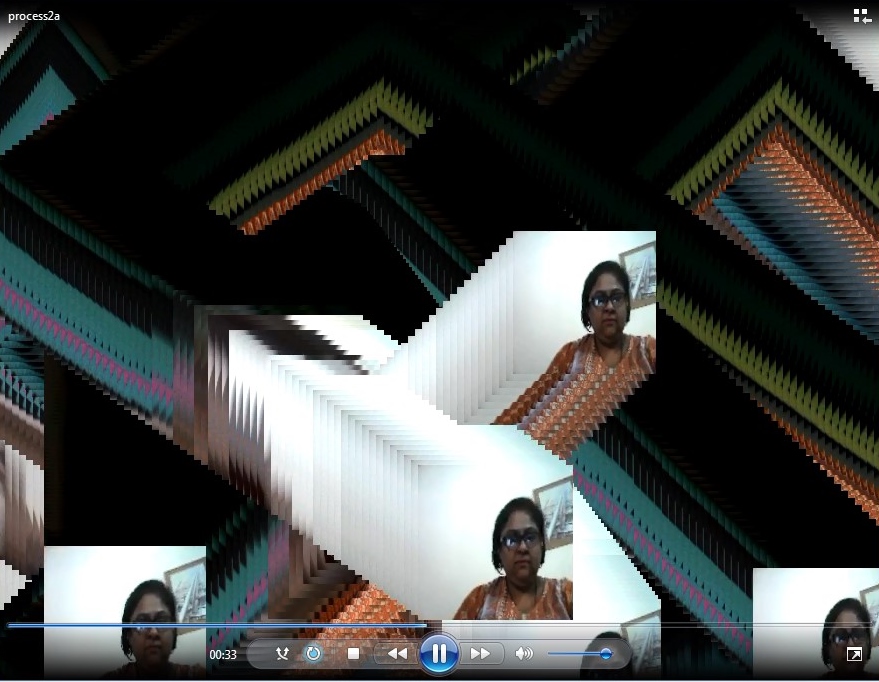

Stage 2:

Once I had the images from the code, I focused on developing code that shows an interactive dialogue between viewer and computer. I chose FaceOSC, which tracks a face and sends pose and gesture data over OSC, to interact with the computer. My goal was to develop code that responds to facial expressions by changing the images' dimensions and direction. I used two images generated by my experimental code and developed a sketch that responds to the FaceOSC data.
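FaceOSC sends its data as OSC messages, each made of an address (for example `/gesture/mouth/height` or `/pose/orientation`), a type-tag string, and big-endian arguments. The sketch below decodes one such message by hand and maps it onto an image's size and rotation. It assumes plain C++17 with no OSC library (in openFrameworks one would normally use the ofxOsc addon instead); the address names follow FaceOSC's output as far as I know and should be checked against the FaceOSC README, and the input ranges in the hypothetical `applyFaceData` are guesses meant to be tuned by logging real values.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <optional>
#include <string>
#include <vector>

// One decoded OSC message: its address and its numeric arguments.
struct OscMessage {
    std::string address;
    std::vector<float> args;
};

// Reads a null-terminated OSC string padded to a multiple of 4 bytes (pos must be 4-aligned).
bool readOscString(const std::vector<std::uint8_t>& buf, std::size_t& pos, std::string& out) {
    std::size_t end = pos;
    while (end < buf.size() && buf[end] != 0) ++end;
    if (end >= buf.size()) return false;                   // no terminator found
    out.assign(buf.begin() + pos, buf.begin() + end);
    pos = (end + 4) & ~static_cast<std::size_t>(3);        // skip terminator and padding
    return pos <= buf.size();
}

// Big-endian 32-bit word starting at buf[pos].
std::uint32_t readBE32(const std::vector<std::uint8_t>& buf, std::size_t pos) {
    return (std::uint32_t{buf[pos]} << 24) | (std::uint32_t{buf[pos + 1]} << 16) |
           (std::uint32_t{buf[pos + 2]} << 8) | std::uint32_t{buf[pos + 3]};
}

// Decodes a single OSC message whose arguments are float32 ('f') or int32 ('i').
std::optional<OscMessage> decodeOsc(const std::vector<std::uint8_t>& buf) {
    OscMessage msg;
    std::string tags;
    std::size_t pos = 0;
    if (!readOscString(buf, pos, msg.address) || !readOscString(buf, pos, tags)) return std::nullopt;
    if (tags.empty() || tags[0] != ',') return std::nullopt;
    for (std::size_t i = 1; i < tags.size(); ++i) {
        if (pos + 4 > buf.size()) return std::nullopt;
        const std::uint32_t bits = readBE32(buf, pos);
        pos += 4;
        if (tags[i] == 'f') {
            float v;
            std::memcpy(&v, &bits, sizeof v);              // IEEE 754 float32
            msg.args.push_back(v);
        } else if (tags[i] == 'i') {
            std::int32_t v;
            std::memcpy(&v, &bits, sizeof v);              // two's-complement int32
            msg.args.push_back(static_cast<float>(v));
        } else {
            return std::nullopt;                           // other OSC types not handled here
        }
    }
    return msg;
}

// Linear re-mapping with clamping (the same idea as openFrameworks' ofMap with clamp = true).
float remap(float v, float inMin, float inMax, float outMin, float outMax) {
    const float t = std::clamp((v - inMin) / (inMax - inMin), 0.0f, 1.0f);
    return outMin + t * (outMax - outMin);
}

// Size and rotation of one on-screen image.
struct ImageState { float width = 200.0f, height = 200.0f, angleDeg = 0.0f; };

// Hypothetical mapping; the input ranges are guesses to be tuned against real FaceOSC values.
void applyFaceData(const OscMessage& m, ImageState& img) {
    if (m.args.empty()) return;
    if (m.address == "/gesture/mouth/height")
        img.height = remap(m.args[0], 1.0f, 5.0f, 100.0f, 400.0f);    // open mouth: taller image
    else if (m.address == "/gesture/mouth/width")
        img.width = remap(m.args[0], 10.0f, 18.0f, 100.0f, 400.0f);   // wide mouth: wider image
    else if (m.address == "/pose/orientation" && m.args.size() >= 3)
        img.angleDeg = remap(m.args[2], -0.5f, 0.5f, -45.0f, 45.0f);  // head tilt: image direction
}
```

Receiving the UDP packets themselves is left out, and `decodeOsc` handles single messages only, not OSC bundles.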

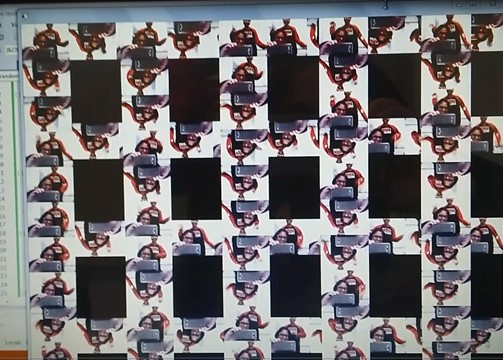

Stage 3:

I developed another sketch using a grid of lines that change shape with facial expression. This decision was more about supporting my idea, as I realised that the communication between human and machine is not only visible but also invisible. When we code, we are often thinking and talking to the machine in our minds, so this grid of lines depicts mind waves, which also change as the interaction happens.
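One way to make a grid of lines "breathe" with the face is to bend each line into a sine wave whose amplitude, frequency, and phase come from the face data. The sketch below builds the horizontal lines of such a grid as point lists ready to be drawn as polylines. It is a hypothetical illustration: `WaveParams`, `waveGrid`, the sine shape, and the per-row phase offset are all assumptions rather than the project's code; vertical lines could be built the same way with x and y swapped.

```cpp
#include <cassert>
#include <cmath>
#include <utility>
#include <vector>

struct Point { float x, y; };
using Polyline = std::vector<Point>;

// Hypothetical face-derived parameters that reshape the grid each frame.
struct WaveParams {
    float amplitude;  // e.g. driven by eyebrow height
    float frequency;  // e.g. driven by mouth width (cycles across the width)
    float phase;      // advanced every frame so the waves keep moving
};

// Builds `rows` horizontal lines, each sampled at `samples` points,
// bent into sine waves whose shape follows the face parameters.
std::vector<Polyline> waveGrid(int rows, int samples, float width, float height,
                               const WaveParams& p) {
    assert(rows > 0 && samples > 1);
    const float twoPi = 6.2831853f;
    std::vector<Polyline> grid;
    grid.reserve(rows);
    for (int r = 0; r < rows; ++r) {
        const float baseY = height * (r + 0.5f) / rows;  // evenly spaced rest positions
        const float rowPhase = p.phase + 0.3f * r;       // offset rows so they don't move in lockstep
        Polyline line;
        line.reserve(samples);
        for (int i = 0; i < samples; ++i) {
            const float u = static_cast<float>(i) / (samples - 1);  // 0..1 across the width
            const float y = baseY + p.amplitude * std::sin(twoPi * p.frequency * u + rowPhase);
            line.push_back({u * width, y});
        }
        grid.push_back(std::move(line));
    }
    return grid;
}
```

Each frame, the amplitude might be set from an eyebrow value and the phase advanced by a small step, so the lines keep moving even when the face is still and swell when the expression changes.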

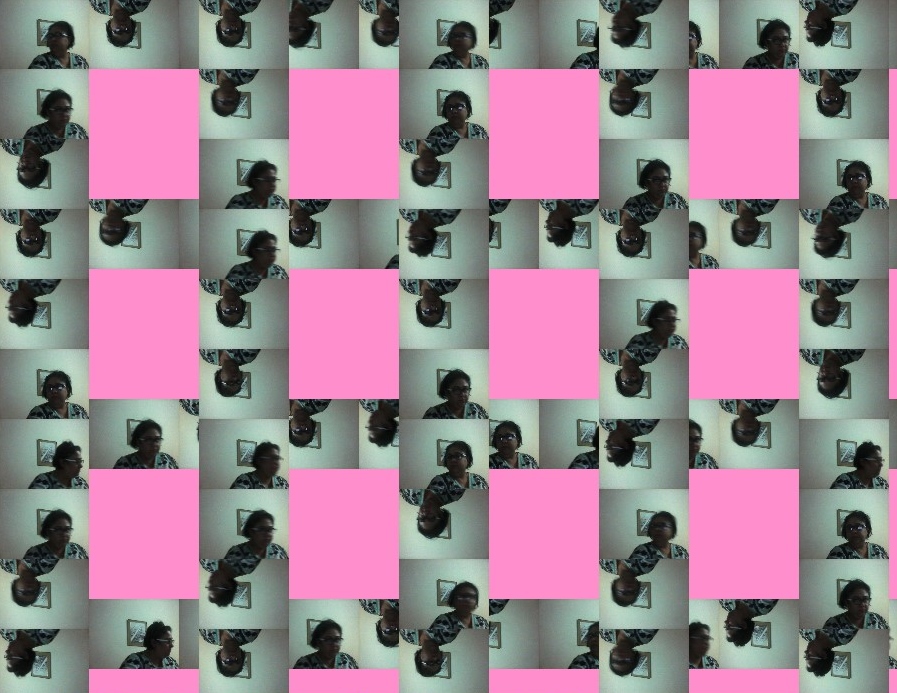

Stage 4:

I combined both sketches, with the grid of lines running in the background and the images in front responding to the viewer's facial expressions. Just as our thinking and communication run simultaneously, this code depicts thinking and communication happening and changing at the same time through human input and machine output. In the final video, the viewer interacts with the images and lines through FaceOSC, which sends face pose data to the computer; in response, the grid of lines and the images change.
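The Stage 4 layering (lines behind, images in front) amounts to "over" compositing: each image pixel covers the line grid in proportion to its alpha. The sketch below shows that arithmetic on grayscale pixel arrays in plain C++. It is an illustration only; `Pixel` and `compositeOver` are hypothetical names, and in openFrameworks the same result usually comes from draw order (draw the lines first, then the images) with alpha blending enabled through `ofEnableAlphaBlending()`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// One pixel of the image layer: brightness plus coverage (alpha).
struct Pixel {
    std::uint8_t gray;
    std::uint8_t alpha;  // 0 = fully transparent, 255 = fully opaque
};

// "Over" compositing of the image layer onto the already-drawn line layer.
// The line layer is treated as an opaque canvas, so the result is opaque too.
std::vector<std::uint8_t> compositeOver(const std::vector<Pixel>& images,
                                        const std::vector<std::uint8_t>& lines) {
    assert(images.size() == lines.size());
    std::vector<std::uint8_t> out(lines.size());
    for (std::size_t i = 0; i < lines.size(); ++i) {
        const unsigned a = images[i].alpha;
        // Weighted mix: alpha parts image, (255 - alpha) parts line grid.
        const unsigned blended = (images[i].gray * a + lines[i] * (255u - a)) / 255u;
        out[i] = static_cast<std::uint8_t>(blended);
    }
    return out;
}
```

Fully opaque image pixels hide the lines, fully transparent ones let the grid show through, and partially transparent edges mix the two.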

References

EzineArticles.com/1151794

https://github.com/kylemcdonald/ofxFaceTracker/releases

Creators Project

https://forum.openframeworks.cc/t/live-video-vertical-flip-mirroring

https://en.wikipedia.org/wiki/Kaleidoscope

YouTube Video Editor