Digital Tapestry

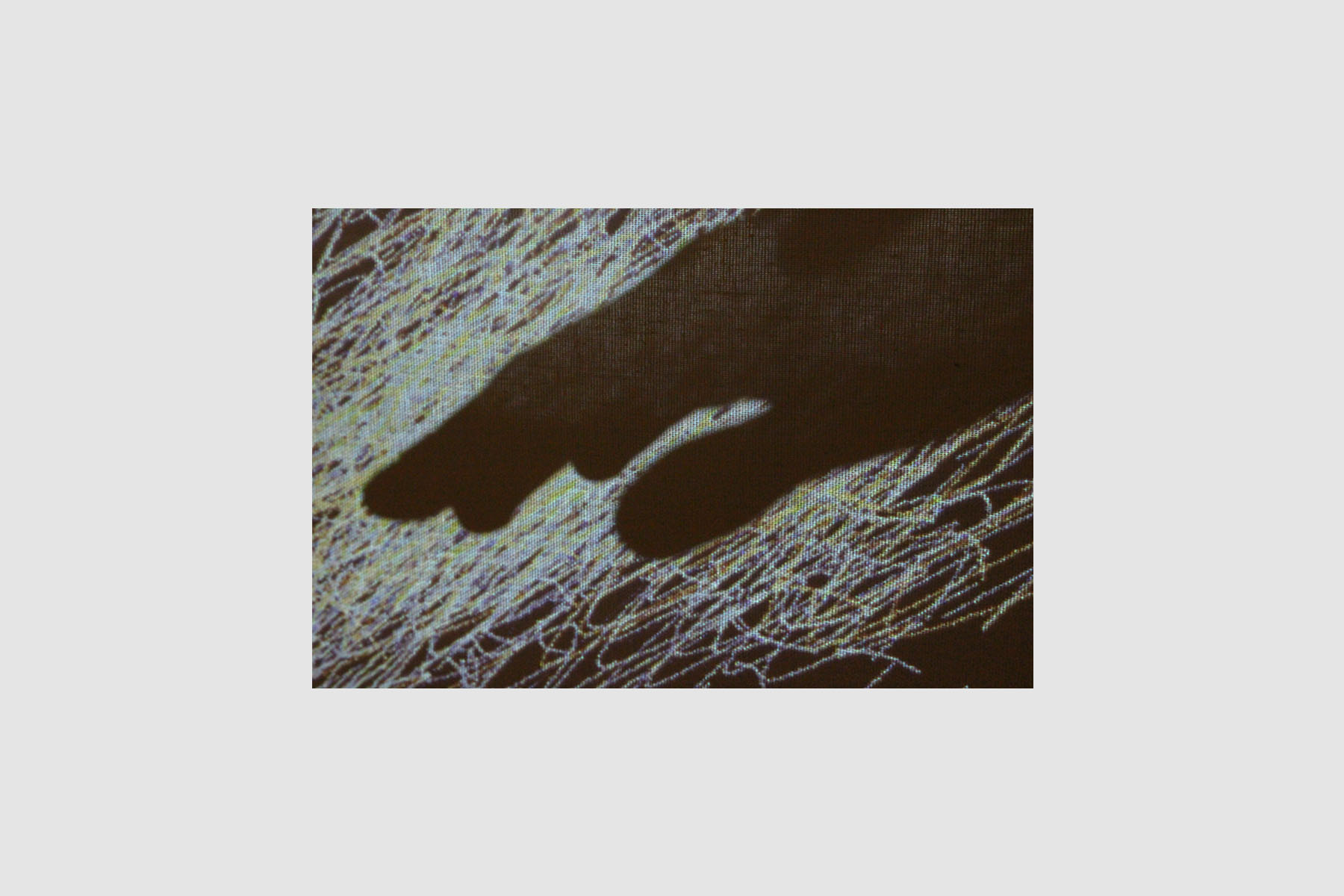

An interactive digital patchwork tapestry projected onto a textile screen, generated by a depth camera and computer vision.

Produced By: Isabel McLellan

DESCRIPTION:

Each patch has its own visual and textural identity within the patchwork, and each is generated using a different computer vision technique and a different way of using the input from a Kinect depth camera. The aim is to create digital work that has a material grounding, where interacting with it feels like a tactile activity even though the results are virtual. The installation is set up as a projection onto a fabric mesh, so the static fabric comes alive with the projected digital textures and patterns. This project has been heavily influenced by my developing interest in the possible crossover between computation and traditional craft, in this case textiles.

INSTALLATION SET UP

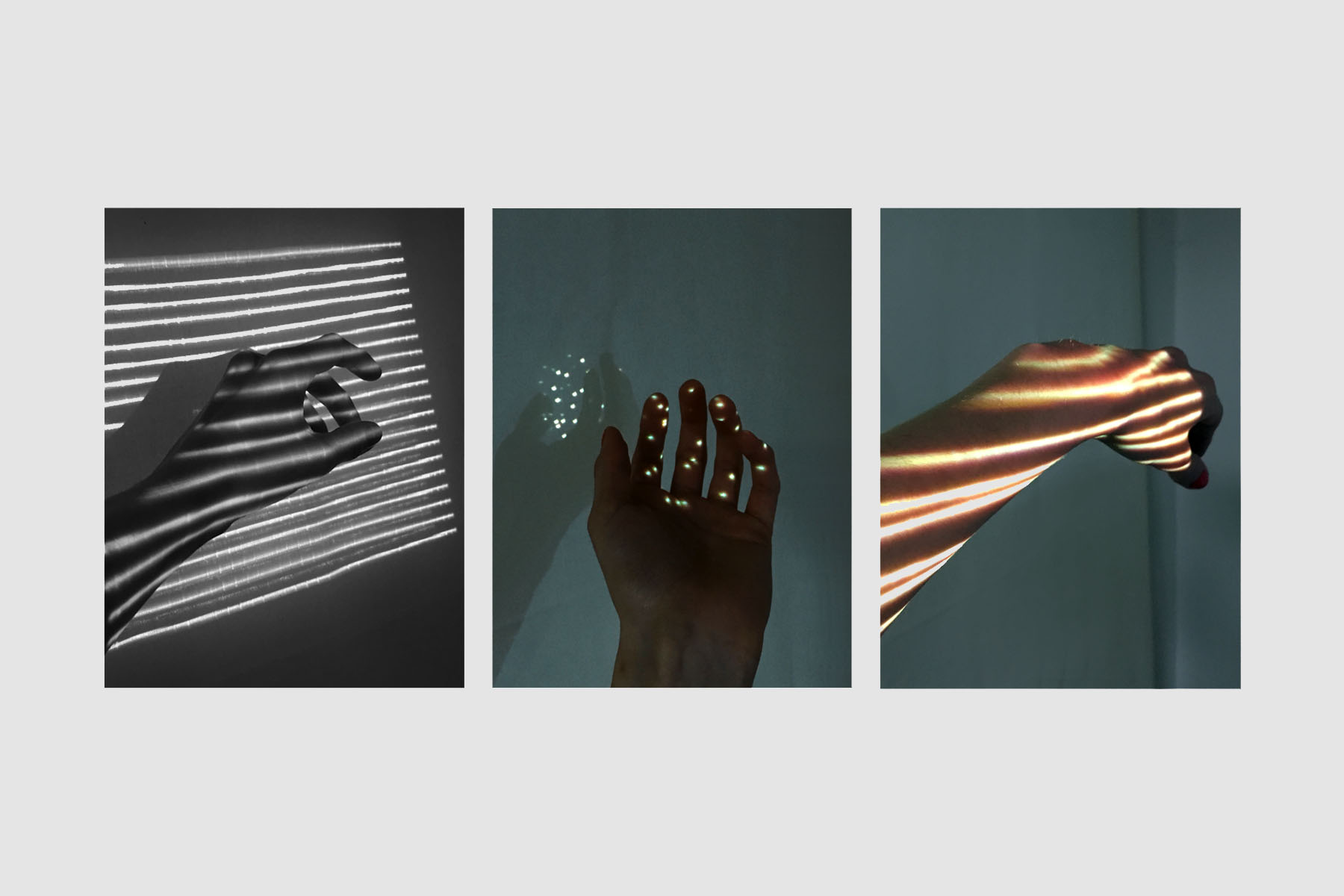

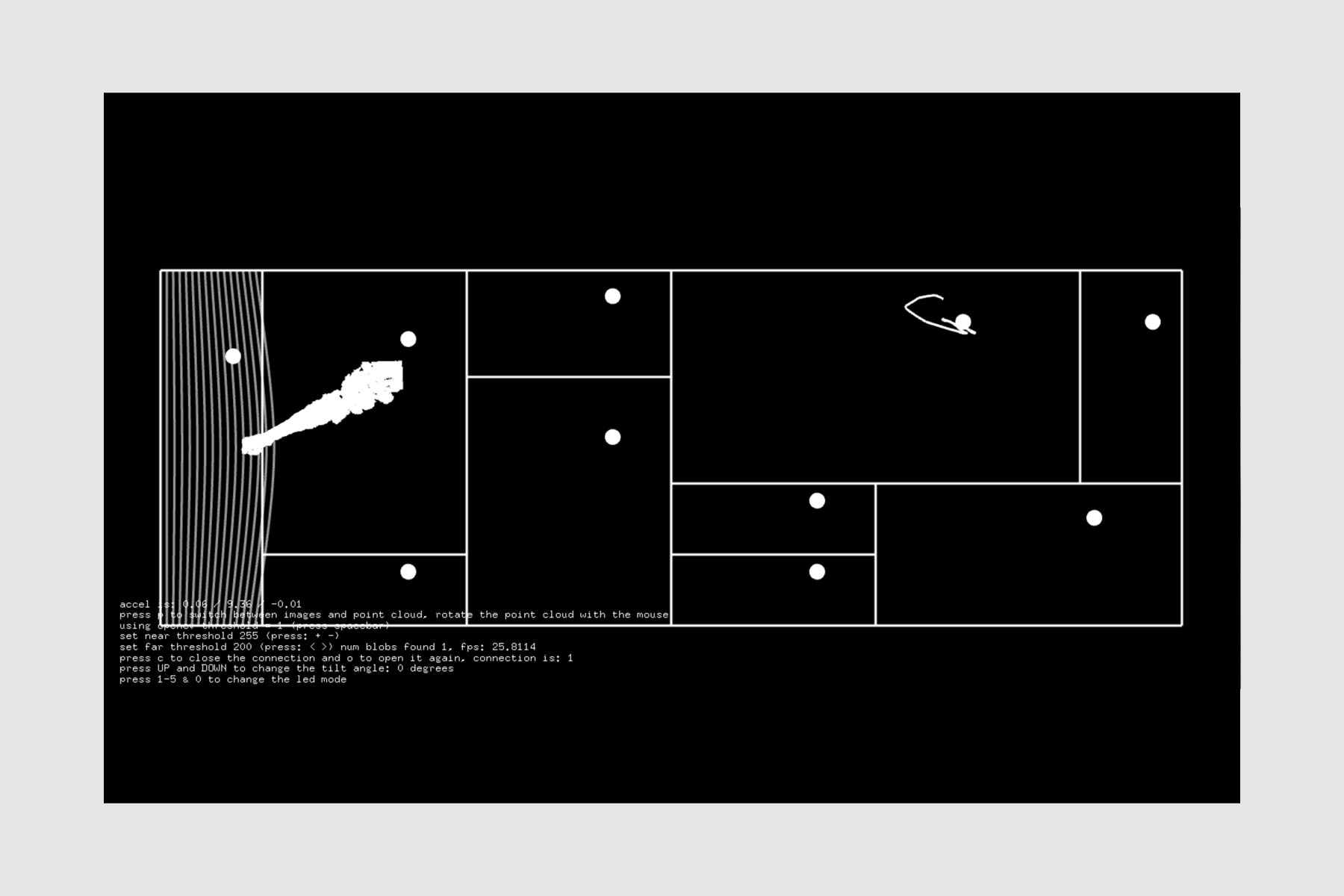

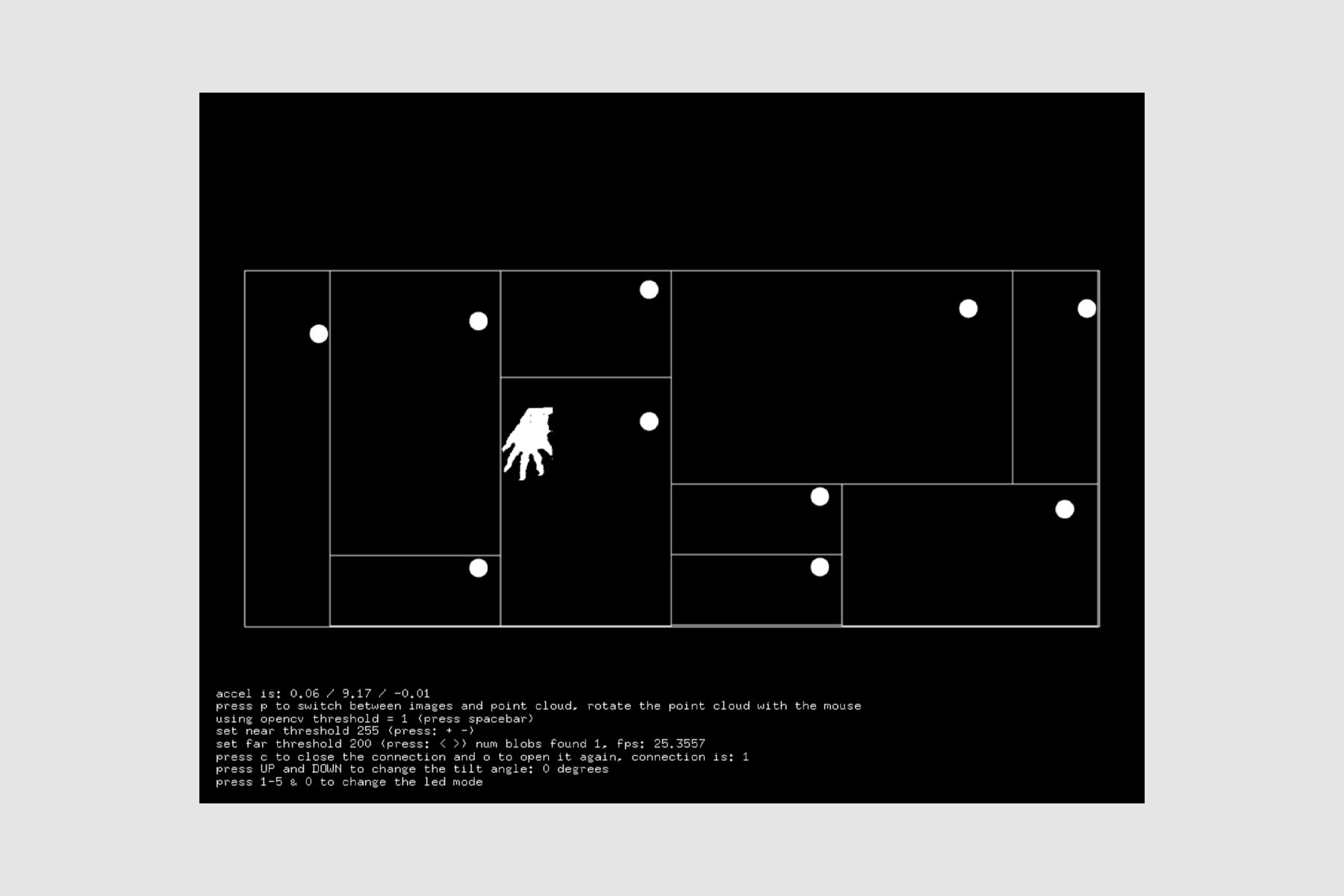

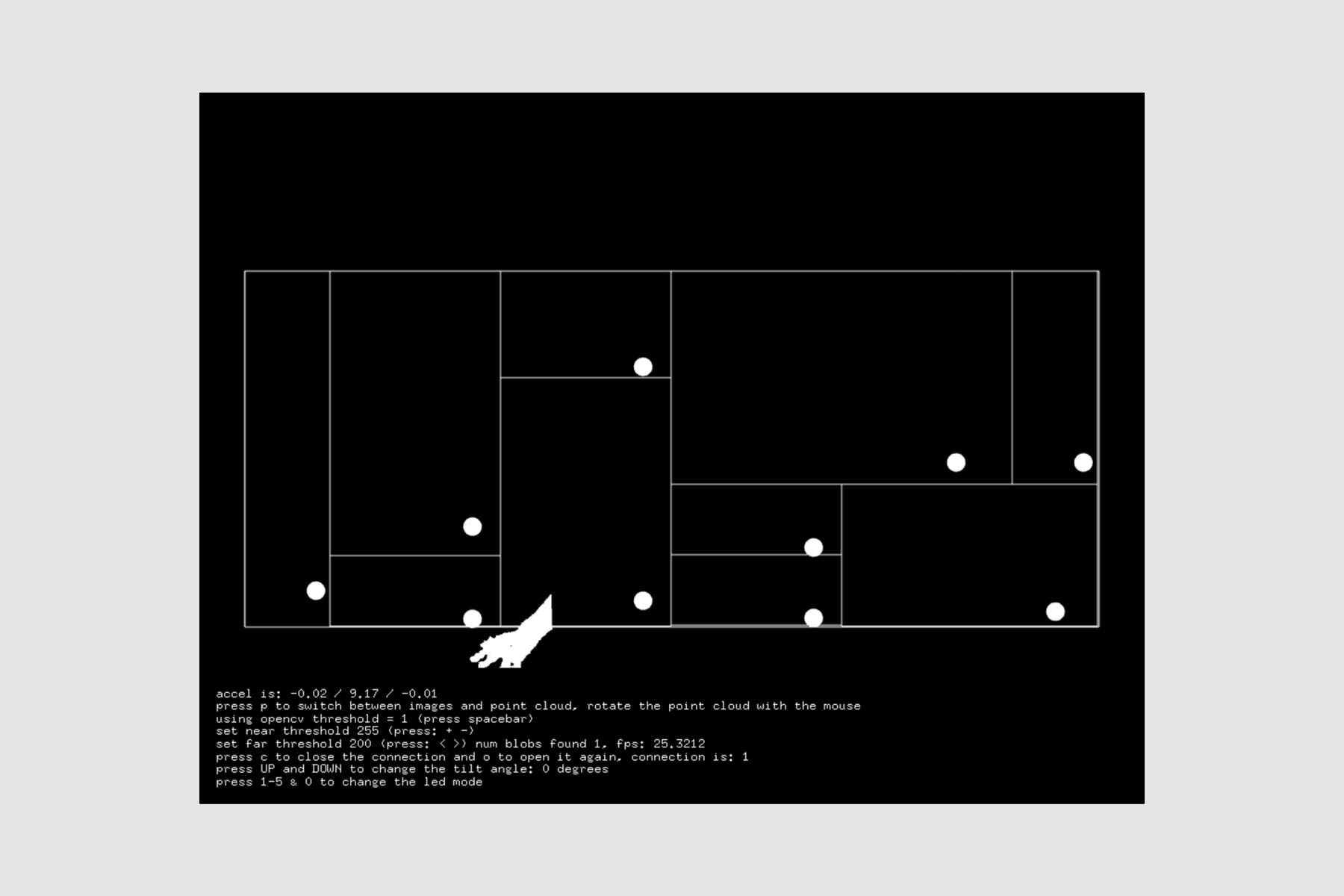

In this program and installation, I wanted different types of actions to generate different results. Some patches come alive with slower, more considered movements and some with faster, more abstract ones; some are more vivid closer to the camera and others further away. Some of the patches align to their corresponding slice of the camera image, and others are affected by the camera's entire field of vision. After testing the piece as an installation, I decided that the most effective setup was to place the Kinect on the projector, so that hand and arm movements create shadows on the fabric that correspond to the digital augmentations being generated.
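The idea of a patch reading only from its own slice of the camera image can be sketched in plain C++. This is a minimal illustration rather than the code used in the piece: the `Slice` type, the function names and the regular grid layout are all assumptions made for the example.

```cpp
#include <cassert>

// Hypothetical rectangle type: a region of the camera image, in pixels.
struct Slice {
    int x;
    int y;
    int width;
    int height;
};

// Return the region of a camW x camH camera image that belongs to the patch
// at (col, row) in a cols x rows patchwork grid (assumed layout).
Slice sliceForPatch(int camW, int camH, int cols, int rows, int col, int row) {
    assert(cols > 0 && rows > 0);
    assert(col >= 0 && col < cols && row >= 0 && row < rows);
    // Integer boundaries are computed from both edges so that neighbouring
    // slices tile the image exactly, even when it does not divide evenly.
    int x0 = camW * col / cols;
    int x1 = camW * (col + 1) / cols;
    int y0 = camH * row / rows;
    int y1 = camH * (row + 1) / rows;
    return Slice{x0, y0, x1 - x0, y1 - y0};
}

// True when the camera pixel (px, py) falls inside the given slice.
bool contains(const Slice& s, int px, int py) {
    return px >= s.x && px < s.x + s.width &&
           py >= s.y && py < s.y + s.height;
}
```

A patch that should react only to movement in front of it would ignore any camera pixel for which `contains` is false, while a patch driven by the whole field of vision would skip that check.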

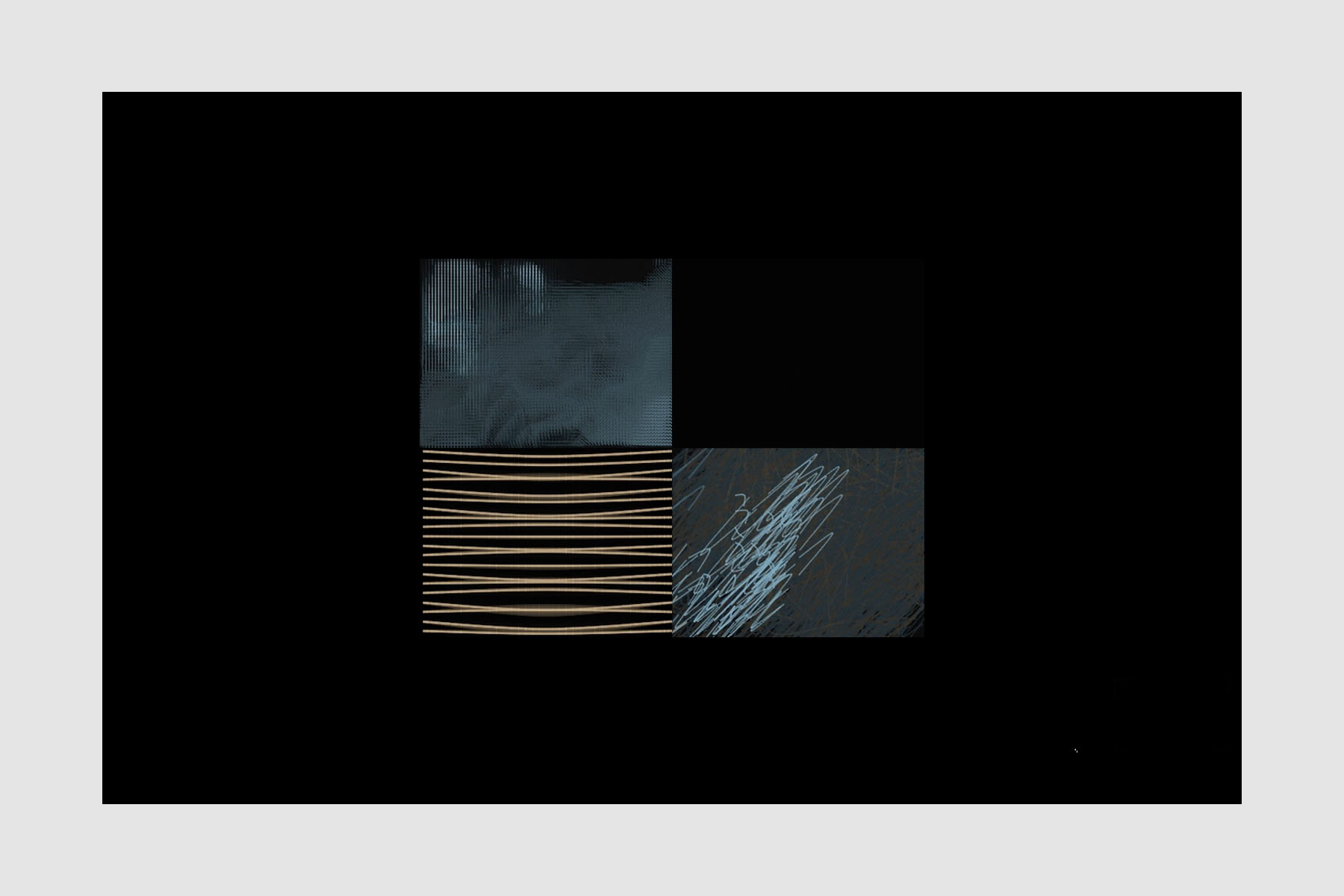

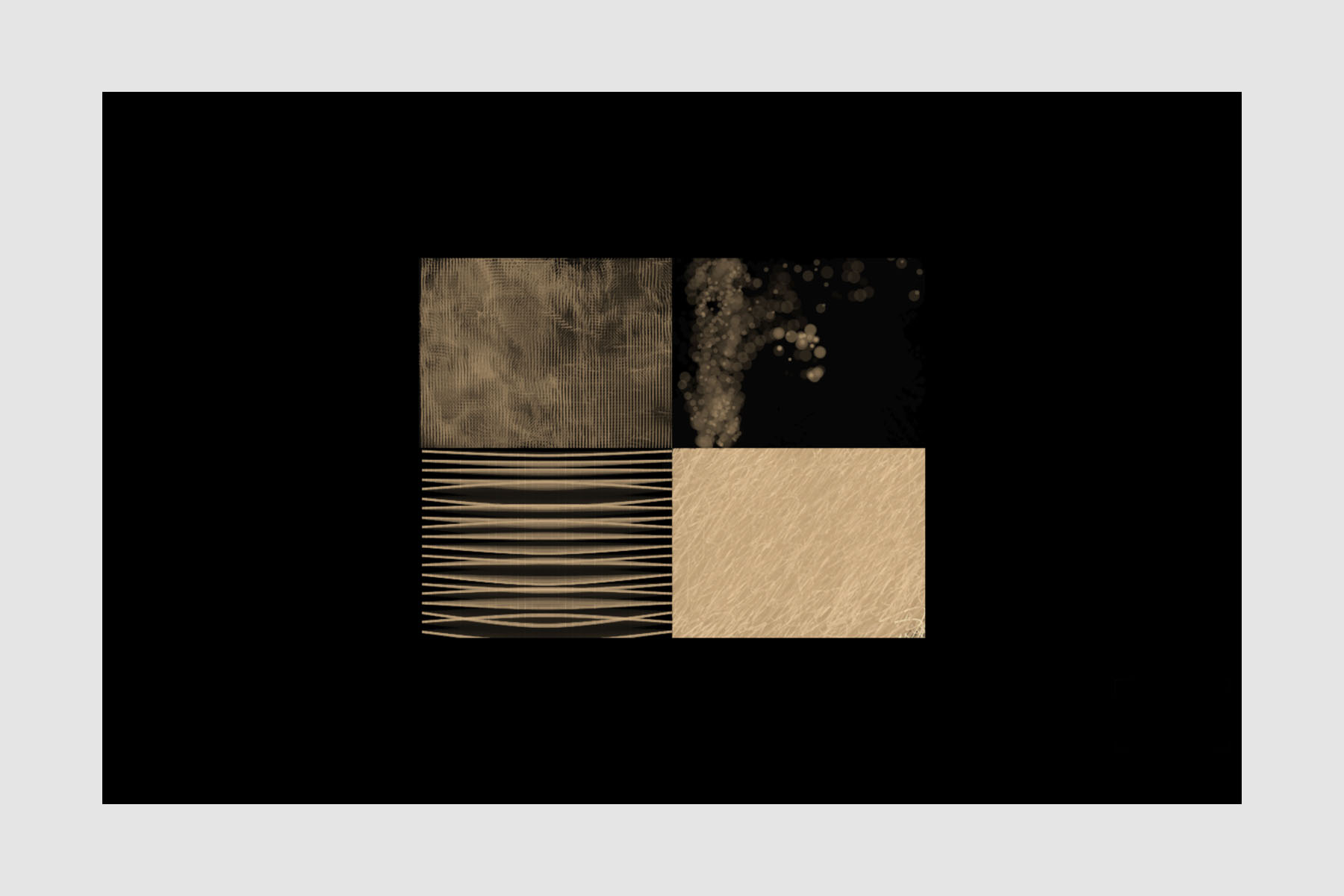

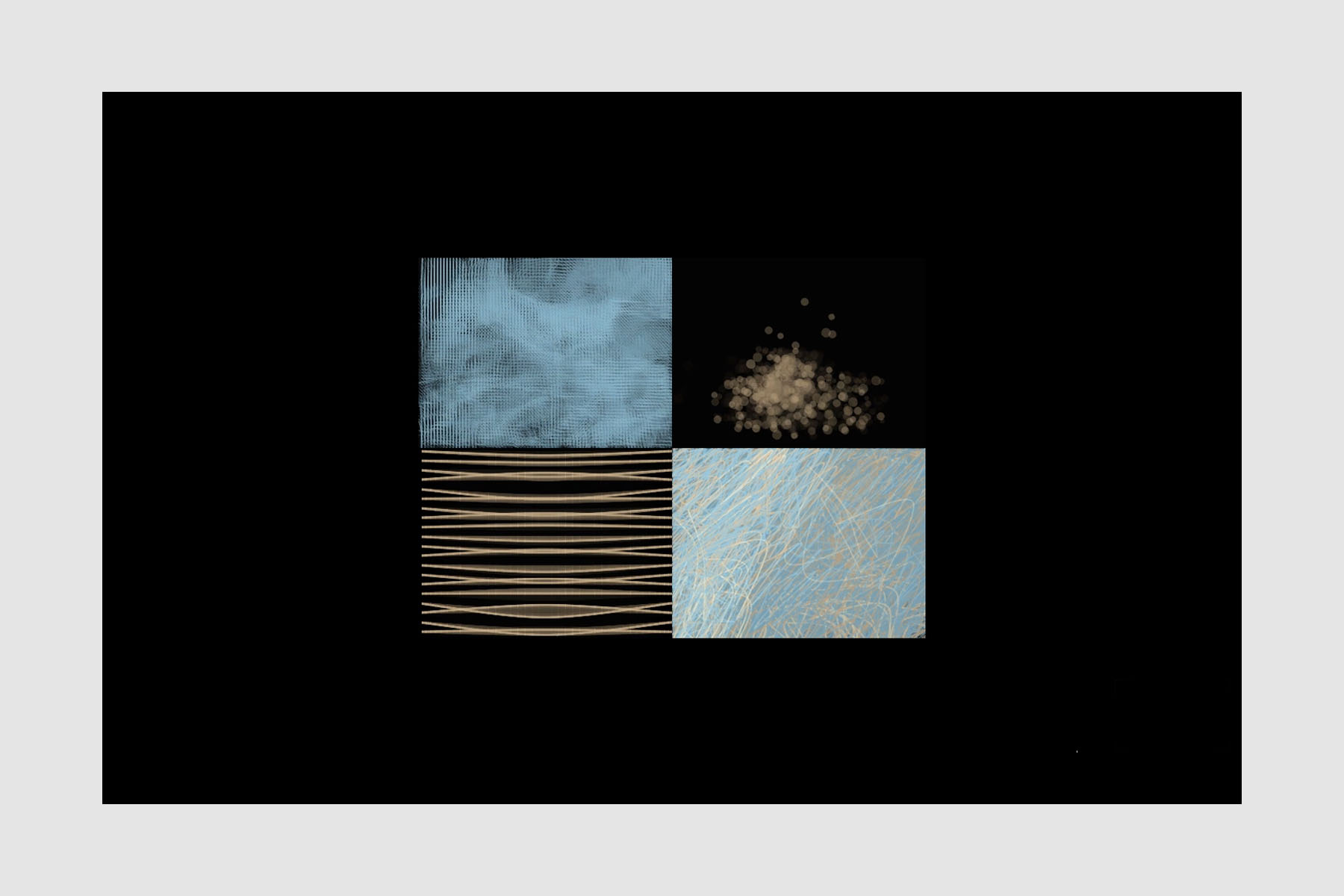

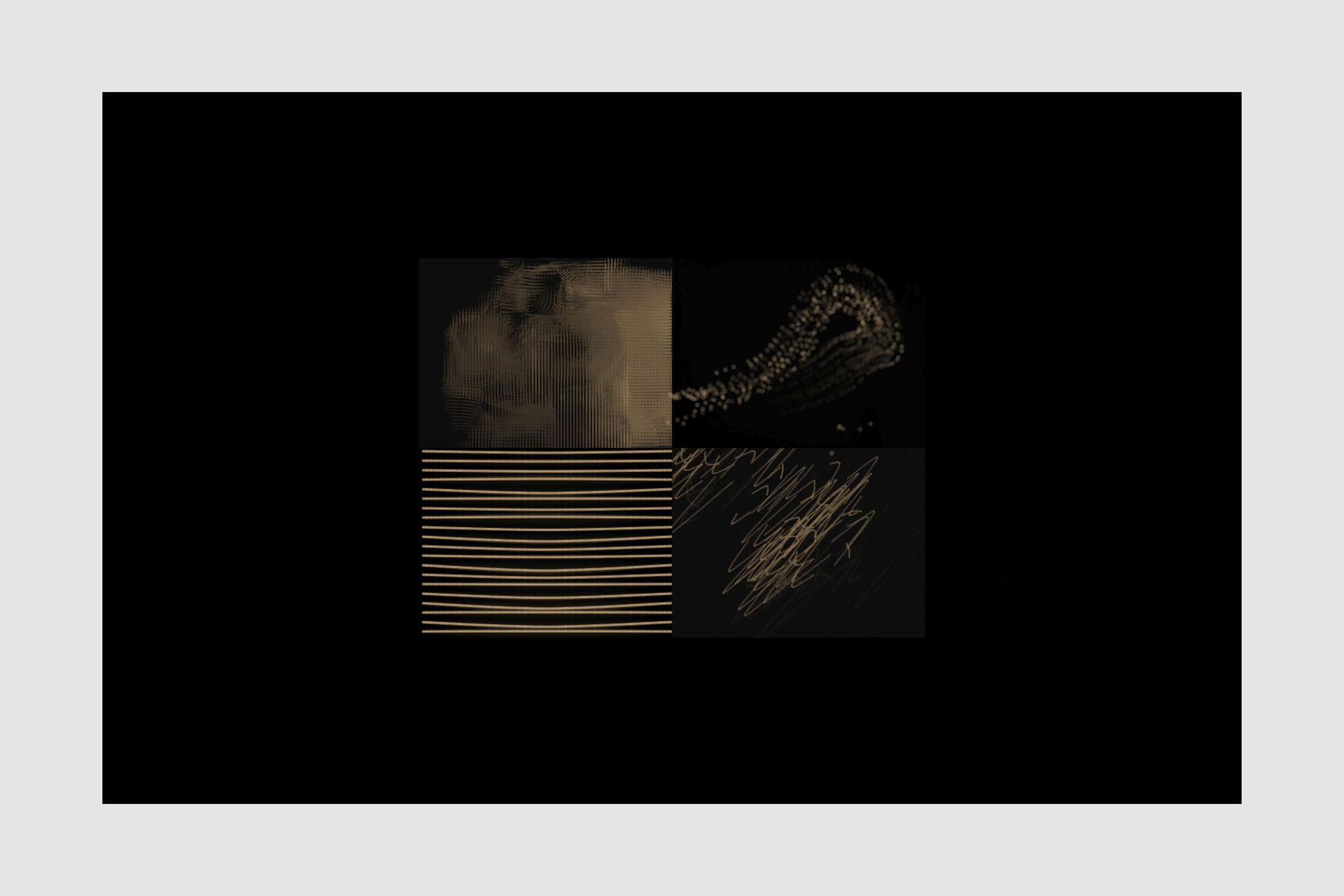

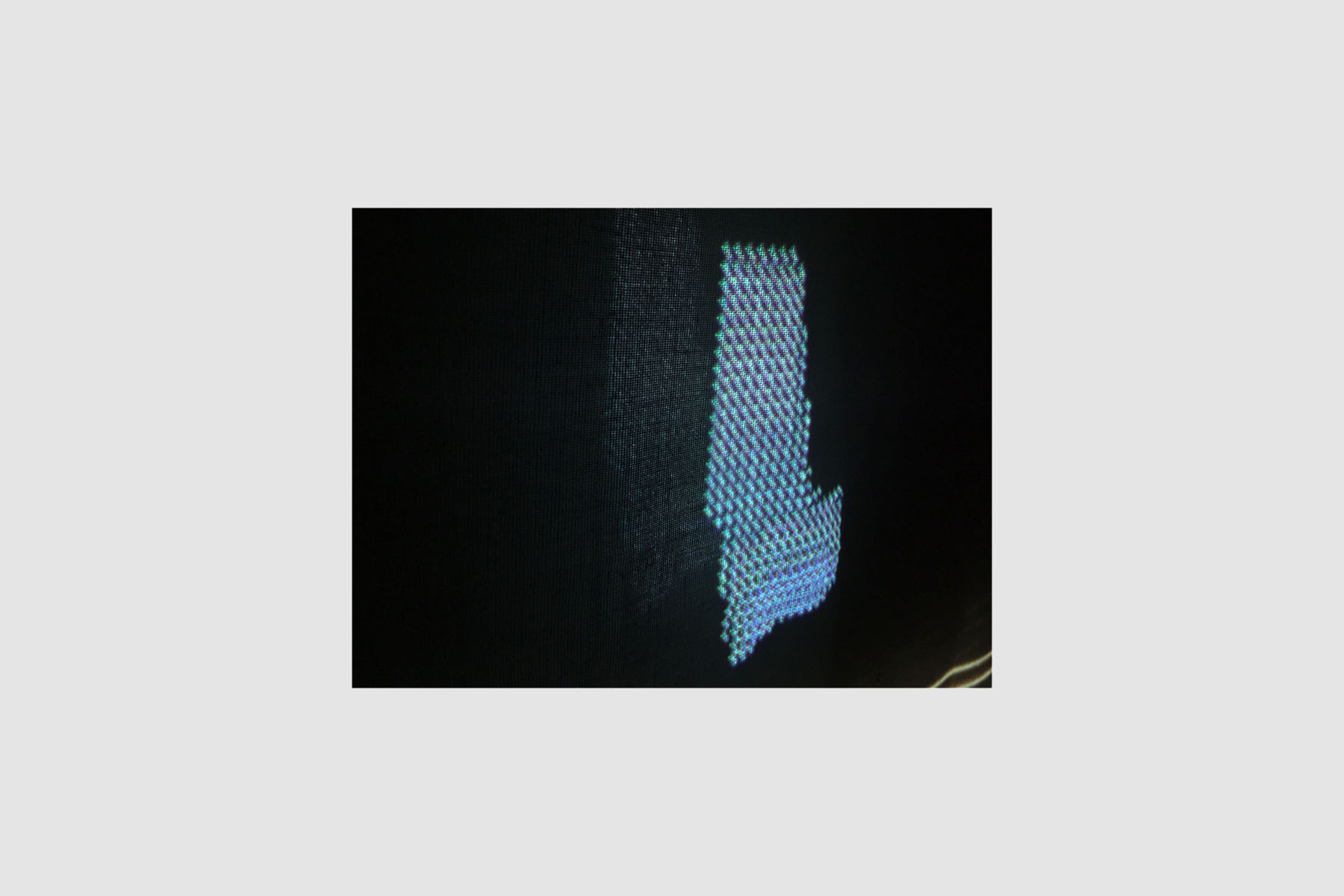

The four patches I created are: a texture created by drawing small lines according to the optical flow data from the Kinect colour image, which forms a sort of crosshatch or weave effect; a brush tool that follows the brightest pixels (the closest body part to the camera) and changes size based on that distance; a grid of threads that warp according to the average optical flow direction (the direction of the hand movements); and an abstract texture created by drawing semi-randomly curved lines according to the optical flow data from the Kinect colour image.
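Two of these ideas, following the brightest pixel and averaging the optical flow, can be sketched without any framework code. The version below is a minimal sketch under stated assumptions, not the project's implementation: the image is taken to be an 8-bit greyscale buffer in row-major order, brighter is taken to mean closer, the brush is assumed to grow as the hand approaches, and all type and function names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical result type: where to draw the brush and how large.
struct BrushPoint {
    int x;
    int y;
    float radius;
};

// Find the brightest pixel in a row-major 8-bit greyscale image and map its
// brightness (0..255) linearly onto a radius between minRadius and maxRadius.
BrushPoint brushFromBrightest(const std::vector<std::uint8_t>& gray,
                              int width, int height,
                              float minRadius, float maxRadius) {
    assert(width > 0 && height > 0);
    assert(gray.size() == static_cast<std::size_t>(width) * height);
    std::size_t best = 0;
    for (std::size_t i = 1; i < gray.size(); ++i) {
        if (gray[i] > gray[best]) best = i;  // the first maximum wins ties
    }
    float t = gray[best] / 255.0f;
    return BrushPoint{static_cast<int>(best % width),
                      static_cast<int>(best / width),
                      minRadius + t * (maxRadius - minRadius)};
}

// Hypothetical 2D vector type for optical flow samples.
struct Vec2 {
    float x;
    float y;
};

// Mean of a set of flow vectors; returns (0, 0) when there are no samples.
Vec2 averageFlow(const std::vector<Vec2>& flow) {
    Vec2 sum{0.0f, 0.0f};
    if (flow.empty()) return sum;
    for (const Vec2& v : flow) {
        sum.x += v.x;
        sum.y += v.y;
    }
    float n = static_cast<float>(flow.size());
    return Vec2{sum.x / n, sum.y / n};
}
```

The averaged vector gives a single direction for the whole frame, which is the kind of value that could be used to bend every thread in a grid the same way.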

SCREENSHOTS AND INSTALLATION

TECHNICAL AND REFLECTION:

During the process I ran into several issues, and as a result I generated many different prototype patches along the way. When I attempted to contain patches within FBOs, the lines appeared jagged and the graphics lost some of their smoothness; the vectors were not as crisp as I would have liked. After some research and checking the openFrameworks directory, I tested a smoothing function. With smoothing enabled, strange lines appeared on the bottom left patch of the tapestry. Overall, though, this was still an improvement, so I left smoothing enabled.

I also ran into problems using the depth image from the Kinect with optical flow, so I used the colour image instead. The cost is that not all patches work fully in very low lighting. This was a slight issue for the installation: the projection couldn’t be conducted in total darkness, as is usual for projections. However, it did mean that the fabric screen was more visible, which I felt was integral to the concept of the piece. The underlying difficulty was that I could not find a way to pass depth data to a class without the program becoming slow or crashing, which also means the code isn’t as modular as it could have been. I also found that, even within FBOs, traces of the visuals were left over despite a fade-out function.
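One possible explanation for leftover traces, not confirmed as the cause in this piece, is rounding in an 8-bit buffer: when each frame is faded by blending a low-alpha black layer over it, dark values can round back to themselves and never reach zero. The sketch below simulates a single colour channel under that assumption. The function names are hypothetical, and real graphics hardware may round differently.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// One fade step on an 8-bit channel: blend towards black with the given
// alpha (0..255), then round to the nearest representable value.
std::uint8_t fadeStep(std::uint8_t value, int alpha) {
    assert(alpha >= 0 && alpha <= 255);
    float blended = value * (255 - alpha) / 255.0f;
    return static_cast<std::uint8_t>(std::lround(blended));
}

// Apply fade steps until the value stops changing and return where it rests.
// The loop ends because each step either lowers the value or leaves it as is.
std::uint8_t fadeUntilStable(std::uint8_t value, int alpha) {
    for (;;) {
        std::uint8_t next = fadeStep(value, alpha);
        if (next == value) return value;
        value = next;
    }
}
```

In this model a gentle fade (`alpha` of 10) leaves a full-white pixel resting at 12 rather than 0, while a strong fade (`alpha` of 128) clears it completely. If this is what is happening, a stronger fade or a buffer with higher precision per channel would be worth testing.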

When documenting the piece, I found it difficult to film on a DSLR camera, as filming the graphics from the projector caused distortion lines across the video. The footage in the video therefore came from a phone, which did not produce this effect, and only the photographs came from the higher quality camera. Because of this, I much prefer the look of the photographs.

If I had more time with the project, I would have liked to have more than one camera input coming from different locations: regular cameras further away from the projector, lit slightly so they can pick up movement, with the Kinect in darkness closer to the fabric screen and projected augmentations. I would also like to test the concept on a larger scale, possibly using multiple panels of fabric at different distances and projection mapping each digital patch onto its own panel, to make more of a 3D installation with many more patches. I initially experimented with multiple patches of different sizes, but struggled within the time to make them cohesive and to make each read from its corresponding slice of the camera input. Without that, all the patches would have moved or been triggered in unison, whereas I wanted to be able to trigger different patches independently. To achieve this, I scaled back to four.

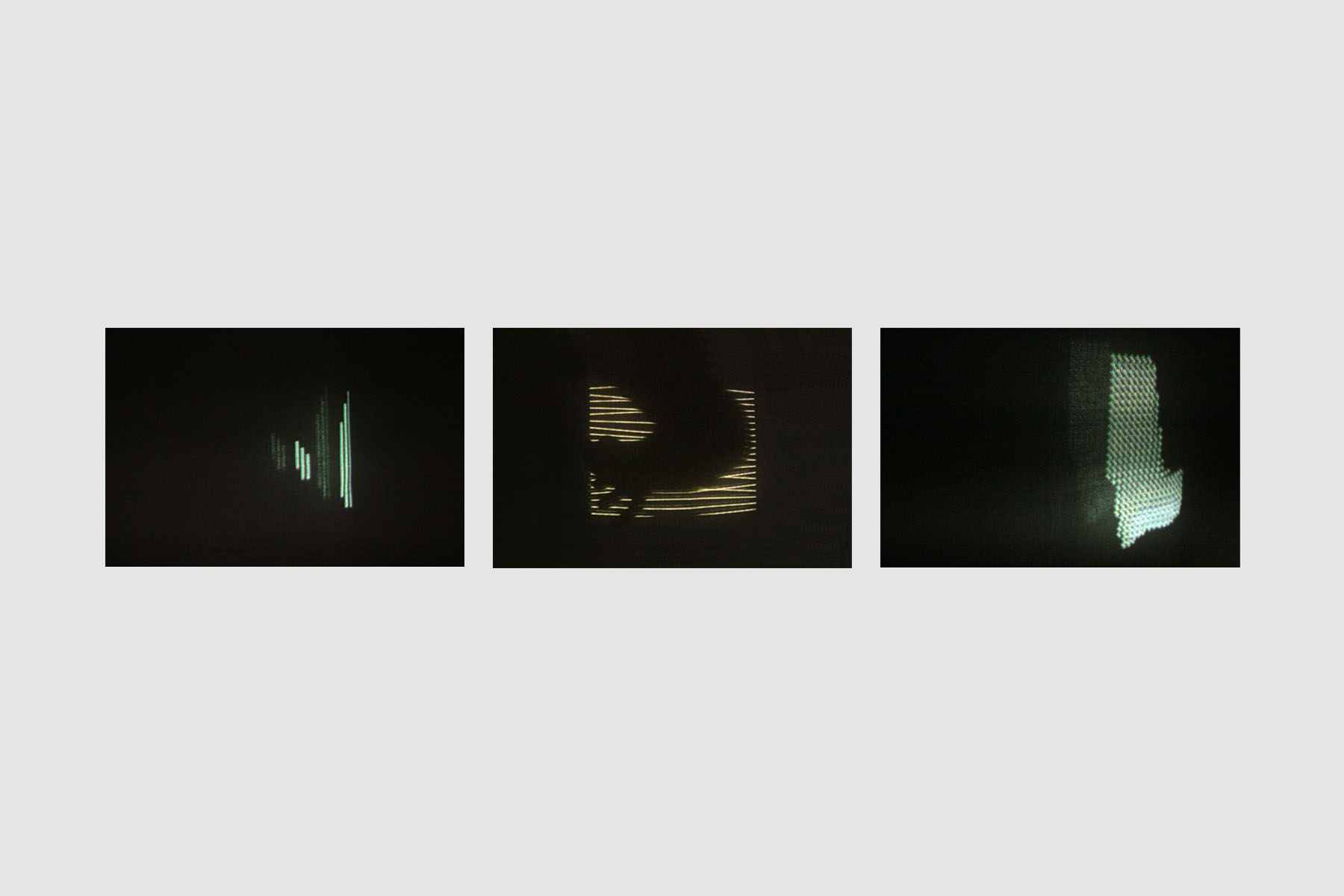

PROTOTYPE PATCHES AND PATCHWORKS

REFERENCES:

1. openFrameworks Kinect example located in examples/computer_vision/kinectExample

2. Optical Flow example from the code examples provided in Week 13, Computer Vision (Part 2)

3. Brightness Tracking example from the code examples provided in Week 12, Computer Vision (Part 1)

4. Colour Tracking example from the code examples provided in Week 12, Computer Vision (Part 1)

5. openFrameworks ofBook, A Web of Lines, https://openframeworks.cc/ofBook/chapters/lines.html

INSPIRATIONS:

Body tracking -

https://vimeo.com/326629188

Projecting shadows -

https://www.creativeapplications.net/processing/komorebi-platform-for-generative-sunlight-and-shadow/

https://uva.co.uk/works/new-dawn