ab_tempora

Wrapped in an algorithmic flow of video technology, two dancers try to make sense of what they are going through and what they are seeing in their self-reflected distortion. This performance relies on the slit-scan photography technique and other real-time video effects to reflect upon our consumption of moving images and their contraction and dilation.

produced by: Romain Biros

dancers: Rebecca Piersanti & Sophia Page (masshysteria collective)

This performance emerged from a long-term collaboration with masshysteria, a London-based dance collective, and from long-term technical research into using the slit-scanning technique in high resolution and in real time, with a computer-vision twist. There is no set choreography, only different phases: the dancers dance to affect the audio-visual effects until the audio-visual effects make the dancers dance.

concept

‘The mechanical eye, the camera, rejecting the human eye as a crib sheet, gropes its way through the chaos of visual events, letting itself be drawn or repelled by movement, probing, as it goes, the path of its own movement. It experiments, distending time, dissecting movement, or, in a contrary fashion, absorbing time within itself.’ Dziga Vertov

By breaking the linear advancement of the moving image in various ways, I aim to provoke alternative and multiple dancing trajectories. I also want to disturb the audience’s perception as the interaction between the dancers and the audio-visual systems unfolds.

It is clear that the dancers and the audio-visual systems are interacting in a chain of cause and effect, but it is not always clear which side is leading. I also want the audience to feel that they are viewing something fleeting and original. Due to the nature of the visuals, the interaction and the semi-improvisation of the dancers, this performance should feel impossible to repeat.

Using the slit-scanning effect, along with time stretching and pitch bending of the voice, we want audience members to feel a certain temporal irregularity while watching, as if time were passing in a disrupted way: sometimes stuttering, sometimes stopping and sometimes moving very quickly.

The bird's-eye camera mounted on the ceiling of the performance space and the dancers' appropriation of the phone are a suggestion and a reminder of the invasive potential of these devices. The dancers are ‘wrapped’ in moving images of themselves, much like the amplified narcissism we fall into while ambiguously submitting ourselves to continuous surveillance and a permanent record of our data.

background research

'While Plato said that art imitates nature, video imitates time.' Nam June Paik

This work is inspired by the writings of Maurizio Lazzarato (Videophilosophy: The Perception of Time in Post-Fordism), Robert Pfaller (Interpassivity: The Aesthetics of Delegated Enjoyment) and Jacques Rancière (The Future of the Image).

'The technological assemblage of the video device does not show us the time of the event but puts us in the event. With video it is not about supplementing time but constructing it and doing so collectively, in an assemblage, in a flow. Live technologies impose a concept of subjectivity that constitutes a virtual critique of the concept of the spectator, formulated by Joseph Beuys in this way: I am a transmitter and I radiate.' Lazzarato

This is even more relevant today, now that our phones have morphed into mainstream video recording devices. In this performance, the video devices are seen and used as ambivalent tools of surveillance and as interpassive capitalist goods that both enable and prevent the artist and the dancers from being in the now.

I was aesthetically inspired by the following artworks:

- Wrap Around the World by David Bowie, with visuals by Nam June Paik (1986)

- Reflexus/VJYourself by computational artist Eloi Maduell (2007)

- Veil of Time by visual artist Kevin McGloughlin (2017)

technical

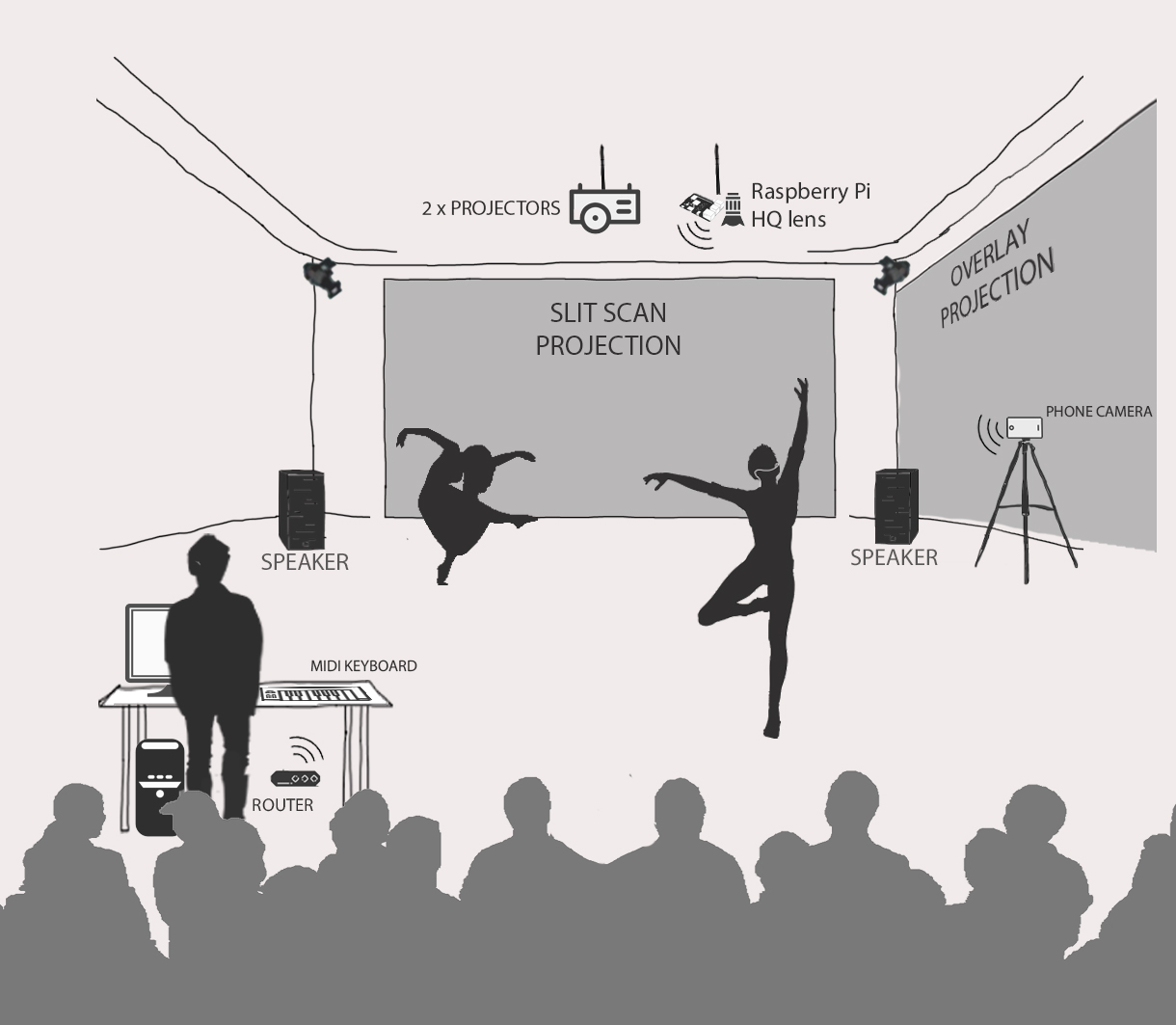

Fig 1. Stage setup

The main visuals (center screen) are an experiment revisiting the old, classic slit-scanning technique. I wanted to create a tool that would let me both process existing footage and build a real-time interactive video installation.

Slit-scanning high-resolution, high-frame-rate video files or streams requires doing all the computation on the GPU. A circular buffer, combined with customised openFrameworks libraries, continuously stores a number of frames in GPU memory. As this functionality is not natively supported by openFrameworks, I had to modify the official libraries. Once the frames are stored, shaders and various noise functions interpolate, for each pixel, the frame number to read from. These two aspects were among the most challenging parts of this work.
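
To make the two steps in the paragraph above concrete, here is a minimal CPU sketch in C++ of a slit-scan over a circular frame buffer. It is not the GPU/openFrameworks implementation used in the piece: it assumes 8-bit grayscale frames stored row-major, and the names `FrameRing`, `DelayField` and `slitScan` are hypothetical. Each pixel reads its value from a different moment in the buffer, blending linearly between the two nearest stored frames, which is roughly what a fragment shader would do per pixel.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <vector>

// Assumption: 8-bit grayscale frame, row-major, width * height bytes.
using Frame = std::vector<std::uint8_t>;

// Fixed-capacity circular buffer: each push overwrites the oldest frame.
class FrameRing {
public:
    FrameRing(std::size_t capacity, int width, int height)
        : frames_(capacity, Frame(static_cast<std::size_t>(width) * height, 0)),
          width_(width), height_(height) {
        assert(capacity > 0);
    }

    void push(const Frame& f) {
        assert(f.size() == frames_[0].size());
        head_ = (head_ + 1) % frames_.size();  // advance to the oldest slot
        frames_[head_] = f;
        if (count_ < frames_.size()) ++count_;
    }

    // delay 0 = newest frame; delays beyond the stored history are clamped.
    const Frame& ago(std::size_t delay) const {
        assert(count_ > 0);
        if (delay >= count_) delay = count_ - 1;
        return frames_[(head_ + frames_.size() - delay) % frames_.size()];
    }

    std::size_t count() const { return count_; }
    int width() const { return width_; }
    int height() const { return height_; }

private:
    std::vector<Frame> frames_;
    int width_, height_;
    std::size_t head_ = 0, count_ = 0;
};

// Maps normalised pixel coordinates (u, v) to a delay in [0, 1]
// (0 = now, 1 = oldest stored frame). Hypothetical stand-in for the shader's noise.
using DelayField = std::function<float(float u, float v)>;

Frame slitScan(const FrameRing& ring, const DelayField& delayAt) {
    assert(ring.count() > 0);
    const int w = ring.width(), h = ring.height();
    const float maxDelay = static_cast<float>(ring.count() - 1);
    Frame out(static_cast<std::size_t>(w) * h);
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            const float u = (w > 1) ? float(x) / (w - 1) : 0.f;
            const float v = (h > 1) ? float(y) / (h - 1) : 0.f;
            const float d = std::clamp(delayAt(u, v), 0.f, 1.f) * maxDelay;
            // Temporal interpolation between the two nearest stored frames.
            const std::size_t d0 = static_cast<std::size_t>(std::floor(d));
            const float t = d - float(d0);
            const std::size_t i = static_cast<std::size_t>(y) * w + x;
            const float a = ring.ago(d0)[i];
            const float b = ring.ago(d0 + 1)[i];
            out[i] = static_cast<std::uint8_t>(std::lround(a + (b - a) * t));
        }
    }
    return out;
}
```

A delay field returning `v` gives the classic top-to-bottom slit-scan, where each row lags further behind the one above it. A field such as `0.5f + 0.5f * std::sin(6.2831853f * (u + v))` is a simple sine stand-in for the noise functions mentioned above and produces diagonal waves of time.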

The other effect (right screen) uses a slightly different technique, which consists of repeatedly overlaying the moving images while keeping the brightest or darkest pixels as the video file or stream is read. It is particularly appealing to the dancers, who can play with their own movement trajectories in real time.
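
The brightest/darkest overlay can be sketched as a running per-pixel maximum (or minimum) over the incoming frames. This is a hypothetical C++ sketch assuming 8-bit grayscale frames; `TrailAccumulator` and `Keep` are illustrative names, and the `reset()` call (for example on a phase change) is an assumption rather than a documented feature of the piece.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Which pixel wins when frames are overlaid.
enum class Keep { Brightest, Darkest };

// Overlays every new frame onto an accumulator, keeping the brightest
// (or darkest) value seen so far at each pixel, so movement leaves trails.
class TrailAccumulator {
public:
    TrailAccumulator(std::size_t pixels, Keep mode) : acc_(pixels), mode_(mode) {}

    const std::vector<std::uint8_t>& overlay(const std::vector<std::uint8_t>& frame) {
        assert(frame.size() == acc_.size());
        if (empty_) {  // first frame after construction or reset starts the trail
            acc_ = frame;
            empty_ = false;
            return acc_;
        }
        for (std::size_t i = 0; i < acc_.size(); ++i) {
            acc_[i] = (mode_ == Keep::Brightest) ? std::max(acc_[i], frame[i])
                                                 : std::min(acc_[i], frame[i]);
        }
        return acc_;
    }

    // Hypothetical: clear the trail so the next frame starts a fresh one.
    void reset() { empty_ = true; }

private:
    std::vector<std::uint8_t> acc_;
    Keep mode_;
    bool empty_ = true;
};
```

Keeping the brightest pixels suits a light-clothed dancer on a dark stage, and keeping the darkest suits the opposite; in both cases a dancer who moves fast draws a longer trajectory.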

Two video feeds are used in this installation: one from the smartphone camera and another from a Raspberry Pi + HQ lens module mounted on the ceiling of the performance space. Both signals are streamed directly to the main computer, which runs the two programs for the two projected moving images. The streaming protocols used are:

- GStreamer for the Raspberry Pi, using the ofxGstreamer addon

- NDI for the smartphone using the ofxNdi addon

Fig 2. Raspberry Pi 4 + HQ lens

To ensure stable bandwidth for both signals and avoid interference, I set up a dedicated wireless network using a personal router. On receiving the signal, openFrameworks reads the video, and a computer vision algorithm (frame differencing) detects the amount and position of the dancers’ movement, which then affects the projected visuals.
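
Frame differencing can be sketched as follows: compare each pixel of the current frame with the previous one, count the pixels whose change exceeds a threshold (the amount of movement) and average their coordinates (the position of the movement). The `Motion` struct, the `frameDifference` function and the default threshold of 30 are assumptions for illustration; a real pipeline would usually blur or downscale the frames first to reduce camera noise.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// Result of comparing two frames. Coordinates are normalised to [0, 1].
struct Motion {
    float amount = 0.f;  // fraction of pixels that changed (0 = still)
    float cx = 0.5f;     // centroid of changed pixels, x (centre if nothing moved)
    float cy = 0.5f;     // centroid of changed pixels, y
};

// Frame differencing on 8-bit grayscale, row-major frames of width * height.
// `threshold` (hypothetical default) ignores small brightness flicker.
Motion frameDifference(const std::vector<std::uint8_t>& prev,
                       const std::vector<std::uint8_t>& curr,
                       int width, int height, int threshold = 30) {
    assert(prev.size() == curr.size());
    assert(curr.size() == static_cast<std::size_t>(width) * height);
    std::size_t changed = 0;
    double sumX = 0.0, sumY = 0.0;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const std::size_t i = static_cast<std::size_t>(y) * width + x;
            if (std::abs(int(curr[i]) - int(prev[i])) > threshold) {
                ++changed;
                sumX += x;
                sumY += y;
            }
        }
    }
    Motion m;
    if (changed == 0) return m;
    m.amount = float(changed) / float(curr.size());
    m.cx = (width > 1) ? float(sumX / changed / (width - 1)) : 0.f;
    m.cy = (height > 1) ? float(sumY / changed / (height - 1)) : 0.f;
    return m;
}
```

The amount could then scale an effect's intensity while the centroid steers where the effect is applied on the projected image.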

When the performance starts, a timer structures it into different phases and moments, each triggering different audio-visual effects and interactions.
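
A timer that splits the performance into phases can be as simple as a sorted list of start times checked once per frame. In this hypothetical sketch, `Phase`, `PhaseClock` and the phase names and timings are placeholders, not the piece's actual structure; in openFrameworks, `elapsed` could be computed from `ofGetElapsedTimef()` minus the moment the performance was started.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// One section of the performance, starting `startSeconds` after the beginning.
struct Phase {
    double startSeconds;
    std::string name;
};

// Index of the phase active at `elapsed` seconds (phases sorted by start time).
std::size_t activePhase(const std::vector<Phase>& phases, double elapsed) {
    assert(!phases.empty());
    std::size_t current = 0;
    for (std::size_t i = 0; i < phases.size(); ++i) {
        if (elapsed >= phases[i].startSeconds) current = i;
        else break;
    }
    return current;
}

class PhaseClock {
public:
    explicit PhaseClock(std::vector<Phase> phases) : phases_(std::move(phases)) {
        assert(!phases_.empty());
    }

    // Call once per frame; returns true when a new phase has just begun,
    // which is the moment to switch audio-visual effects.
    bool update(double elapsed) {
        const std::size_t next = activePhase(phases_, elapsed);
        const bool changed = next != current_;
        current_ = next;
        return changed;
    }

    const Phase& current() const { return phases_[current_]; }

private:
    std::vector<Phase> phases_;
    std::size_t current_ = 0;
};
```

Returning a "phase changed" flag, rather than only the current phase, keeps one-off actions (resetting a trail, loading a new shader) from firing on every frame.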

I composed and semi-improvised the music using Reason (DAW) and a MIDI keyboard, with pre-recorded voices of the dancers. In the first part of the performance, when I play the keyboard, the velocity data is sent to openFrameworks using the ofxMidi addon. The higher the velocity, the more complex the visuals become, creating a circular dialogue between the dancers, the visuals and me. In the last part of the performance, the beat created in Reason also sends MIDI data to openFrameworks, so that each beat triggers a change of visual effect, giving a more frenzied rhythm to the overall performance.
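
The MIDI-driven behaviour can be reduced to two handlers: one maps note velocity (0 to 127) to a visual complexity value, the other advances to the next effect on each beat. This sketch keeps MIDI messages as plain integers so it runs without ofxMidi; the names `MidiToVisuals` and `VisualState`, the linear velocity mapping and the per-frame smoothing are assumptions, not the piece's code. How a beat is recognised (for example a note-on from a dedicated drum track in Reason) is also assumed.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>

// Parameters the visuals read every frame (hypothetical).
struct VisualState {
    float complexity = 0.f;       // 0..1, e.g. noise amplitude or number of slits
    std::size_t effectIndex = 0;  // effect currently shown
};

class MidiToVisuals {
public:
    // `smoothing` in (0, 1]: fraction of the gap to the target closed per frame.
    MidiToVisuals(std::size_t effectCount, float smoothing = 0.2f)
        : effectCount_(effectCount), smoothing_(smoothing) {
        assert(effectCount > 0);
        assert(smoothing > 0.f && smoothing <= 1.f);
    }

    // Keyboard note-on: a harder key press asks for more complex visuals.
    void onNoteVelocity(int velocity) {
        velocity = std::clamp(velocity, 0, 127);
        target_ = velocity / 127.f;
    }

    // Beat from the DAW: cycle to the next visual effect.
    void onBeat() { state_.effectIndex = (state_.effectIndex + 1) % effectCount_; }

    // Per frame: ease complexity toward its target so the image does not jump.
    const VisualState& update() {
        state_.complexity += (target_ - state_.complexity) * smoothing_;
        return state_;
    }

private:
    std::size_t effectCount_;
    float smoothing_;
    float target_ = 0.f;
    VisualState state_;
};
```

A smoothing value of 1 applies each velocity immediately, which suits percussive playing; smaller values make the visuals breathe more slowly behind the keyboard.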

future development

This is the very first time we have shown this piece in public, but this ongoing collaboration has already produced other work. A video was shown at Tate Modern Exchange during a masshysteria residency, and we also made another experiment for a previous assignment with Hannah Corrie.

Fig 3. Murmur, Swoosh, Retch made with Hannah Corrie and Rebecca Piersanti

We will continue working on the piece and show it at different events and festivals.

The technology used here could also serve other purposes, for example being opened up entirely to the public so that audience members can experiment with the visuals and reactive sound themselves. In a similar vein, VJYourself (2018) by Eloi Maduell is a good model to follow.

self evaluation

Given more time, I would have used the left projection screen for another type of visuals. Some suggested applying the slit-scan to the code I wrote, or I could simply have duplicated the right projection. In both cases, the challenge would have been to keep the video feed from filming one of the opposite screens, as this would have interfered with the movement analysis.

A more specific next step will be to implement a 3D version of slit-scanning on the GPU, in real time, using a Kinect V2. Memo Akten has already started this work, but it would require porting the code to the graphics card and to the second version of the Kinect.

In terms of choreography and the dancers’ role in the piece, we will keep the performance improvised but make it more theatrical, introducing a microphone so that I can use and glitch their voices in real time.

I would have liked to improve the audio mixing and to enable recording of the visuals from openFrameworks during the performance. I have used ofxFFMPEG on a similar project in the past to record the program's output, but it affects performance and frame rate, and optimising it was another step I didn’t have time to complete.

references

- Videophilosophy: The Perception of Time in Post-Fordism by Maurizio Lazzarato, Columbia University Press, 2019

- Interpassivity: The Aesthetics of Delegated Enjoyment by Robert Pfaller, Edinburgh University Press, 2017

- The Future of the Image by Jacques Rancière, Verso, 2008

- The Book of Shaders by Patricio Gonzalez Vivo and Jen Lowe, 2015

- Wrap Around the World by David Bowie, with visuals by Nam June Paik, 1986

- Reflexus/VJYourself by computational artist Eloi Maduell, 2007

- Veil of Time by visual artist Kevin McGloughlin, 2017