XIDALIA

XIDALIA is a performance that explores a post-apocalyptic vision of the future, told through song and projected visuals. Sonically, it ranges from trance-inducing music to electronic music to soul; visually, it includes coded animations and video footage processed through code. “Performance” is used here as an umbrella term for the many parts that together tell a story through emotion. The piece grew out of a spiritual belief system and practice I have been developing, with roots in shamanism, witchcraft and paganism fused with technology; its concept is inspired by sci-fi, speculative fiction and feminist technoscience.

produced by: Valeria Radchenko

Introduction - Backstory

It started out as survival instinct, distorted by fear. Humans began to exert power-over all that was not man, began a system of living based on domination and the suffering of those we chose not to hear, that eventually grew deeply unethical and unsustainable. It was easier just to maintain it than to enact global change, especially because it kept us divided, with a select few at the top.

We had been taught to turn against ourselves, forgetting the breadth of what that “we” really holds. We had been forced to forget that we have power too, power-from-within. The power of civilians overcame the system when they stood united on a single notion – that they would not stand for this.

The Shift happened gradually, over centuries, almost imperceptibly, but XIDALIA crystallised in a flash, like a second Big Bang.

All I remember is running down a highway, desperately running away from something, and suddenly stopping, realising it was futile to try to outrun it; it was coming anyway. I surrendered to the fact that I was probably going to die, finding a sense of peace as all became light. I blinked my eyes open and it felt like nothing had changed: we were all holding hands, safely witnessing what was emerging, what had already been here...

The context behind XIDALIA’s narrative is that the apocalypse is already here, and it has been here for a while. It is not a single cataclysmic occurrence but a complexly knotted network of events and reactions. It goes by many names (the patriarchy, the pharmacopornographic regime (Preciado, 2013), the Anthropocene), and it is a manifestation of power-over (Starhawk, 1997), with all the issues the world is facing as its symptoms. This concept is something I began developing in the Computational Arts-Based Research and Theory module (see more here).

Concept and background research

The narrative of the piece can be divided into two parts: the ritual (entering a trance state, discovering the portal to XIDALIA) and the arrival (arriving in and exploring XIDALIA, experiencing particle reconfiguration). I felt it was necessary to begin by bringing people into a state where they would be more open to the journey, so I created a ritual soundtrack for the first part which consists mainly of beating sine waves, inspired by singing bowls, and layered vocals, pre-recorded and live. The visuals for the ritual consist mainly of shader graphics and have a hypnotic, psychedelic quality to them, which supports the audio. This part of the performance continues a practice I have been developing that explores trance and ritual by using technology as a magical tool. I'm interested in continuing, updating and queering the lineage of visionary art, psychedelic aesthetics and trance sounds.
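The “beating” in the ritual soundtrack is a standard acoustic effect. Two sine waves at nearby frequencies f_1 and f_2 add up to a single tone whose loudness swells and fades. The sum-to-product identity makes this explicit. The symbols here are generic and are not values taken from the patch:

$$
\sin(2\pi f_1 t) + \sin(2\pi f_2 t) = 2\cos\big(\pi (f_1 - f_2)\, t\big)\,\sin\big(\pi (f_1 + f_2)\, t\big)
$$

The sine term sounds at the average frequency (f_1 + f_2)/2. The cosine envelope makes the loudness pulse |f_1 - f_2| times per second. For example, sines at 220 Hz and 223 Hz would beat three times per second.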

The audio and visuals shift to filmed footage and electronic music that meanders through moods and genres as we enter XIDALIA (around 5 mins into the performance). The narrative, embodied through the visuals and music, has a dual nature. On one hand, I show a glimpse of the kind of society I hope to inhabit. A return to the earth, living in harmony with nature’s processes, with elements of technology. This can be seen in the first few shots, where the music is dreamy and I am taught about the herbs available to me locally.

This part was inspired by sci-fi literature (Piercy, 1976; Slonczewski, 2016), as well as Donna Haraway’s concept of “sympoiesis”, or making-with, as explored in “Staying with the Trouble” (Haraway, 2016). In the last chapter, Haraway describes a world where all the theory explored is put into practice: the human population decreases, children are raised by multiple parents, and our DNA is mixed with that of other species. This inspired me to focus on creating the world I want to live in, rather than making a piece that criticises our current system of living.

On the other hand, I offer a different perspective. Through working on the project, I realised it was important to also remain present in the world we’re living in now – “to stay with the trouble” – and to face the fact that whether or not there will be a future post-apocalypse, we are all going to die. I think about this not in a defeatist way, but as a way to bring about a sense of urgency, a call to make the most of our experience as humans before it’s too late.

Transformation is inevitable – but what will come from the materials that compose us, our particles, our consciousness? Will there be new forms of life, like a second oceanic evolution (in the sense that everything evolved from oceanic microorganisms), or could there be something other, something we cannot imagine at all? Dreaming about the possibilities brought a sense of hope, almost excitement, about what could happen to us in the future, and with it a peace with death.

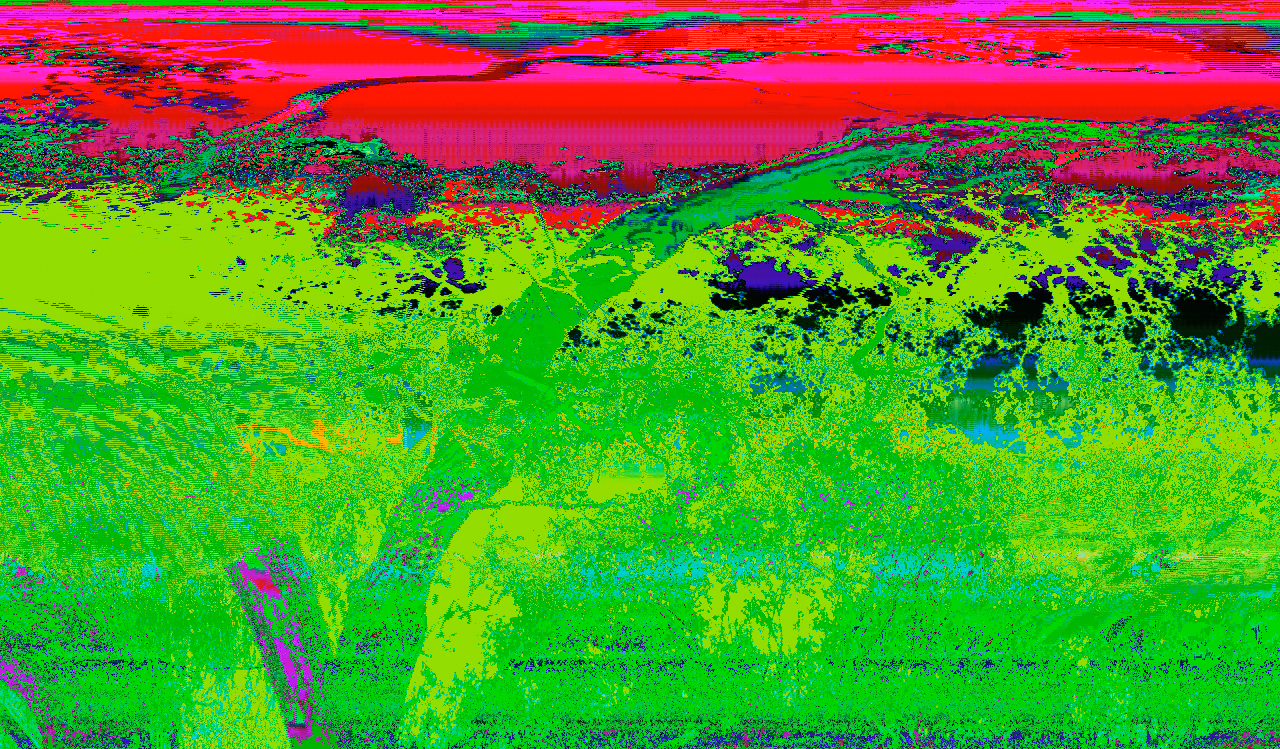

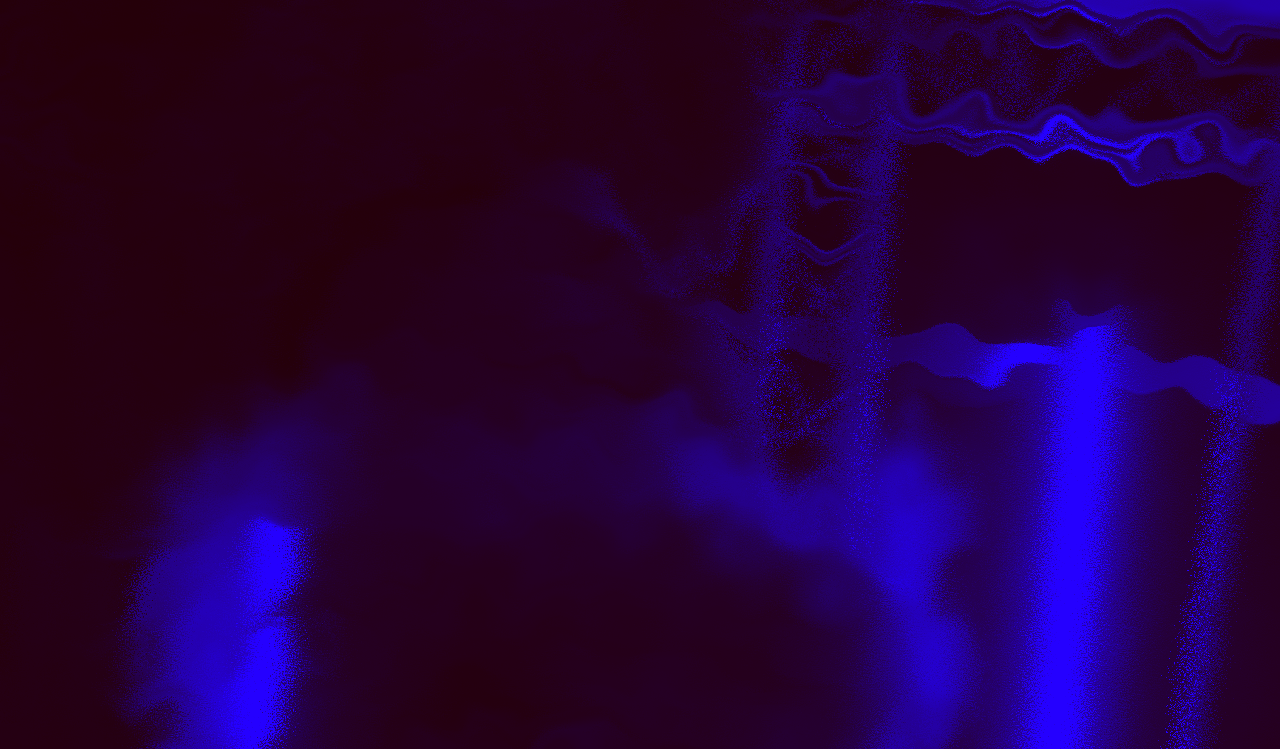

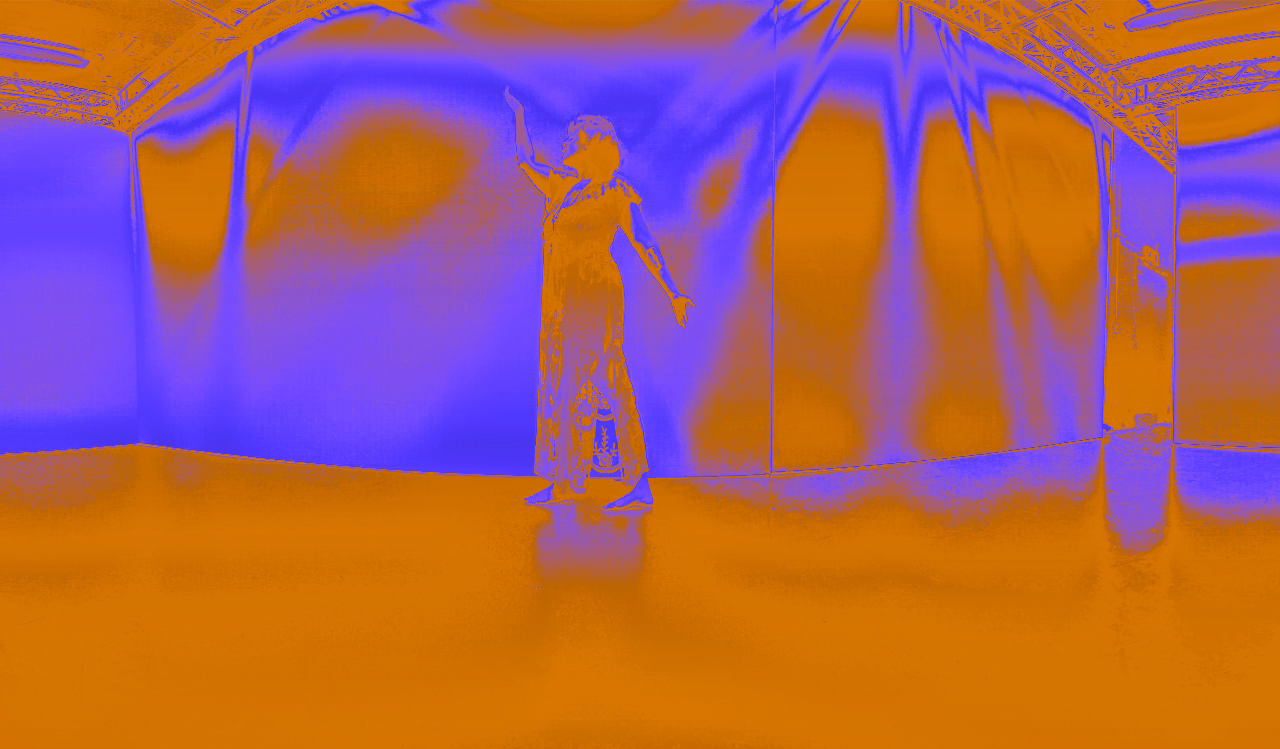

Particle reconfiguration, as a hopeful way to make peace with death, is expressed by processing videos with code, where the manipulation of pixels is a metaphor for what will happen to our particles. I experiment with glitch, degradation, transformation and the merging of videos to turn my body into something other. Glitches were particularly interesting. Although they were initially accidental, the result of code working in a way I didn’t intend, I felt they supported the particle reconfiguration narrative, representing processes such as genetic mutation or perhaps glitches in evolution. This is (partially) why artists collaborate with machines, after all – to produce unexpected results. Through experimenting with footage this way, I began to reimagine XIDALIA as a body, which is also a site of sorts. I became XIDALIA through working on it – something in touch with the past, present, nature and technology, that breaks binaries, that translates and communicates, that carries what is important into the future.

The decision to create video effects was inspired by my classmates Claire Kwong and Bingxin Feng, specifically their term 2 projects for Workshops in Creative Coding (see Blogposts in the references). I was also inspired by experiences I’ve had at festivals, nights out, and performances such as “Parasites of Pangu” by Ayesha Tan-Jones (“a dystopian opera exploring the world through the story of an archeologist of the future, based on a Chinese creation myth”) and Ritual Labs (a series of participatory rituals that explore the 12 Labours of Herakles). These experiences took me on emotional and narrative journeys through sound and inspired me to explore the possibilities myself.

A note on electronic music

The methods of producing sounds and making music change as technology advances, bringing about new genres and altering the definition of music. I listen to a lot of music that samples everyday sounds and uses strange synthesised noises that sound like machines roaring. For me, these new sounds and electronic compositions bring about feelings I’ve never felt before, feelings I can’t feel in other situations, yet which are still somehow familiar (see references for a list of musicians). Despite this constant expansion, the possibilities remain infinite, as does the vast range of feelings music can elicit. I think there’s something beautiful and powerful in the way humans collaborate with machines with wild results, and I wanted to try exploring this myself: could I take people on a journey just through sound? I was also interested in archiving and paying homage to things I would want to bring into the future, for example rave culture (or the spirit thereof), by updating and reconfiguring elements of it.

Technical

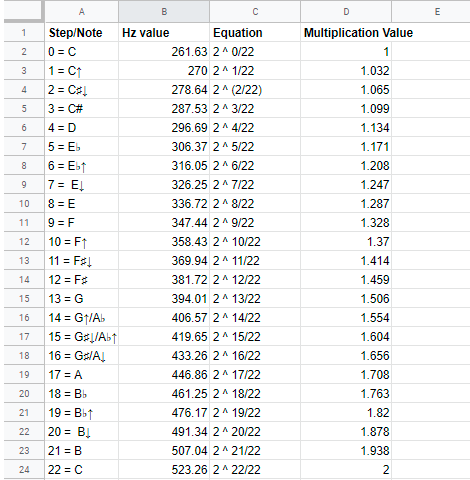

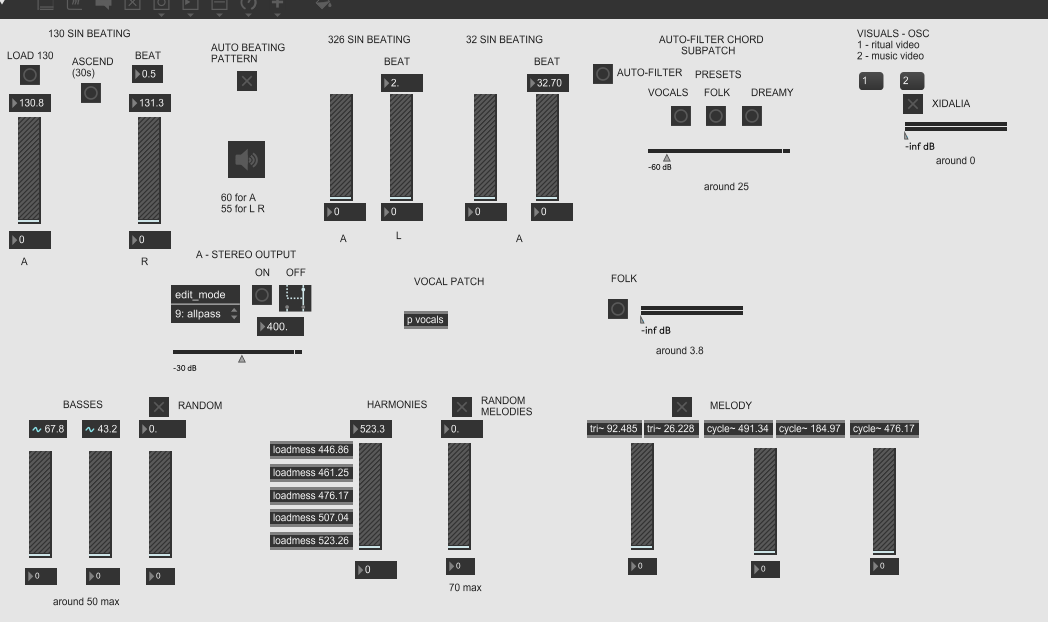

A significant amount of coding was done in Max/MSP. I created several patches for this project. One was used live to control the audio and trigger videos (XIDALIA MEGAPATCH). One explored a 22-tone equal temperament system. One was used to create noises and bass sounds for the electronic song. I used WebGL to create animations for the first half of the performance and openFrameworks to process video footage in the second half. Some of the video clips include the shader animations, either as projections in the background or to manipulate the colour or movement of the video pixels, playing with layering and degradation.
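The tuning patch itself isn't reproduced here, but the arithmetic behind a 22-tone equal temperament is short. Each step multiplies the frequency by 2^(1/22), which is about 54.5 cents, so 22 steps land exactly on the octave. The C++ sketch below shows that calculation. The function names and the 220 Hz reference pitch are illustrative assumptions, not values from the patch.

```cpp
#include <cassert>
#include <cmath>

// Frequency of `step` in an equal temperament that splits the 2:1 octave
// into `divisions` equal frequency ratios (22 for 22-TET).
double edoFrequency(double referenceHz, int step, int divisions = 22) {
    assert(referenceHz > 0.0 && divisions > 0);
    return referenceHz * std::pow(2.0, static_cast<double>(step) / divisions);
}

// Size of one step in cents (an octave is 1200 cents).
double edoStepCents(int divisions = 22) {
    assert(divisions > 0);
    return 1200.0 / divisions;
}
```

For example, with a hypothetical 220 Hz reference, `edoFrequency(220.0, 22)` gives 440 Hz. Step 11 gives 220·√2, roughly 311.13 Hz, which sits halfway up the octave on a logarithmic scale.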

The visuals were divided into two wide videos that were projected across the three screens. The second part needed to be a video as I was using filmed footage; however, the first part was mainly shaders and could have been a program. I did try to make an openFrameworks project, but because I was new to shaders I did not realise that my shaders could not be imported directly as they were written for WebGL. I spent some time trying to alter the code and include the files in different ways, but ran out of time. Ultimately, it was more important for me to have the various visuals than to have them sequenced in code. (Another reason it didn’t work could be my graphics card, as newer versions of GLSL aren’t available to me.)

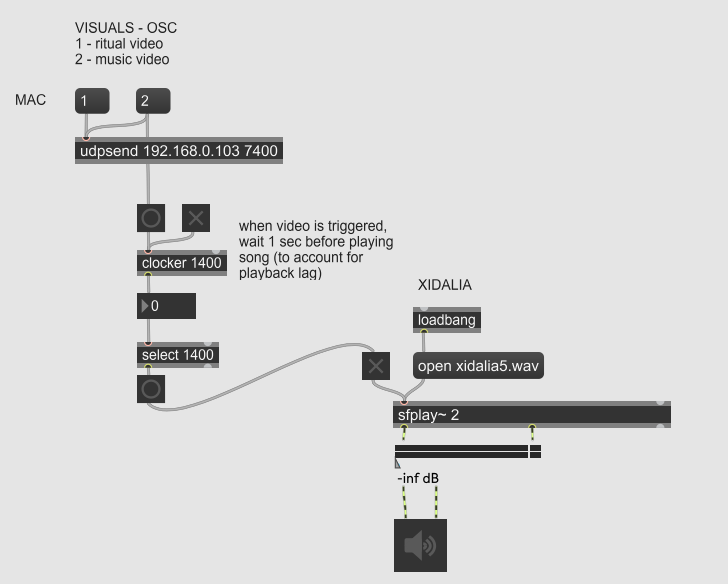

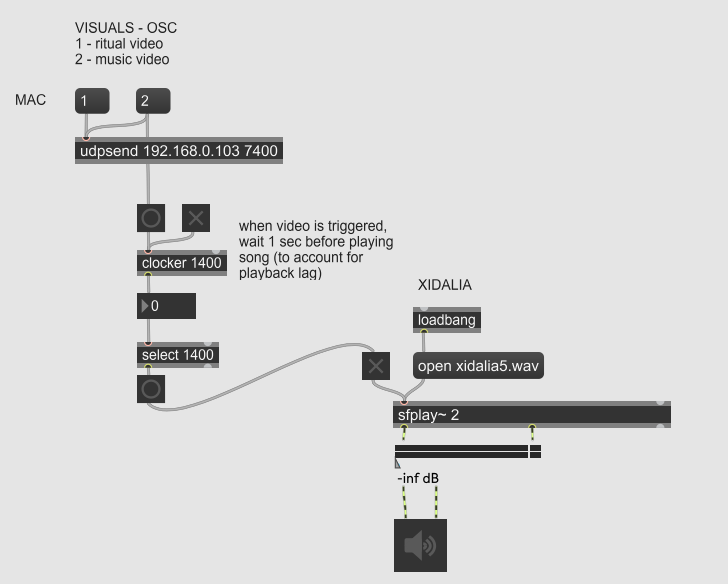

The videos were triggered live on stage by my Max patch. When I pressed a bang, it sent an OSC message to the Mac in G05, which triggered the playback of a video. That video was received by MadMapper through Syphon and finally projected (see image below). OSC (Open Sound Control) is a protocol for communicating between computers over a network. By using it, I could control the playback of my video from the stage. Syphon is a macOS framework that allows applications to share frames in real time, in this case Max and MadMapper. I chose this method, rather than connecting my laptop to the projectors directly, because my computer couldn’t handle running my Max patch and the visuals across three projectors simultaneously. This method spread some of the computational load while giving me the control I needed.
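Max's `udpsend` object takes care of the OSC encoding, so the following is only an illustration of what travels over the network when the bang fires. The C++ sketch encodes one OSC 1.0 message with a single int32 argument. The address `/video/play` and the argument values are hypothetical, because the actual addresses used in XIDALIA MEGAPATCH aren't documented here. Actually sending the packet would also need a UDP socket and the receiving Mac's IP and port, which are left out to keep the sketch self-contained.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Append an OSC-string: the ASCII bytes, at least one NUL terminator, then
// NUL padding so the string occupies a multiple of 4 bytes (OSC 1.0).
void appendOscString(std::vector<uint8_t>& out, const std::string& s) {
    const std::size_t padded = (s.size() + 4) / 4 * 4;  // room for >= 1 NUL
    out.insert(out.end(), s.begin(), s.end());
    out.insert(out.end(), padded - s.size(), uint8_t{0});
}

// Append a 32-bit integer in big-endian (network) byte order.
void appendInt32BigEndian(std::vector<uint8_t>& out, int32_t value) {
    const uint32_t u = static_cast<uint32_t>(value);
    out.push_back(static_cast<uint8_t>(u >> 24));
    out.push_back(static_cast<uint8_t>(u >> 16));
    out.push_back(static_cast<uint8_t>(u >> 8));
    out.push_back(static_cast<uint8_t>(u));
}

// Build a complete OSC message: address pattern, type tag string ",i",
// then the single int32 argument.
std::vector<uint8_t> makeOscIntMessage(const std::string& address, int32_t value) {
    assert(!address.empty() && address[0] == '/');
    std::vector<uint8_t> packet;
    appendOscString(packet, address);
    appendOscString(packet, ",i");
    appendInt32BigEndian(packet, value);
    return packet;
}
```

For instance, `makeOscIntMessage("/video/play", 1)` yields a 20-byte packet with three 4-byte-aligned parts:

- 12 bytes of padded address;
- 4 bytes of type tags (`,i` plus two NULs);
- the 4-byte big-endian integer.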

The bang that controlled the second video also controlled the playback of the song, as the video relied on being synced to the audio to ‘work’. There was always a lag between pressing the bang and the video being projected, and I found that delaying the song by 1.4 seconds worked well. This is another example of where using code was optimal, because I could set a short delay to compensate for the visual lag. What was initially a problem pushed me to create a system that optimised my work and provided a precision I could not achieve manually. Pressing a button to start the song when I saw the visuals appear on the projectors was an inconsistent and somewhat anxiety-inducing method: I had to be really quick, and it was never as precise as I needed.
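The compensation is a fixed offset. In Max it could be done by passing the bang through a `delay` object before it starts the song, though the exact wiring of the patch isn't documented here. The C++ sketch below only illustrates the timing logic, assuming the 1.4 s lag found in rehearsal. The struct and function names and the example sample rates are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// When each event fires, measured from the moment the bang is pressed.
struct TriggerSchedule {
    double videoTriggerSec;   // OSC message to the projection Mac goes out at once
    double audioStartSec;     // song start is held back to absorb the video lag
    long audioStartSample;    // the same offset expressed in audio samples
};

TriggerSchedule scheduleFromBang(double measuredVideoLagSec, double sampleRate) {
    assert(measuredVideoLagSec >= 0.0 && sampleRate > 0.0);
    TriggerSchedule s;
    s.videoTriggerSec = 0.0;
    s.audioStartSec = measuredVideoLagSec;
    // Round rather than truncate: products such as 1.4 * 44100 can land a
    // hair below the whole number in floating point.
    s.audioStartSample = std::lround(measuredVideoLagSec * sampleRate);
    return s;
}
```

At a hypothetical 44.1 kHz, a 1.4 s lag corresponds to 61,740 samples; at 48 kHz it is 67,200.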

In the interest of brevity, I will focus on one example each for the audio and the visuals.

Max noises

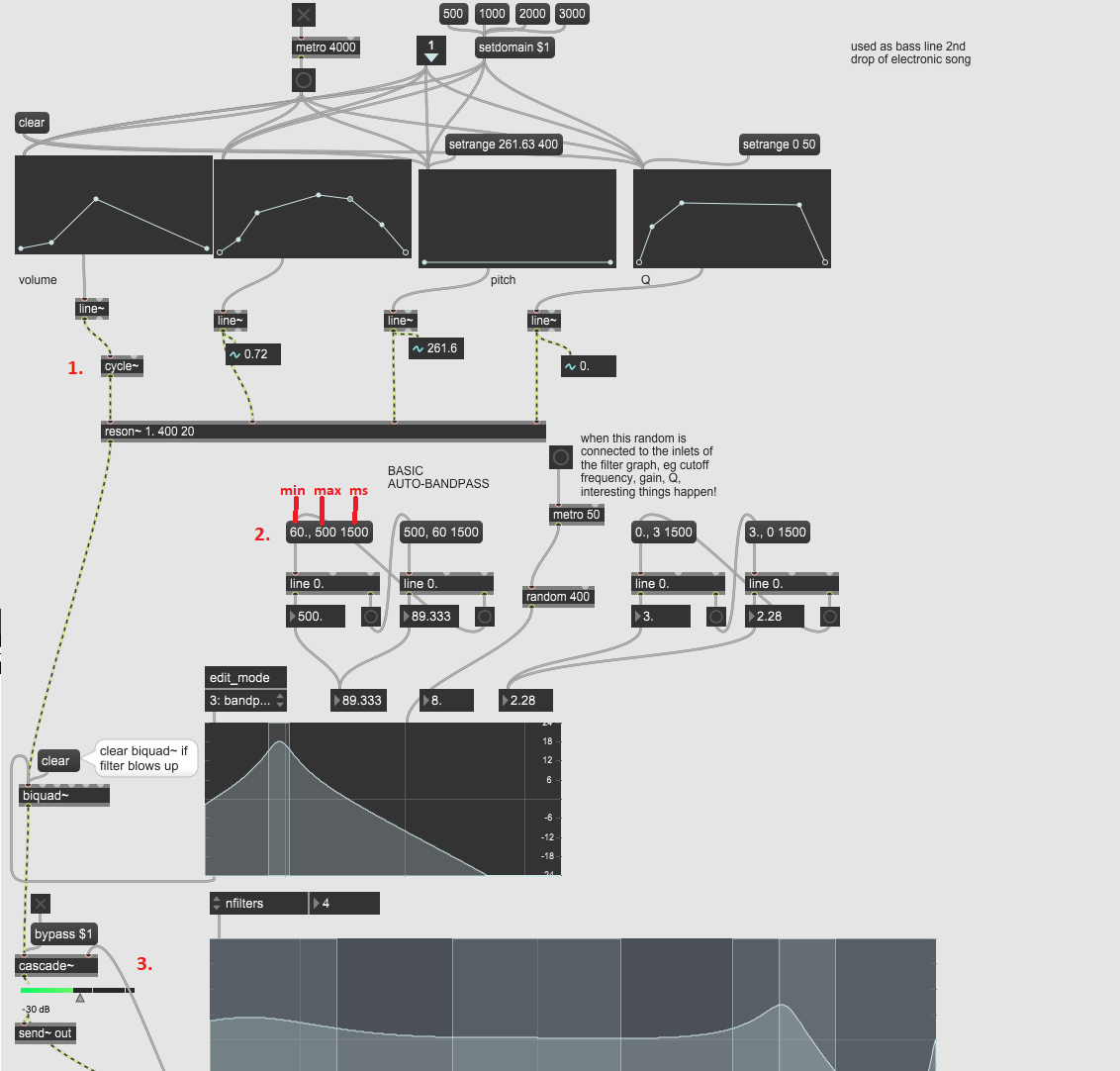

I began my noise/bass exploration by playing with percussive sounds, trying to recreate snares and kicks. However, I realised this wasn’t something I needed to make, as there are many samples I could use that would do a better job (at least with my skills so far). The only sounds I felt I couldn’t make any other way, with such a degree of precision, were the bass sounds. Using code meant I could create the sounds I needed from scratch, giving me a more intimate connection to the sound. The drums and synths you hear in the song are all samples and sounds synthesised in Ableton. You can hear the Max sounds in the first and second drops and in the lead-up to the second drop, a drop being “the moment in a dance track when tension is released and the beat kicks in” (Yenigun, 2010).

Below are two examples of noises I synthesised in Max that were used in the electronic composition; the patch is shown in the image above. Unfortunately I didn’t record the screen while experimenting. The noises were made using a subpatch called “sin ting” inside the “noise” subpatch of “XIDALIA MEGAPATCH”. To make the clips, I played with parameters, occasionally setting some to randomly changing values, while recording with the Quickrecord function in Max. I then cut out the parts I enjoyed and processed them in Ableton. I found that using random values for certain coefficients created a percussive sound. I also deliberately disrupted the flow by pressing certain message buttons too many times, creating strange glitches that I found useful.

You can hear the unprocessed recording first, then as it is heard in the final track. The first clip has quite minimal processing - I used overdrive and EQ to amplify certain frequencies, and sped up the clip to fit the BPM of the track. The second clip is processed through EQ, amp, overdrive and sidechain compression effects on top of cutting, repositioning and transposing.

Max sound 1, as heard at 2:18 https://soundcloud.com/valeria-a/xidalia

Max sound 2, as heard at 2:47

As seen in the image above, the sounds were generated using a sine wave (1, the “cycle~” object). This was filtered through an automated bandpass filter (2) and then processed through another EQ effect (3, the “cascade~” object). I created the automated bandpass filter to make automating EQ easier and more precise, something I realised I needed when processing the bass sounds in Ableton. The effect was achieved using line objects. The minimum and maximum frequencies (in Hz) and a time in milliseconds (how long it would take to get from the minimum to the maximum) were set as messages. These changed the values of certain filter coefficients and increased or decreased the volume as the resonance became narrower. A lot of the nuance and complexity of bass sounds in drum and bass music comes from different effects being modulated in different ways over time, stacked on top of each other. By programming these changes, I could achieve a fluidity and precision that would be more difficult to create with a mouse. This effect was also used in the chord subpatch and was an option for the sine beating patterns (though I only used it in some of the performances).
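The first two stages of this chain can be approximated outside Max. The C++ sketch below uses stand-ins under stated assumptions:

- a sine oscillator plays the role of `cycle~`;
- a standard bandpass biquad (the Audio EQ Cookbook formulas, which may differ from the filter inside the patch) plays the role of the automated bandpass;
- a linear ramp that reaches its target and then holds plays the role of the line objects.

The volume compensation and the `cascade~` stage are left out, and every parameter value is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Bandpass biquad (Audio EQ Cookbook, constant 0 dB peak gain), Direct Form I.
class BandpassBiquad {
public:
    void setParams(double centreHz, double q, double sampleRate) {
        const double kPi = 3.14159265358979323846;
        const double w0 = 2.0 * kPi * centreHz / sampleRate;
        const double alpha = std::sin(w0) / (2.0 * q);
        const double a0 = 1.0 + alpha;
        b0_ = alpha / a0;              // b1 is zero for this filter type
        b2_ = -alpha / a0;
        a1_ = -2.0 * std::cos(w0) / a0;
        a2_ = (1.0 - alpha) / a0;
    }
    double process(double x) {
        const double y = b0_ * x + b2_ * x2_ - a1_ * y1_ - a2_ * y2_;
        x2_ = x1_; x1_ = x;            // shift input history
        y2_ = y1_; y1_ = y;            // shift output history
        return y;
    }
private:
    double b0_ = 0, b2_ = 0, a1_ = 0, a2_ = 0;
    double x1_ = 0, x2_ = 0, y1_ = 0, y2_ = 0;
};

// A sine at sineHz through a bandpass whose centre frequency moves linearly
// from minHz to maxHz over rampMs, then holds at maxHz.
std::vector<double> sweptSine(double sineHz, double minHz, double maxHz,
                              double rampMs, double q, double sampleRate,
                              int numSamples) {
    assert(sampleRate > 0.0 && q > 0.0 && rampMs > 0.0 && numSamples >= 0);
    const double kPi = 3.14159265358979323846;
    const double rampSamples = rampMs * 0.001 * sampleRate;
    BandpassBiquad filter;
    std::vector<double> out(static_cast<std::size_t>(numSamples));
    for (int n = 0; n < numSamples; ++n) {
        const double progress = std::min(1.0, n / rampSamples);
        const double centre = minHz + (maxHz - minHz) * progress;
        filter.setParams(centre, q, sampleRate);  // recomputed every sample
        const double x = std::sin(2.0 * kPi * sineHz * n / sampleRate);
        out[static_cast<std::size_t>(n)] = filter.process(x);
    }
    return out;
}
```

The coefficients are recomputed on every sample to keep the sketch short. A real-time version would more likely update them every few samples. When the sweep sits on the sine's own frequency, the output settles back to the input level, because this bandpass design has unity gain at its centre.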

Video processing

I created three different effects to use with video footage in the second part of the performance, mainly to do with changing colour, moving pixels and combining the pixels of multiple videos. I didn’t expect exporting the footage to be so difficult. Firstly, my computer is quite slow, which hindered performance. I tried different ways of exporting, but for the videos to retain their quality (which was already compromised by processing), I was advised to export frame by frame. This added to the computational load, so frames were skipped. My solution was to slow the clips down individually before using them, and then slow them down further, if needed, in the openFrameworks project. The exported frames were then nested and sped up in Premiere Pro. In the future, I’m interested in finding a more programmable process, as this one was slow. However, working this way gave me a more intimate relationship with the material, as I would look through every single frame, altering things slightly in the code and customising parameters for each clip. In the end it worked, because I needed a wide variety of short clips that would change quickly in time with the song.

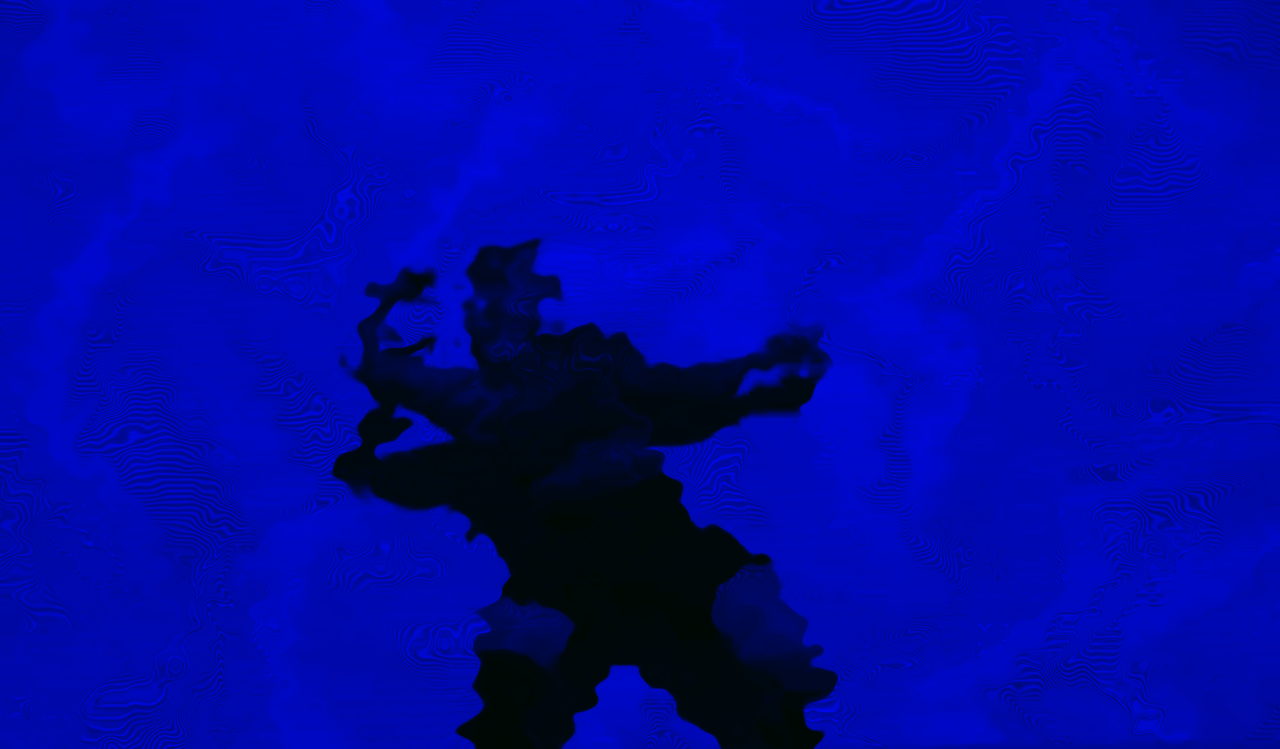

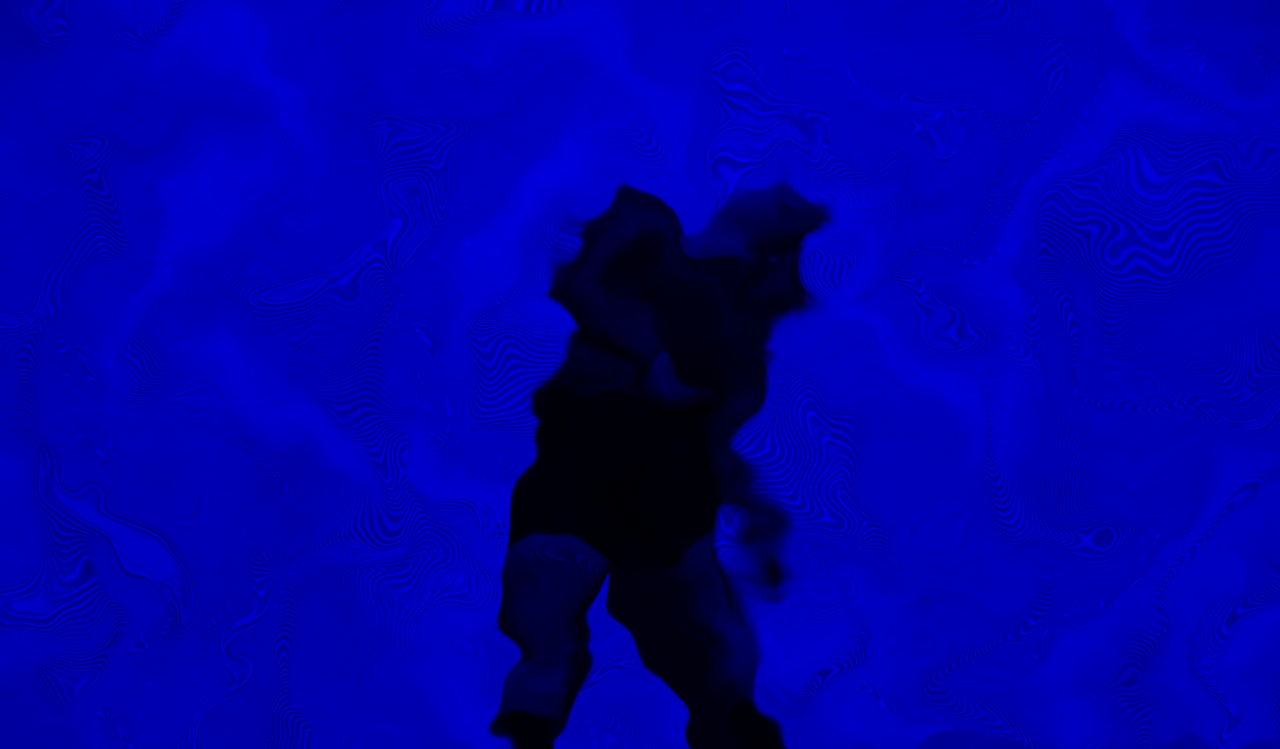

One of the video effects I used most was called vidSynth (see frames below). I modified the Video Camera Synth example from Mastering openFrameworks: Creative Coding Demystified (Perevalov, 2013). The result is an effect that uses the colour of one video clip to shift the pixels of another; in the original example, live video pixels modified the video. I combined footage of myself dancing with clips of the shader animations for their colour and movement, customising the parameters for each clip.

Images 1-2 show how I used the shader animations for their colour, using the following line to shift the x coordinates of the shader pixels:

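```cpp
// sin() stays within [-1, 1], so it changes the truncated result by at most one pixel; the red + green sum (0 to 510) does most of the shifting
int x1 = sin(x + ofGetFrameNum()*2) + (colorGrab.r + colorGrab.g);
```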

Images 3-4 show the shaders used for their movement, twisting and distorting my body, almost as if I’m turning into bubbles, this time using:

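```cpp
// Offset x by the driving clip's blue channel; with 8-bit colour (0 to 255) the shift ranges from -50 to +205 pixels
int x1 = x + (colorGrab.b - 50);
```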
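Perevalov's example and the full openFrameworks project aren't reproduced here. As a framework-free illustration of the second line above, the C++ sketch below applies the same idea to plain RGB buffers. The blue channel of a “driver” frame, standing in for a shader clip, decides how far each output pixel reaches sideways into a “body” frame, standing in for the dance footage. Several details are assumptions:

- the `RgbImage` type;
- clamping at the frame edges;
- the direction of the lookup (each output pixel reads from `x1`, rather than being written to `x1`).

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Minimal RGB image: width * height pixels, 3 bytes each, row-major.
struct RgbImage {
    int width = 0, height = 0;
    std::vector<uint8_t> data;
    RgbImage(int w, int h)
        : width(w), height(h), data(static_cast<std::size_t>(w) * h * 3, 0) {}
    uint8_t* at(int x, int y) {
        return &data[(static_cast<std::size_t>(y) * width + x) * 3];
    }
    const uint8_t* at(int x, int y) const {
        return &data[(static_cast<std::size_t>(y) * width + x) * 3];
    }
};

// Each output pixel copies the body pixel at x1 = x + (blue - blueOffset),
// where blue comes from the driver frame; x1 is clamped to the frame width.
RgbImage displaceByBlue(const RgbImage& body, const RgbImage& driver,
                        int blueOffset = 50) {
    assert(body.width == driver.width && body.height == driver.height);
    RgbImage out(body.width, body.height);
    for (int y = 0; y < body.height; ++y) {
        for (int x = 0; x < body.width; ++x) {
            const int blue = driver.at(x, y)[2];
            int x1 = x + (blue - blueOffset);
            x1 = std::max(0, std::min(x1, body.width - 1));
            std::copy(body.at(x1, y), body.at(x1, y) + 3, out.at(x, y));
        }
    }
    return out;
}
```

A driver frame whose blue channel is exactly 50 everywhere leaves the body frame unchanged. Brighter blue areas pull pixels in from the right, and this stretching is the source of the bubbling distortion. Replacing `blue - blueOffset` with red + green (and the sine term) would give a variant closer to the first line.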

Generally, I wanted to contrast moments of beauty with moments of oddity and intensity (sonically as well as visually). This is reflected not only in how I treated the footage but also in how it was projected, specifically the strobing effect achieved through rapid changes in colour and ‘bouncing’ the videos between screens. I wanted to embody the ecstatic experience, to remind people to explore the potential of being human, the strange phenomenon of consciousness, while we still have the chance. But I also wanted to express complexity and tension through things looking and sounding twisted and distorted, reflecting the idea that a perfect utopia is neither possible nor useful to dream about. I believe we need the push-pull of struggle and comfort, just not to the degree we have now. Our current systems of living put an immense amount of pressure on the environment and cause unnecessary suffering through inefficiency, for example our food production system, which relies on overproduction and disposing of the excess.

I’ve made music videos before where I relied on what was available in Premiere Pro for changing colour and playing with green-screen effects, but I’ve never seen anything like what I created with these coded effects. They gave me something new to play with, a more intimate relationship with pixels and more control over their behaviour. It is worth mentioning that I created a lot of visuals that didn't make the cut. Examples include shaders that didn't work well in the space because they were too dark or intense, and processed footage that missed too many frames or worked better as stills than as part of the video. I have included some examples below.

Future development

In the future, I’d like to extend the piece to really give each part space to breathe. For example, 5 minutes is not really enough time to induce trance-states, and the different sections of the electronic composition could be expanded into separate songs.

I would also like to improve the quality of the visuals, for example by running the shaders in real time with a blending function, and by finding a way to export frames in openFrameworks at a higher resolution than my laptop screen. In terms of the effects themselves, I would like to experiment with optical flow and develop a better understanding of how pixels are drawn, as I’m interested in data-moshing and crossing videos in a more precise way. It would also be interesting to programme the effects in a way that reduces the amount of editing afterwards. This could mean triggering different clips in an audio-reactive way and exporting the processed footage directly as video.

In terms of the audio, I would like to challenge myself to synthesise more samples, for instance kick drums, snares and strings. Furthermore, I would like to refine the sine singing bowls by playing with the timbre and adding harmonics to replicate the complexity of the real instrument. I would also like to develop the performance itself by including some hardware and perhaps sequencing different sounds in a more complex way so I can focus on singing. It would also be interesting to create unique vocal processing effects.

Self evaluation

As an overall piece, I think it was ambitious, and I achieved what I set out to do: to create an immersive audio-visual performance that took people on a journey. The post-apocalyptic narrative doesn’t come through strongly as a story about going somewhere. However, I think it works in terms of becoming something other, a layer of meaning that emerged through working on the piece. In essence, I feel it’s more about XIDALIA as a character, as the story of what will happen to me, than as a world.

I believe there was good use of the space in terms of playing with the video appearing on different screens, and that the music was well-composed – I think my musical skills developed a considerable amount through working on this project as I researched and taught myself what I needed to know, such as EQ and compression techniques, which were necessary in order for the music to sound good in the space.

In terms of the code, the individual sections were quite simple but there were a lot of different elements, which worked for this kind of piece. The system I created for myself was easy to use live and reduced the stress of performing through being able to control both the audio and the visuals from the stage. I think the digital singing bowls worked especially well in the space.

As described under Future development, I would have liked a higher resolution for the shader graphics (which could be achieved by running them live) and for the processed video frames, considering how large the projections were. Working frame by frame was slow and arduous and required a lot of back and forth. I would cut clips on the library computers, process them on my laptop, find that I needed to crop a clip or make it shorter, then process it again, and so on. Optimising this process would save time in the long run, time I could spend on more creative decisions. Additionally, one of the shaders created interesting patterns when tiled (see 2:53 in the video, or the thumbnail for this project). I could do this in code as my shader skills develop, but for this project it was done in Premiere by cutting and horizontally flipping the clip.
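The mirrored tiling done by hand in Premiere reduces to a small coordinate transformation. A shader could apply it to its texture coordinates before sampling. It is written below in C++ for consistency with the rest of the code here. The function name and the assumption that the shader works in normalised [0, 1) coordinates are illustrative.

```cpp
#include <cassert>
#include <cmath>

// Map a horizontal coordinate u in [0, 1) across `tiles` repeats, flipping
// every other tile so neighbouring copies meet as mirror images (the
// cut-and-flip done by hand in Premiere). Returns a coordinate in [0, 1].
double mirrorTileU(double u, int tiles) {
    assert(tiles > 0);
    const double scaled = u * tiles;
    const double tileIndex = std::floor(scaled);
    const double local = scaled - tileIndex;                     // position inside the tile
    const bool flipped = static_cast<long>(tileIndex) % 2 != 0;  // odd tiles are mirrored
    return flipped ? 1.0 - local : local;
}
```

In GLSL the same logic would use the built-in `floor`, `fract` and `mod` functions: flip the local coordinate whenever `mod(floor(uv.x * tiles), 2.0)` equals 1.0.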

References

Literary/theory

Haraway, D. J. (2016). Staying with the trouble: making kin in the Chthulucene. Durham: Duke University Press.

Piercy, M. (1976). Woman on the edge of time. New York: Fawcett Crest.

Preciado, P. B. (2013). Testo junkie: sex, drugs, and biopolitics in the pharmacopornographic era. New York, NY: Feminist Press at the City University of New York.

Slonczewski, J. (2016). A door into ocean. Place of publication not identified: Phoenix Pick.

Starhawk. (1997). Dreaming the dark: magic, sex, and politics. Boston, MA: Beacon Press.

Articles

The 5 Deadliest Drops of 2010 by Yenigun https://www.npr.org/2010/12/31/132490270/the-5-deadliest-drops-of-2010?t=1568653028266

Saturdays Live: Ayesha Tan-Jones, Parasites of Pangu https://www.serpentinegalleries.org/exhibitions-events/saturdays-live-ayesha-tan-jones-parasites-pangu

Music Hackspace: Ritual Laboratory Summer Series https://www.somersethouse.org.uk/whats-on/music-hackspace-ritual-laboratory-summer-series

Blogposts

Shelters by Bingxin Feng http://doc.gold.ac.uk/compartsblog/index.php/work/shelters/

Data Moshpit by Claire Kwong http://doc.gold.ac.uk/compartsblog/index.php/work/data-moshpit/

Max patches

Max built-in tutorials on filtering sound

OSC Syphon tutorial by 1024 https://1024d.wordpress.com/2011/06/10/maxmspjitter-to-madmapper-tutorial/

Percussion Synth Tutorial by MUST1002 https://www.youtube.com/watch?v=JO8OwdE-Rzw

SIN BEATING based on the "Frequency Modulation" patch from Atau Tanaka's Performance module

Musicians/bands

(some of my sounds were created through experimenting with trying to recreate sounds/moods by these artists)

Noisia, Mr Bill, SOPHIE, Former, Klahrk, Bleep Bloop, Sevish, Iglooghost, Hiatus Kaiyote

Shader Graphics

To teach myself about shaders, I used the following resources:

The Book of Shaders by Patricio Gonzalez Vivo and Jen Lowe https://thebookofshaders.com/

Introducing Shaders by Lukasz Karluk, Joshua Noble, Jordi Puig https://openframeworks.cc/ofBook/chapters/shaders.html

Shader Tutorial Series by Lewis Lepton https://www.youtube.com/playlist?list=PL4neAtv21WOmIrTrkNO3xCyrxg4LKkrF7

I used episode 15, water color 2, as a foundation for my shaders https://www.youtube.com/watch?v=ye_JlwUIyto&list=PL4neAtv21WOmIrTrkNO3xCyrxg4LKkrF7&index=17&t=0s

Crystal Caustic by Caustic https://www.shadertoy.com/view/XtGyDR

Video Processing Effects in openFrameworks

Perevalov, D. (2013). Mastering openFrameworks: Creative Coding Demystified http://b.parsons.edu/~traviss/booKs/oF/Mastering%20openFrameworks%20-%20Yanc,%20Chris_compressed.pdf. The examples I used were: Replacing Colors, Horizontal Slitscan, Shader Liquify and Camera Video Synth

Special thanks to everyone in G05 for providing constructive criticism, technical support and for generally being encouraging: Adam He, Andy Wang, Ben Sammon, Chris Speed, Christina Karpodini, David Williams, Taoran Xu.