Eflowtion

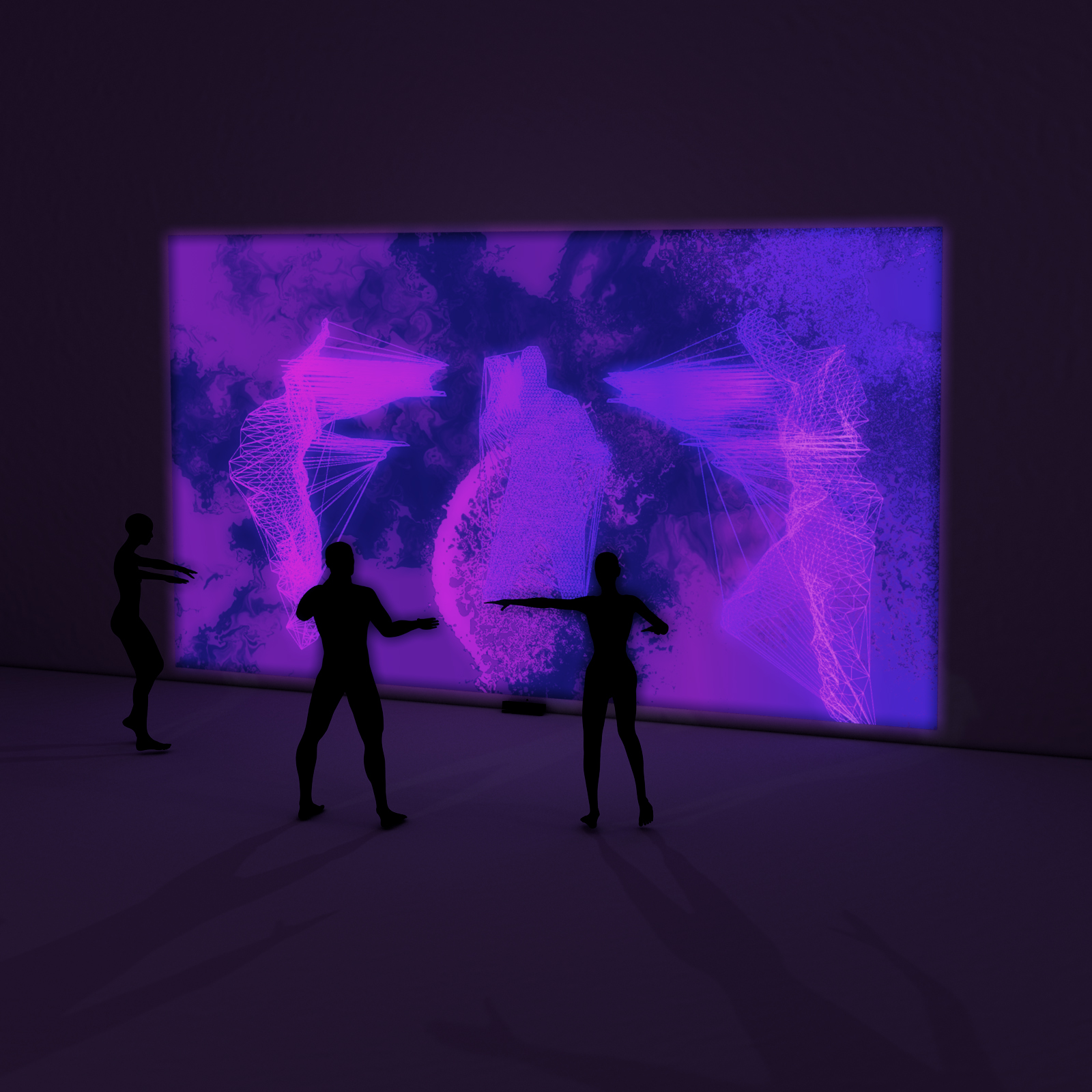

Eflowtion is an interactive projection that simulates states of intense emotion through the user’s poses.

produced by: Chris Speed

Introduction

I came up with this concept a few months back, as I was disappointed with my submission for last term and wanted to make something that truly challenged me this time around. Using only code, my goal was to make something on the same aesthetic level as the work I produce outside of university [1], which was no small feat. However, I was surprised to find that the elements I expected to be difficult were simple, and vice versa. There were many code examples online where people had already done the hard work, so I just had to piece everything together to create my own interactive system.

Concept and background research

Originally my concept was to have two distinct modes symbolising happy and angry emotions, but this seemed too broad to communicate effectively. A Google search turned up stock images showing that raised arms can represent both emotions. I therefore decided to base the interaction on power stances alone, as body language has been reported to be an effective mood enhancer [2]. This would hopefully encourage users to move their bodies in a playful manner. The meaningful interaction of this piece revealed itself to me through a process of discovery. As a computational artist, I gained many practical skills in topics such as computer vision, machine learning and connectivity.

For this project the work of Memo Akten [3] proved to be an invaluable resource, particularly his research into fluid simulations for C++. The creations of Myron Krueger in his 1970s laboratory Videoplace [4] were also a strong influence on my initial design choices. Reading the books Making Things See [5] and Kinect Hacks [6] turned me on to many other artistic techniques using these depth cameras. Later, I also rediscovered my love for the work of Robert Hodgin, particularly his project ‘Body Dysmorphia’ [7].

Technical

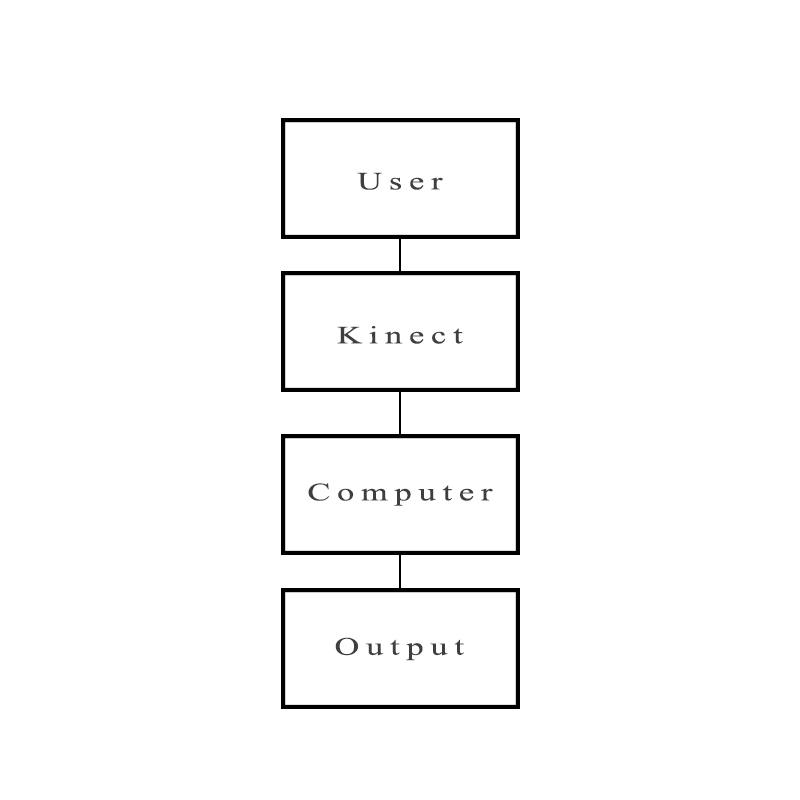

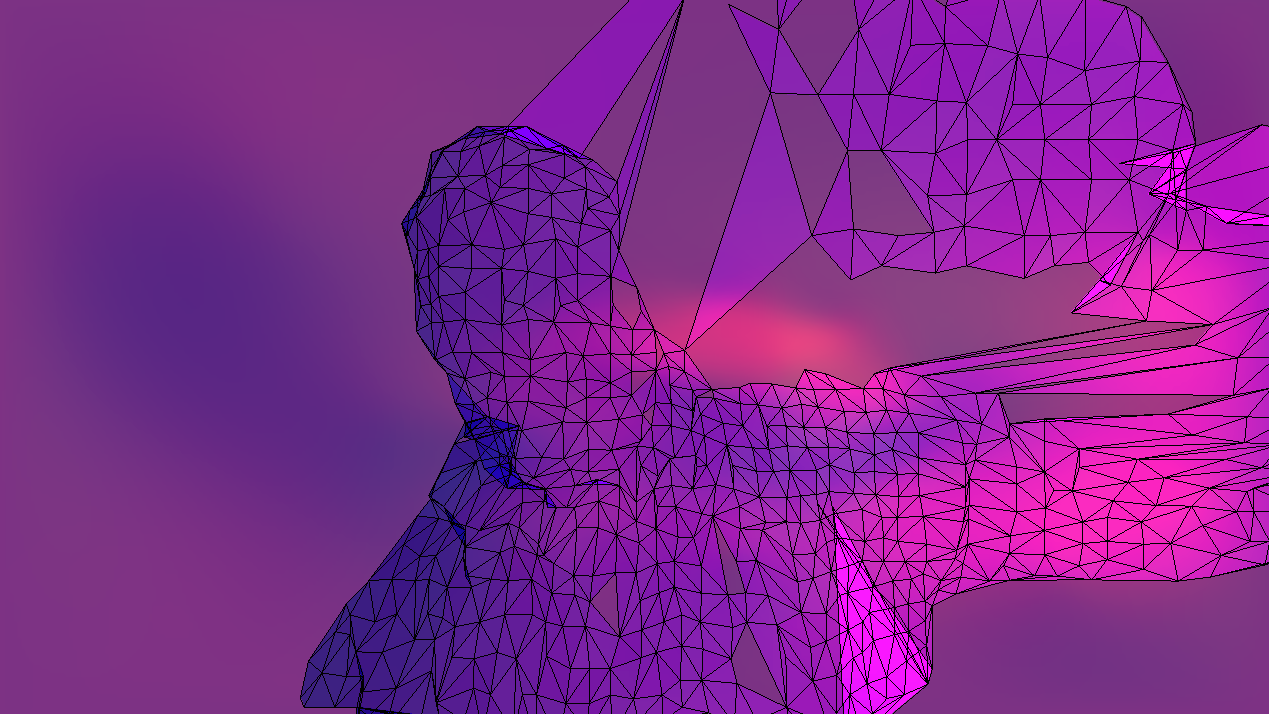

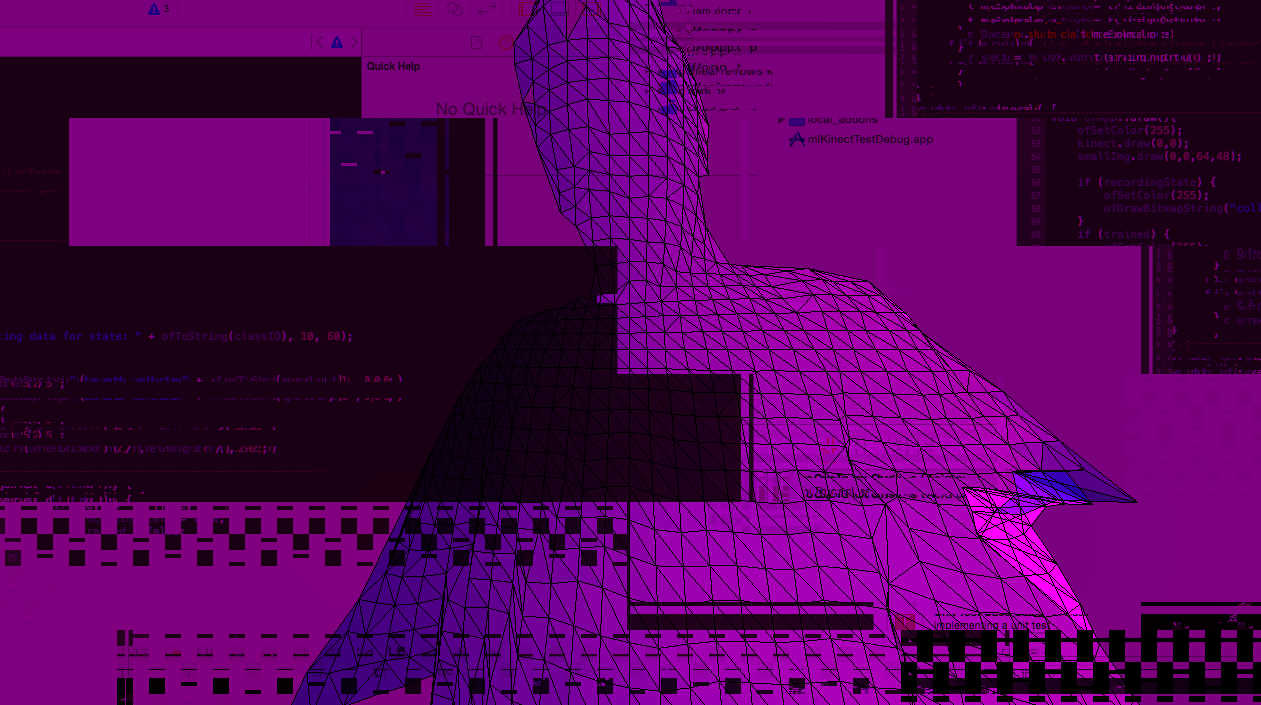

The interaction of Eflowtion is based on a single Kinect that records physical input and is trained to recognise ‘power stances’ through a custom openFrameworks addon I made called ofxKinectDetect. The points from the RGB image are triangulated to render a 3D mesh of the user, which is surrounded by an optical-flow-based fluid simulation. Whenever you raise your arms to the machine, it responds with a sudden glitch. I chose the Kinect because I have worked with it before and value its high depth data range at an affordable price [8].

I began with an example found online by Kamen Dimitrov [9] in which he used ofxDelaunay to triangulate the Kinect’s points. When I was happy with my alterations, I ported in some code from ofxFlowTools [10]. As before, I removed all of the unneeded functionality and got it to work with the Kinect instead of a webcam input. I soon hit a wall, however, when I realised that skeleton tracking would be essential for recognising gestures and that this option was now deprecated on Mac OS. Luckily, some feedback directed me to use machine learning as an alternative. Originally I considered using Wekinator and watched many of Rebecca Fiebrink’s Kadenze videos on the subject [11]. However, we had already covered this as a lab assignment in class [12], so I instead took the ‘ofxHandDetector’ addon we made, converted it to work with the Kinect as a way of classifying gestures, and used the resulting state changes to trigger the displacement. After debugging and optimising my code, I found some time to look briefly into GLSL shaders [13,14] but found them difficult to integrate into my project. Instead I opted to use ofxPostGlitch by maxillacult [15] and mapped it to the data coming from ofxKinectDetect.
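The idea of turning classifier state changes into a one-off trigger can be sketched in plain C++. This is only a minimal illustration and not the code from ofxKinectDetect: the `Pose` labels, the `PoseTrigger` class and the `framesToConfirm` parameter are all hypothetical names, and the debounce step (waiting for several consistent frames before accepting a new state) is an assumption added here to cope with a noisy classifier.

```cpp
#include <cassert>

// Hypothetical labels that a pose classifier might emit once per frame.
enum class Pose { Neutral, PowerStance };

// Debounced edge detector (illustrative, not the author's addon):
// reports a trigger only on the frame where the confirmed pose
// switches from Neutral to PowerStance.
class PoseTrigger {
public:
    explicit PoseTrigger(int framesToConfirm)
        : framesToConfirm_(framesToConfirm) {
        assert(framesToConfirm >= 1);
    }

    // Feed one classifier result per frame. Returns true exactly once
    // per confirmed Neutral -> PowerStance transition.
    bool update(Pose observed) {
        if (observed == candidate_) {
            if (streak_ < framesToConfirm_) ++streak_;
        } else {
            // A different label restarts the confirmation count.
            candidate_ = observed;
            streak_ = 1;
        }
        if (streak_ >= framesToConfirm_ && candidate_ != stable_) {
            stable_ = candidate_;
            return stable_ == Pose::PowerStance;
        }
        return false;
    }

    // The most recently confirmed pose.
    Pose stable() const { return stable_; }

private:
    int framesToConfirm_;
    Pose candidate_ = Pose::Neutral;
    Pose stable_ = Pose::Neutral;
    int streak_ = 0;
};
```

In an openFrameworks app, something like `update()` would presumably be called once per frame with the classifier's latest label, and a `true` result would switch the glitch effect on. Single-frame misclassifications never reach the effect, at the cost of a delay of `framesToConfirm` frames.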

Future development

There are many opportunities to develop this work further, but unfortunately I did not have the time to realise these extensions. For instance, I really wanted to include a feature that categorised the speed of gestures through an algorithm such as dynamic time warping. I wanted to use these changes over time to store previous frames in an array and create a time echo effect from the Kinect feed. This would hopefully turn out like the live visuals Flight404 (Robert Hodgin) created for an Aphex Twin performance [16].
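For reference, the core of dynamic time warping is small enough to sketch in standard C++. The version below is a textbook formulation rather than anything implemented for this project: it compares two one-dimensional sequences (for example, a hand's height sampled per frame, which is an assumed input) using absolute difference as the local cost. The function name `dtwDistance` is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Classic O(n*m) dynamic time warping distance between two 1D sequences.
// Local cost is the absolute difference between samples. Only two rows of
// the cumulative cost table are kept in memory at a time.
double dtwDistance(const std::vector<double>& a, const std::vector<double>& b) {
    const double inf = std::numeric_limits<double>::infinity();
    const std::size_t n = a.size();
    const std::size_t m = b.size();

    std::vector<double> prev(m + 1, inf);
    std::vector<double> curr(m + 1, inf);
    prev[0] = 0.0;  // aligning two empty prefixes costs nothing

    for (std::size_t i = 1; i <= n; ++i) {
        curr[0] = inf;  // a non-empty prefix cannot align with an empty one
        for (std::size_t j = 1; j <= m; ++j) {
            const double cost = std::fabs(a[i - 1] - b[j - 1]);
            // Extend the cheapest of: step in a only, step in b only, step in both.
            curr[j] = cost + std::min({prev[j], curr[j - 1], prev[j - 1]});
        }
        std::swap(prev, curr);
    }
    return prev[m];
}
```

Because the alignment stretches and compresses time, a slow and a fast performance of the same movement score as near-identical. The distance alone therefore says whether two gestures have the same shape, not how fast they were; categorising speed would additionally need the warping path or the raw durations.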

Self evaluation

Nevertheless, I am satisfied with how the final product turned out. This time I put in much more effort and I am proud to include it in my portfolio. From now on, I intend to dig deeper into OpenGL for future projects [17].

References

1. Chris Speed Visuals, [Accessed April 2018], http://chrisspeedvisuals.co.vu

2. YouTube, 2012, [Accessed April 2018], https://www.youtube.com/watch?v=Ks-_Mh1QhMc

3. ofxMSAFluid, Memo Akten, [Accessed April 2018], http://www.memo.tv/ofxmsafluid/

4. YouTube, 2008, [Accessed April 2018], https://www.youtube.com/watch?v=dmmxVA5xhuo

5. Borenstein, G. ed., (2012). Making Things See 3D Vision with Kinect, Processing, Arduino, and MakerBot. Available at: https://makingthingssee.com/ [Accessed April 2018].

6. St. Jean, J. ed., (2013). Kinect Hacks. Available at: http://shop.oreilly.com/product/0636920022657.do [Accessed April 2018].

7. Body Dysmorphia, Robert Hodgin, [Accessed April 2018], http://roberthodgin.com/portfolio/work/body-dysmorphia/

8. Litomisky, K. (2012) Consumer RGB-D Cameras and their Applications. University of California: Riverside, p.8. Available at: http://alumni.cs.ucr.edu/~klitomis/files/RGBD-intro.pdf [Accessed April 2018].

9. GitHub, 2013, [Accessed April 2018], https://github.com/kamend/KinectDelaunay

10. GitHub, 2017, [Accessed April 2018], https://github.com/moostrik/ofxFlowTools

11. Kadenze, [Accessed April 2018], https://www.kadenze.com/courses/machine-learning-for-musicians-and-artists-v

12. Papatheodorou, T. (2018) ofxHandDetector - make a classifier addon (gentler), Workshops in Creative Coding 1.

13. Shaders, ofBook, [Accessed April 2018], http://openframeworks.cc/ofBook/chapters/shaders.html

14. Perevalov, D. ed., (2013). Chapter 8: Using Shaders. Mastering openFrameworks - Creative Coding Demystified. Available at: https://www.packtpub.com/application-development/mastering-openframeworks-creative-coding-demystified [Accessed April 2018].

15. GitHub, 2013, [Accessed April 2018], https://github.com/maxillacult/ofxPostGlitch

16. Aphex Twin NYE, Robert Hodgin, [Accessed April 2018], http://roberthodgin.com/portfolio/work/aphex-twin-nye/

17. The Book of Shaders, 2015, [Accessed April 2018], https://thebookofshaders.com/