Talking to my Brother

Jonas Grünwald

Introduction

'Talking to my Brother' is an interface between an individual and a Brother MFC-8460N Multi-function printer.

The room installation explores an alternative, more intimate way of interacting with a commonplace consumer electronics device.

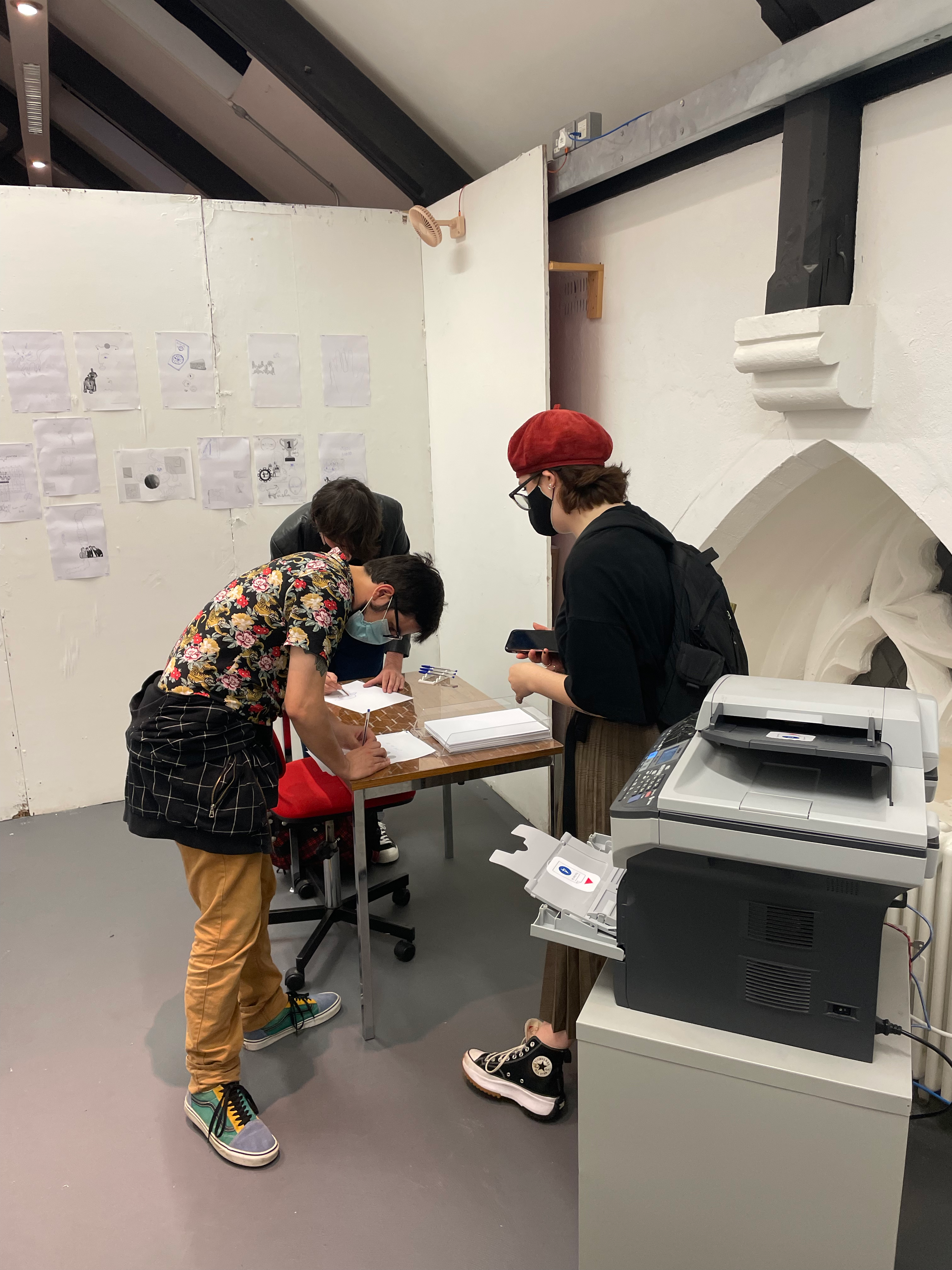

An individual feeds a message on a piece of paper into the printer to be scanned, and then allows the printer to respond by placing the paper back into the paper feeder.

The printer then prints a response on the same paper and passes it back to the individual, who may then respond once again and repeat the cycle, creating a dialogue.

Brother is a connected device. It bases its responses on content that other individuals have created, published, and distributed on the internet. This process of copying and reproducing media reflects the original role of the telecopying machine before the widespread adoption of the internet. Building on ideas from systems theory and cybernetics, the work attempts to create a network in which the device and the human interacting with it are equal participants.

Concept and background research

The project aims to subvert visitors' expectations of a generic office device, which is not usually seen as an entity capable of acting with agency or of producing unexpected content in response to human interaction. It presents an alternative system of human-machine interaction in which both human and machine are subjects, and the interaction takes the form of a conversation or dialogue.

The Network

In the framework that we are used to, printers act as servants - inanimate objects that fulfill jobs sent to them according to the exact specifications provided by the device controlling them.

In the installation, however, the printer at its center is elevated to a subject rather than an object, building upon the ideas of early cybernetic theorists and practitioners, who envisioned a future in which our relationship with machines could transcend the concepts of autonomy and selfhood.

In accordance with the ideas of third-order cybernetics as first established in 1980, the installation attempts to create a system in which the boundaries between human and machine are breached, through a feedback loop which includes the human observer as an equal node to the machine, as well as connecting all humans interacting with the machine to each other, and to other individuals through the internet.

Human observers are able to send messages to the machine and receive a response. Both entities can initiate the exchange: the machine does so when the human observer feeds it a blank sheet of paper, at which point it autonomously creates an initial message.

In the network, the responses the machine generates are based on human or computer-generated content that has been published on the internet, thereby creating a connection between the human observer in the physical space, and other human or computational entities who authored the published content.

The machine collects memory fragments from each human observer feeding messages into it, which are needed for internal technical reasons, but are also used to later respond to other human observers entering the physical space and interacting with the machine. Through this process, the human observer entering the space also changes the nature of the network and its interactions with other participants, creating the mentioned feedback loop.

The nature of interaction with Brother is entirely different from what we would expect from a printer. There are no digital displays to send information from the device to the human (the built-in printer status display does not contribute to the actual exchange), and there are no buttons to send information from the human to the device (the built-in buttons are not used at all in the exchange). The only interface between the human and the device is a piece of paper, which the human draws or writes their message on and then feeds into the printer to receive a response.

The exchange is intended to be a ritual performance of a set of physical actions, as outlined by a paper guide booklet made to look like a generic printer manual and containing all the steps needed for the interaction between device and human. The decision not to include any digital displays or other interface elements in the installation was a conscious one: the focus should be on this raw, paper-based process of communication.

The Machine

The idea of centering the installation around a generic looking office device comes from a long personal fascination with seemingly "boring" overlooked consumer electronics devices, and the idea that they could go beyond what they were designed and built to do and offer alternative routes of interaction that no one would expect from them.

In my personal practice, I also tend to concern myself with the very internet-based idea of discovering, reproducing and resurrecting imagery published, copied, modified and shared by humans online. This aligns with the core ideas of the installation, which makes heavy use of these types of images in the response generation process. Drawing on the ideas outlined by Hito Steyerl in "In Defense of the Poor Image", this process embraces the qualities of copied and modified images of all sizes and resolutions, and aims to explore and unearth these images from the depths of the internet and weave them into the exchange between human and machine.

The device itself was also chosen in this context, the photocopy / fax machine being one of the first entities to make reproduction of visual messages accessible to everyone, before widespread adoption of the internet, bringing the idea of copied / reproduced images and documents into common consciousness.

The Environment

The room containing the printer was designed to allude to its natural environment, the office space. Items such as the desk and office chair setup, a wall-mounted fan and a generic office plant hint at this idea. However, the intention was not to create a realistic set of an actual office, but rather to place these items around the room to create a hybrid environment that supports the modified nature of the device rather than hiding it, with cables, wiring and duct tape partly exposed to the viewer as well.

Additionally, the pieces installed in the room are meant to support the mentioned ideas of copying and reproduction of networked images, in alignment with the internal processes of the device. An example of this is the Shutterstock desktop plate I constructed, which consists of a physical version of the Shutterstock watermark, familiar to most people, engraved onto a Perspex sheet that is mounted on the faux wood top of the desk.

Technical

Overview

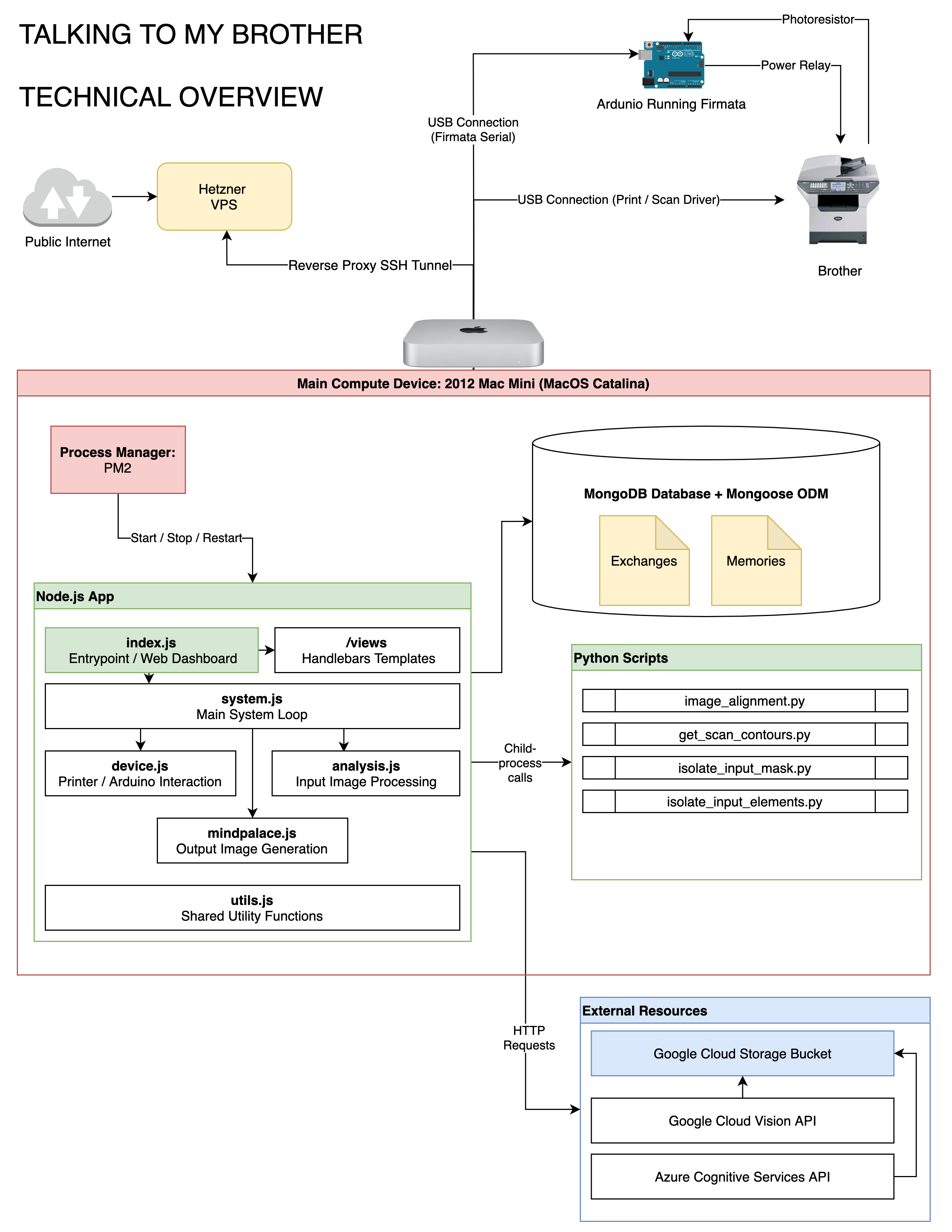

The installation consists of a computer (Mac Mini) connected to both the printer and an Arduino with Firmata installed on it. The computer runs the software I developed to drive the interaction system.

The core piece of software is an application written in JavaScript and run in Node.js. Many of the necessary computer vision tasks, however, are implemented in Python scripts that make use of the excellent OpenCV bindings for Python. The JavaScript app calls these scripts whenever necessary by spawning them as child processes and reading their standard output. The Python scripts receive input from the JS app through command line arguments (usually just file paths to the images that need to be analyzed). They then write any output that needs to be communicated back as a serialized JSON object to standard output, which is parsed by the JS app. The JavaScript app connects to a MongoDB database, using the Mongoose Object-Document-Mapper to define schemata for the two collections that store the application data, Exchanges and Memories.
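
The Python side of this exchange can be sketched as follows. This is a minimal illustration rather than the installation's actual script: the `analyze` placeholder and the shape of the JSON object (`ok`, `results`, `fragments`) are assumptions made for the example.

```python
import json
import sys


def analyze(image_path):
    """Hypothetical placeholder; a real script would run OpenCV on the file."""
    return {"path": image_path, "fragments": []}


def main(argv, out=sys.stdout):
    """Read image paths from argv and write one JSON document to `out`."""
    # The calling app passes image paths as command line arguments.
    if len(argv) < 2:
        json.dump({"ok": False, "error": "no image path given"}, out)
        return 1
    results = [analyze(path) for path in argv[1:]]
    # Only a single JSON object is written, so the caller can parse
    # the whole of standard output in one go.
    json.dump({"ok": True, "results": results}, out)
    return 0
```

When run as a script, the entry point would be `sys.exit(main(sys.argv))`. Keeping diagnostic messages off standard output (for example by logging to standard error) keeps the JSON parseable on the calling side.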

The output generation process makes heavy use of external APIs to perform Optical Character Recognition (OCR) and run visual search queries. APIs from Google Cloud and Microsoft Azure are used for this.

Unseen by visitors of the installation, the application also exposes a web dashboard that presents an overview of all scanned messages and stored memories alongside meta-information. The dashboard can be accessed remotely through an external server acting as a reverse proxy, which allows access to the computer directly from the internet.

Core Loop

The main system loop consists of sending a scan request to the printer at a set interval (usually every 200 milliseconds) and parsing the response. The scan request targets the automatic document feeder, so if there is no paper in the feeder, the scan program output indicates a failed scan, and the loop continues. When a piece of paper is put into the feeder, the next scan request causes the device to scan the paper, and an image is stored on disk.
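
The polling behaviour described above can be sketched like this. The actual application is written in JavaScript; this Python version only illustrates the control flow, and `scan`, `handle_page` and `max_polls` are hypothetical names introduced for the example.

```python
import time


def poll_feeder(scan, handle_page, interval=0.2, max_polls=None,
                sleep=time.sleep):
    """Call scan() repeatedly and hand each successful scan to handle_page().

    `scan` is a hypothetical callable that returns the path of the scanned
    image, or None when the document feeder is empty (a failed scan).
    Returns the number of pages that were handled.
    """
    polls = 0
    handled = 0
    while max_polls is None or polls < max_polls:
        polls += 1
        image_path = scan()
        if image_path is None:
            # Nothing in the feeder: wait for the next interval and retry.
            sleep(interval)
            continue
        # Polling pauses here until the page has been fully processed.
        handle_page(image_path)
        handled += 1
    return handled
```

Passing `scan` and `sleep` in as arguments keeps the loop independent of the scanner driver, which also makes it easy to exercise without a printer attached.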

At this point the main loop is interrupted, and the system runs a series of computer vision analysis tasks on the image. The first task is a simple contour detection, which provides information on where elements are present on the page. This information is later used to place response elements in the white space of the page where possible, rather than overwriting existing elements.
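
One simple way to use such contour information for placement is a grid search over candidate positions. The sketch below assumes the detected elements are available as `(x, y, w, h)` bounding boxes; the function names and the grid-search strategy are illustrative assumptions, not the method used in the installation.

```python
def overlap_area(a, b):
    """Area shared by two (x, y, w, h) rectangles."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    w = min(ax + aw, bx + bw) - max(ax, bx)
    h = min(ay + ah, by + bh) - max(ay, by)
    return w * h if w > 0 and h > 0 else 0


def place_response(page_size, occupied, size, step=10):
    """Find a top-left position for a response of `size` on the page.

    Returns the first grid position that overlaps no occupied box. If no
    free position exists, returns the position with the least overlap.
    """
    page_w, page_h = page_size
    w, h = size
    best = None
    for y in range(0, page_h - h + 1, step):
        for x in range(0, page_w - w + 1, step):
            covered = sum(overlap_area((x, y, w, h), box) for box in occupied)
            if covered == 0:
                return (x, y)
            if best is None or covered < best[0]:
                best = (covered, (x, y))
    # Falls back to the page origin if the response is larger than the page.
    return best[1] if best else (0, 0)
```

For example, on a 100 by 100 page with one element covering the top-left quadrant, `place_response((100, 100), [(0, 0, 50, 50)], (40, 40))` moves the response to the right of that element.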

Then, the drawings of the human (blue pen) are separated from any prior response the printer may have printed on the paper (black ink). This is a lot less simple than it sounds, since the color planes of the scanned images are severely misaligned. As a result, the black ink areas of the images contain pixels of all colors, including the blue pixels that need to be separated, so a fair bit of targeted erosion and dilation is necessary to generate a mask that sufficiently separates human from machine input.
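
The general idea of cleaning a color mask with erosion followed by dilation (a morphological opening) can be sketched as below. This example deliberately uses plain NumPy instead of OpenCV's own erode and dilate functions, and the blue-dominance test, the `margin` value and the 3x3 neighbourhood are assumptions chosen for illustration; the installation's actual thresholds and kernels are not documented here.

```python
import numpy as np


def erode(mask):
    """3x3 binary erosion: a pixel survives only if all 9 neighbours are set."""
    padded = np.pad(mask, 1, constant_values=False)
    h, w = mask.shape
    out = np.ones_like(mask)
    for dy in range(3):
        for dx in range(3):
            out &= padded[dy:dy + h, dx:dx + w]
    return out


def dilate(mask):
    """3x3 binary dilation: a pixel is set if any of its 9 neighbours is set."""
    padded = np.pad(mask, 1, constant_values=False)
    h, w = mask.shape
    out = np.zeros_like(mask)
    for dy in range(3):
        for dx in range(3):
            out |= padded[dy:dy + h, dx:dx + w]
    return out


def human_mask(rgb, margin=40):
    """Boolean mask of pixels likely drawn with a blue pen.

    `rgb` is an (H, W, 3) uint8 array. A pixel counts as blue when its blue
    channel exceeds both other channels by more than `margin`.
    """
    r = rgb[..., 0].astype(int)
    g = rgb[..., 1].astype(int)
    b = rgb[..., 2].astype(int)
    blue = (b - np.maximum(r, g)) > margin
    # Opening: erosion removes thin blue fringes (such as those produced by
    # misaligned color planes around black ink), dilation restores the
    # strokes that survived.
    return dilate(erode(blue))
```

A 3x3 opening like this also removes genuine pen strokes thinner than three pixels, which is one reason the kernel sizes need tuning against real scans.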

This mask is then used to extract image fragments of human input from the input image. Image fragments are cropped areas of the page, representing a single element of the drawing, so for instance if a person draws a sun and a moon on the page, two image fragments are generated that are cropped images of just those two elements.

Text detection, using the Google Cloud Vision OCR API, is instead done on the entire page. The text detected on the previously scanned message is taken into account, so that the printer does not reply to the same text every time when an exchange continues over multiple cycles.
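
A minimal sketch of how previously seen text could be filtered out, assuming the OCR results of both scans are available as lists of words. The function name and the case-insensitive, count-based comparison are assumptions made for this example.

```python
from collections import Counter


def new_words(current, previous):
    """Return words from the current scan not accounted for by the previous one.

    Matching is case-insensitive and count-aware: if a word appeared once
    before and twice now, one occurrence is treated as new.
    """
    remaining = Counter(word.lower() for word in previous)
    fresh = []
    for word in current:
        key = word.lower()
        if remaining[key] > 0:
            remaining[key] -= 1  # already present on the previous scan
        else:
            fresh.append(word)
    return fresh
```

In practice OCR output varies slightly between scans of the same page, so a fuzzier comparison than exact word matching may be needed.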

For each image fragment, the printer generates a visual response fragment. The main method used here is a visual search on the internet, using Azure Cognitive Services. For text information, the printer mainly scrapes websites for images, using the detected words to generate a visual response.

The collected images are composed into a single output image, taking into consideration empty space on the scanned page, which is sent to the printer as a print job, targeting the printer’s manual feed tray, usually used to print on items like envelopes.

Each input image fragment is stored as a memory in the database. This is done so that on subsequent scans, recognized fragments on the page can be compared with recently stored memory fragments, using the Scale-Invariant Feature Transform (SIFT) in OpenCV. Any fragments that match recent memories are disregarded, so that the printer does not respond to the same images repeatedly when an exchange goes through multiple cycles. As mentioned in the concept section, these memories are also used later to respond to future messages.

Learning to Love my Brother

A large part of the technical work was spent not just developing the core logic, but also finding elaborate ways to work around the limitations of the specific device used in the installation.

The device, a printer more than ten years old and no longer supported, came with one main defect: after completing any print job, the next scan job would simply hang forever, with no way to recover. This was most likely a processing issue with the printer’s mainboard, and there was no way to fix it. However, it could be avoided by restarting the printer after it had completed a print job.

I spliced open the printer’s power cable, wired a relay into it, and connected the relay to an Arduino plugged into the computer, so that I could restart the printer from my code. However, an issue with the proprietary drivers provided for the printer made it impossible to tell when a print job had completed. The drivers are of the fire-and-forget type: they flag a print job as completed once it has been sent to the device, not once it has actually been printed. Since a job only prints once the human inserts the paper into the specific slot, a simple timer was not an option. With no other way around the issue, I attached a photoresistor, wired to the Arduino, to the printer’s status LED to detect when it stops blinking, which indicates that a print job has completed. At that point I could trigger the power cut and restart the printer so that it would not hang on the next scan job.
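
The blink detection can be sketched as a small function over a series of photoresistor readings. This is an illustration only: the 0 to 1023 value range, the `threshold` and the `quiet_samples` window are assumptions, and the real readings would arrive from the Arduino rather than from a list.

```python
def blink_stopped_at(readings, threshold=512, quiet_samples=20):
    """Return the index at which blinking is judged to have stopped, or None.

    The LED counts as blinking once its state (above or below `threshold`)
    has changed at least once. It counts as stopped when the state then
    stays unchanged for `quiet_samples` consecutive readings.
    """
    last_state = None
    seen_blink = False
    unchanged = 0
    for i, value in enumerate(readings):
        state = value > threshold
        if last_state is not None and state != last_state:
            seen_blink = True
            unchanged = 0
        else:
            unchanged += 1
        last_state = state
        if seen_blink and unchanged >= quiet_samples:
            return i
    return None
```

Requiring at least one state change before looking for a quiet period avoids triggering a restart while the LED is idle and no print job has started yet.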

The absurd lengths I went to in order to get this particular device working, which could have been avoided entirely by just buying a modern printer, are an essential part of the work for me, since it centers so much on the printer adopting a personality and connecting with the humans around it on a deeper level.

Self evaluation

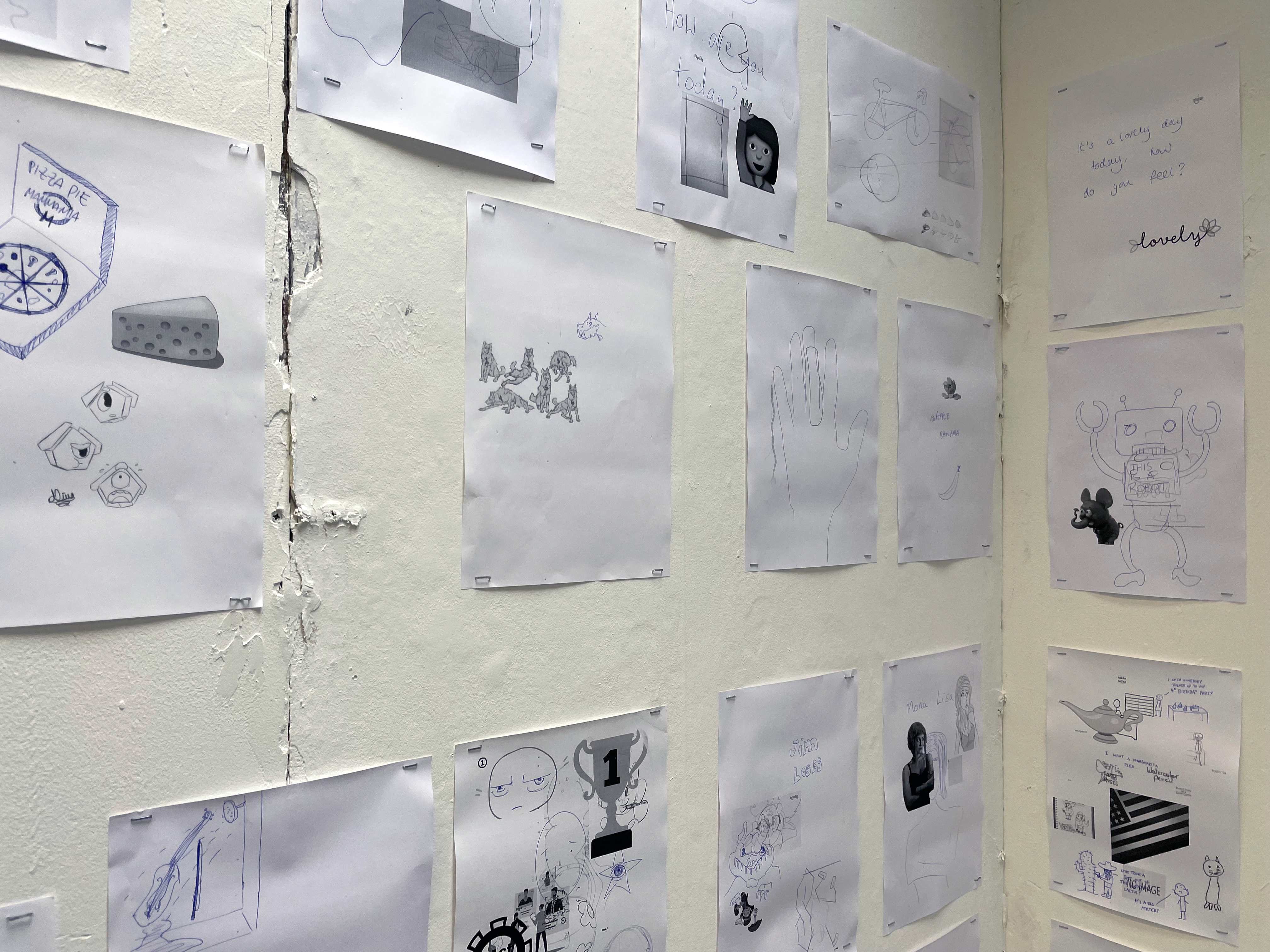

Over the course of the degree show, more than 500 messages from visitors were scanned, and twice as many memory fragments were stored by the device. Many visitors took their responses home with them, but the ones left in the space were stapled to the walls for other visitors to see.

Overall, judging from the feedback I received and from watching people interact with the installation, I am very happy with how this project turned out.

I had many more ideas of additional methods I could add to generate more varied responses, but the currently implemented ones already generate a wide variety of surprising and sometimes fitting response images, and I find the whole setup to work quite well as it is.

References

Kane, Carolyn L. “The Tragedy of Radical Subjectivity: From Radical Software to Proprietary Subjects.” Leonardo, vol. 47, no. 5, 2014, p. 481.

Hayles, N. Katherine. “Cybernetics.” Critical Terms in Media Studies, ed. W.J.T. Mitchell and Mark B.N. Hansen. University of Chicago Press, 2010, pp. 147-149.

Hayles, N. Katherine. How We Became Posthuman: Virtual Bodies in Cybernetics, Literature, and Informatics. University of Chicago Press, 2010, p. 8.

Steyerl, Hito. “In Defense of the Poor Image.” E-Flux Journal, Issue #10, 2009.