Social dysDance 1

Ernie Lafky

Social dysDance 1 is an improvised interactive dance for the camera which explores relationships in our current liminal space and time. The primary relationship is between the dancer, Shinichi Iova-Koga, and his laptop computer. Using a Kinect depth camera, Shinichi’s improvised movements and shapes are perceived by the computer as data. This collection of numbers is fed back to Shinichi graphically.

The piece created between the dancer and the laptop shifts and hovers between human and computation, figurative and abstract, flesh and math. The body is at times fully visible and at other times merely a fragment. Together Shinichi and the laptop meet at the intersection of generative computational art and dance, creating an unstable hybrid object. This third object shows traits of both parents, but is also its own entity. The computer / human collaboration points toward a destabilisation of anthropocentrism.

Concept and Background Research

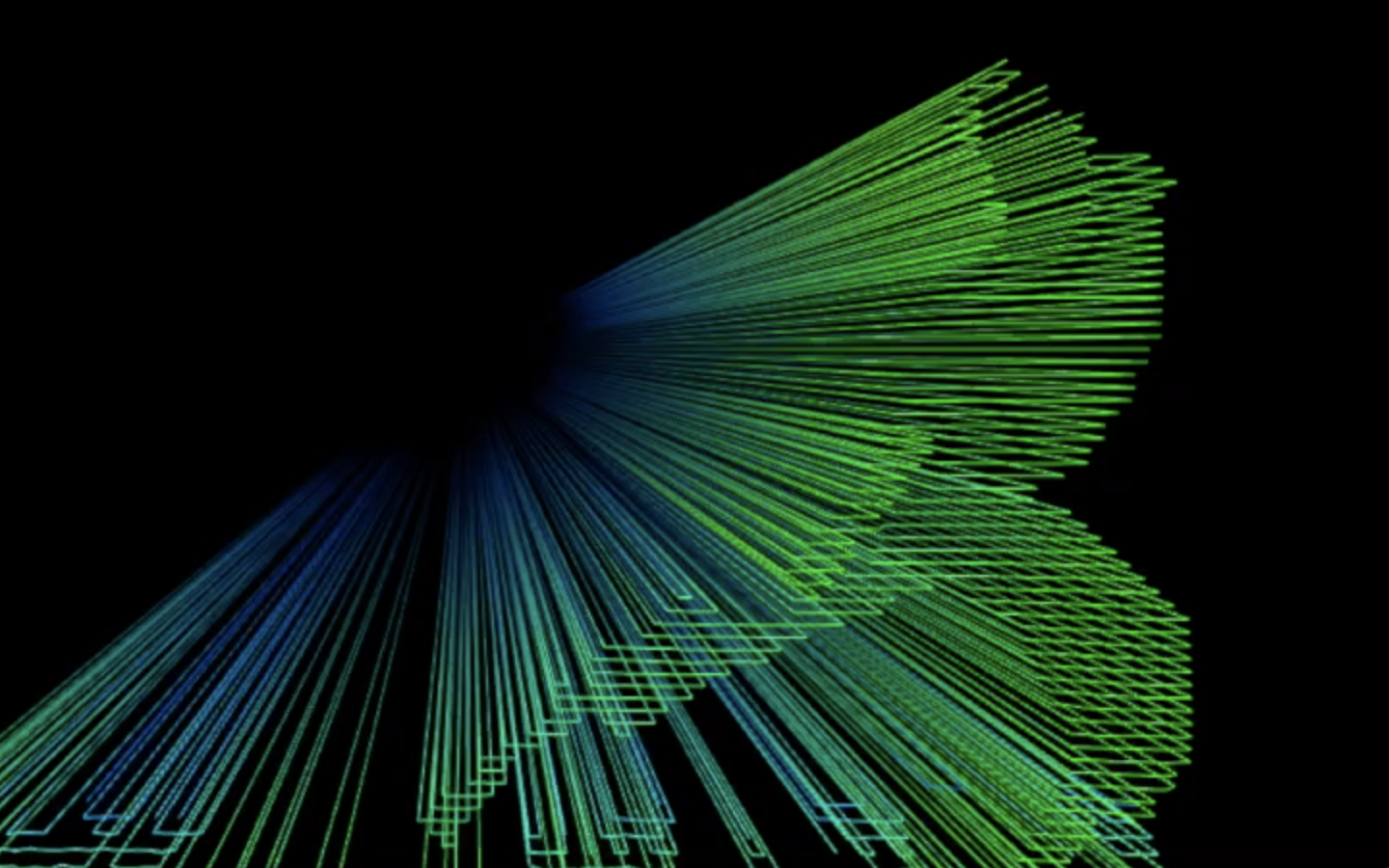

“Hybrid and unstable” is the core of the design solution for Social dysDance 1. The graphics are 2D shapes in a 3D space, blurring the line between dimensions. The Kinect depth camera generates 2D images with 3D data embedded in them, creating a kind of sculptural relief when rendered in a 3D environment.

The inspiration for this play between dimensions can be traced to early modern paintings, particularly analytic cubism. For example, in Picasso’s “Ma Jolie” (1911-1912) the thick lines, geometric shapes, and flat planes of color emphasize two dimensions, but there is still a semblance of pictorial depth, albeit from multiple simultaneous perspectives.

Also inspired by analytic cubism is the tension between abstraction and figuration. In both “Social dysDance 1” and many cubist paintings, a human figure is discernible, but it is often obscured by pure abstract geometry. Shinichi danced around this boundary in his choreography, at times appearing as a body and at other times disintegrating into colourful patterns of shapes and lines. As with “Ma Jolie”, we struggle to perceive and make visual sense out of what we see, but this ambiguity is fundamental to the tension and pleasure to be found in both works.

From a content perspective, there are numerous possible interpretations for this formal exercise, but the one that most interests me is the disintegration and destabilization of the autonomous human self. The arc of Social dysDance 1 is the transformation of a whole, cohesive human body into something increasingly fragmented and abstract. I adjusted the detection areas of the Kinect depth camera in different parts of the piece, so that the dancer is sliced by a thin detection area in the third section, reduced to only a face in the fourth section, and radically fragmented in the final antic section as he moves through a space with multiple voided detection areas.

Similarly, I tried to allow the laptop to have some autonomy and presence as a being in its own right. I wanted to move in the direction of using the computer as a collaborator and not just a tool. To give the computation a presence in the piece, I chose visuals that were inspired by retro computer graphics. All of the graphics are generated during the performance using code only. There is no attempt to make them look representational. The colors resemble false or pseudo colors that are often used for information visualization, emphasizing that the computer sees the performer as merely a set of data.

On the other hand, a more narrative, accessible, and human interpretation of Social dysDance 1 is suggested by the title. One of my inspirations was the new social reality forced by the lockdown due to the Covid-19 outbreak. As Zoom calls became more central to my human interactions, I found myself developing a more intimate relationship with both my laptop and my own image. I would look at my pixelated friends, of course, but I would also stare at my own image and adjust how I looked in real time. This melding of obsessive self-reflection with technology became one of the core tenets of Social dysDance 1. While I’m certainly glad that Zoom (and other video conferencing apps) exist, I noticed something lonely and isolating about them. In some ways, my laptop became my closest friend, and I saw how one could drift toward a mental breakdown. This feeling I hoped to evoke in the final sections of the piece.

Shinichi and I used the same approach in the dance. As Shinichi improvised, he acted as both a dancer and a graphic designer. He watched himself dance and saw how the computer interpreted what he did. He was both inside and outside the piece, both performer and audience, like a selfie with an incomplete self.

In my view, both interpretations of the piece (anti-anthropocentric or descent into madness) are valid, and in this writing I choose to not privilege one over the other.

Contemporary inspirations for Social dysDance 1 included two interactive dance works: “Glow” by Chunky Move and Frieder Weiss, and the “Digital Body” series of videos by Alexander Whitley and his various collaborators.

While researching and watching various interactive dance pieces, I noticed that many of the works shared the same design challenge: the dance could get overwhelmed by the motion graphics. It’s very easy to create an enormous amount of motion on a screen, but much less easy for a dancer on a large stage to have such a commanding visual presence. As an audience member, I felt that I was forced to focus on either the dance or the motion graphics, and that I couldn’t focus on both. In “Glow”, however, choreographer Gideon Obarzanek used “video as lighting” and focussed the graphic imagery to enhance and emanate from the dancer’s body. Dancer and image began to form a cohesive whole.

Taking this idea even further, Alexander Whitley created 3D data of a dance while in lockdown that he then made available to computational / digital artists. These dance videos fuse the graphics and the dance. I like to think that Social dysDance 1 takes Whitley’s experiments a step further by allowing the dancer / choreographer to create the dance and manipulate the visuals in a real-time improvisation.

Technical

As mentioned above, Social dysDance 1 used a Kinect depth camera to capture video of Shinichi with depth data embedded in each frame. The Kinect was connected to Shinichi’s laptop, which was running an openFrameworks executable. In the openFrameworks app, the Kinect data was turned into a point cloud, which rendered a subset of the pixels in the 3D space using the depth data. The points were stored as vertices in a mesh, and the vertices were connected by lines when the point cloud was drawn. These functions formed the core of the visuals.
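To make the point cloud step more concrete, here is a minimal sketch in plain C++, not the code used in the piece. It assumes a row-major depth frame in millimetres where 0 means "no reading"; the `Vertex` type, the `buildPointCloud` function, and the `step` parameter are hypothetical names standing in for the Kinect addon and the openFrameworks mesh.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical vertex type; stands in for the mesh vertices in the real app.
struct Vertex {
    float x, y, z;
};

// Build a sparse point cloud from a row-major depth frame (millimetres).
// Only every step-th pixel in x and y is kept, which is what "a subset of
// the pixels" means in this sketch. A depth of 0 is treated as "no reading".
std::vector<Vertex> buildPointCloud(const std::vector<std::uint16_t>& depthMm,
                                    int width, int height, int step) {
    assert(width > 0 && height > 0 && step > 0);
    assert(depthMm.size() ==
           static_cast<std::size_t>(width) * static_cast<std::size_t>(height));

    std::vector<Vertex> cloud;
    for (int y = 0; y < height; y += step) {
        for (int x = 0; x < width; x += step) {
            const std::size_t index =
                static_cast<std::size_t>(y) * static_cast<std::size_t>(width) +
                static_cast<std::size_t>(x);
            const std::uint16_t d = depthMm[index];
            if (d == 0) continue;  // skip pixels with no depth reading
            // Pixel position gives x and y; the depth value becomes z.
            cloud.push_back({static_cast<float>(x), static_cast<float>(y),
                             static_cast<float>(d)});
        }
    }
    return cloud;
}
```

A larger `step` gives a sparser, more abstract figure, while a `step` of 1 keeps every valid pixel. Drawing the resulting vertices as connected lines rather than as points is what produces the wiry look described above.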

Another key component was the sequencer. I wanted to let Shinichi dance with his laptop without any intervention from me. This goal was both aesthetic and practical. We needed to work asynchronously due to our geographical separation (the UK and Switzerland), which could not be overcome during the Covid-19 lockdown. Also, Shinichi was extremely busy and in demand, so he needed to be able to record his improvisations whenever he had a spare 15 minutes. The sequencer allowed Shinichi to run through the piece by just tapping the spacebar on the laptop. The sequencer would then trigger different sections and sequences depending on the amount of elapsed time.
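A time-based sequencer of this kind can be sketched roughly as follows. This is an illustration under assumptions, not the sequencer from the piece: the `Sequencer` class, its method names, and the section start times used in the example are all hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical sequencer: holds the start time of each section in seconds,
// in ascending order, with the first section starting at 0.
class Sequencer {
public:
    explicit Sequencer(std::vector<double> sectionStarts)
        : starts_(std::move(sectionStarts)) {
        assert(!starts_.empty() && starts_.front() == 0.0);
    }

    // Called once when the performer taps the spacebar.
    void start(double nowSeconds) {
        startTime_ = nowSeconds;
        running_ = true;
    }

    bool isRunning() const { return running_; }

    // Index of the section active at nowSeconds (0 before the piece starts).
    std::size_t currentSection(double nowSeconds) const {
        if (!running_) return 0;
        const double elapsed = nowSeconds - startTime_;
        std::size_t index = 0;
        for (std::size_t i = 0; i < starts_.size(); ++i) {
            if (elapsed >= starts_[i]) index = i;  // last section already begun
        }
        return index;
    }

private:
    std::vector<double> starts_;
    double startTime_ = 0.0;
    bool running_ = false;
};
```

In an app loop, the spacebar handler would call `start` with the current clock time, and each frame would ask `currentSection` which set of parameters to apply. Because everything is derived from elapsed time, the performer needs no further interaction once the piece has begun.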

The colors were controlled by two different parameters. I mapped the colors to the distance each vertex was from the camera in order to heighten the 3D effect. But I also mapped the range of possible colors against the elapsed time, which made the colors slowly cycle during each section.
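One plausible way to combine the two color controls is sketched below. It is an assumption about the general approach rather than the mapping used in the piece: depth is normalised between a near and a far limit to pick a position inside a hue window, and the window itself slides around the color wheel as time passes. The function name `hueFor` and all of its parameters are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Returns a hue in [0, 1) for one vertex.
//   depthMm        distance of the vertex from the camera
//   nearMm, farMm  depth range that is mapped onto the hue window
//   hueSpan        width of the hue window (fraction of the color wheel)
//   elapsedSec     time since the section started
//   cycleSec       time for the hue window to travel once around the wheel
double hueFor(double depthMm, double nearMm, double farMm, double hueSpan,
              double elapsedSec, double cycleSec) {
    assert(farMm > nearMm);
    assert(cycleSec > 0.0);

    // Normalise depth to 0..1, clamping values outside the range.
    const double t =
        std::min(1.0, std::max(0.0, (depthMm - nearMm) / (farMm - nearMm)));

    // Slide the start of the hue window with elapsed time.
    const double offset = std::fmod(elapsedSec / cycleSec, 1.0);

    // Wrap so the result stays on the color wheel.
    return std::fmod(offset + t * hueSpan, 1.0);
}
```

With a narrow `hueSpan`, nearby and distant vertices stay close in color at any one moment, so depth reads clearly, while the slow offset keeps the overall palette drifting through each section.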

I created a fairly elaborate GUI so that we could adjust parameters during our exploratory rehearsals. We rehearsed over Zoom, and Shinichi would have to adjust the app’s sliders on his laptop, so it had to be fairly intuitive. I used an openFrameworks addon called ofxGui and based the set-up on code by Andy Lomas.

Shinichi and I created several different sections of the piece by adjusting the parameters controlled by the GUI. We primarily changed the near and far detection thresholds for the Kinect, which allowed us to create very narrow slices in the space. This technique gave us the fragmented look that was core to the aesthetic of the piece. Once the optimal parameters were defined, I saved them into multiple arrays which would then get accessed when triggered by the sequencer.
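The idea of slicing the space with near and far thresholds, then storing one set of thresholds per section, can be sketched like this. The struct, the function names, and especially the threshold values are hypothetical placeholders, not the parameters we settled on.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical per-section parameters: the near and far detection limits
// in millimetres. Anything outside this band is ignored.
struct SectionPreset {
    int nearMm;
    int farMm;
};

// Placeholder values for illustration only.
constexpr std::array<SectionPreset, 3> kPresets = {{
    {500, 4000},   // wide band: the whole body is visible
    {1200, 1300},  // thin slice: only a sliver of the body is detected
    {600, 900},    // close band: only what is nearest the camera
}};

// True when a depth reading falls inside the section's detection band.
constexpr bool isDetected(int depthMm, const SectionPreset& preset) {
    return depthMm >= preset.nearMm && depthMm <= preset.farMm;
}

// Look up the stored parameters for the section chosen by the sequencer.
inline const SectionPreset& presetFor(std::size_t section) {
    assert(section < kPresets.size());
    return kPresets[section];
}
```

A band only 100 mm deep, as in the second placeholder preset, passes just a thin cross-section of whatever crosses it, which is how a moving body can appear as a shifting fragment instead of a whole figure.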

The musical drone that plays with the video was created by fellow artist and Goldsmiths student Kris Cirkuit. The sound effects that sync with the visuals and Shinichi’s movements, however, were made by me using a simple vocoder built in Max/MSP. The code for the vocoder was taken from an online tutorial, but I did add some simple GUI elements that allowed me to improvise while playing the video. I wanted the audio to have some of the same spontaneity and bodily presence that the improvised dance had. Once the tracks were created, they were mixed down using GarageBand and added to the video as a soundtrack.

Self Evaluation

There are many improvements I would have made had we been living in an ideal world, but of course 2020 has been far from ideal, both on a personal and (obviously) global level. Given everything, I’m extremely pleased with how the piece turned out. I used the limitations to my advantage and came up with a design solution that could work for a live online interactive dance piece. The piece holds together well aesthetically, and I personally find it compelling and at times even beautiful. That said, there were several missed opportunities as well as potential for future development.

- Ideally the piece would have been streamed live over a platform like Twitch or YouTube. A live stream would have given the real-time graphics and dance improvisation a clearer purpose. But live-streaming would have added another element of risk when I had such limited access to my dancer, so it fell off the feature list.

- My initial goal for the piece was to have a duet between a dancer and a laptop. I tried multiple approaches to have Shinichi dance with a set of boids, but the piece only came together once I removed the boids altogether. The boids gave the laptop some autonomy and the illusion of behavior, making it feel like a live collaborator, but I just couldn’t arrive at a satisfying design solution. Creating some kind of computational being that can improvise with a dancer will be a goal for future development.

- Along the same lines, I originally wanted the audio to be created in real-time just like the graphics. To reduce risk, however, I decided to treat the audio more like a score for a movie. Overall, I’m pleased with the soundscape and think it matches and enhances the visuals. In a future iteration, I would like to have real-time generative audio.

- At times the colors are a bit too dark for the screen. The color shifts during each section were added relatively late in development. They added some nice movement to the piece and were definitely worth it, but they could have been more carefully tuned and refined.

References

openFrameworks code

- The basic Kinect programming was taken from the kinectExample, provided by openFrameworks in the Examples folder.

- The set-up of the GUI elements and the basic particle system classes were taken from Particle Lab 7 (the optimised boid code) by Andy Lomas, created for the Computational Form and Process class at Goldsmiths, University of London, 2020.

Max/MSP code

- filtergraph~ help provided by Max/MSP

- "Delicious Max/MSP Tutorial 4: Vocoder"

Photo of Pablo Picasso’s “Ma Jolie” taken by Wally Gobetz at the Museum of Modern Art in New York City