Isolation Collaboration

'If I had the chance, I'd ask the world to dance

And I'll be dancin' with myself.'

Billy Idol "Dancing With Myself" (1985).

produced by: Kris Cirkuit

Introduction

"Isolation Collaboration" is an alliance between a laptop, an analogue synthesiser, and a human performer. Exploring the potential of semi-randomised computer-controlled data as an active participant in artistic creation, it reflects on how Lockdown, social class, and the heavy restrictions on social interaction have affected live performance. Please note that the intro to the video is deliberately silent.

Background and concept

"Don't be fooled when they say, 'We are all in this together'.

They have a different definition of the word 'we'."

Madani Younis

Council estates are often stereotyped, as are the people who live on them. Class identity is something I found challenging whilst negotiating the habitus of rigorous academic life. I found myself trying to disappear, to tone it down, to become smaller. At one point, I was discussing this work with my peers, and they did not recognise my flat as being a council home. The reason for this was that none of them had ever been inside a council flat. I was shocked but not surprised. After further discussion, I decided I would film myself entering the flat. I needed to make my point explicit. I wanted to challenge stereotypes in this work and also highlight the fact that Lockdown did not affect all people equally.

Lockdown has proved challenging for performers across the board, and streaming has taken hold with varying degrees of success. After watching many performances, I found most of them caused me to feel more disconnected than connected. The content I found engaging was less staged and felt like a more authentic experience. I decided to perform the piece in my home, using no fancy cameras, in one take only, with minimal editing and post-production.

The creative work of some friends influenced the idea of filming in my council flat. During Lockdown, they formed an impromptu duo (Music In Our Underpants) and performed in their home. A link to one of their pieces, "Frustration", is below.

https://www.youtube.com/watch?v=xrptWcipGFc&ab_channel=TheRestartsChannel

At the same time, I wanted to collaborate with colleagues and friends on musical projects and found these people were depressed, despondent, and unresponsive. "Isolation Collaboration" arose out of these feelings of disconnect, the desire for connection in a world changed beyond recognition, and the acceptance of fate. In the end, my only collaborator was my computer.

Technical

The technical aspect of this project was in many ways dictated to me by my computer, a 2012 MacBook Pro. The RAM and hard drive have been upgraded, but there is only so much power I can coax out of it! I avoided overloading the threads, and thus grinding to a halt, by dividing the labour between Open Frameworks, MaxMsp, and Ableton Live. I used a Kinect sensor to capture my movements. In Open Frameworks, I mapped the point cloud and controlled the colours, size, and opacity of its points.
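As an illustration of how point size and opacity might be driven by the sensor data, here is a minimal sketch in Python rather than Open Frameworks. It assumes that each point's depth in millimetres is mapped linearly to a size and an opacity, so that nearer points appear larger and more solid. The function name, the depth range, and the size and opacity limits are all hypothetical and are not taken from the project code.

```python
from dataclasses import dataclass


@dataclass
class PointStyle:
    size: float     # point size in pixels
    opacity: float  # 0.0 (transparent) to 1.0 (opaque)


def style_for_depth(depth_mm, near_mm=500.0, far_mm=4000.0,
                    max_size=8.0, min_size=1.0):
    """Map a depth reading to a point size and opacity.

    All ranges here are assumed values for illustration only.
    """
    # Clamp the reading so out-of-range depths do not produce odd styles.
    d = min(max(depth_mm, near_mm), far_mm)
    # t is 0.0 at the near limit and 1.0 at the far limit.
    t = (d - near_mm) / (far_mm - near_mm)
    size = max_size - t * (max_size - min_size)
    opacity = 1.0 - 0.8 * t  # far points fade but never vanish entirely
    return PointStyle(size=size, opacity=opacity)
```

With a mapping like this, stepping towards or away from the sensor changes the look of the whole cloud without any extra controls.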

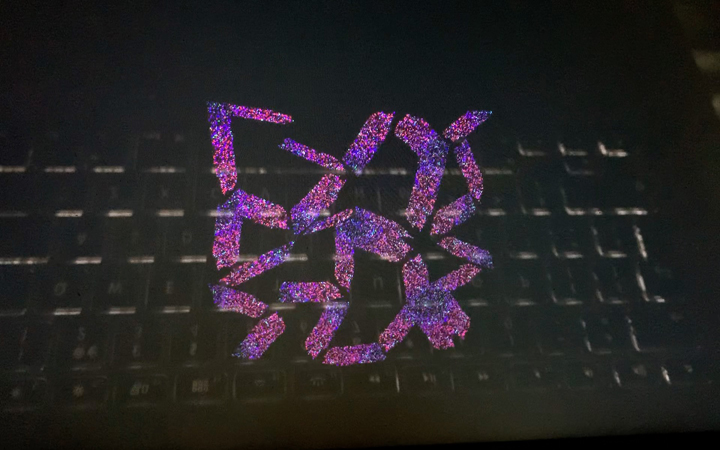

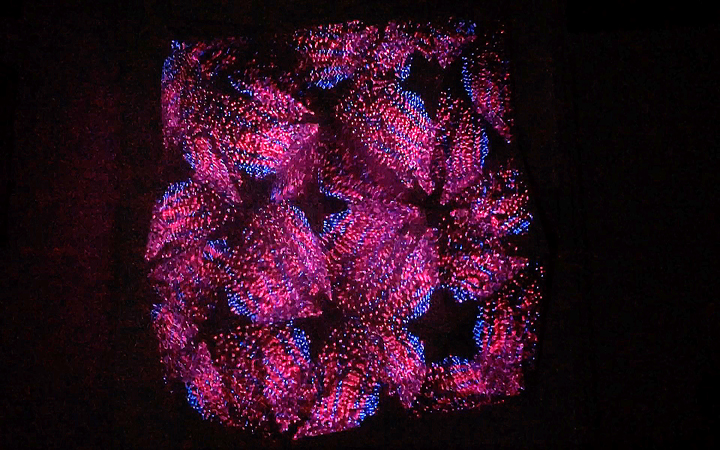

Additionally, Open Frameworks receives MIDI information from Ableton Live, and this information changes the colour palette. The point cloud was drawn into an FBO source and, using ofxPiMapper, I projected the patterns onto an Olga projection mapping kit. The goal was to create an abstract, kaleidoscope-style pattern. I achieved this by rotating and inverting the FBO patterns across the various triangles of the Olga kit and by varying my movements and distance from the Kinect sensor. To overcome the challenge of projecting in such a small space, I rented a super-short-throw projector.
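The MIDI-to-palette link can be sketched as follows. This is an assumption-laden Python illustration, not the Open Frameworks code: it supposes that the incoming message is a note number and that the note simply selects one of a fixed set of palettes. The palette colours and the function name are hypothetical.

```python
# Hypothetical palettes: each is a list of (red, green, blue) colours.
PALETTES = [
    [(255, 0, 102), (255, 153, 0), (255, 255, 102)],   # warm
    [(0, 153, 255), (0, 255, 204), (102, 0, 255)],     # cool
    [(255, 255, 255), (128, 128, 128), (32, 32, 32)],  # monochrome
]


def palette_for_note(note, palettes=PALETTES):
    """Choose a palette from a MIDI note number (0 to 127).

    The modulo mapping is an assumed scheme: consecutive notes
    cycle through the available palettes.
    """
    if not 0 <= note <= 127:
        raise ValueError("MIDI note numbers run from 0 to 127")
    return palettes[note % len(palettes)]
```

Because the choice depends only on the note number, any sequence sent from Ableton Live would change the colours in step with the music.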

The above photo shows the mapping as it looked on the computer screen. The photo below is a sample of the results mapped onto an Olga projection mapping kit.

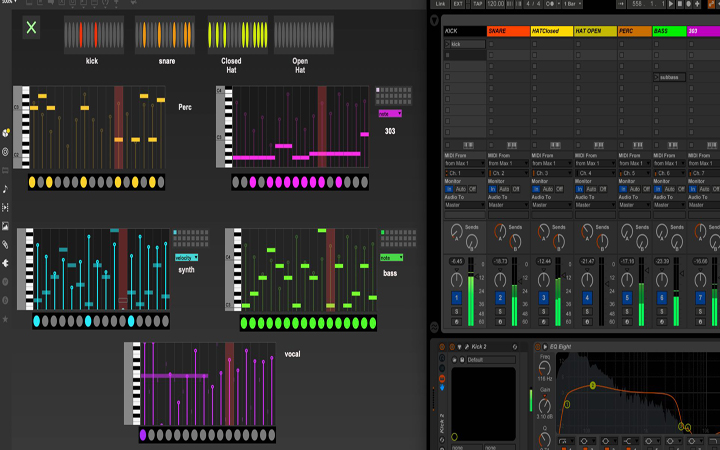

MIDI sequences were produced in MaxMsp. Using step sequencers and coded randomisation patches, I created semi-random combinations to be sent to Ableton Live via network MIDI. I could have used a "follow action" procedure in Ableton, but I chose MaxMsp as it offered greater potential for experimentation.

To preserve precious CPU and to keep control over sound quality, I routed the MIDI information to Ableton. I used network MIDI to do this, and once I had ensured the Audio MIDI Setup was correct, it was straightforward.
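The idea of a semi-random step sequencer can be sketched in text form. The Python below is not a transcription of the MaxMsp patch. It assumes one simple scheme: a fixed base pattern is played step by step, and each step has a small chance of being replaced by a random note from a scale or by a rest. The function name and the probabilities are hypothetical.

```python
import random


def semi_random_sequence(base_pattern, scale, rng,
                         mutate_prob=0.3, rest_prob=0.1):
    """Return one pass through the pattern with random variations.

    base_pattern: list of MIDI note numbers, one per step.
    scale: notes that a mutated step may be replaced with.
    rng: a random.Random instance, so results can be reproduced.
    A value of None in the output means the step is a rest.
    """
    out = []
    for note in base_pattern:
        r = rng.random()
        if r < rest_prob:
            out.append(None)               # silence this step
        elif r < rest_prob + mutate_prob:
            out.append(rng.choice(scale))  # swap in a random scale note
        else:
            out.append(note)               # keep the written note
    return out


# Example: a four-step pattern varied against a C major scale.
steps = semi_random_sequence([60, 62, 64, 65],
                             [60, 62, 64, 65, 67, 69, 71],
                             random.Random(2021))
```

Keeping most steps unchanged is what makes the result semi-random: the written pattern stays recognisable while the computer contributes its own choices.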
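For readers unfamiliar with what actually travels between the two programs, a MIDI note is a three-byte message: a status byte carrying the message type and channel, followed by the note number and the velocity. The Python sketch below builds those bytes. It illustrates the standard message format only and makes no claim about how MaxMsp or Ableton handle it internally; the function names are hypothetical.

```python
def note_on(channel, note, velocity):
    """Build a MIDI note-on message (channel 0 to 15, data 0 to 127)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be between 0 and 15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity must be between 0 and 127")
    # 0x90 marks a note-on; the low four bits carry the channel.
    return bytes([0x90 | channel, note, velocity])


def note_off(channel, note):
    """Build the matching note-off message with zero release velocity."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be between 0 and 15")
    if not 0 <= note <= 127:
        raise ValueError("note must be between 0 and 127")
    # 0x80 marks a note-off.
    return bytes([0x80 | channel, note, 0])
```

Messages this small are cheap to send, which is part of why handing them to another program costs so little CPU compared with generating the audio itself.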

Aware of the limitations of my computer processor and memory, I deliberately chose simple instruments and samplers as I did not want to overtax my system.

Future Development

This project offers plenty of scope for further development. The MaxMsp patch could be more complex: the patterns it creates could be expanded, and the relationships between them made more intricate.

Projection mapping is a versatile tool and lends itself easily to other patterns, the introduction of video, and more significant interaction. I believe using Ableton to create the sounds was a solid choice; given more computing power, the sound design could become more complex and the sound quality could improve.

Self Evaluation

As a part-time student on the Computational Arts MA, I did not have to create a work for this show. My work here is unmarked, ungraded. This does not mean the work is insignificant. I felt compelled to make this work, driven forward, and overall I am pleased with the result. I am grateful to my fantastic crit. group. They were invaluable in helping with clarity of purpose and feedback.

There is room for improvement. I would like to have spent more time and energy on the MaxMsp patch. I think it would have been desirable to have more connection between the sequencer sections, and I would have liked to have developed the sequencers more fully.

The code in Open Frameworks was simple. It did what it needed to do but would have benefited from more depth. I used quite a bit of code from the example files for ofxPiMapper and ofxMidi, and the classroom code for using the Kinect. Additionally, I do not think the interaction between the MIDI code and the Kinect is apparent enough. It should either be made more evident or be abandoned as unnecessary.

I found working with the Kinect and projection mapping inspiring, and I enjoyed it! Going forward, I hope to use the skills acquired in other works. Although I have a working knowledge of MaxMsp, I learned new things and additional methods. These will also prove useful in the future. Taking part in and contributing to the show, going through the Viva process, and completing the work has been beneficial and the experience will provide a solid footing for the degree show next year.

COVID Interruptions

Due to the COVID restrictions and limited physical exhibition space, I made the decision early on to exhibit this piece virtually. Instead of fighting to change things, I accepted the situation and turned it around. The challenge of working under the strange constraints of these times enabled me to think differently and make a work I might not have otherwise.

References

Mahfouz, Sabrina. "Smashing It: Working Class Artists on Life, Art, and Making It Happen". The Westbourne Press, 2019.

Taylor, Gregory. "Step by Step: Adventures in Sequencing with Max/MSP". Cycling '74, 2018.