In Concert

In Concert is a web application for an experimental music collaboration project inspired by open-source principles. Musicians and non-musicians openly share ideas and source material to create a decentralised network of compositions. Creativity doesn’t exist in a vacuum.

Project report

Background research:

I was particularly influenced by the writings of Lawrence Lessig, an American academic who has written extensively about copyright law and its effect on innovation. He argues that modern digital copyright laws have become too restrictive, asking: ‘Why should it be that just when technology is most encouraging of creativity, the law should be most restrictive?’ I was also inspired by the open-source software movement, whose software can be studied, changed and redistributed, sometimes on the condition that anyone who builds on it grants the same freedoms for their own work. Sampling and re-interpreting ideas has played an enormous role in the development of popular music forms such as Folk, Jazz, Blues, Rock and Hip-Hop. Alarmingly, a recent court case found a song to infringe the copyright of an older one, not for any direct note-for-note lifting, but for being influenced by its style: the dispute between the writers of ‘Blurred Lines’ (Robin Thicke and Pharrell Williams) and Marvin Gaye’s family over ‘Got To Give It Up’, which began in 2013 and ended in a jury verdict against ‘Blurred Lines’ in 2015. Considering how important influence has been in the evolution of pop music, this sets a dangerous precedent.

What I tried to achieve:

I had two main goals for my project. The first was to experiment with an open-source approach to music composition, as a protest against copyright laws that I would argue have become so restrictive that they damage the creative process. Rather than hiding influence, I wanted to celebrate it openly, so that people can see the connections between musical ideas.

The second goal was to trial a different method of musical collaboration. Rather than two or more people trying to merge their ideas into a single work, I wanted to create a space where musicians could keep their individuality whilst sharing ideas and source material with each other. This space could then be presented in an interactive digital format for the public to explore, discovering the connections between ideas.

My Process:

From the start, I knew I wanted to make a web-based application so that it would be easily accessible and could receive contributions from as many people as possible. I chose p5.js as my framework because of my background with Processing (both are Processing Foundation projects) and because of its extensive sound library, p5.sound.

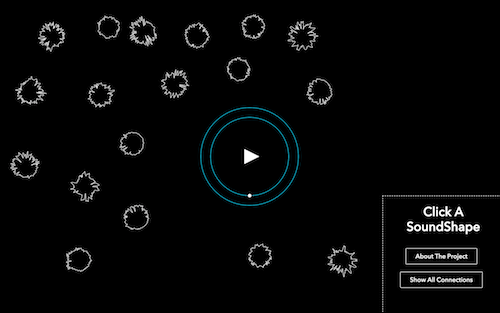

My first draft contained a traditional-looking audioPlayer and the design of the soundShapes, in which each track’s waveform is drawn as a circular shape. This worked well, giving the shapes a consistent aesthetic quality whilst preserving their individual characteristics. For example, tracks with a greater dynamic range tended to look spikier, whilst tracks with a more uniform volume looked smoother. I liked the way the shapes seemed to represent little sound ‘organisms’, and I added to this effect by using Perlin noise to make the edges of each shape gently undulate.
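The link between dynamic range and spikiness can be sketched in plain JavaScript. The example below is a hypothetical illustration, not the project's code: `computePeaks` reduces raw samples to a fixed number of peak values (similar in spirit to the data p5.sound's `getPeaks()` provides), and `roughness` is an invented measure of how much neighbouring peaks differ, which is what makes an outline look spiky.

```javascript
// Reduce raw audio samples (range -1..1) to `bins` peak values by taking the
// largest absolute sample in each bin. Simplified illustration only; it is not
// the p5.sound implementation of getPeaks().
function computePeaks(samples, bins) {
  const peaks = new Array(bins).fill(0);
  const binSize = samples.length / bins;
  for (let i = 0; i < bins; i++) {
    const start = Math.floor(i * binSize);
    const end = Math.floor((i + 1) * binSize);
    let max = 0;
    for (let j = start; j < end; j++) {
      const v = Math.abs(samples[j]);
      if (v > max) max = v;
    }
    peaks[i] = max;
  }
  return peaks;
}

// Hypothetical "spikiness" measure: the mean absolute difference between
// neighbouring peaks, wrapping from the last peak back to the first because
// the drawn shape is closed. Larger jumps in level give a spikier outline.
function roughness(peaks) {
  if (peaks.length === 0) return 0;
  let total = 0;
  for (let i = 0; i < peaks.length; i++) {
    total += Math.abs(peaks[(i + 1) % peaks.length] - peaks[i]);
  }
  return total / peaks.length;
}
```

A track whose level jumps between loud and quiet passages produces neighbouring peaks that differ a lot, so its roughness (and its outline) is higher than that of a track with an even level.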

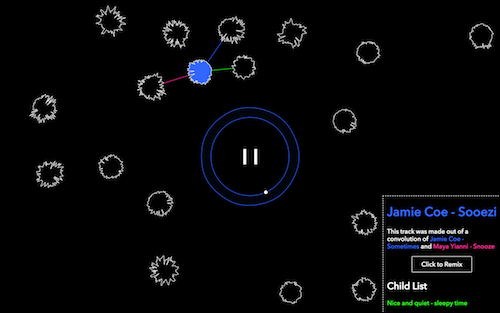

I then adapted this circular design for the audioPlayer as well, where the inner circle is the playhead, and the outer edge is a visualisation of the track’s current amplitude.

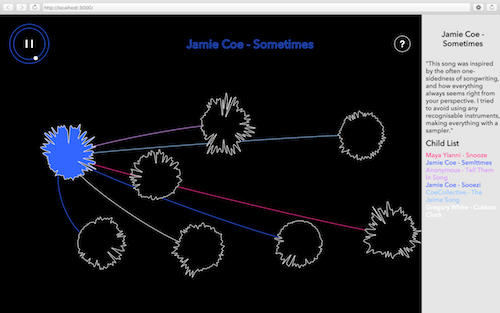

I developed a helpMenu, which explained the connections between shapes, along with a hyperlink system as another method for skipping between tracks.

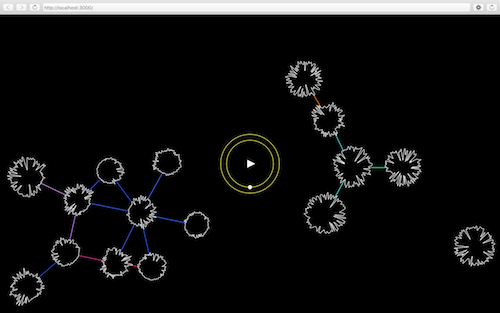

I moved the audioPlayer to the middle to draw more attention to its visualisation. I also tried displaying all connections at all times, although I later made this optional with a button.

The helpMenu was moved to the bottom right corner, with messages to guide the user.

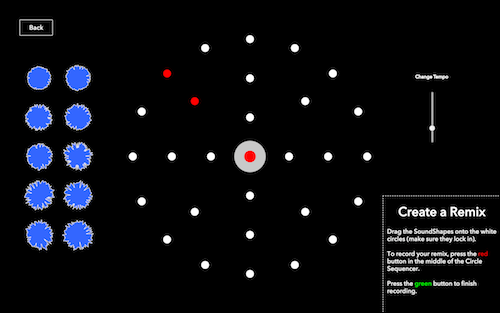

The circleSequencer offered a set of premade samples taken from a selected track; these samples could be dragged onto the main interface and used to make a new remix.
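A circular sequencer mainly changes how steps are laid out, not how they are timed. The sketch below assumes a loop of equal steps placed clockwise from the top of the circle, with one sixteenth note per step; the functions `stepAngle`, `currentStep`, `samplesAt` and `stepPosition` and the placement format are hypothetical and not taken from the project's source.

```javascript
// Angle of a step on the circle. -PI/2 puts step 0 at 12 o'clock; because the
// canvas y axis points down, increasing angles move clockwise on screen.
function stepAngle(step, steps) {
  return -Math.PI / 2 + (2 * Math.PI * step) / steps;
}

// Which step the playhead is on, given elapsed seconds and tempo.
// Assumes each step lasts one sixteenth note (four steps per beat).
function currentStep(elapsedSeconds, bpm, steps) {
  const stepDuration = 60 / bpm / 4;
  return Math.floor(elapsedSeconds / stepDuration) % steps;
}

// Samples dropped onto the circle, each stored with the step it landed on.
function samplesAt(placements, step) {
  return placements.filter(p => p.step === step).map(p => p.sample);
}

// Screen position of a step marker on a circle of radius r centred at (cx, cy).
function stepPosition(step, steps, cx, cy, r) {
  const a = stepAngle(step, steps);
  return { x: cx + r * Math.cos(a), y: cy + r * Math.sin(a) };
}
```

The timing logic is the same as in a grid sequencer; only `stepPosition` differs, which is why a square layout can be re-imagined as a circle without changing how the remix plays back.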

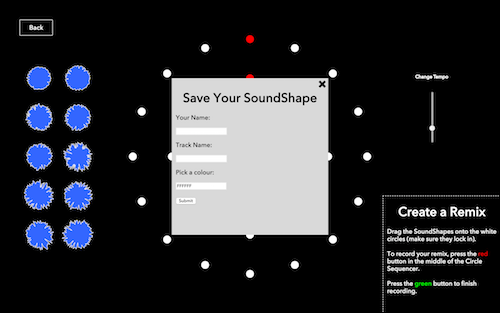

Once a remix has been recorded, a pop-up form lets users add meta-data. When the form is submitted, the track is saved and the browser is refreshed.

Reasoning for your choices:

One of the main themes of my project was the use of circular shapes. This was something I picked up on over time and began to accentuate, starting with the circular waveform soundShapes, then adapting the audioPlayer into a circular design, and finally creating the circleSequencer, a traditionally square-shaped sequencer re-imagined as a circle. This gave the entire project a strong sense of cohesion, and also emphasised the non-linearity of the format. Unlike a traditional music album, these songs could be experienced in a non-linear space where the end of one track always led into the start of another, with no defined ending point.

Another important decision was not to display any hierarchy when drawing the connections between shapes. Looking at the connections alone, it is not possible to tell whether a shape is the child or parent of another (although this is clarified in the helpMenu). I did not want any shape to appear more important than another simply because it came first or had more connections.
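The parent and child relationships still need to be stored so that the helpMenu can explain them; only the drawing step discards direction. A minimal sketch, assuming each track record has a hypothetical `id` and a `sources` array naming the tracks it drew on (the function name `undirectedConnections` is also hypothetical):

```javascript
// Turn directed "track drew on source" links into undirected, de-duplicated
// pairs, so the drawing code has no notion of parent or child.
function undirectedConnections(tracks) {
  const seen = new Set();
  const edges = [];
  for (const track of tracks) {
    for (const source of track.sources || []) {
      // Sort the pair so A-B and B-A produce the same key.
      const [a, b] = [track.id, source].sort();
      const key = `${a}|${b}`;
      if (a !== b && !seen.has(key)) {
        seen.add(key);
        edges.push([a, b]);
      }
    }
  }
  return edges;
}
```

Sorting each pair means a link from A to B and one from B to A collapse into the same line, so nothing in the drawing reveals which track came first.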

I built the circleSequencer mainly as a feature for the exhibition. I felt it was important not only to let visitors explore the existing shapes, but also to invite them to contribute their own using a simple remix tool. The circleSequencer was therefore designed to be easy to use for both musicians and non-musicians.

Challenges you faced:

One of the main challenges I faced was how to express the meaning of the connections between shapes. Because the project encouraged re-interpretation in any way imaginable, people re-imagined each other’s ideas and source material in a wide variety of ways. Examples ranged from a traditional-sounding dance remix built from audio stems, to a vocal melody transplanted as a lead line into a song of a completely different style, to a semantic reading of an instrument (e.g. a keyboard that sounded like an alarm clock) used as subject matter for new lyrics. Because of this variety and complexity of meaning, the only satisfying way to explain each connection was to include a description of each track in the helpMenu, giving a short insight into how the track was made, with hyperlinks to the other tracks it used.

Another challenge was that p5.sound has no function for saving a .wav file to a specific path. Its saveSound() function lets users download a .wav on the client side, but I needed to save a user’s remix to a specific folder on the server. I therefore modified the p5.sound library itself, adding a function that converts a p5.SoundFile into a .wav audio blob. I could then upload the blob to my local server and save it to the correct path.
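The core of such a function is writing a standard WAV header followed by 16-bit PCM samples. The sketch below is an assumption, not the function added to p5.sound: it takes one `Float32Array` per channel (the form in which the Web Audio API's `AudioBuffer.getChannelData()` returns data) and returns an `ArrayBuffer` laid out as a 44-byte RIFF/WAVE header plus interleaved samples. The name `encodeWav` is hypothetical.

```javascript
// Encode one or more channels of float samples (-1..1) as a 16-bit PCM WAV file.
// `channels` is an array of Float32Array, one per channel, all the same length.
function encodeWav(channels, sampleRate) {
  const numChannels = channels.length;
  const numFrames = channels[0].length;
  const bytesPerSample = 2;
  const blockAlign = numChannels * bytesPerSample;
  const dataSize = numFrames * blockAlign;
  const buffer = new ArrayBuffer(44 + dataSize);
  const view = new DataView(buffer);

  const writeString = (offset, str) => {
    for (let i = 0; i < str.length; i++) view.setUint8(offset + i, str.charCodeAt(i));
  };

  // RIFF header: total size of everything after these first 8 bytes.
  writeString(0, "RIFF");
  view.setUint32(4, 36 + dataSize, true);
  writeString(8, "WAVE");
  // fmt chunk: uncompressed PCM (format 1), 16 bits per sample.
  writeString(12, "fmt ");
  view.setUint32(16, 16, true);
  view.setUint16(20, 1, true);
  view.setUint16(22, numChannels, true);
  view.setUint32(24, sampleRate, true);
  view.setUint32(28, sampleRate * blockAlign, true); // byte rate
  view.setUint16(32, blockAlign, true);
  view.setUint16(34, 16, true);
  // data chunk: interleaved frames, clamped and scaled to signed 16-bit.
  writeString(36, "data");
  view.setUint32(40, dataSize, true);
  let offset = 44;
  for (let frame = 0; frame < numFrames; frame++) {
    for (let ch = 0; ch < numChannels; ch++) {
      const s = Math.max(-1, Math.min(1, channels[ch][frame]));
      view.setInt16(offset, s < 0 ? s * 0x8000 : s * 0x7fff, true);
      offset += bytesPerSample;
    }
  }
  return buffer;
}
```

In a browser, the result could be wrapped as `new Blob([encodeWav(channels, sampleRate)], { type: "audio/wav" })` before uploading it to the server.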

Details on technical implementation:

For the exhibition, the project will be displayed on a computer running a local server created with Node.js. All the meta-data for tracks is stored in a JSON file which can be written to when a new soundShape is created.
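Writing to the JSON file amounts to a read, append and write cycle on the server. A minimal Node.js sketch, assuming the file holds a JSON array of track records with a unique `id` field; the function name `addTrackMetadata`, the file path and the record fields are hypothetical:

```javascript
const fs = require("fs");

// Append one track's metadata to a JSON file holding an array of records.
// Returns the number of tracks stored after the append.
function addTrackMetadata(jsonPath, record) {
  let tracks = [];
  if (fs.existsSync(jsonPath)) {
    tracks = JSON.parse(fs.readFileSync(jsonPath, "utf8"));
  }
  if (tracks.some(t => t.id === record.id)) {
    throw new Error(`Track id already exists: ${record.id}`);
  }
  tracks.push(record);
  // Write to a temporary file first, then rename it over the original,
  // so a crash mid-write does not leave a half-written JSON file behind.
  const tmpPath = jsonPath + ".tmp";
  fs.writeFileSync(tmpPath, JSON.stringify(tracks, null, 2));
  fs.renameSync(tmpPath, jsonPath);
  return tracks.length;
}
```

Because the exhibition runs on a single computer, a synchronous read-modify-write like this is probably adequate; a server handling many simultaneous submissions would need locking or a database.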

One of the key processes I used was an algorithm that draws a circular shape as a series of points. This let me set the radius of each point from the corresponding amplitude value in the track’s waveform array at that point in time, so that the waveform of a track is displayed as a circular shape. In the same way, the audioPlayer visualises the track’s current amplitude as a circular series of points, which creates complex and beautiful patterns as it changes over time.
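The point-based circle can be expressed as a small function that converts polar coordinates to screen coordinates. A sketch, assuming amplitudes normalised to the range 0 to 1; the name `waveformToPoints` and its parameters are hypothetical:

```javascript
// Map an array of amplitudes (0..1) to points around a circle centred at
// (cx, cy): point i sits at angle 2*PI*i/n and is pushed outward from
// baseRadius by amp * amplitudeScale.
function waveformToPoints(amplitudes, cx, cy, baseRadius, amplitudeScale) {
  const n = amplitudes.length;
  return amplitudes.map((amp, i) => {
    const angle = (2 * Math.PI * i) / n;
    const r = baseRadius + amp * amplitudeScale;
    return { x: cx + r * Math.cos(angle), y: cy + r * Math.sin(angle) };
  });
}
```

In p5.js, the returned points could be drawn with `beginShape()`, one `vertex(p.x, p.y)` call per point, and `endShape(CLOSE)` to close the outline.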

Another useful feature I developed was the hyperlink system in the helpMenu, which lets users click on a track name to load that track immediately into the audioPlayer. To do this, I marked all track names in the description and child links sections with HTML span tags and assigned them classes. I could then access those classes in my JavaScript code and attach a callback function that switches to the corresponding track when a name is clicked.
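A sketch of that approach using standard DOM calls, so that it can run without p5.js. It assumes a hypothetical class name `trackLink`, stores each track name in a `data-track` attribute, and takes a `loadTrack` callback standing in for whatever loads a track into the audioPlayer; none of these names come from the project's source.

```javascript
// Escape text so descriptions and track names cannot inject markup.
function escapeHtml(s) {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Escape characters that have a special meaning in regular expressions.
function escapeRegex(s) {
  return s.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Wrap each known track name in a description with a span carrying the
// class "trackLink" and the track name in a data-track attribute.
function linkTrackNames(description, trackNames) {
  // Longest names first, so "Echo Remix" is matched before "Echo".
  const names = trackNames.filter(n => n.length > 0).sort((a, b) => b.length - a.length);
  if (names.length === 0) return escapeHtml(description);
  const pattern = new RegExp(names.map(escapeRegex).join("|"), "g");
  let html = "";
  let last = 0;
  for (const m of description.matchAll(pattern)) {
    html += escapeHtml(description.slice(last, m.index));
    const name = escapeHtml(m[0]);
    html += `<span class="trackLink" data-track="${name}">${name}</span>`;
    last = m.index + m[0].length;
  }
  return html + escapeHtml(description.slice(last));
}

// Give each link element a click handler that loads its track. In a browser,
// `elements` could be document.querySelectorAll(".trackLink").
function attachTrackLinks(elements, loadTrack) {
  for (const el of elements) {
    el.addEventListener("click", () => loadTrack(el.dataset.track));
  }
}
```

Keeping the track name in a data attribute means one shared handler can serve every link, rather than writing a separate callback per track.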

Possible future development:

For future development, I would first like to put the project online officially and encourage more contributions. It would be helpful to create a user login system and a download option for each track along with its source materials. The project could also be made more social, perhaps by letting users leave comments on each other’s tracks. I could also design more remixing tools like the circleSequencer for non-musical users, expanding the idea of what a remix could be by focusing on other features such as lyrics, chords or melodies.

Source code:

Here is a link to my source code on GitHub.

References:

Lessig, L. ‘Free Culture, The Nature And Future Of Creativity’ (2003, The Penguin Group)

Lessig, L. ‘Remix, Making Art And Commerce Thrive In The Hybrid Economy’ (2008, The Penguin Press)

Miller, P. (editor). ‘Sound Unbound, Sampling Digital Music And Culture’ (2008, The MIT Press)

Code reference:

jscolor.js by Jan Odvarko (see http://jscolor.com)

'Chapter 6 - Autonomous Agents' from Shiffman, D. 'The Nature Of Code' (2012, Magic Book Project)

'Chapter 6 - Dynamic Data Structures' from Bohnacker, H / Groß, B / Laub, J / Lazzeroni, C (editor). 'Generative Design, Visualise, Program And Create With Processing' (2012, Princeton Architectural Press)

File Upload example from Binary JS library (see https://github.com/binaryjs/binaryjs/tree/master/examples/fileupload)

Socket.io example from Socket.io library (see https://github.com/socketio/socket.io)