/MarksMade/StainedLand/*.png

A photographic installation that uses a salvaged greenhouse as a frame to tell a visual story about the afterlives of landfill sites.

produced by: Felix Loftus

Introduction

/MarksMade/StainedLand/*.png is a fragmented story that uses creative computing methods to invite viewers to rethink landfill sites as places where new myths are being created. It focuses on two sites. The first is Stave Hill Ecological Park in Rotherhithe, a community-led park built on the remnants of the London timber docks and a domestic waste landfill site; the park’s hill was landscaped from rubble and domestic waste excavated from the site. The second is Beddington Farmlands, a new ecological park next to an operating landfill on the fringe of South London. The story tells of the creation and existence of new spirits of the land through the ritual act of preparing landfill sites. It references the Aos Sí, the immortal spirits of Irish mythology who dwell inside hills, and the earthen long barrows of Neolithic Britain, which were ceremonial burial sites (Hutton, 2014). The piece comprises a series of photos of landfill sites engraved onto the glass of the greenhouse, two written stories presented on an e-reader, durational photography of plants from landfill sites presented on an e-ink screen, and a hypertext piece on another e-ink screen.

The two stories can be found below:

2. The Stave Hill Burial Ground

The stories are based on the structure of the Immortals, a series of Irish myths. In these stories, someone from the human domain is taken into, or falls into, the realm of the Aos Sí. During their time with the Aos Sí they learn something about life, so that if they return to the human world, their perspective has shifted (Williams, 2016, Chapter 2). In my modern reinterpretation, these mythical creatures dwell within landfills.

Images of Installation

Concept and Background Research

This body of work is the outcome of two concurrent and overlapping research strands. The first is an arts-based research project into using computational methods to create alternative modes of environmental photography that can help engender better multispecies relations and better human relations to land (Loftus, 2021). In this piece, my aim was to use environmental photography as a multispecies storytelling tool to re-story areas that are undergoing a period of ecological restoration. I did this by engaging with two alternative photographic verbs: gathering and fermenting. I explain my use of these terms in the technical write-up.

The second research strand is the practical element of my research into environmental justice in tech, carried out with No Tech for Tyrants and with Earth Hacks’ international research group. With the latter, I have been helping a team of researchers in the field of environmental tech to formulate principles for environmental justice in computer science and software studies (Earth Hacks, forthcoming). Through this project, I aimed to create a digital environmental camera based on these principles.

These two strands are interwoven through the ecologist Robin Wall Kimmerer’s idea of re-storyation. Kimmerer argues that genuine, holistic ecological healing of our environments requires not only practical work towards ecological restoration (such as rewilding, agroecology, and permaculture) but also new stories and ideas about our relationship to the land we inhabit (Kimmerer, 2013). Through this piece, I aimed to work on both. I have developed an alternative mode of photography that follows principles for environmental justice: it is cheap, accessible, open-source and low-power. I have also used computational methods to re-story an environment that is largely un-storied: landfill sites in the UK. The piece therefore sits under the banner of ‘restor(y)ation’, and my final aim was to convey these ideas visually and so develop a clear aesthetic for the style of computing I am developing.

I will now briefly outline my arts-based research into landfill sites before giving an overview of the computational technology in this piece, where I will draw out how my decisions were led by these two research strands.

Photography at Beddington Landfill and Grasslands using the Raspberry Pi Camera

Landfill Sites:

My choice to re-story landfill sites was influenced by British writers who are rethinking how to understand British landscapes after colonialism and after the aestheticisation of British land through landscape painting (Bermingham, 1986; Fowler, 2020) and landscape photography (Loftus, 2021). My aim was to re-story the land in a way that did not celebrate the country as necessarily beautiful while ignoring issues of land justice and ecological degradation. Instead of photographing ‘idyllic’ British fields, I chose to image landfill sites. These sites are of vast importance to ecologies in Britain: there are over 19,800 of them, many of which have been active for more than 80 years. This choice was heavily influenced by Richard Mabey’s anthropological work on ‘brownzones’ in the UK in his book The Unofficial Countryside (Mabey, 1973), and by Anna Zett’s artistic interventions in waste processes in Germany (Zett & Groom, 2019).

After visiting and researching the histories of ex-landfill sites, I recognised that much of the work of ecological restoration was being done either by human volunteers or by the plants and other species that inhabited the sites. I decided to write stories and image the sites in a way that reflected this. My story, The Stave Hill Burial Ground, recognises the important work of the human volunteers on ex-landfill sites that have been converted into community gardens. The photographs generated during the installation were designed to recognise the work of the plants. This was heavily inspired by the poetry of Grace Nichols, who, in poems such as “Wild About Her Back Garden” (Nichols, 2014), recognises in plants an urge towards cohabitation and mutual healing. The overall aesthetic of the photography uses scientific imaging methods to create a style that references the woodcut drawings in folk stories from the UK.

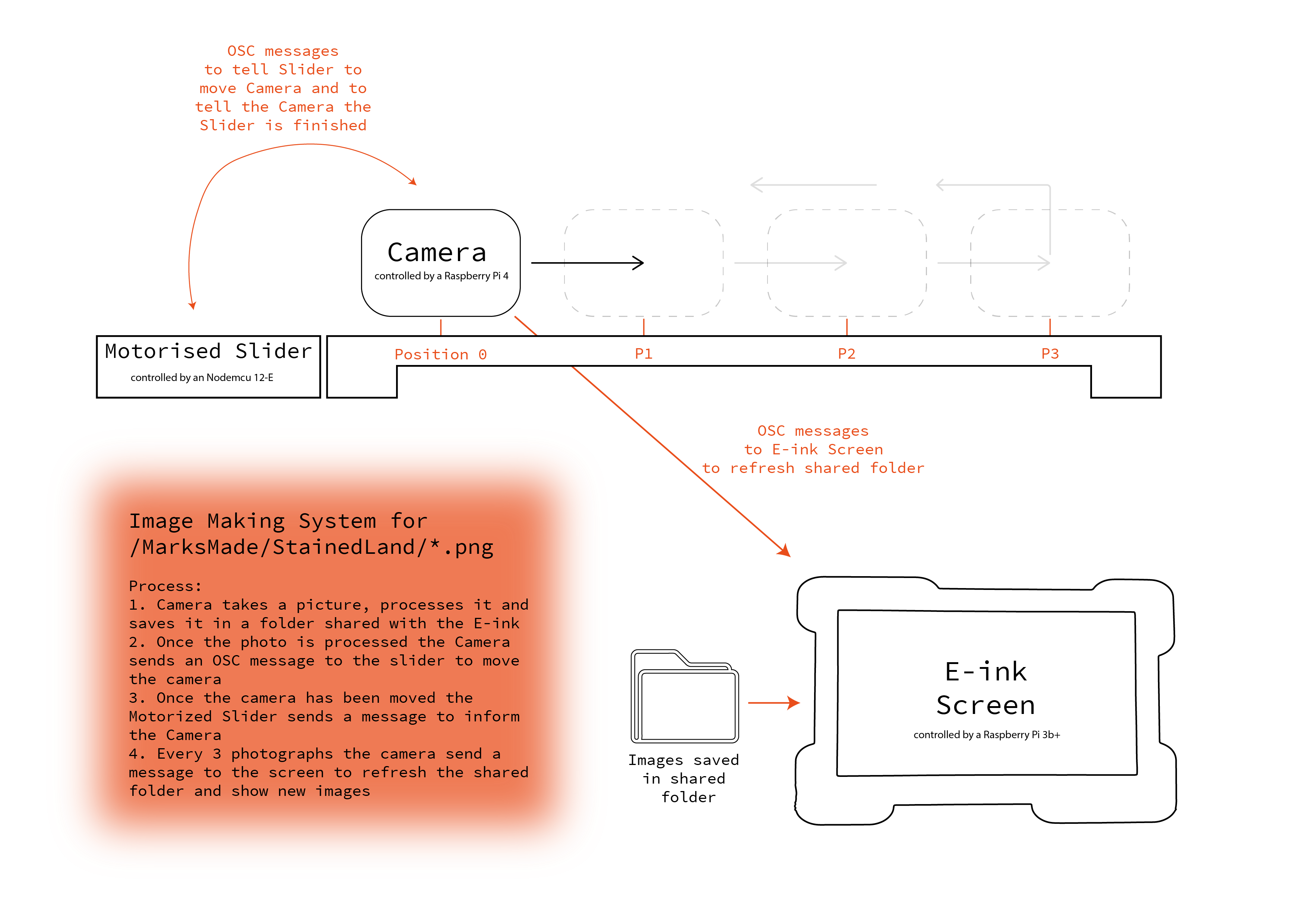

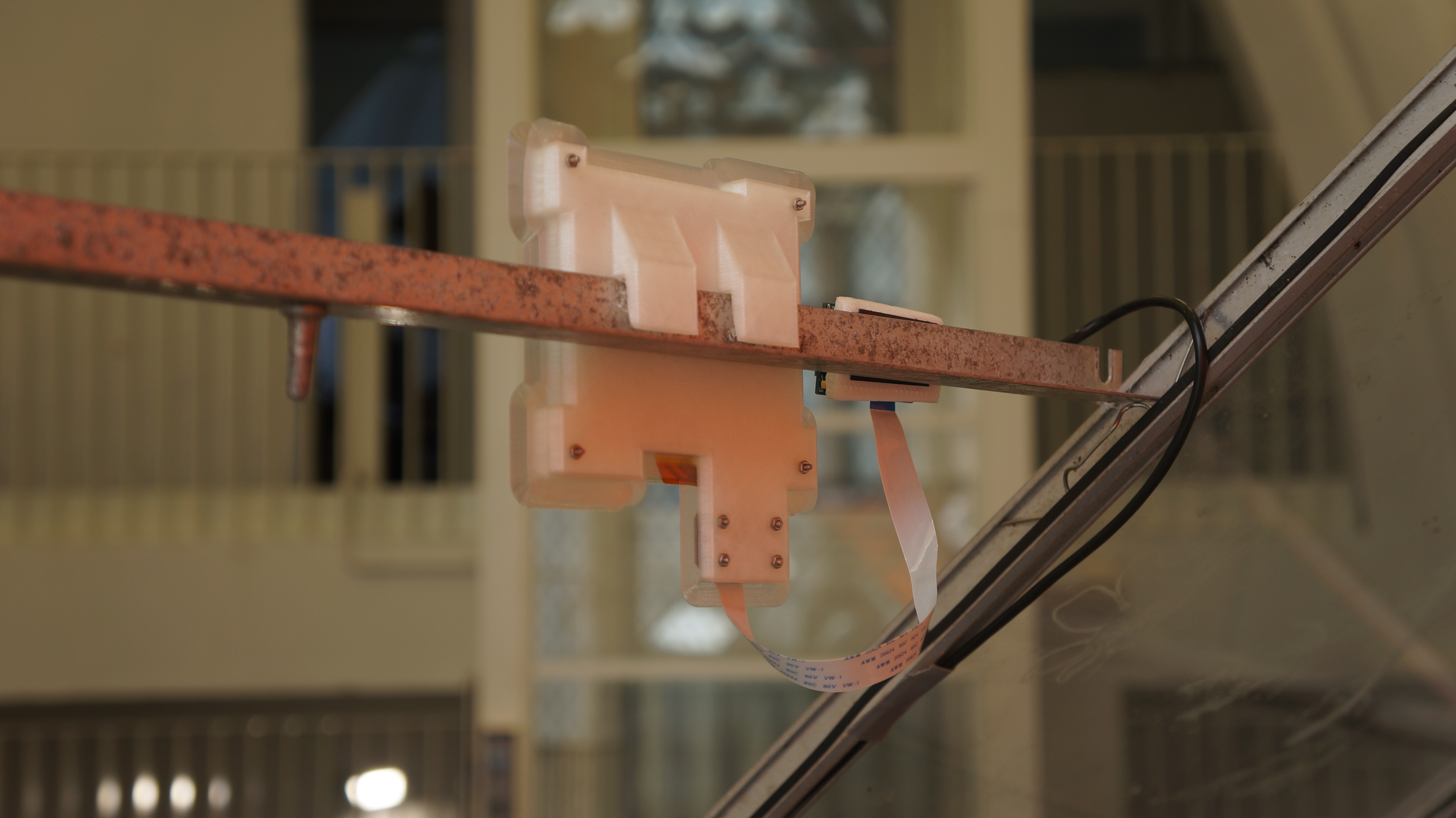

Image 1: A diagram of the structure of the imaging system in the installation, Image 2: A close-up of the camera on the motorised slider

The Installation Setup

The installation comprised:

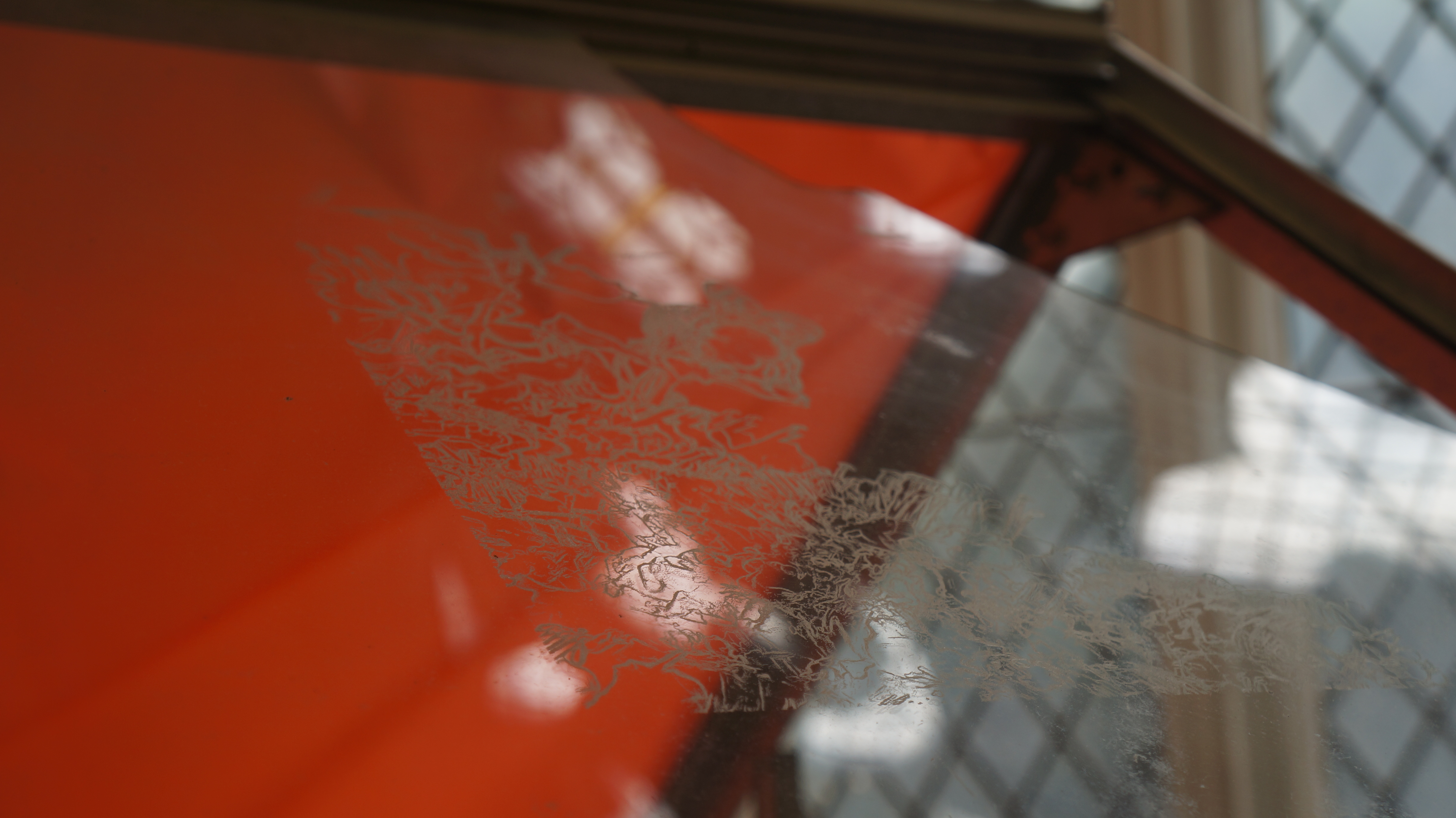

1. Photography of landfill sites engraved onto the glass panels of the greenhouse.

The engravings were created with image-processing scripts written in Python, using photographs made with a custom Raspberry Pi camera. The aims of the program were to generate exhibition-worthy images with a folk aesthetic without the need for a high-tech camera or computer, and for the camera to have low power usage. This development followed Earth Hacks’ principles for environmental justice in tech and Low Tech Lab’s principles for designing open-source systems. These principles aim for tech to be open-source, accessible (cheap) and low in material impact (in terms of both power and materials used), and to have environmental healing for all as its goal (Earth Hacks, forthcoming; Low Tech Lab, 2019).

This system can be built for less than £150, and the code is freely available for anyone to use. The necessary parts are:

- A Raspberry Pi (preferably a 3B+ or a 4)

- A Raspberry Pi Camera (HQ or standard are both fine)

- A lens (necessary if using the HQ camera)

- A screen with four GPIO buttons

- A power bank

- A micro SD card

There is no need for Photoshop or an expensive computer.

To achieve this, I decided to follow the process of film photography, a process I love for the mystery of not quite knowing what photos you’ve composed until you get them back from development. I chose to program in Python because the picamera library has well-documented integration with the HQ camera and the newer Raspberry Pi models. My code is arranged in two modes: gathering and fermenting.

I see each of these modes as a photographic verb. The first gathers still images, or images of movement, over a period of time; the second ferments them into something new using computational methods (contour tracing, foreground extraction, contrast stretching, photogrammetry, and engraving onto glass).

I use these verbs in opposition to ‘capturing’ or ‘taking’ a photograph since I do not see these verbs as reflective of this image-making process. In previous work I have experimented with the verbs ‘conjuring’ and ‘decomposing’. This project is an extension of this work.
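To make the two verbs concrete, here is a minimal sketch of how a gather/ferment split might be structured. It is not the project’s code. `gather`, `ferment` and their parameters are hypothetical names. The capture function is passed in so the sketch runs without camera hardware.

```python
import time
from typing import Any, Callable, List, Sequence

def gather(capture: Callable[[], Any], count: int, interval_s: float,
           sleep: Callable[[float], None] = time.sleep) -> List[Any]:
    """Gathering: collect `count` frames, pausing `interval_s` seconds between them."""
    frames = []
    for i in range(count):
        frames.append(capture())
        if i < count - 1:  # no need to wait after the last frame
            sleep(interval_s)
    return frames

def ferment(frames: Sequence[Any],
            steps: Sequence[Callable[[Any], Any]]) -> List[Any]:
    """Fermenting: pass every gathered frame through a chain of processing steps, in order."""
    fermented = []
    for frame in frames:
        for step in steps:
            frame = step(frame)
        fermented.append(frame)
    return fermented
```

On a Raspberry Pi, `capture` could be a small function that calls `camera.capture(path)` on a `picamera.PiCamera` instance and returns the saved path. The fermenting steps would then be functions that open, transform and save each file. Keeping the two phases separate mirrors the film-photography workflow described above: nothing is processed until gathering has finished.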

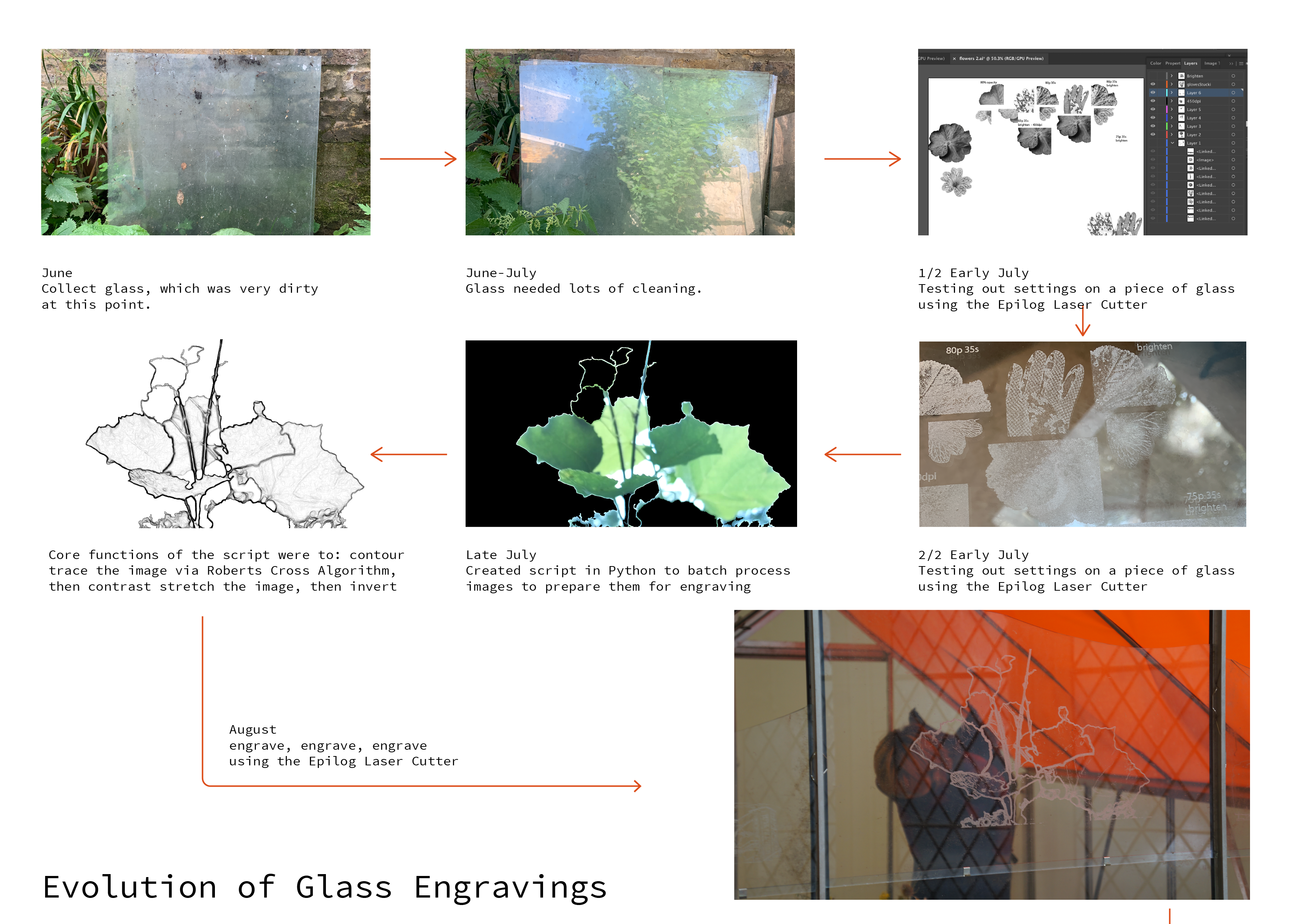

Engraving my photos onto glass extended the fermentation, since it opened the photographs up to combining with their surroundings through reflections. The selected images were inspired by stone carvings on Neolithic earthen long barrows (Hutton, 2014). After some initial tests engraving raster files made from images I had taken, I found that the images would need to be very specific to appear clearly on the glass.

The images needed to:

- Have clear edges

- Have a high contrast

- Be very bright, with minimal grey or black areas

To achieve this without losing the image, I created a script for preparing the images for engraving. It uses scikit-image, a scientific imaging library. The benefit of this library is that it is used in microscopy, so it has a range of functions for enhancing an image. These are useful for distinguishing the different parts of cells and other microscopic subjects.
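The three requirements above can be approximated with percentile-based contrast stretching followed by a hard threshold. This sketch uses NumPy only. In scikit-image, the stretch corresponds to `exposure.rescale_intensity(img, in_range=(p2, p98))`. The 2nd/98th percentiles and the `dark_cutoff` of 0.25 are illustrative assumptions, not the values used for the engraved panels.

```python
import numpy as np

def stretch_contrast(gray, low_pct=2.0, high_pct=98.0):
    """Linearly map the [low_pct, high_pct] percentile range onto [0, 1], clipping outliers."""
    lo, hi = np.percentile(gray, (low_pct, high_pct))
    if hi <= lo:  # flat image: nothing to stretch, treat it as all white
        return np.ones_like(gray, dtype=float)
    return np.clip((gray.astype(float) - lo) / (hi - lo), 0.0, 1.0)

def prepare_for_engraving(gray, low_pct=2.0, high_pct=98.0, dark_cutoff=0.25):
    """Return a binary image: 1.0 (white) everywhere except the darkest features (0.0).

    The hard threshold removes grey midtones entirely, which gives crisp edges,
    and a low cutoff keeps the dark (marked) area small, so the result stays bright.
    """
    stretched = stretch_contrast(gray, low_pct, high_pct)
    return np.where(stretched < dark_cutoff, 0.0, 1.0)
```

Raising `dark_cutoff` makes more of the image dark and therefore engraved. A lower value keeps the panel bright and only traces the strongest shapes.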

I also used this script to process a series of images collected from a geographical dataset of historic landfill sites in the UK. The images were collected with a script that uses the Google Maps API to take screenshots at the location of each landfill site.
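One way to gather those images is the Google Maps Static API, which returns a map image for a URL built from a centre point, zoom level, image size and map type. This sketch only builds the request URLs. The text does not say whether the original script used this endpoint or browser screenshots. The zoom, size and `satellite` map type are assumptions. The sketch also assumes coordinates in WGS84 latitude/longitude, so British National Grid eastings and northings would need converting first. `sites` is a hypothetical list of `(name, lat, lon)` tuples.

```python
from urllib.parse import urlencode

STATIC_MAPS_ENDPOINT = "https://maps.googleapis.com/maps/api/staticmap"

def static_map_url(lat, lon, api_key, zoom=17, size=(640, 640), maptype="satellite"):
    """Build a Static Maps request URL centred on one landfill site."""
    params = {
        "center": f"{lat:.6f},{lon:.6f}",
        "zoom": zoom,
        "size": f"{size[0]}x{size[1]}",
        "maptype": maptype,
        "key": api_key,
    }
    return f"{STATIC_MAPS_ENDPOINT}?{urlencode(params)}"

def urls_for_sites(sites, api_key, **kwargs):
    """Map each (name, lat, lon) site to its request URL."""
    return {name: static_map_url(lat, lon, api_key, **kwargs) for name, lat, lon in sites}
```

Fetching each URL (for example with `urllib.request.urlopen`) and saving the bytes would give one image per site. That image can then go through the same fermenting script. A valid API key is required, and requests are subject to Google’s usage limits and billing.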

Image 1: Diagram of the Glass Engraving Process, Image 2: Engraved Photo of a Bee at Rotherhithe Ecological Park Created Using Gathering and Fermenting Scripts, Image 3: Engraved Microscopic Photograph of Two Candeluna Seeds Created Using Gathering and Fermenting Scripts, Image 4: Closeup of Panel Engraved with the Rotherhithe Ecological Park Floorplan and the Microscopic Photography of Bees from the Park

2. Raspberry Pi Camera on a motorised slider

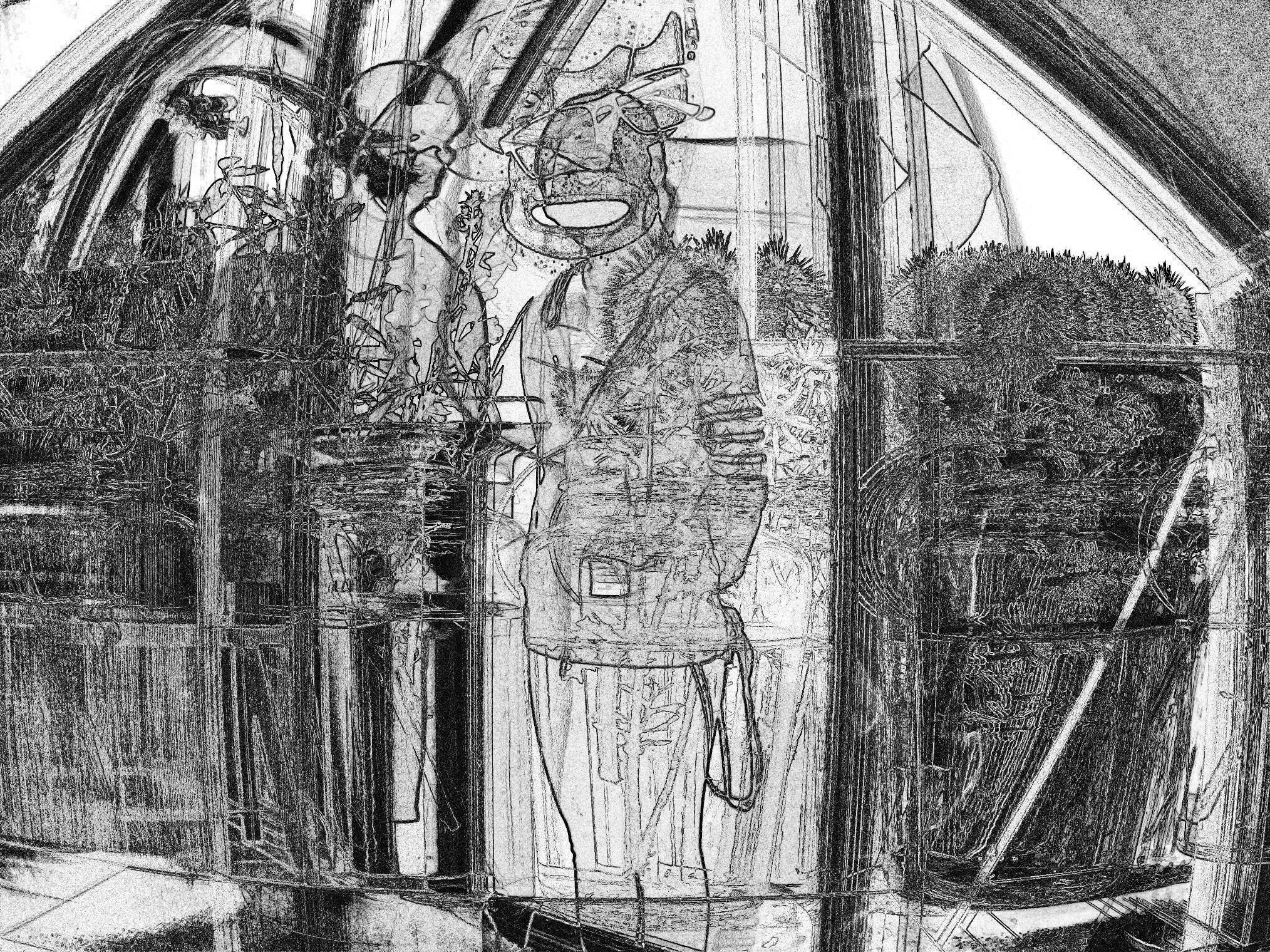

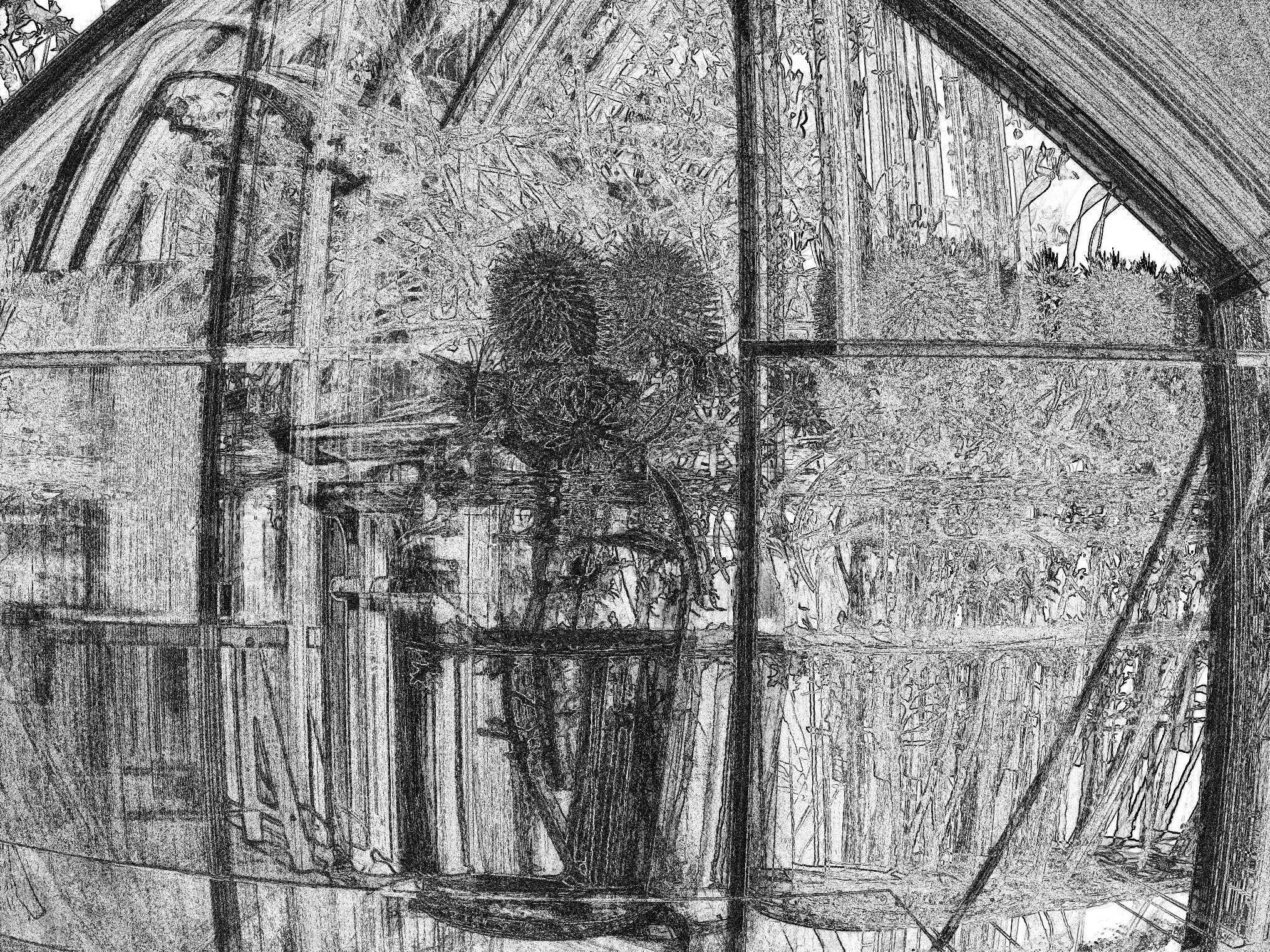

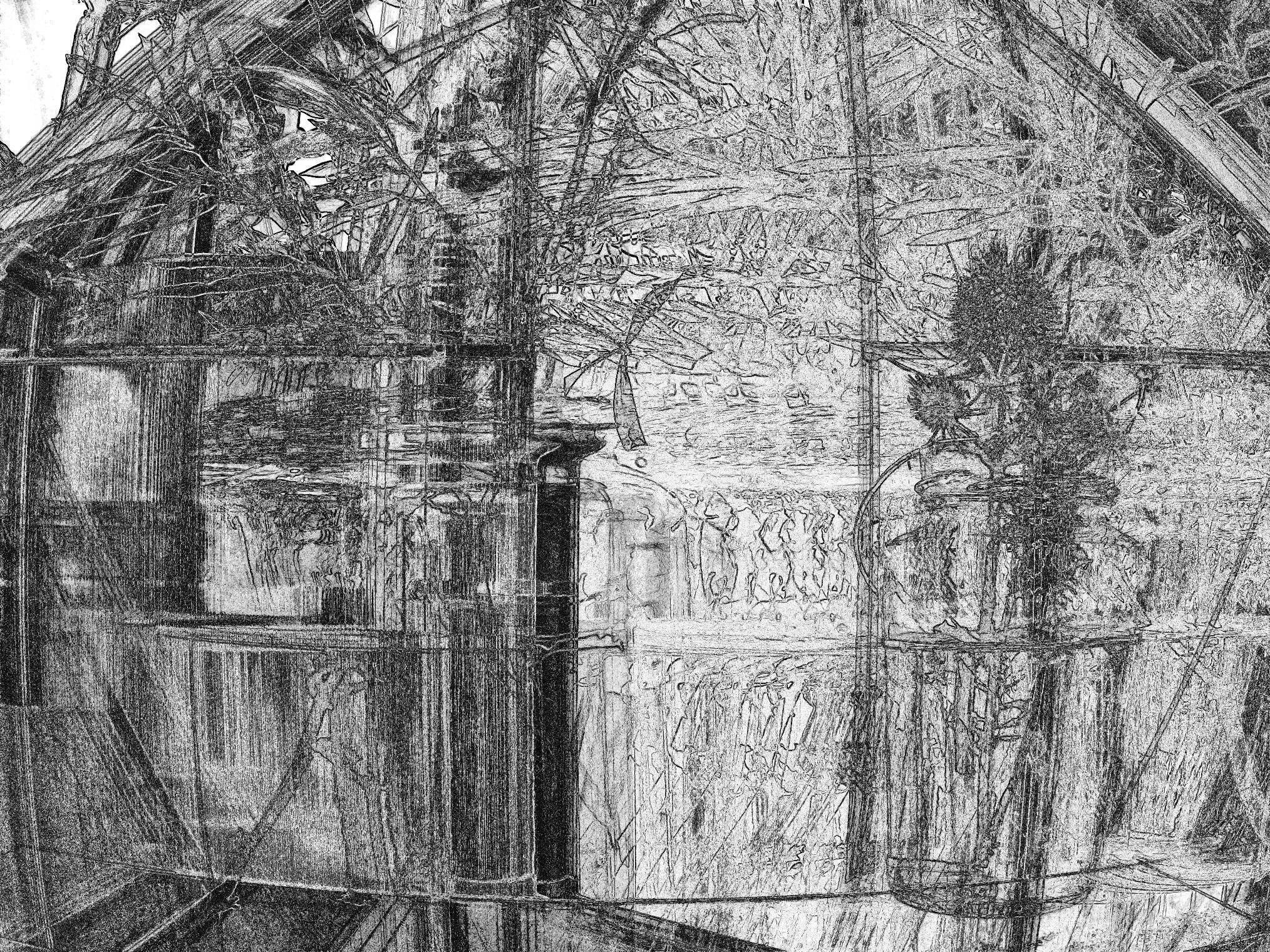

The camera could send messages to, and receive them from, an e-ink screen (controlled by a Raspberry Pi 3B+) and the motorised slider (controlled by a NodeMCU 12-E). This was a lightweight version of the camera used to document the landfill sites. During the show, the camera ran a frame-differencing script that layered up images of the plants over time. This script, combined with the movement of the camera on the slider, made the plants appear to take up the whole width of the greenhouse, with humans drifting around them. The effect reflects the plants’ work in ecologically restoring the land.

The camera was pointed at plants from the landfill sites, which visitors were free to move around in order to change the photographs.
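Frame differencing compares each new frame with the previous one and keeps only the pixels that changed. The following minimal NumPy sketch layers those changed pixels onto a running composite. scikit-image’s `compare_images(a, b, method='diff')` computes the same absolute difference. The threshold of 0.1 is an assumption, not the installation’s setting. So is the choice to compare against the previous frame rather than a fixed background.

```python
import numpy as np

def layer_by_difference(frames, threshold=0.1):
    """Accumulate the changed regions of successive frames onto a composite.

    frames: iterable of at least one greyscale float array in [0, 1], all the same shape.
    The composite starts as the first frame; each later frame only contributes
    the pixels that differ from the frame before it by more than `threshold`.
    """
    frames = iter(frames)
    previous = next(frames).astype(float)
    composite = previous.copy()
    for frame in frames:
        frame = frame.astype(float)
        changed = np.abs(frame - previous) > threshold  # frame-differencing mask
        composite[changed] = frame[changed]             # layer only the changed pixels
        previous = frame
    return composite
```

Because static regions are never overwritten, anything that moves through the frame leaves a trace in the composite. Moving the camera between frames adds more changed pixels, which is one way the slider could spread the plants across the image.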

3. A motorised slider (controlled by an ESP8266).

The slider is 3D printed, based on Adafruit’s design. I chose to run the motor with an ESP8266 to make it easy to communicate with the camera via OSC, and I followed Engineers Garage’s method for interfacing the motor with an ESP8266. During the show, the slider’s movement slowly oscillated between big steps, travelling all the way across the slider, and small steps. This kept the position of the plants in the images changing, which made it appear that the plants were filling the room.
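OSC messages are small binary packets, usually sent over UDP. Each contains a null-padded address string, a type-tag string and big-endian arguments, each aligned to 4 bytes. This sketch encodes an integer OSC message by hand. It pairs the encoder with a cosine schedule that swings slowly between large and small steps, in the spirit of the slider’s movement described above. The `/slider/move` address, the step range and the period are hypothetical. In practice, a library such as python-osc (`SimpleUDPClient(ip, port).send_message(address, value)`) would handle the encoding.

```python
import math
import struct

def _osc_string(s):
    """OSC strings are null-terminated, then padded with nulls to a multiple of 4 bytes."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def osc_message(address, *ints):
    """Encode an OSC 1.0 message whose arguments are all int32."""
    type_tags = "," + "i" * len(ints)
    payload = b"".join(struct.pack(">i", n) for n in ints)  # big-endian int32 arguments
    return _osc_string(address) + _osc_string(type_tags) + payload

def step_size(t, period=600, min_steps=50, max_steps=2000):
    """Step size at tick t: large at t=0, smallest at period/2, large again at period."""
    phase = (1 + math.cos(2 * math.pi * t / period)) / 2  # 1 -> 0 -> 1 over one period
    return round(min_steps + (max_steps - min_steps) * phase)
```

Sending one move would then be `sock.sendto(osc_message("/slider/move", step_size(t)), (esp_ip, esp_port))` on a UDP socket (`socket.socket(socket.AF_INET, socket.SOCK_DGRAM)`). Here `esp_ip` and `esp_port` stand for whatever the ESP8266 firmware listens on. A slow cosine avoids abrupt jumps between the two extremes.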

I initially had problems driving the motor with an L298N driver, but subsequently moved to an A4988 driver and, using a multimeter, adjusted it to the voltage my motor needed (240 mV). The motor then ran steadily for the duration of the exhibition.
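Assume the 240 mV figure is the A4988’s reference voltage (Vref, measured at the driver’s potentiometer). The resulting current limit then follows the datasheet relation I = Vref / (8 × Rs), where Rs is the current-sense resistor on the driver board. Rs varies between boards, with 0.05 Ω, 0.068 Ω and 0.1 Ω all common. The value on the board used here is not stated, so the sketch takes it as a parameter. The function names are hypothetical.

```python
def a4988_current_limit(vref_volts, sense_ohms):
    """Full-scale current limit in amps from the A4988 relation I = Vref / (8 * Rs)."""
    return vref_volts / (8 * sense_ohms)

def a4988_vref_for(current_amps, sense_ohms):
    """Inverse: the Vref (in volts) to dial in for a desired current limit."""
    return 8 * sense_ohms * current_amps
```

For example, 240 mV on a board with 0.1 Ω sense resistors gives 0.24 / 0.8 = 0.3 A. The same Vref with 0.068 Ω resistors gives about 0.44 A. It is therefore worth checking the resistor marking before setting a value.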

Image 1: Back of E-ink Screen Cover Displaying 3D Modelled Hooks to Attach to Greenhouse, Image 2: Larger E-ink screen from the front, Image 3: Prototype of screen

4. A 5.83” e-ink screen (controlled by a Raspberry Pi 3B+)

The screen accesses a folder on the camera via rsync. It hangs on an otherwise unused piece of the greenhouse in a 3D-printed cover: I designed an enclosure that hooks onto that piece of the greenhouse, and the Raspberry Pi rests on its flat surface. Re-using part of the greenhouse helped develop the aesthetic of (computational) restor(y)ation. The orange backdrop of the greenhouse, alongside the orange gloves, adds to this aesthetic by making a visual reference to the industrial past of the ex-landfill sites.
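A pull-based sync like this could be run periodically on the screen’s Raspberry Pi (for example from cron) to mirror the camera’s output folder. This is a sketch under assumptions. The hostname, user and folder paths are hypothetical, and SSH key authentication between the two Pis is assumed. `--delete` is a design choice that stops the screen from showing images that were removed on the camera.

```python
import subprocess

def rsync_pull_command(host, remote_dir, local_dir, user="pi"):
    """Build an rsync command that mirrors remote_dir (on the camera) into local_dir over SSH."""
    return [
        "rsync",
        "-az",       # archive mode (recursive, keeps timestamps) plus compression
        "--delete",  # remove local files that no longer exist on the camera
        f"{user}@{host}:{remote_dir.rstrip('/')}/",  # trailing slash: copy the contents, not the folder
        local_dir,
    ]

def pull_latest(host, remote_dir, local_dir, user="pi"):
    """Run the sync; raises CalledProcessError if rsync fails."""
    subprocess.run(rsync_pull_command(host, remote_dir, local_dir, user), check=True)
```

Keeping the command builder separate from `pull_latest` makes it easy to check the exact arguments before pointing it at real hardware.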

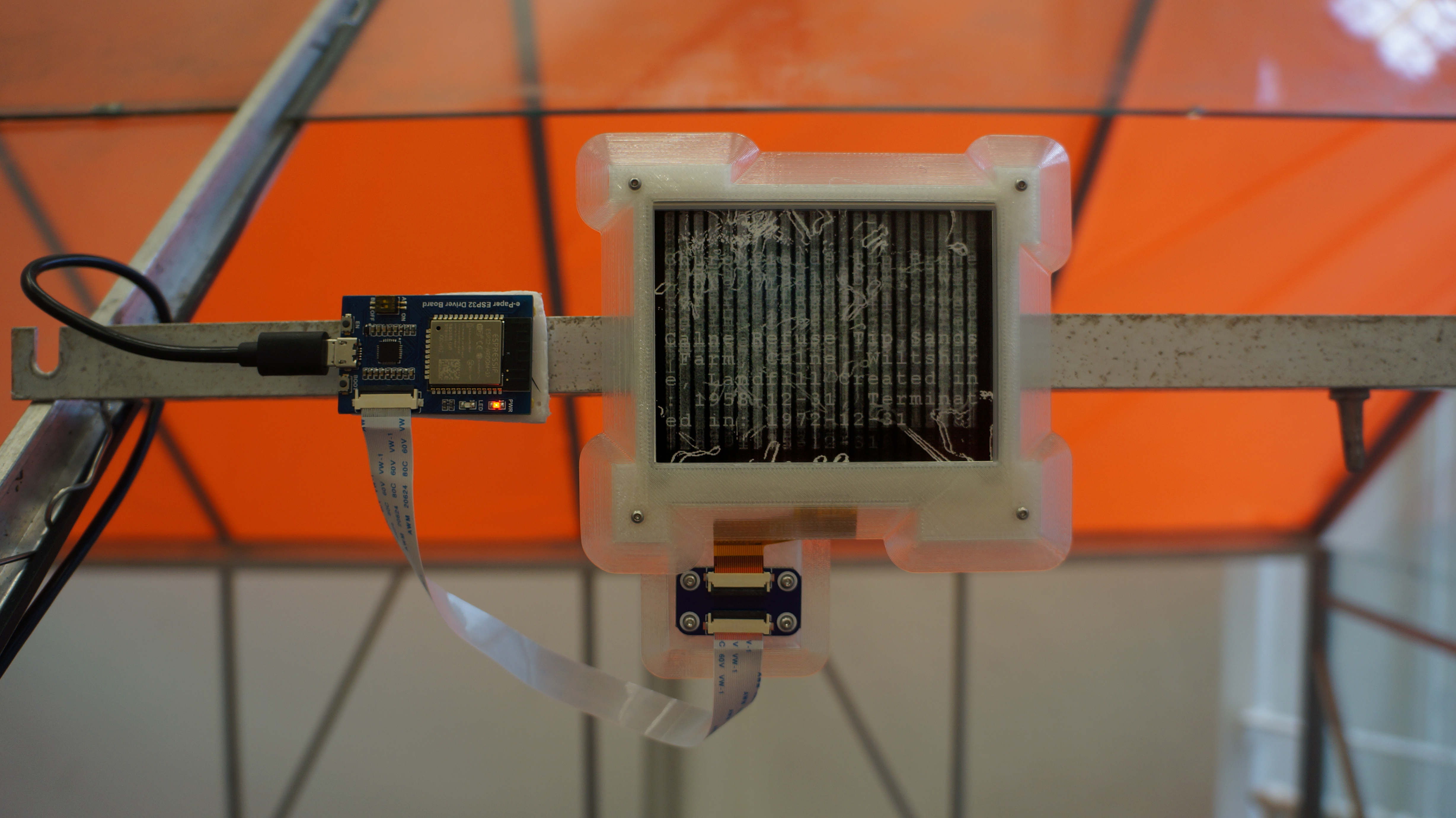

5. A 4.2” e-ink screen (controlled by a Waveshare ESP32 development board)

The screen also hangs on an otherwise unused piece of the greenhouse via a 3D-printed cover. This board was much trickier to program than the Raspberry Pi. I had intended to send images to this screen as well, but because it is a development board I could not get any messaging libraries to work on it. However, I liked how lightweight this board was, and I wanted a way to demonstrate the extent of landfills in the UK by drawing on the Historic Landfills dataset I had. So I decided to use this screen for a hypertext piece.

The data needed significant work before it was ready to use. To add it to the screen, it had to be in an array of strings, as the library for reading .csv files did not work on this board. I first converted it from GeoJSON to CSV, then removed the unwanted columns, then manipulated the data into a set of strings. To display the text, I used the e-ink screen’s ‘partial refresh’ functionality, which only updates pixels that differ from the previous image. This meant that the image on the screen was literally an accumulation of ink on the front of the screen, which I thought encapsulated how landfills have built up over time.
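The conversion can also be done in one step with Python’s standard library, going from GeoJSON straight to a C array of strings that can be pasted into an Arduino sketch for the ESP32. This skips the intermediate CSV stage described above. The property names in the test (`site_name`, `operator`) are hypothetical placeholders for whichever columns of the Historic Landfills dataset are kept. Non-ASCII characters are replaced with `?`, on the assumption that the e-paper font is ASCII-only.

```python
import json

def c_string_literal(text):
    """Quote text as a C string literal (ASCII only, whitespace collapsed)."""
    ascii_text = text.encode("ascii", "replace").decode("ascii")  # non-ASCII -> '?'
    ascii_text = " ".join(ascii_text.split())  # drop newlines, tabs and repeated spaces
    return '"' + ascii_text.replace("\\", "\\\\").replace('"', '\\"') + '"'

def feature_to_line(feature, fields):
    """Join the chosen properties of one GeoJSON feature, skipping missing or empty ones."""
    props = feature.get("properties") or {}
    values = [str(props[f]).strip() for f in fields if props.get(f) not in (None, "")]
    return ", ".join(values)

def geojson_to_c_array(geojson_text, fields, name="landfills"):
    """Emit a C array of strings (one entry per feature) plus its length."""
    features = json.loads(geojson_text)["features"]
    lines = [feature_to_line(f, fields) for f in features]
    body = ",\n".join("  " + c_string_literal(line) for line in lines)
    return (f"const char* {name}[] = {{\n{body}\n}};\n"
            f"const int {name}_count = {len(lines)};\n")
```

Emitting the array length alongside the strings lets the firmware step through entries without a terminating sentinel. The order of `fields` sets the order in which each line is printed on the screen.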

Images: A selection of Images Generated Throughout the Exhibition

Reflection and Further Development

Overall, I am very pleased with the outcome of this project. I think I was very successful in creating an aesthetic of computational restor(y)ation, and the piece gelled together well visually. Alongside this, some of the photos generated during the show succeed in reimagining the plants that restore the land at landfill sites and stand up well as images in their own right. I also think the camera software works well as a replacement for a DSLR at a fraction of the price, which was one of the key aims of the project. I have used the camera on location multiple times and have had no trouble gathering photos with it. This is a great improvement on my previous attempt at a portable camera, which required me to carry a Mini Smart Router and have TouchOSC running on my phone.

During the show, I found that people engaged with the photographs really well, especially the seed photography, and were keen to discuss the folk stories that complemented the imagery. Next time, I would consider linking the movement of the motor to an organic or industrial process, such as the sound of landfill sites or traffic counts at a waste disposal site, so that the output images would carry a trace of that process.

The next steps in developing the camera will be to design a waterproof case and then to reintegrate my work on sound- and motion-sensing photography from Conjuring Landscapes. The case is currently in development, and I am speaking to a materials designer at the Bartlett, UCL. Once the case is ready, I will use the camera for multispecies photography in a project with the group Sustainable Darkroom, as a resident at a photographic garden that they are building over the coming year. In addition, I will be further developing the software for my camera under the guidance of the Environmental Media Lab, who will help me work on digital environmental photography.

I also plan to contact community gardens on old landfill sites in London to offer them panels of engraved glass to exhibit.

Source Code:

The code I developed for this project can be found here.

Code Used:

Picamera for interfacing with the Raspberry Pi Camera using Python

scikit-image. I used its visual image comparison method for frame differencing

Python For Microscopists by bnsreenu. Used for preparing the images for engraving on glass and for the charcoal aesthetic of the photographs

ESP32 e-paper driver board by Waveshare

Image Segmentation Code is Based on this implementation by the Debugger cafe

Historic Landfills Dataset, by the Environment Agency. Used to create the hypertext piece

References:

Bermingham, A. (1986) Landscape and Ideology. University of California Press.

Fowler, C. (2020) Green Unpleasant Land: Creative Responses to Rural England’s Colonial Connections. Peepal Tree Press.

Hutton, R. (2014) Pagan Britain. Yale University Press.

Kimmerer, R. W. (2013) Braiding Sweetgrass. Milkweed Editions.

Loftus, F. (2021) From Vixen Tor: Notes on Photographies of the Land. Engines of Difference.

Low Tech Lab (2018) What Are Low Techs. https://lowtechlab.org/en/low-techs

Mabey, R. (1973) The Unofficial Countryside. Little Toller Books.

Nichols, G. (2014) Picasso, I Want My Face Back. Bloodaxe Books.

Williams, M. (2016) Ireland’s Immortals: A History of the Gods of Irish Myth. Princeton University Press.

Zett, A. & Groom, A. (2019) Dear Environment. Paradoxa, No. 31.

Zylinska, J. (2017) Nonhuman Photography. MIT Press.