Vertigo

Vertigo is an audio-visual installation inspired by the title sequence from the Alfred Hitchcock film of the same name.

produced by: Harry Wakeling

Introduction

For this term's Workshops in Creative Coding final project, the brief was to create a work inspired by a piece of media of our choice, for example a film, book or album. I chose the title sequence of the Alfred Hitchcock film ‘Vertigo’. It was created by Saul Bass in collaboration with John Whitney, and is often regarded as a highly influential piece of motion graphics. I picked it because I have always been a fan of Bass and Whitney; my background prior to studying at Goldsmiths was in graphic design, and these artists (and this piece in particular) have been highly influential on my practice.

Concept and background research

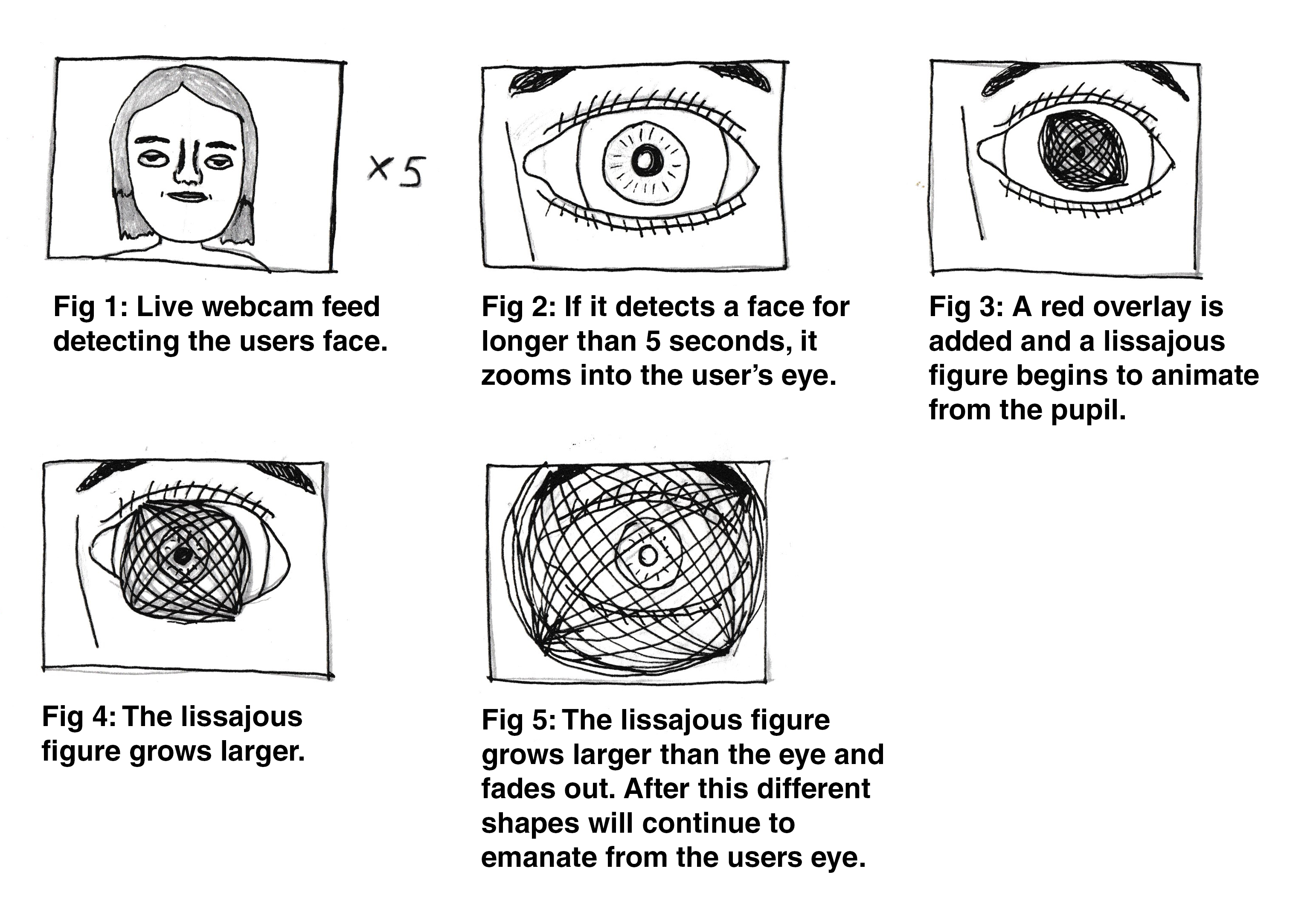

I was interested in creating an installation inspired by the section of the title sequence in which Lissajous figures animate out of a woman’s eye. The original concept in my proposal was to zoom in on the user’s eye using computer vision, at which point the same shapes would animate out of their pupil.

Storyboard of my original concept.

After reflecting on my feedback, I felt it was important to include animated elements other than the Lissajous figure, which on its own would be too derivative of the original sequence. I felt an interesting dialogue could be explored by combining futuristic-looking animations with shapes that are synonymous with early computer art.

One of my main inspirations was the visuals from a performance by the late electronic music artist Sophie. Like the Vertigo title sequence, it makes frequent use of Lissajous figures; however, the colour palette and additional animations give it a futuristic style. I also looked at other works produced by John Whitney; the section where a mandala animates out of the user's eye was inspired by the work of his brother James, in particular the animation ‘Lapis’.

Sophie Livestream Heav3n Suspended

Technical

The eye tracking was based on the face detector example from week 13. After researching online, I found a Haar cascade model designed specifically to detect a user’s right eye, and swapped it in for the original face model. Once I had created a confidence system, I was able to obtain a smooth input of data. I then located the central point of the detection and sent this data to each of my animation objects, using the translate function to centre them on the user's eye.
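The confidence system described above could be sketched roughly as follows. This is a minimal, framework-neutral illustration in Python rather than the project's actual code: the class name `EyeTracker`, the counter limits and the smoothing factor are all assumptions, and the detector is assumed to return either a bounding rectangle or nothing on each frame.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Rect = Tuple[float, float, float, float]  # x, y, width, height


@dataclass
class EyeTracker:
    """Hypothetical confidence gate plus smoothing for a noisy eye detector."""

    max_confidence: int = 10  # assumed cap on the confidence counter
    threshold: int = 5        # assumed level at which the eye counts as present
    smoothing: float = 0.2    # assumed blend factor (0 = frozen, 1 = raw data)
    confidence: int = 0
    centre: Optional[Tuple[float, float]] = None

    def update(self, detection: Optional[Rect]) -> None:
        """Feed one frame's detection result (or None if nothing was found)."""
        if detection is None:
            # A missed frame lowers confidence instead of dropping the eye at once.
            self.confidence = max(self.confidence - 1, 0)
            return
        self.confidence = min(self.confidence + 1, self.max_confidence)
        x, y, w, h = detection
        cx, cy = x + w / 2, y + h / 2  # central point of the detection
        if self.centre is None:
            self.centre = (cx, cy)
        else:
            # Move a fraction of the way towards the new centre to reduce jitter.
            px, py = self.centre
            a = self.smoothing
            self.centre = (px + a * (cx - px), py + a * (cy - py))

    @property
    def is_tracking(self) -> bool:
        return self.confidence >= self.threshold
```

Under these assumptions, each animation object would only draw while `is_tracking` is true, and would translate to `centre` before drawing.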

Using the distance function, I was able to calculate how far the eye position was from the side of the screen. I then mapped this distance onto a series of functions that affected various elements of my animations, for example their size and colour. This meant that each animation would gradually change depending on the position of the user. To animate the Lissajous figures, I used the modulo function to draw each point gradually based on the frame number, erasing the older points at the back of the deque. This created a dynamic animation that morphed out of the user’s eye.
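As a rough illustration of the mapping and the deque-based trail, here is a small Python sketch. It is not the project's code: the helper names, frequencies, step counts and trail length are assumptions chosen for the example, and `map_range` is a hand-written stand-in for whatever map function the framework provides.

```python
import math
from collections import deque


def map_range(value, in_min, in_max, out_min, out_max):
    """Linearly remap value from one range to another, clamped to the output."""
    t = (value - in_min) / (in_max - in_min)
    t = min(max(t, 0.0), 1.0)
    return out_min + t * (out_max - out_min)


def lissajous_point(step, total_steps, freq_x=3, freq_y=2,
                    phase=math.pi / 2, radius=1.0):
    """Point on a Lissajous curve; the modulo wraps step back to the start."""
    angle = 2 * math.pi * (step % total_steps) / total_steps
    return (radius * math.sin(freq_x * angle + phase),
            radius * math.sin(freq_y * angle))


class LissajousTrail:
    """Keeps only the most recent points so the curve appears to draw itself."""

    def __init__(self, total_steps=360, max_points=120):
        self.total_steps = total_steps
        # A bounded deque discards the oldest point once max_points is reached.
        self.points = deque(maxlen=max_points)

    def update(self, frame_number, radius):
        self.points.append(
            lissajous_point(frame_number, self.total_steps, radius=radius))


# Example: distance of the eye from the left edge of an assumed 640 px frame.
eye = (320.0, 200.0)
distance = math.dist(eye, (0.0, eye[1]))
radius = map_range(distance, 0, 640, 50, 150)
```

Calling `update` once per frame with the current frame number and the mapped `radius` would grow the trail point by point, while the size of the figure follows the user's position.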

An important element that I needed to get right was the way the animations would develop over time. I created a timer and an if statement inside my draw function; if a user's eye was detected for more than 5 seconds, the if statement would call the function used to trigger the animations. I then used a switch statement to introduce each animation object in turn over a set period of time.
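The timing logic might look something like the sketch below. It is a simplified Python illustration under several assumptions: the stage names and the 4-second stage length are invented for the example, a tuple lookup stands in for the switch statement, and resetting the sequence when the eye is lost is a guess at the behaviour rather than something stated above. Only the 5-second dwell time comes from the text.

```python
from typing import Optional

DWELL_SECONDS = 5.0  # from the text: eye must be seen for more than 5 seconds
STAGE_SECONDS = 4.0  # assumed length of each stage
STAGES = ("lissajous", "mandala", "gradient_rings")  # hypothetical stage names


class Sequencer:
    """Starts the animation sequence after a dwell time, then steps through it."""

    def __init__(self) -> None:
        self.eye_seen_since: Optional[float] = None
        self.triggered_at: Optional[float] = None

    def update(self, now: float, eye_detected: bool) -> Optional[str]:
        """Return the name of the stage to draw this frame, or None."""
        if not eye_detected:
            # Assumption: losing the eye resets both the timer and the sequence.
            self.eye_seen_since = None
            self.triggered_at = None
            return None
        if self.eye_seen_since is None:
            self.eye_seen_since = now
        if self.triggered_at is None:
            if now - self.eye_seen_since > DWELL_SECONDS:
                self.triggered_at = now
            else:
                return None
        # Pick a stage from the time elapsed since the trigger, holding the last.
        stage = int((now - self.triggered_at) // STAGE_SECONDS)
        return STAGES[min(stage, len(STAGES) - 1)]
```

In a draw loop, `update` would be called once per frame with the current time in seconds and the tracker's detection state, and the returned name would select which animation object to draw.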

Another element I wanted to achieve in my animations was a smooth gradient of colour. I studied the work of Zach Lieberman for inspiration, and found a useful tutorial based on his work for creating shapes that gradually change colour using the sin function. I used this principle in each of the animations I created behind the Lissajous figure, enabling the futuristic, psychedelic style I was hoping for.
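One common way to get this kind of gradient is to drive each colour channel with a phase-shifted sine wave. The Python sketch below illustrates that general idea only; it is not taken from the tutorial or from the project, and the function name, `speed` and `spacing` parameters are assumptions.

```python
import math


def sine_colour(index, time_s, speed=1.0, spacing=0.15):
    """Return an (r, g, b) tuple in 0..255 that drifts smoothly over time.

    index   -- position of the shape in the sequence (offsets its phase)
    time_s  -- elapsed time in seconds
    speed   -- assumed rate at which the colours cycle
    spacing -- assumed phase difference between neighbouring shapes
    """
    phase = time_s * speed + index * spacing

    def channel(offset):
        # sin() returns -1..1, so scale and shift it into the 0..255 range.
        return round(127.5 + 127.5 * math.sin(phase + offset))

    # Offsetting the channels by a third of a cycle spreads the hues apart.
    return (channel(0.0), channel(2 * math.pi / 3), channel(4 * math.pi / 3))


# Example: colours for 50 concentric shapes at a single moment in time.
palette = [sine_colour(i, time_s=0.0) for i in range(50)]
```

Because neighbouring shapes differ only slightly in phase, adjacent colours blend into one another, and increasing `time_s` each frame makes the whole gradient shift gradually.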

Future Development

In my original proposal, I intended to create a function that slowly zooms into a close-up of the user’s eye. I attempted to achieve this using the draw subsection function, but the results looked very pixelated. I also attempted to run my project on a Raspberry Pi; I managed to get a simplified version working, but the full sequence slowed the frame rate down a great deal. I decided to use my laptop when it came to filming the project; if I had more time, I would research the most efficient way of running computer vision on the Pi. I believe this could potentially be achieved by using cameras that are native to the device, which might also fix my zooming issue.

Self Evaluation

Overall, I am happy with the outcome of this project. I believe I was able to take inspiration from an iconic motion graphics sequence and add enough variation to create something unique. This project also ties in well with the themes I am exploring in my research and theory module. I have been interested in the history of computer animation, looking at work associated with media archaeology. This involves studying developments in technology throughout history and reflecting on how they have influenced the tools we use today. I have been particularly interested in artists who explore a hybrid approach between old and new media; this is something I attempted to address within this project, taking a sequence that is regarded as an example of early computer art and re-imagining it using modern developments in computer vision.

This project has also taught me about several elements that I intend to develop in the future. One example is the use of switch statements. I had not used them before, but I feel they will be beneficial for creating future animations that need to be displayed in a sequence. I have also learned about the importance of creating confidence systems to enable a consistent, smooth input of data. I was also happy with how I planned out my proposal in terms of storyboarding; this enabled me to receive useful feedback on how to improve my concept, and gave me a useful structure to base my animations on.

If I had further time to develop this project, I would have liked to add in more interactions from the user, as well as creating additional animations that can be modified. That being said, I am happy with the interaction I currently have, as I was able to gather a smooth input of data that I used to affect various elements of each animation. In summary, I am happy with the work produced, and I feel it has given me a lot to think about regarding my final project in the summer term.

References

Documentation

https://www.youtube.com/watch?v=xXPSe57pOss&ab_channel=SOPHIE

https://www.youtube.com/watch?v=kzniaKxMr2g&ab_channel=Chapad%C3%A3odoFormoso

https://www.youtube.com/watch?v=SkLn8mamU78&t=64s&ab_channel=MovieTitles

Tutorials

https://www.youtube.com/watch?v=kYejiSrzFzs&ab_channel=danbuzzo

https://www.youtube.com/watch?v=ehT7d9JPulQ&ab_channel=thedotisblackcreativecoding

https://www.youtube.com/watch?v=3i9cN8bbJb8&t=1190s&ab_channel=newandnewermedia

Referenced Code

http://www.generative-gestaltung.de/2/sketches/?02_M/M_2_5_01