Foley

A generative programme that listens to its environment and reconstructs its genetic code.

produced by: Howard Melnyczuk

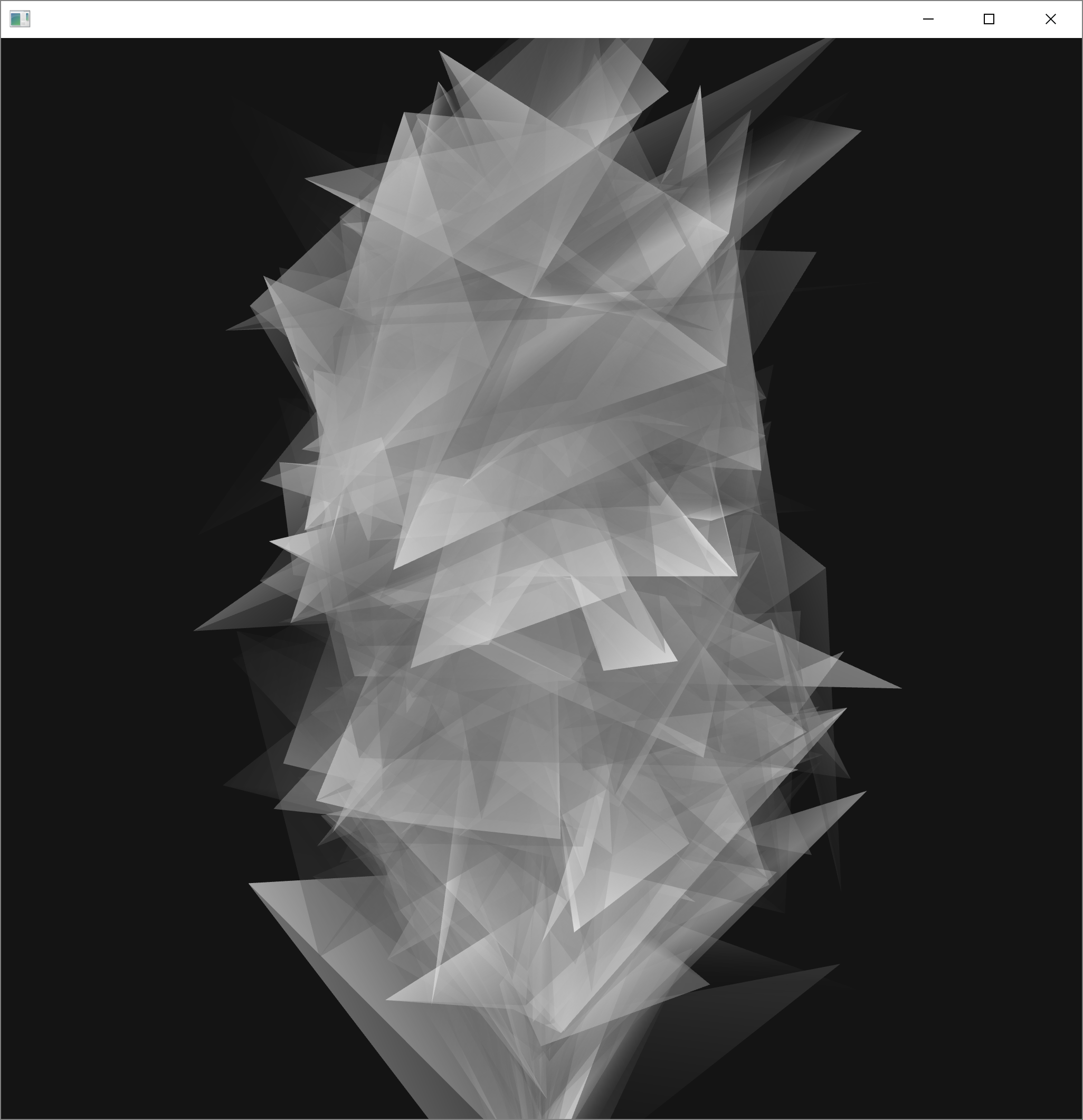

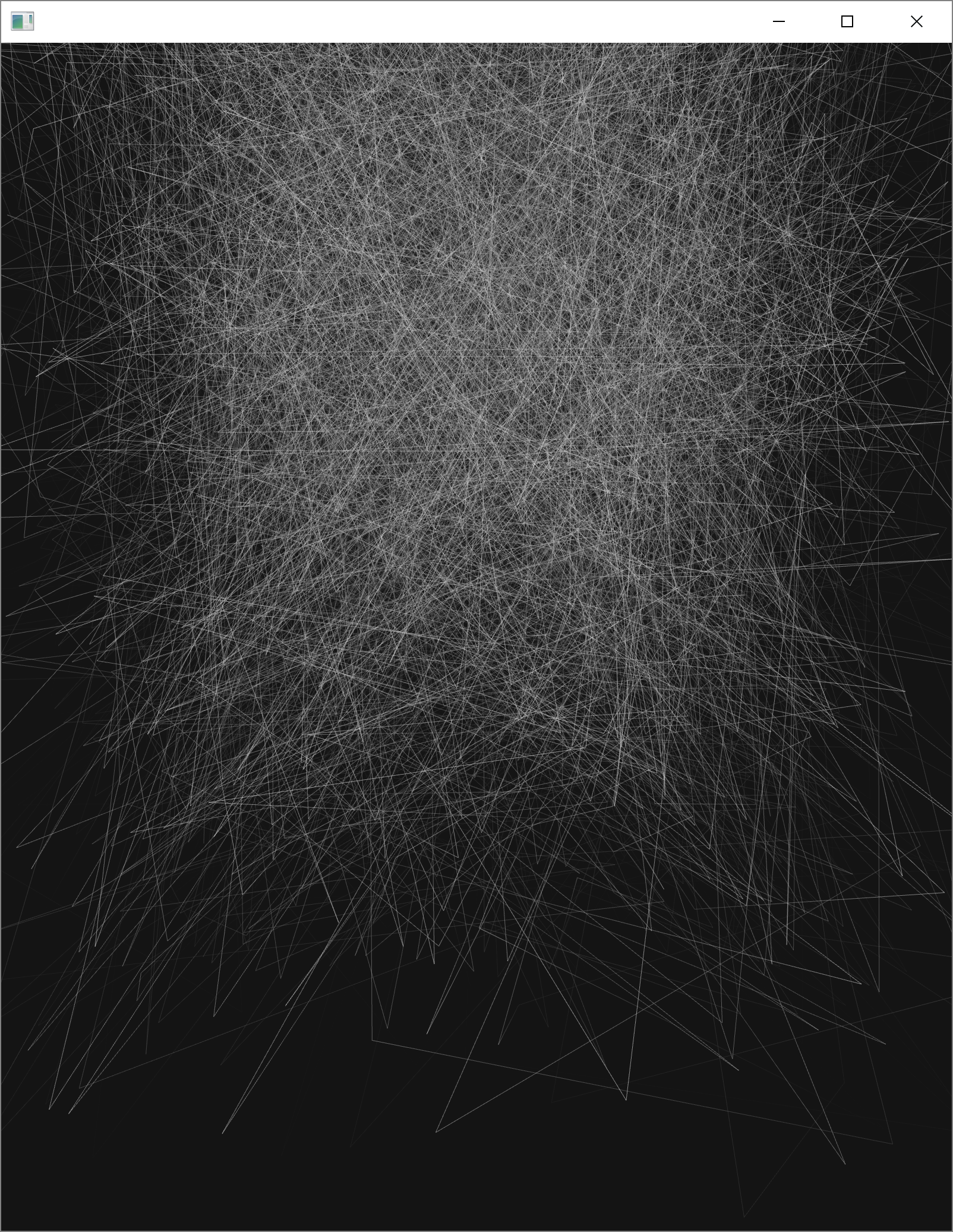

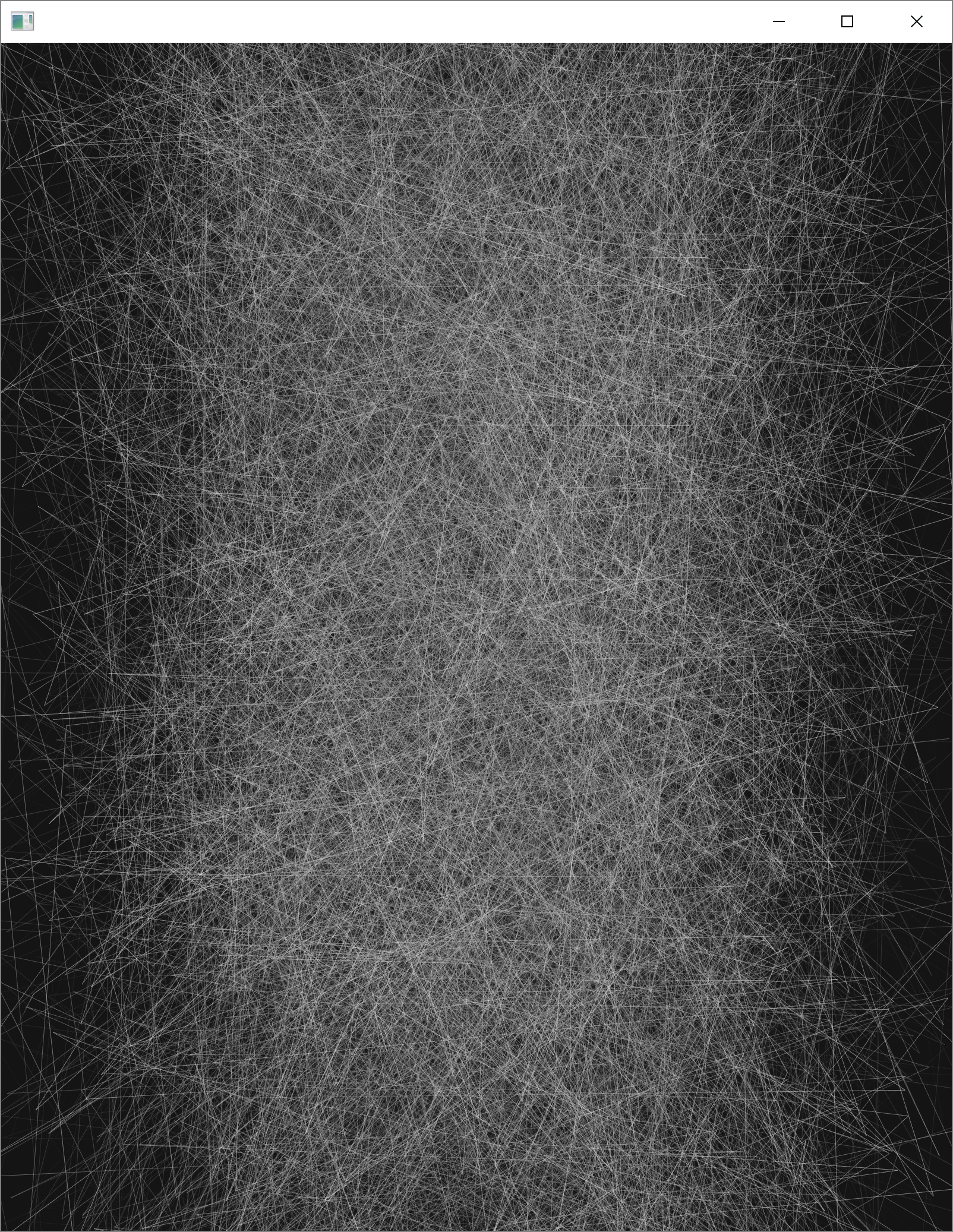

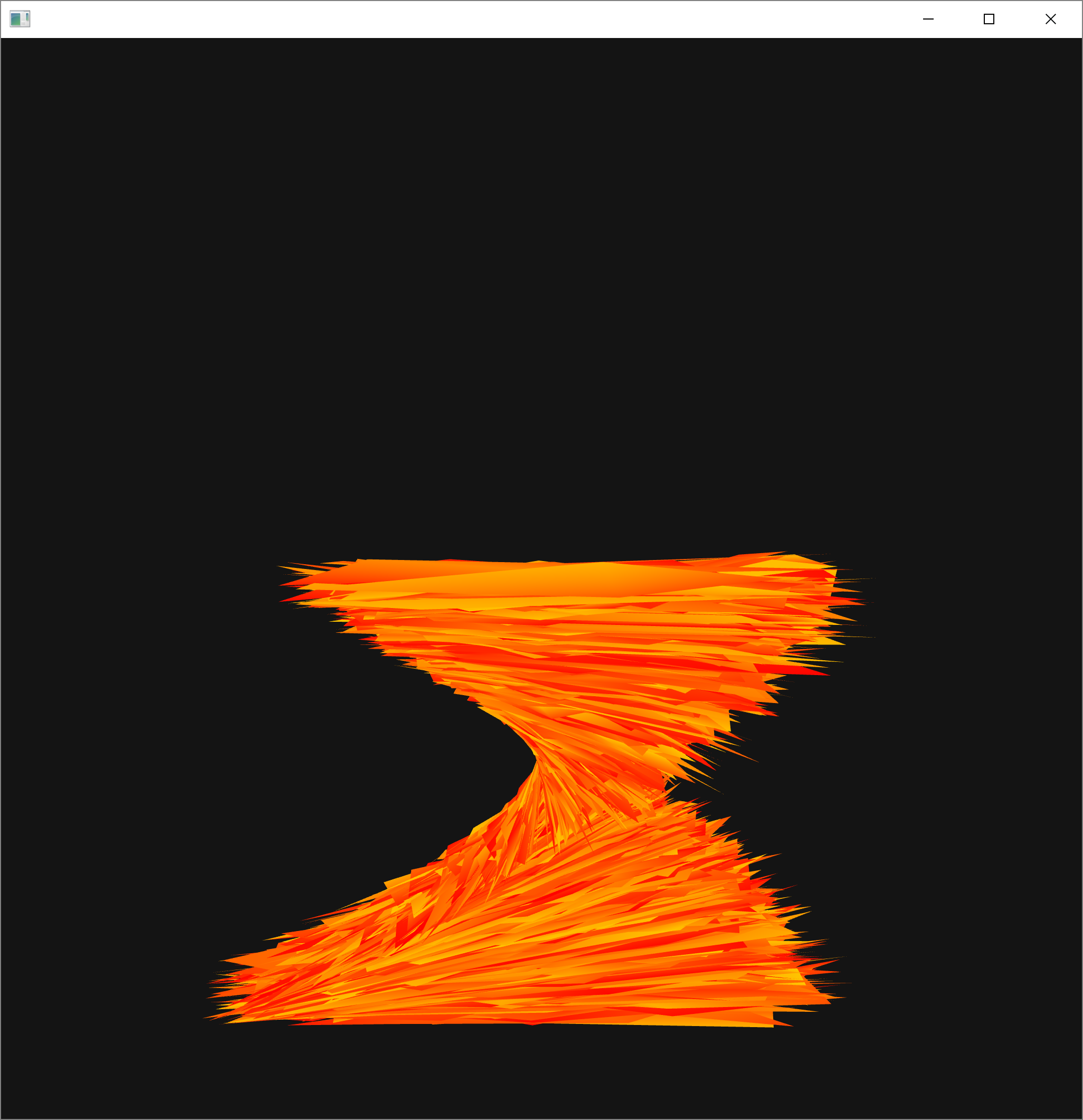

Foley uses a genetic algorithm to process live audio input and builds a shape on screen from the result. What is shown is therefore both a live representation of sound and a short history of the environment. Foley is designed to be left running in a space so that it can harmonise with the room, changing drastically only when there is a major change in the sonic texture.

The shape on screen is made from a series of DNA sets whose genes control their placement and colouring. This genetic code is then bred with a similar code that is generated live from audio data processed through a Fast Fourier Transform (using Kyle McDonald's ofxFft addon). The fitness of this live code is linked to the volume of the sound at any given time.
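As a rough illustration of that breeding step, here is a minimal sketch in plain C++ with no openFrameworks dependency. It is not the code Foley runs. Everything in it is an assumption made for the example: the names `Genome`, `rmsVolume`, `genomeFromSpectrum` and `breed` are hypothetical, genes are taken to be floats in [0, 1], "volume" is taken to be the RMS level of the audio buffer, and fitness is taken to act as the per-gene chance that the audio genome overwrites the shape genome.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical genome: a flat list of genes, each assumed to lie in [0, 1].
using Genome = std::vector<float>;

// Root-mean-square level of an audio buffer, used here as "volume".
float rmsVolume(const std::vector<float>& samples) {
    if (samples.empty()) return 0.0f;
    double sum = 0.0;
    for (float s : samples) sum += static_cast<double>(s) * s;
    return static_cast<float>(std::sqrt(sum / samples.size()));
}

// Turn FFT bin magnitudes into a genome by averaging groups of bins and
// normalising by the loudest bin, so every gene lands in [0, 1].
Genome genomeFromSpectrum(const std::vector<float>& magnitudes,
                          std::size_t geneCount) {
    Genome genome(geneCount, 0.0f);
    if (magnitudes.empty() || geneCount == 0) return genome;
    const float peak = *std::max_element(magnitudes.begin(), magnitudes.end());
    if (peak <= 0.0f) return genome;  // silence: leave all genes at zero
    for (std::size_t g = 0; g < geneCount; ++g) {
        const std::size_t begin = g * magnitudes.size() / geneCount;
        const std::size_t end =
            std::max(begin + 1, (g + 1) * magnitudes.size() / geneCount);
        float sum = 0.0f;
        for (std::size_t i = begin; i < end; ++i) sum += magnitudes[i];
        genome[g] = (sum / static_cast<float>(end - begin)) / peak;
    }
    return genome;
}

// Uniform crossover. Each gene is copied from the audio genome with a
// probability equal to the audio fitness (clamped to [0, 1]); otherwise the
// gene from the existing shape genome is kept.
Genome breed(const Genome& shape, const Genome& audio, float audioFitness,
             std::mt19937& rng) {
    const float p = std::min(1.0f, std::max(0.0f, audioFitness));
    std::bernoulli_distribution takeAudio(p);
    Genome child = shape;
    const std::size_t n = std::min(shape.size(), audio.size());
    for (std::size_t i = 0; i < n; ++i) {
        if (takeAudio(rng)) child[i] = audio[i];
    }
    return child;
}
```

Under these assumptions, each frame would call `breed(shape, genomeFromSpectrum(magnitudes, shape.size()), rmsVolume(samples), rng)` and draw the result. A quiet room leaves the shape almost untouched, while a sustained loud change gradually rewrites it, which is one way to get the "short history" behaviour described above.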

I wanted to take the traditional and somewhat overdone concept of sound visualisation and attempt to twist it into something new and (hopefully) more complex and interesting. Given that the sound is represented only in so far as it mutates the existing code, I feel I have begun a journey towards this. Foley is beautiful to watch and interesting to return to. It is intriguing to run in different locales, since it is genuinely different in different-sounding places.

Moving forward, I would like to first improve the FFT processing. This is a very complex and deep area of sound computing and I have only dipped my toe in. By improving the analysis of the incoming sound, I hope to build a stronger algorithm that does more to understand the real textural differences between soundscapes.

The next step after that would be to move the visual elements into a shader and begin to analyse speech and language. With these two components, I would be able to write a script that pulls images from the web in response to the conversations viewers have around the piece, in a move that I hope will be both compelling and creepy.

References

- ofxFft: https://github.com/kylemcdonald/ofxFft

- Demo video uses found footage: https://www.youtube.com/watch?v=cO_IFJaWmhA

- Some code was hacked from the 'Generative Sculptures' class exercise