More Than My Name

More Than My Name is a music video made in collaboration with Mee & The Band. The video uses computer vision algorithms to generate effects based on the dancers' movements and the tracking of their faces.

Direction, choreography, creative coding and editing by: Clemence Debaig

(Music by: Mee & The Band)

(The video hasn't been released officially, please do not share just yet!)

The song explores the notions of identity and diversity, especially how modern technology defines us and our identification. However, we exist as an identity from the moment we are born, before we are named, and when our bodies die we somehow still exist: having lived, we leave an imprint. This video reveals the us that is unseen, a spiritual take on mental health and proof of our existence.

Behind the scenes

Early research

The project started with an analysis of the song and its lyrics to identify key themes and explore visual effects accordingly.

- Technology and society blending our identities

Using face tracking and/or composing a face from parts of different people's faces

- Feeling trapped in a web of expectations

Using the Good Features to Track algorithm and Delaunay triangulation

- Freeing oneself and sharing one’s intensity and beauty

Using frame differencing to produce particles based on movement, giving the impression that the particles come out of the person’s body

Prototype and choreography workshop

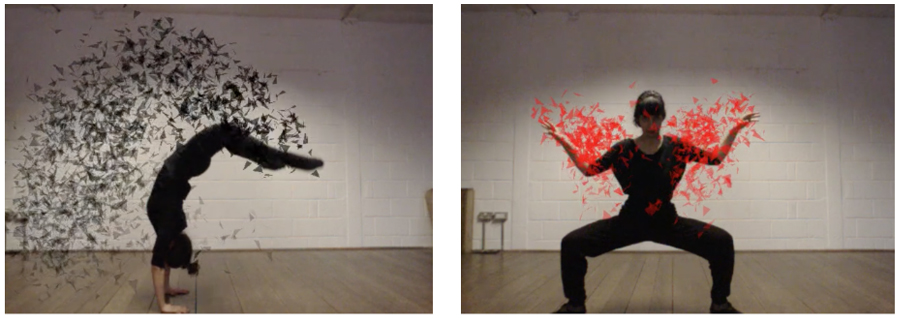

I put together a first prototype to explore the potential of the technology from a movement point of view. This prototype was used in a workshop with the dancers to start creating the choreography according to the different effects.

During the session, I led them through a few creative tasks while live coding to adjust the algorithms to their movements and the setup of the room. A projector was installed in the room so the dancers could interact with and react to the system live.

Two main effects were explored during the session:

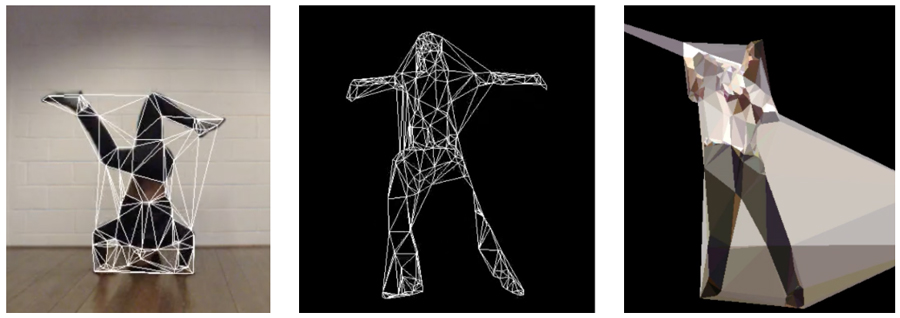

- “Delaunay”: using the Good Features to Track algorithm in OpenCV to detect interesting points and triangulate from them (inspired by Memo Akten's music video for the Wombats).

- “Glow”: using frame differencing to generate particles (inspired by the Body Glow assignment).
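To make the “Glow” idea more concrete, here is a minimal sketch of frame differencing written in plain C++ rather than with the OpenCV or ofxCv calls used in the project. The `SpawnPoint` type, the `findMotionPoints` function and its `threshold` and `step` parameters are hypothetical names chosen for illustration; the actual code and values are not documented here.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <vector>

// Hypothetical type for illustration: a position where a particle could be spawned.
struct SpawnPoint {
    int x;
    int y;
};

// Compares two grayscale frames of the same size (row-major, one byte per pixel)
// and returns one spawn point for every sampled pixel whose brightness changed
// by more than `threshold`. `step` subsamples the grid to limit the point count.
std::vector<SpawnPoint> findMotionPoints(const std::vector<std::uint8_t>& previous,
                                         const std::vector<std::uint8_t>& current,
                                         int width, int height,
                                         int threshold, int step) {
    std::vector<SpawnPoint> points;
    if (step < 1 || width <= 0 || height <= 0) return points;

    const std::size_t expected = static_cast<std::size_t>(width) * height;
    if (previous.size() != expected || current.size() != expected) return points;

    for (int y = 0; y < height; y += step) {
        for (int x = 0; x < width; x += step) {
            const std::size_t i = static_cast<std::size_t>(y) * width + x;
            const int diff = std::abs(static_cast<int>(current[i]) -
                                      static_cast<int>(previous[i]));
            if (diff > threshold) points.push_back({x, y});  // this pixel moved
        }
    }
    return points;
}
```

Each returned point marks a place where the image changed between two frames, so emitting particles there gives the impression that they come out of the moving body. A higher `threshold` would ignore camera noise, at the cost of missing subtle movements.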

I also added a GUI to adjust some of the parameters faster. All the sections were recorded in openFrameworks using ofxVideoRecorder, capturing the movements of the dancers with the added graphics for further research.

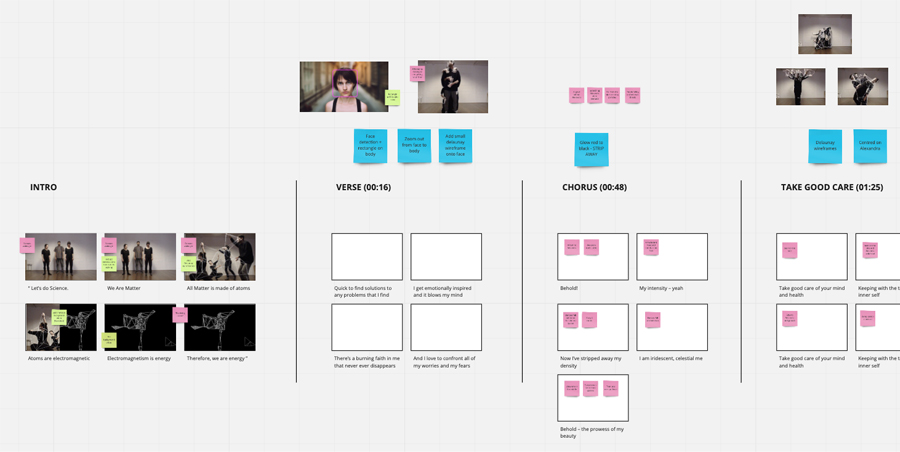

Storyboard, choreography, rehearsals and shooting prep

To prepare for the final video shooting, I created a detailed storyboard with the different sections and effects. This helped plan all the sequences that needed to be recorded on the day but also structured the choreographic research.

During the following rehearsals, I kept the same setup as in the initial workshop to help the dancers understand the movement textures each section needed, so that the algorithms would pick them up well. For example, I really liked how sudden movements generated particles, which then disappeared when the dancers stayed still or moved into a much slower sequence. Being able to experience it live helped speed up the process and reduced rehearsal time.
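One way to get that behaviour, where particles appear with sudden movement and vanish once the dancer is still, is to give every particle a limited lifetime. The sketch below is an assumption about how this could work, not the project's actual code: the `Particle` struct, its `life` field and the `updateParticles` function are hypothetical.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical particle for illustration: a position and a remaining lifetime in seconds.
struct Particle {
    float x;
    float y;
    float life;
};

// Ages every particle by `dt` seconds and removes the ones whose lifetime has run out.
// If no movement spawns new particles, the vector empties on its own.
void updateParticles(std::vector<Particle>& particles, float dt) {
    for (Particle& p : particles) {
        p.life -= dt;
    }
    particles.erase(
        std::remove_if(particles.begin(), particles.end(),
                       [](const Particle& p) { return p.life <= 0.0f; }),
        particles.end());
}
```

With a scheme like this, a still dancer produces no new particles and the existing ones die out, which matches the contrast between sudden and slow sequences described above.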

Shooting day

The final images were shot in 4K with a DSLR camera. This was a requirement from the artist, so that the final video could be used on different platforms in the future, including TV and live performances.

On the day, I still had a computer and the PS3 Eye camera hooked up to the DSLR camera as a control unit, to make sure the setup could still generate the different effects properly. This helped me control the lighting, fine-tune the outfit and direct the recording of the choreography.

Final editing and the challenges of dealing with 4K footage

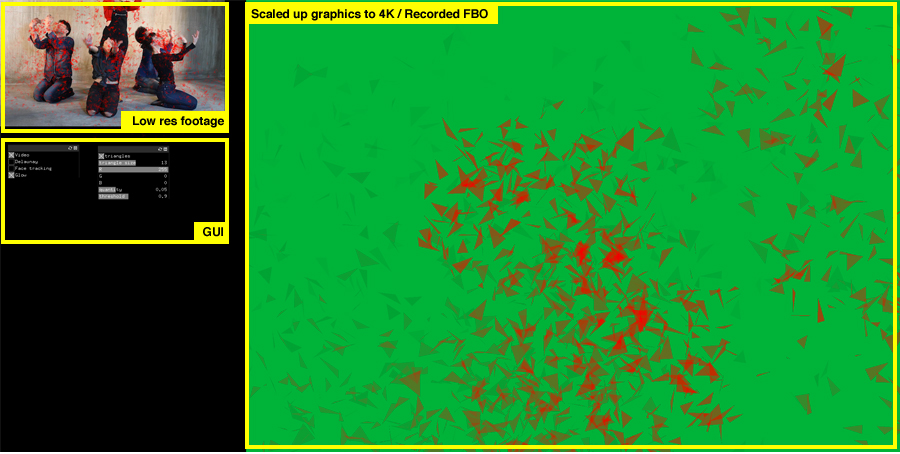

In my early prototype, I had already tested using a video file as the input for computer vision instead of the live feed from the PS3 Eye camera. But I had not done it with a 4K file… I realised very quickly that I would need to change my approach, as I couldn’t even get openFrameworks to play the 4K video smoothly.

As the 4K element was already agreed with the artist, I had to find a workaround to deal with the limitations of my computer. The final version of the code was organised in three parts:

- Using a low-resolution video file to run the different computer vision algorithms, displayed on the screen as a way to check the final effect

- Using several GUI panels to adjust the parameters live

- Scaling up only the graphics to 4K, drawn on a green background in their own FBO. That FBO is the one recorded using ofxVideoRecorder
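The scaling step in the last point comes down to mapping coordinates found on the low-resolution analysis frame onto the 4K canvas. Here is a minimal sketch in plain C++; the `Point2f` type and the `scaleToOutput` function are hypothetical, and the 640 × 360 analysis size in the usage note is an assumption, since the resolution of the low-resolution file is not stated here.

```cpp
// Hypothetical 2D point for illustration.
struct Point2f {
    float x;
    float y;
};

// Maps a point detected on the low-resolution analysis frame to the matching
// position on the high-resolution output canvas. Each axis is scaled
// independently, so the two frames should share the same aspect ratio.
Point2f scaleToOutput(Point2f p, int analysisW, int analysisH, int outputW, int outputH) {
    const float scaleX = static_cast<float>(outputW) / static_cast<float>(analysisW);
    const float scaleY = static_cast<float>(outputH) / static_cast<float>(analysisH);
    return {p.x * scaleX, p.y * scaleY};
}
```

For example, with an assumed 640 × 360 analysis frame and a 3840 × 2160 output, the centre point (320, 180) maps to (1920, 1080). Only the positions are scaled, so the heavy computer vision work still runs on the small frame.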

The output is a 4K video file with the graphics on a green background, following the “green screen” technique. I then used Adobe Premiere Pro to re-sync the graphics with the original 4K video and make the green background transparent.
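For readers unfamiliar with keying, the principle can be sketched in a few lines of plain C++. This is only an illustration of the general idea, not how Premiere Pro implements it: the `Rgb` type, the `chromaKeyAlpha` function and the `tolerance` parameter are hypothetical.

```cpp
#include <cstdint>

// Hypothetical 8-bit RGB colour for illustration.
struct Rgb {
    std::uint8_t r;
    std::uint8_t g;
    std::uint8_t b;
};

// Returns the alpha for one pixel: 0 (fully transparent) when the pixel is
// within `tolerance` of the key colour in RGB space, 255 (opaque) otherwise.
// Real keyers add soft edges and spill removal, which this sketch leaves out.
std::uint8_t chromaKeyAlpha(Rgb pixel, Rgb key, int tolerance) {
    const int dr = static_cast<int>(pixel.r) - static_cast<int>(key.r);
    const int dg = static_cast<int>(pixel.g) - static_cast<int>(key.g);
    const int db = static_cast<int>(pixel.b) - static_cast<int>(key.b);
    const int distanceSquared = dr * dr + dg * dg + db * db;
    return distanceSquared <= tolerance * tolerance ? 0 : 255;
}
```

A pure green background keys out cleanly as long as the graphics themselves stay well away from that green, which is worth keeping in mind when choosing the colours of the effects.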

Showcasing the video at the pop-up with a mini installation

For the final presentation, I realised that letting people interact with the system was worth a thousand words in explaining how the video had been produced. So I presented the final version of the interactive system next to the video during our pop-up exhibition 2POP||!2POP. The side installation invited visitors to test the effects live, encouraging them to play around and dance in front of the camera!

Links

Mee And The Band on Instagram

More Than My Name on Spotify

Inspirations

Memo Akten's music video for the Wombats

Delaunay video assignment

Body Glow assignment

Addons

ofxCv

ofxOpenCv

ofxPS3EyeGrabber

ofxKinect

ofxDelaunay

ofxGui

ofxVideoRecorder (to output a video file)