The Surveilled Self: Resisting a Quantified Self and The Data Landscape Today

By: Eri Ichikawa, Omolara Aneke, and Tate Smith

“Until you make the unconscious conscious, it will direct your life and you will call it fate.”

-Carl Jung

How often have you opened a web browser or social media platform, only to see a product or service you were just talking about with a friend? Experts in predictive algorithms will say this is simply evidence of the algorithm’s effectiveness, while more skeptical folks will say there’s something nefarious afoot. Whichever side you take, we believe this is a consequence of the quantified self. Contemporary society is so comfortable accepting “terms and conditions” that we have “distilled [the digital] everywhere throughout real life, in homeopathic doses, no longer detectable. And if the rate of reality is lower from day to day, it is because the medium itself has passed into life...” (Baudrillard, 1995). We have thus extended the self into the digital, and we are only just beginning to see the consequences of that decision while the problem metastasizes further. Author Derrick de Kerckhove writes, “In the Internet age, the creation of a digital layer over our personal identity produced a strong impact in the very definition of what a person is and his/her identity, be it personal or collective” (2013). This newfound personhood carries with it a somber reality: our personage is a commodity. You are not you, but a collection of numbers held in a .json file, ready to be traded like oil in an unforgiving market.

Your default settings allow Facebook to monitor a multitude of actions. Information about operations and behaviors performed on the device, such as whether a window is foregrounded or backgrounded, seems benign, while the ability to collect information about other devices that are nearby or on your network finally explains why that person you saw while using a pub’s Wi-Fi suddenly showed up in your “People You May Know” feed. The most underhanded of all is the ability to monitor Bluetooth signals and information about nearby Wi-Fi access points, beacons, and cell towers, as well as access to your GPS location. All these factors create an intoxicating cocktail of data ready to be sold and traded with hyper-specificity. Facebook acts as the net while smaller companies lurk in the shadows on the brink of the law; how does it make you feel knowing that the data of survivors of sexual abuse and those with histories of substance abuse is more valuable than that of an expectant parent? Capitalism’s invisible hands mold all before them with impunity.

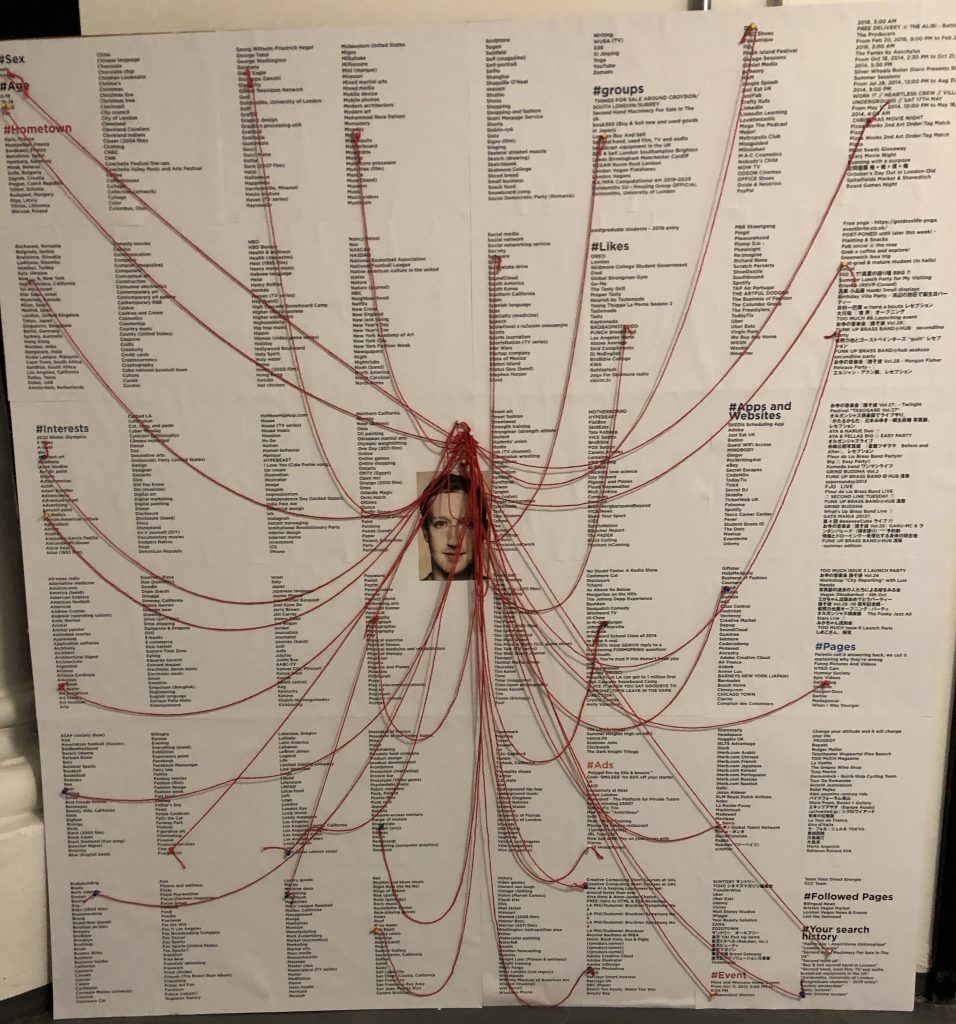

The three Moirai of Greek myth spin a thread that represents the fate of a human; etymologically, data comes from the Latin datum, meaning “something given,” just as your fate was given to you. Our artefact will extend this metaphor by creating a “person” entirely out of data. Placed inside a church, the artefact will also commune with this metaphor and with the false narrative of AI as god. Viewers will be asked to create a new self the same way that algorithms do: through the attribution of data points to a specific place. In doing so, we aim to create a temporary autonomous zone, of Hakim Bey fame, which will elucidate the fluidity of the quantified self and the ease with which it comes into being. Annemarie Mol and John Law have previously argued that “...artefacts may be strategically designed to have politics” (1995, pp. 280-281). Our artefact will explore politics through its function as a locus of discussion for the ethics around data rights, data, and the surveillance thereof. Our artefact aims to bring the purposefully obfuscated data practices of big tech into the light, thus enabling viewers to act with greater awareness.


References

Araujo, Patxi. “The Trained Particles Circus : Dealing with Attractors, Automatons, Ghosts and Their Shadows.” Leonardo, vol. 52, no. 4, Aug. 2019, pp. 389–94. DOI.org (Crossref), doi:10.1162/leon_a_01781.

Azuma, Hiroki, et al. General Will 2.0: Rousseau, Freud, Google. Vertical, 2014.

Baudrillard, Jean. “The Virtual Illusion: Or the Automatic Writing of the World.” Theory, Culture & Society, vol. 12, no. 4, Nov. 1995, pp. 97–107, doi:10.1177/026327695012004007.

Bey, Hakim. T.A.Z.: The Temporary Autonomous Zone, Ontological Anarchy, Poetic Terrorism.

Bridle, James. “The Render Ghosts.” Electronic Voice Phenomena, Tom Chivers, 2013, http://www.electronicvoicephenomena.net/index.php/the-render-ghosts-james-bridle/.

Bridle, James. “On the Virtual.” Voices, National Gallery of Victoria Triennial, 2017, https://www.ngv.vic.gov.au/exhibition_post/on-the-virtual/.

Buongiorno, Federica. “From the Extended Mind to the Digitally Extended Self: A Phenomenological Critique.” Aisthesis: Pratiche, Linguaggi e Saperi Dell’Estetico, vol. 12, no. 1, Jan. 2019, pp. 61–68. EBSCOhost, doi:10.13128/Aisthesis-25622.

Chen, Y., et al. “Demystifying Hidden Privacy Settings in Mobile Apps.” 2019 IEEE Symposium on Security and Privacy (SP), 2019, pp. 570–586, doi:10.1109/SP.2019.00054.

Cukier, Kenneth Neil, and Viktor Mayer-Schoenberger. “The Rise of Big Data.” Foreign Affairs, Foreign Affairs Magazine, 17 Sept. 2019, https://www.foreignaffairs.com/articles/2013-04-03/rise-big-data.

de Kerckhove, Derrick, and Cristina Miranda de Almeida. “What Is a Digital Persona?” Technoetic Arts: A Journal of Speculative Research, vol. 11, no. 3, Dec. 2013, pp. 277–87. EBSCOhost, doi:10.1386/tear.11.3.277_1.

Derrida, Jacques, and R. Klein. “Economimesis.” Diacritics, vol. 11, no. 2, 1981, pp. 3–25. JSTOR, www.jstor.org/stable/464726.

Derrida, Jacques. “The Ends of Man.” Philosophy and Phenomenological Research, vol. 30, no. 1, 1969, pp. 31–57. JSTOR, www.jstor.org/stable/2105919.

“Digital Public Spaces.” Future Everything, 24 Jan. 2019, https://futureeverything.org/news/digital-public-spaces/.

Hosking, David. “Art Let Loose: Autonomous Procedures in Art-Making.” Leonardo, vol. 52, no. 5, Oct. 2019, pp. 442–47. DOI.org (Crossref), doi:10.1162/leon_a_01476.

Henriksen, E. E. “Big Data, Microtargeting, and Governmentality in Cyber-Times. The Case of the Facebook-Cambridge Analytica Data Scandal.” No date, p. 151.

Kafer, Gary. “Reimagining Resistance: Performing Transparency and Anonymity in Surveillance Art.” Surveillance & Society, vol. 14, no. 2, Sept. 2016, pp. 227–39. DOI.org (Crossref), doi:10.24908/ss.v14i2.6005.

Monahan, Torin. “Ways of Being Seen: Surveillance Art and the Interpellation of Viewing Subjects.” Cultural Studies, vol. 32, no. 4, July 2018, pp. 560–81. DOI.org (Crossref), doi:10.1080/09502386.2017.1374424.

Mustill, Edd. Understanding How Open Government Data Is Used in Capital Accumulation: Towards a Theoretical Framework.

Steyerl, Hito. “Digital Debris: Spam and Scam.” October, vol. 138, Oct. 2011, pp. 70–80. DOI.org (Crossref), doi:10.1162/OCTO_a_00067.

Spinoza, Benedictus de, and R. H. M. Elwes. Ethics: Including, the Improvement of the Understanding. Prometheus Books, 1989.

van de Ven, Inge. “The Monumental Knausgård: Big Data, Quantified Self, and Proust for the Facebook Generation.” Narrative, vol. 26, no. 3, 2018, pp. 320–38. DOI.org (Crossref), doi:10.1353/nar.2018.0016.