Sonic Flux

Sonic Flux is a generative music installation in the form of an Android app that tracks your location. As the user moves around, they explore a surreal musical take on the Aylesbury Estate, where sounds have been arranged spatially within an area of around 1.1 square km. Rhythmic elements of the music are generative and detect and synchronize with the user's walking pace. The user can breed and choose their own rhythms and arrange them spatially by pinning them down to specific locations.

You can download the app here.

produced by: Sam N

Concept

The main inspiration for this project was a book by Christoph Cox entitled Sonic Flux that explores the idea of sound as "a continuous flow to which human expressions contribute but which precedes and exceeds those expressions". The initial idea was to replicate this "continuous flow" with an app that would make music continuously and independently, being entirely determined by location, time, weather, etc. In other words, the aim was to create the impression that the app has its own inner life, going on regardless of whether you are using it or not. However, over time the project took the other direction suggested by Cox's narrative, centered on the "contribution of human expressions" to this continuous flow. The idea was then to have a large part of the music be dictated by the user's interaction: the rhythmic elements of the music would be dependent on the user, while the textural and harmonic sounds would still be organized spatially and independently in order to mirror the ideas put forward in Sonic Flux.

The more ambitious idea I have for this project is for it to be intrinsically participatory - based on the musical content and interactions between users. Apart from the users being able to record within the app and use their own sounds, the idea here would be for users to be able to listen to any music pinned to their current location by any other users in the past, creating a much larger conglomerate of spatialized musical ideas - meaning you could go on a walk and listen to what others have pinned down to your present location. But for now, this is obviously far beyond the scope of this project.

Aesthetic

The surreal aesthetic of the music settled after a long period of pondering over the possible musical analogs of "one of the most notorious estates in the United Kingdom". While the Aylesbury Estate is famed for its embodiment of urban decay, over my 5 years living here I have developed a deep attachment to it, from its architecture to its residents and local businesses. This disparity between its public image as a 'ghost town' and my experience of it eventually became fertile ground for a surreal aesthetic, where playful and eccentric rhythms akin to the experience of living inside it contrast with eerie and uncanny harmonic textures representing the outsider's perception of it as "a desolate concrete dystopia".

Technical

Pace detection

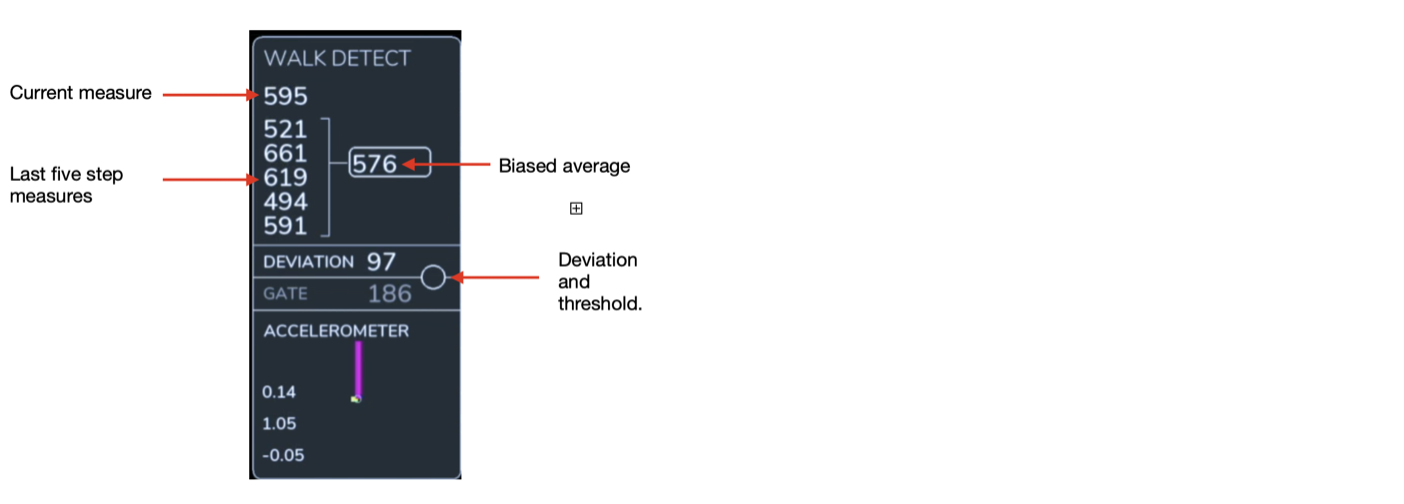

The app uses accelerometer values to detect walking pace. While the code relies on fairly basic principles, it was challenging to come up with a pedometer reliable enough for musical purposes. The x, y, and z values are first smoothed with a class I made for term 1's project. The three values are then added together to get a single measure of combined movement. I tried a few different ways of doing this to stop opposite changes canceling each other out; counterintuitively, what did the job was scaling each value differently.

The combined stream is fed into a vector, and at every point where the second value in the vector is higher or lower than both the first and third values, a high or low peak is registered. When the difference between the low and high peaks is larger than a predefined threshold, a "step" is detected. A separate thread runs an ofTimer that measures the milliseconds between low peaks. As long as the measured time is over 300ms, the timer is reset at each low peak, regardless of whether that peak is considered a step. This keeps the time measures between real steps accurate even if a step is missed, and stops the ofTimer from counting away when the phone is still. The 300ms threshold makes sure quick movements are not registered as steps (and no one will run that fast either).

Each detected step's time measure is then fed into a vector, where the last 5 values are averaged with a bias towards the most recent steps (the most recent measure is weighted at 166%, as opposed to 33% for the oldest one). This bias makes sure pace detection is quick to respond to changes in walking rhythm. The differences between time measures are used to get a combined deviation value. I found that the timings of left and right steps often differed, so the differences are calculated between even and odd values separately.

This deviation value is then compared with a moving threshold (the "gate" in the app) equal to a third of the non-biased average. The moving threshold is also smoothed to leave some headroom for the odd offbeat measure. When the deviation value is lower than the threshold, the app assumes you are walking (or running) and tells the beat to do its thing, sending the calculated BPM along.
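The pipeline described above can be illustrated with a rough sketch. It is written in Python for readability and is not the app's openFrameworks code. Several details are assumptions: the names `StepDetector`, `weighted_interval`, `deviation` and `is_walking` are hypothetical, the peak threshold value is a placeholder, the weights between the stated 33% and 166% are assumed to ramp linearly, the deviation is assumed to be a sum of absolute same-foot differences, and the smoothing of the input and of the gate is left out.

```python
MIN_STEP_MS = 300                      # from the text: shorter gaps are ignored
PEAK_THRESHOLD = 1.0                   # placeholder; the text only says "predefined"
WEIGHTS = [1/3, 2/3, 1.0, 4/3, 5/3]    # oldest -> newest; linear ramp is assumed


class StepDetector:
    """Three-sample peak detector fed with the smoothed, combined signal."""

    def __init__(self, threshold=PEAK_THRESHOLD, min_step_ms=MIN_STEP_MS):
        self.threshold = threshold
        self.min_step_ms = min_step_ms
        self.window = []            # last three combined values
        self.last_high = None       # value of the most recent high peak
        self.last_low_time = None   # when the timer was last reset
        self.intervals = []         # ms between detected steps

    def feed(self, t_ms, value):
        """Add one sample; return True if it completes a step."""
        self.window = (self.window + [value])[-3:]
        if len(self.window) < 3:
            return False
        a, b, c = self.window
        if b > a and b > c:                 # high peak
            self.last_high = b
            return False
        if not (b < a and b < c):           # neither high nor low peak
            return False
        if self.last_low_time is None:      # first low peak starts the timer
            self.last_low_time = t_ms
            return False
        elapsed = t_ms - self.last_low_time
        if elapsed <= self.min_step_ms:     # too quick: keep the timer running
            return False
        self.last_low_time = t_ms           # reset, whether or not it is a step
        is_step = (self.last_high is not None
                   and self.last_high - b > self.threshold)
        if is_step:
            self.intervals.append(elapsed)
        return is_step


def weighted_interval(intervals):
    """Average of the last five intervals, biased towards the newest."""
    recent = intervals[-5:]
    weights = WEIGHTS[-len(recent):]
    return sum(w * v for w, v in zip(weights, recent)) / sum(weights)


def deviation(intervals):
    """Sum of differences between same-foot intervals (two positions apart)."""
    recent = intervals[-5:]
    return sum(abs(recent[i] - recent[i - 2]) for i in range(2, len(recent)))


def is_walking(intervals):
    """Compare the deviation with a gate of one third of the plain average."""
    recent = intervals[-5:]
    if len(recent) < 5:
        return False
    gate = (sum(recent) / len(recent)) / 3
    return deviation(recent) < gate


def bpm_from_interval(interval_ms):
    """One beat per step: 60000 ms per minute divided by ms per step."""
    return 60000.0 / interval_ms
```

With these assumed weights, four steps at 600ms followed by one at 300ms average to 500ms rather than the plain mean of 540ms, which shows how the bias pulls the tempo towards the latest step.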

Getting the GPS to work within the app was incredibly frustrating, and it took a great deal of time to get familiar with events and listeners. Luckily this ended up being useful, as I would be using them for tempo coding as well.

The music

Getting a decent sense of rhythmic accuracy from the phone also proved very tedious, as what worked on my computer wouldn't work on my phone. One of my main issues was not knowing what the problem was in the first place, so I very often found myself fixing the wrong things, obviously to no effect. After using ofTimer to get precise tempo and still getting inconsistent beats, I finally realized the common sound players I was using for testing weren't designed for precise timing, as they weren't writing directly into the sound stream. It then took many failed attempts at different strategies to find the right coding framework and library for the music. Android is notoriously bad in terms of audio latency, so I went as far as trying libraries outside of openFrameworks designed specifically for Android sound, but that was going to take too long. Thankfully I found ofxPDSP (ofxMaxim would not work for Android). The latency was still there, but it was manageable. I also went back to ofxBPM, a simple metronome addon, after wrongly deeming it imprecise initially.

While the music includes a few oscillators (sub-bass, white noise), it is largely sample-based. This was important to me not only to keep CPU use at a minimum, but more importantly because I wanted to be able to add more samples at any point, and I also want other potential users to be able to put in their own sounds. There is a total of 358 pre-loaded samples at the moment, roughly categorized by their best-suited role within the rhythm (e.g. kick, hat, etc.), with around 14 samplers that can play them simultaneously. Most of these I have created in MaxMSP. Sounds from each category are indexed in terms of similarity. This is important because the samplers rely on randomness for the choice of sample for every triggered sound. The scope of this randomness therefore determines the range in timbre for each given sampler for every seed, and slight changes in timbre are important to get organic-sounding rhythms. There are also five granular synthesizers, two of which are controlled by the user's location and cover non-rhythmic sounds in the music. The use of granular synthesizers was important as it allowed for a great deal of sonic variability, opening up a myriad of different timbres from the same original sample. Granular synthesis was also crucial in order to play reversed samples so that their transients are synchronized with the beat (for a normal sampler, the starting point would have to be different for each tempo). The signal chain also includes a couple of delay lines, a few filters, one reverb line, two compressors affecting most tracks, and a "ducker" (fake sidechain). Their job is to get a good-sounding mix out of the chaos coming into them. I also initially included sample-based convolution reverb, but I had to ditch it as my phone didn't handle it too well, which was sad as the idea was to convolve sounds with each other as well as to use it for reverberance.
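To make the role of the similarity index concrete, here is a minimal Python sketch of a sampler choosing a sample at random within a limited range of a similarity-ordered bank. The function name `pick_sample` and the parameters `centre` and `spread` are hypothetical, and the app's actual selection logic may differ; the only thing taken from the text is that neighbouring indices sound similar and that the scope of the randomness sets the range in timbre.

```python
import random


def pick_sample(bank, centre, spread, rng):
    """Pick a sample at most `spread` positions away from index `centre`.

    `bank` is assumed to be ordered by similarity, so a small `spread`
    gives subtle timbre changes and a large one gives wide variation.
    """
    lo = max(0, centre - spread)
    hi = min(len(bank) - 1, centre + spread)
    return bank[rng.randint(lo, hi)]   # randint includes both ends


# Hypothetical bank of kick samples, already sorted by similarity.
kicks = [f"kick_{i:02d}" for i in range(20)]
rng = random.Random(0)
hit = pick_sample(kicks, centre=10, spread=2, rng=rng)   # one of kick_08..kick_12
```

In this sketch a seed would only need to store `centre` and `spread` for each sampler, which fits the idea of keeping genes as small integers.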

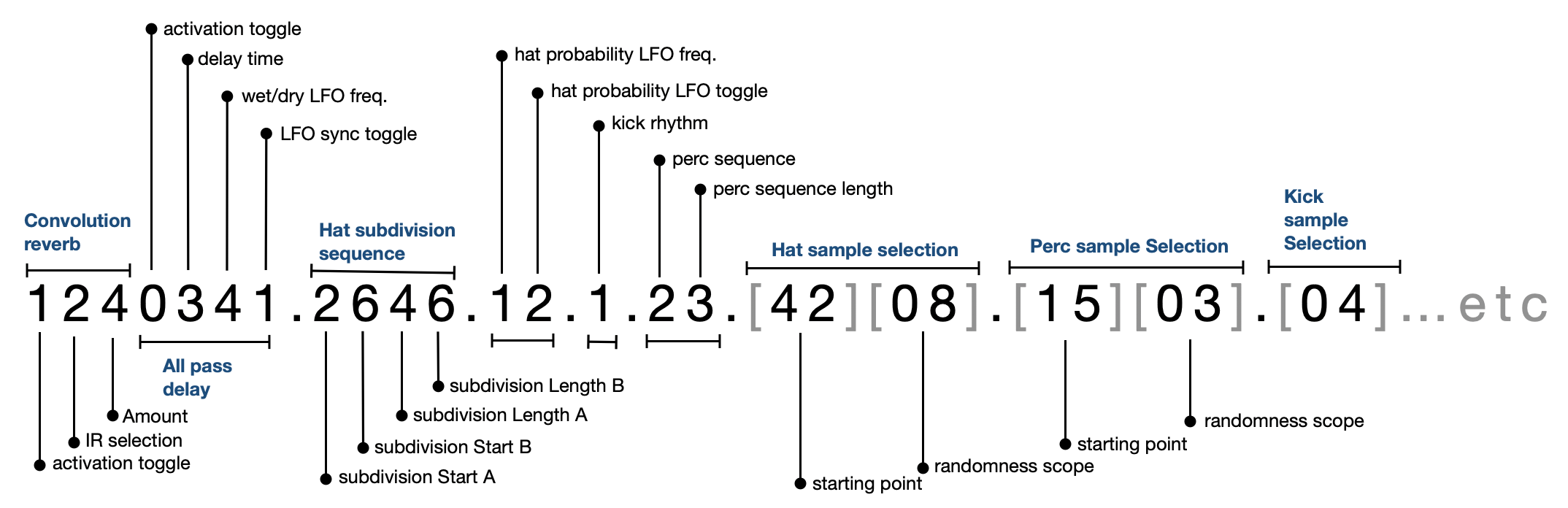

The seed for each rhythm is a series of integers that represent different settings of all the above, as well as each sound's pitch, rhythm, frequency, sequence length, subdivision, entry point, activation, etc. A couple of LFOs affecting trigger probability spice things up even further. I was weirdly fixated on keeping all genes as single-digit integers, but it wasn't always possible. The seed currently holds 63 values, but I feel like it either needs more, or I need to make better use of the current ones.
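The write-up does not spell out how two seeds are bred, so the following Python sketch only shows one common genetic-algorithm approach: uniform crossover with a small mutation chance. The function names, the mutation rate, and the assumption that every gene is a single digit from 0 to 9 are illustrative choices, not the app's actual scheme.

```python
import random

SEED_LENGTH = 63   # from the write-up
GENE_MAX = 9       # assumes single-digit genes; the app has some exceptions


def random_seed(rng):
    """Create a seed of random single-digit genes."""
    return [rng.randint(0, GENE_MAX) for _ in range(SEED_LENGTH)]


def breed(parent_a, parent_b, rng, mutation_rate=0.05):
    """Uniform crossover: each gene comes from either parent, then may mutate."""
    if len(parent_a) != len(parent_b):
        raise ValueError("parents must have the same number of genes")
    child = []
    for gene_a, gene_b in zip(parent_a, parent_b):
        gene = gene_a if rng.random() < 0.5 else gene_b
        if rng.random() < mutation_rate:
            gene = rng.randint(0, GENE_MAX)   # replace with a fresh random gene
        child.append(gene)
    return child


rng = random.Random(42)
child = breed(random_seed(rng), random_seed(rng), rng)
```

Checking the parents before breeding, as the length test does here, is also one way to avoid acting on an empty or missing seed.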

Lastly, the latency problem weirdly solved itself in the end. It is honestly hard for me to tell when using the app if there is any latency at all, as the beat syncs perfectly after a few seconds. I believe we probably unconsciously use the latency in our favour so that each step falls on the next beat. Whatever the reason, the music interaction feels very responsive and tight, and it doesn't feel like it's me following the beat but the beat following me. We always stay together when I speed up or slow down, as long as the change is not drastic.

Self-reflection

I am generally pretty pleased with how it turned out in the end. The whole creative process was full of frustration and challenges, but the moments when things finally worked made it feel worthwhile. My first walk with a synced rhythm felt ecstatic.

I was completely amazed at how well my phone could manage all the DSP going on within the app. Although I did have to ditch the convolution, I couldn't believe I was getting away with using 5 granular synthesizers, on top of all the other sounds, effects and constantly changing bus routings. My phone is a pretty old and cheap Xiaomi, so I can't begin to imagine what a powerful phone could manage.

However, there are some issues that need fixing. One of them is the GPS not updating at a fast enough rate compared to other apps on my phone, even though I am requesting "fine location access" and setting all the right things within my phone. It can go on for a straight minute without an update despite me moving continuously. The sense of moving within the music can therefore currently feel jumpy. Another thing I am not too happy with is the variability of the generated rhythms, which is decent but not good enough considering all the samples and genes involved. I have found that when changing a couple of more general settings within the rhythmic generation, I can get drastically different results, so I think I would probably have to add some genes with a more general effect. I will probably have to manage probability a bit better as well, as some rhythmic sequences I was expecting happen only very rarely. More sounds will always help as well. Rhythmic samples are usually very light, so I can imagine getting away with loading thousands of them.

There are also some minor easy fixes and upgrades I should add for the app to reach its full potential. Most of these were not prioritized for the submission as the app would still be usable, so I simply did not have the time to fix them. An annoying initial issue is the loading time the app spends on a white screen before finally setting up. I have not timed this but it can probably get up to around 15 seconds. I know this is the time it spends loading the samples, so my understanding is that the easy fix is to load the samples on a separate thread. Also, there is currently no way to delete saved or pinned seeds within the app, which should be a pretty basic function. (For now I have been doing this by rewriting the txt files within the code.) The app may also crash if you press the wrong button at the wrong time due to bad access errors related to the seed vectors (for example if you press 'BREED' before saving a seed). In retrospect, most of these issues could have been fixed in no time, so it's possible I got my priorities wrong.
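As an illustration of the separate-thread fix mentioned above, here is a minimal Python sketch of loading samples in the background while the interface stays responsive. The class name `SampleLoader`, its methods, and the `load_fn` callback are all hypothetical; in the actual app this would be done with openFrameworks' own threading facilities, which this sketch does not attempt to reproduce.

```python
import threading


class SampleLoader:
    """Loads samples on a worker thread so setup does not block the screen."""

    def __init__(self, paths, load_fn):
        self.paths = list(paths)
        self.load_fn = load_fn      # hypothetical function: path -> audio data
        self.samples = {}
        self._lock = threading.Lock()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def start(self):
        """Begin loading in the background and return immediately."""
        self._thread.start()

    def _run(self):
        for path in self.paths:
            data = self.load_fn(path)
            with self._lock:        # protect the dict shared with the UI thread
                self.samples[path] = data

    def progress(self):
        """Fraction of samples loaded so far, usable for a loading bar."""
        with self._lock:
            return len(self.samples) / len(self.paths) if self.paths else 1.0

    def wait(self, timeout=None):
        """Block until loading ends or `timeout` seconds pass; True if done."""
        self._thread.join(timeout)
        return not self._thread.is_alive()
```

The draw loop could then show `progress()` instead of a white screen, and only enable the rhythm controls once loading has finished.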

Lastly, I should definitely have gotten hold of a decent-looking phone (without a smashed screen) for the example video. (I tried using my partner's phone, but the screen resolution was different and some parts of the GUI were slightly out of place. I have used width/height proportions as measures for most of the GUI, so the app should look roughly the same on any phone, but I did use some pixel values for adjustments.)

References

ofxPDSP examples

https://www.youtube.com/watch?v=cpwrwPTqMac. This video gave me some direction for the step detection

The breeding technique was inspired by Week 14's lecture