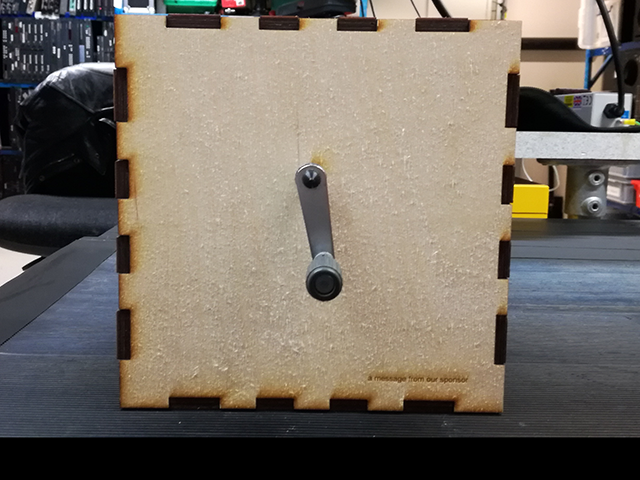

a message from our sponsor

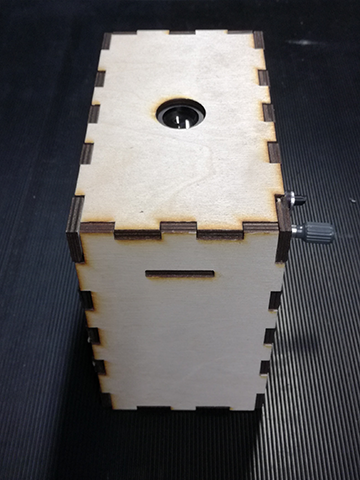

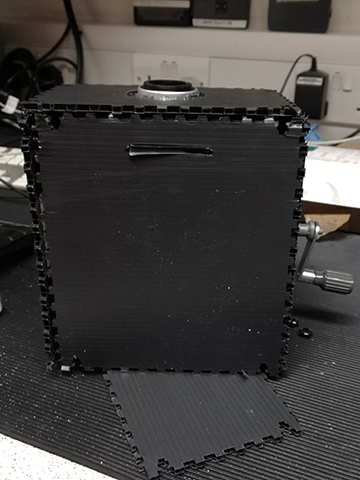

A version of a magic lantern, an apparatus dating back a few hundred years, where the viewer is invited to peer through a looking glass to witness fantastical images and possibly receive inspiration from higher realms.

produced by: richard moores

Introduction

This project was motivated by a fascination with the image in a broad sense - in the relationship between an image as an internal subjective phenomenon in the 'mind's eye' or 'imagination' and as an external object in the visual field. Equally, it could be the result of several years spent working as a projectionist.

Concept and background research

The precise origins of the magic lantern are obscure but go back as far as the Middle Ages [1]. Magic lanterns mark an early stage in the development and understanding of the optical sciences, which was soon to produce myriad optical devices, and they are a precursor to the invention of photography, moving film projectors and ultimately the VR and AR technologies which seem about to become part of everyday experience.

The image, together with light as its cause and shadow as its effect, has provided a framework for philosophical and religious thought for centuries, going back at least to Plato's allegory of the cave. The concept of the mind's eye and the allegorical and moral significance of light and shadow run so deeply through our culture that I thought it would be an interesting area of research.

I am also interested in how the technological history of image science runs alongside changes in our understanding of what an image is - the notion of the mental image as the primary sign of intellect seems to have been overtaken by the external image, and the notion of the fantastic or magical intervention by the digital. I wanted to play around with the idea of the internal and external nature of the image with this box.

Technical

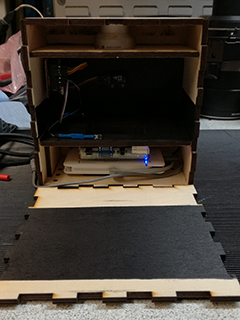

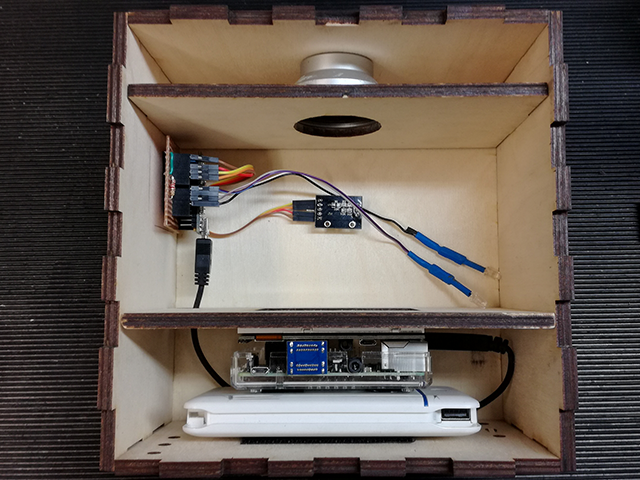

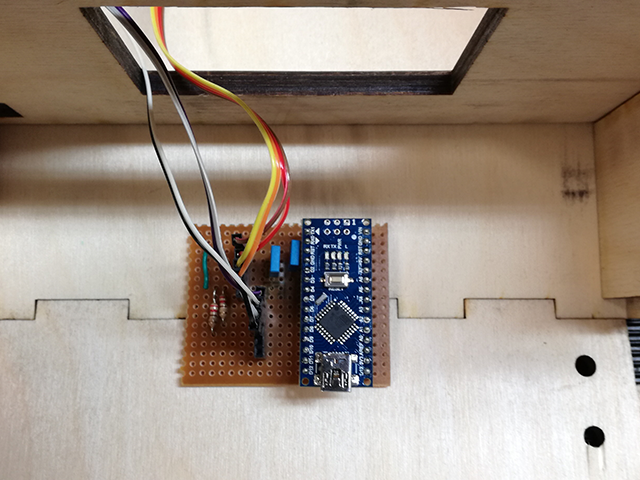

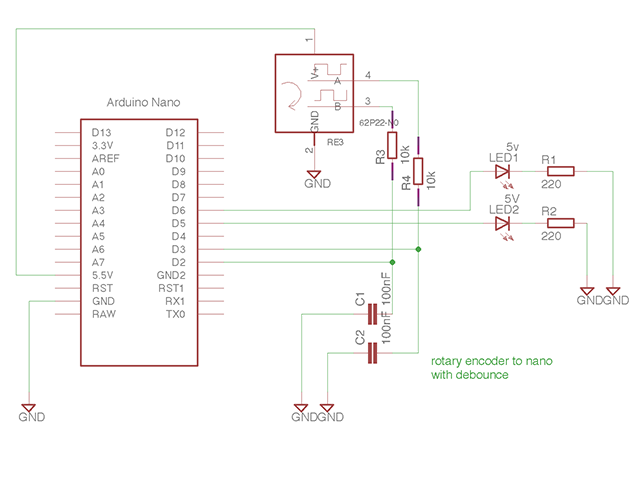

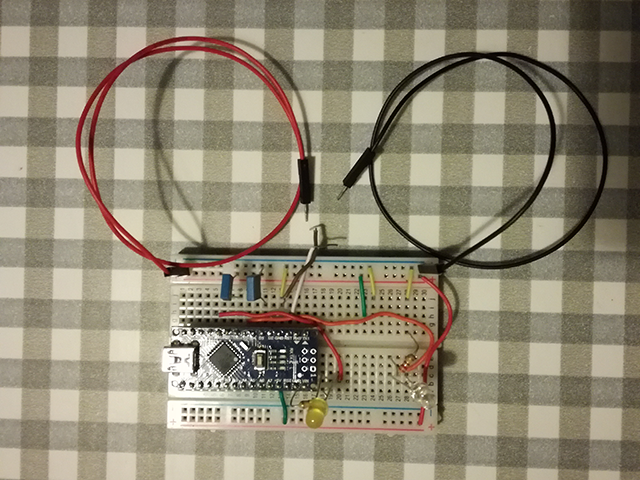

The set-up uses an Arduino Nano copy, a Keyes 040 Rotary encoder and a Raspberry Pi running openFrameworks with an LCD screen for the video playback.

Two LEDs to try and create a kind of projector flicker effect.

A couple of capacitors to debounce the encoder switches.

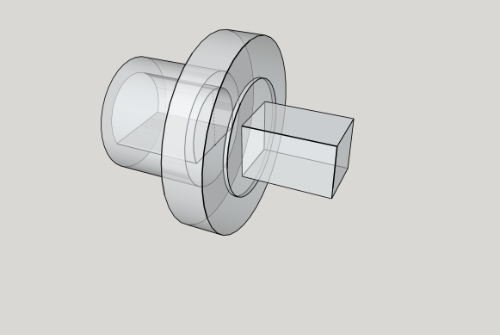

And a crank handle from an old 8mm film viewer, for hand cranking.

I wanted to keep the input from the encoder separate from the video playback system. While I think it would have been possible to connect the switch directly to the Pi, I wanted the system to be really responsive, and I thought achieving this wholly inside openFrameworks might be tricky and involve a separate thread to listen for events.

Thinking about speed led me to wonder how a similar principle might apply to single-threaded microcontrollers, and I came across the idea of interrupts on the Arduino forum [2]. The Nano code attaches an interrupt routine to one of the switches on the encoder, which means the function is called immediately, whatever else the Nano is doing. This is important as the direction the handle is turning is given by the state of the second encoder switch at the moment the first switch is activated. There are many, many versions of the code for this, and in the end I wrote a very simple one which seemed good enough for my application.
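The direction logic described above can be sketched in plain C++. This is a minimal model rather than the sketch used in the project: `EncoderState`, `onSwitchAEdge` and the sign convention (second switch high means forward) are assumptions made for illustration. On the Nano, a handler like this would typically be registered with `attachInterrupt(digitalPinToInterrupt(pin), handler, FALLING)` and would sample the second switch with `digitalRead`.

```cpp
#include <cstdint>

// Hypothetical model of the encoder logic; names and sign convention are assumptions.
struct EncoderState {
    // volatile because an interrupt routine changes it behind the main loop's back
    volatile int32_t position = 0;  // running count, later sent on as a frame number
};

// Body of the interrupt routine, called on an edge of the first switch (A).
// switchBHigh is the level of the second switch (B) sampled at that moment.
inline void onSwitchAEdge(EncoderState& enc, bool switchBHigh) {
    if (switchBHigh) {
        enc.position = enc.position + 1;  // assumed: B high means the handle turns forwards
    } else {
        enc.position = enc.position - 1;  // B low means it turns backwards
    }
}
```

Keeping the routine this short matters on a microcontroller, since the main loop is paused for as long as the interrupt runs.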

For the flickering effect I used a bit-shift operator (>>) to create a kind of fade, with one LED offset from the other.
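One way a bit-shift fade with an offset between two LEDs might look is sketched below. The function name `levelAt`, the eight-step cycle and the offset of four steps are assumptions, not values taken from the project code. Each right shift halves the brightness, so the fade falls away quickly at first and then tails off.

```cpp
#include <cstdint>

// Hypothetical fade: brightness is 255 shifted right by 0..7 places,
// giving the repeating sequence 255, 127, 63, 31, 15, 7, 3, 1.
// 'offset' moves one LED along the cycle relative to the other.
inline uint8_t levelAt(unsigned tick, unsigned offset) {
    return static_cast<uint8_t>(255u >> ((tick + offset) % 8u));
}
```

On the Arduino the two values, for example `levelAt(tick, 0)` and `levelAt(tick, 4)`, would be written to two PWM pins with `analogWrite`.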

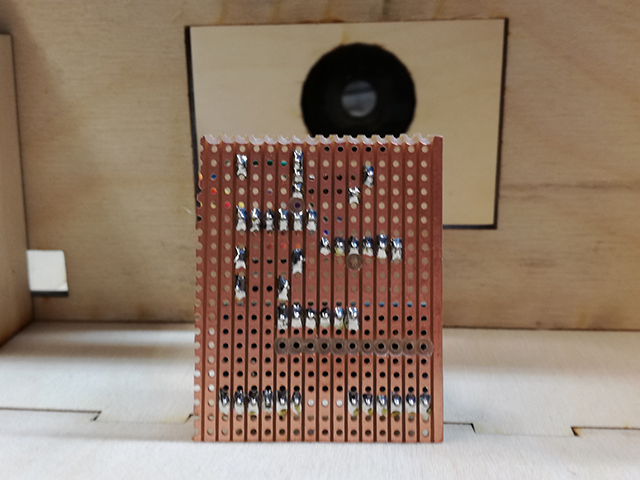

The switch was 'bouncing', however - giving multiple readings for each click, often in opposing directions, causing the images to jump about and stutter. The two capacitors between the switches and ground (in series with the 10k resistors in the switch) were really effective at controlling this. The debouncing of switches is a slightly contentious subject [3] and this method isn't without its critics, as there is the potential to generate large currents through the switch. Most of the circuits I saw also included a hex-inverter Schmitt trigger, which adds hysteresis to the logic, preventing the voltage from staying in the ambiguous crossover range between 1.5 and 3.5 V. I found the circuit worked better (the frame numbers scrolled up and down more smoothly) without this addition, though perhaps this was down to incorrect use of it.
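The effect of hysteresis can be illustrated with a small software model. This is only a sketch: it reuses the 1.5 V and 3.5 V figures above as switching thresholds for illustration, whereas a real Schmitt trigger has its own datasheet thresholds, and a hex-inverter part also inverts its output, which this hypothetical `SchmittTrigger` struct does not.

```cpp
// Hypothetical, non-inverting software model of a Schmitt trigger.
struct SchmittTrigger {
    double lowThreshold;   // at or below this voltage the output goes low
    double highThreshold;  // at or above this voltage the output goes high
    bool output;           // last decided logic level

    bool update(double volts) {
        if (volts >= highThreshold) {
            output = true;
        } else if (volts <= lowThreshold) {
            output = false;
        }
        // Between the two thresholds the previous output is held,
        // so a slowly changing or noisy input cannot chatter.
        return output;
    }
};
```

With `SchmittTrigger s{1.5, 3.5, false}`, an input of 2.5 V reads low on the way up and high on the way down, which is the behaviour that removes the ambiguous range.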

The Nano sends values to the Pi over USB serial, which the Pi interprets as frame numbers. Pis are great at video playback provided the hardware acceleration on the graphics chip is used. They come with an example of how to access the OpenMAX pipeline in C, but this is way beyond me. I decided the best way to get good performance was to load the images into a vector of textures, which I understood from Dennis Perevalov's book [4] would use the video RAM directly, avoiding the costly processing on the CPU. It also suited the nature of the thing as a kind of flipbook, with separate images rather than video. (I knew the Pi could play back H.264 video extremely well, but the H.264 codec isn't designed for playing backwards.)
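The step from serial bytes to an index into the vector of textures might look like the sketch below. The wire format is not specified above, so this assumes each value arrives as ASCII digits (optionally with a leading minus sign) terminated by a newline. `FrameLineParser` and `wrapFrame` are hypothetical names, and wrapping around at the ends, rather than stopping, is also an assumption.

```cpp
#include <cstddef>
#include <exception>
#include <string>

// Hypothetical helper: collects bytes until a newline, then parses the line as a number.
class FrameLineParser {
public:
    // Feed one received byte. Returns true and sets 'value' once a full line has arrived.
    bool feed(char c, long& value) {
        if (c == '\r') return false;            // ignore carriage returns
        if (c != '\n') { buffer_ += c; return false; }
        std::string line;
        line.swap(buffer_);                      // take the line and clear the buffer
        if (line.empty()) return false;
        try {
            value = std::stol(line);
            return true;
        } catch (const std::exception&) {
            return false;                        // not a number: drop the line
        }
    }

private:
    std::string buffer_;
};

// Map any count, including negative ones from cranking backwards,
// onto a valid index in the range 0 .. frameCount - 1.
inline std::size_t wrapFrame(long value, std::size_t frameCount) {
    if (frameCount == 0) return 0;
    long n = static_cast<long>(frameCount);
    long r = value % n;
    if (r < 0) r += n;
    return static_cast<std::size_t>(r);
}
```

In an openFrameworks app, the index returned by `wrapFrame` would select which texture in the vector to draw on that frame.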

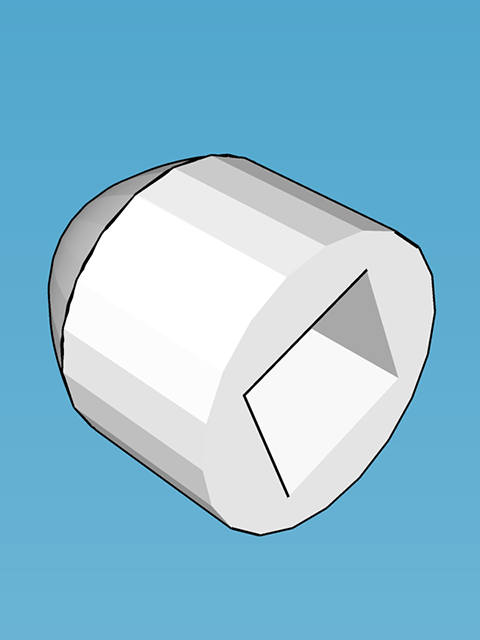

I 3D printed a connector to join the handle to the encoder stem and a cap to keep it in place (modelled in Tinkercad and SketchUp).

The schematic was made with Eagle.

Future development

I would like to experiment with different content. My original idea was for imagery of a dancer, but I decided it might be a little twee. I would like to get away from the flat surface of a screen, perhaps using projection onto some semi-translucent material to give a three-dimensional effect, but still in a confined space.

Strobe lights were another possibility I considered - I would at some point like to work with them as a way of changing the apparent phase of a moving object, reversing its direction and so on.

The handle seemed appropriate for this version, but it could be interesting to experiment with touch as a way of modifying the content.

Self evaluation

On a superficial level, as a visual joke, I think this project worked fairly well. I would have liked to make a better flicker effect, possibly using strobes, as the LEDs were difficult to place without being too dim or blocking the view.

I felt the relationship between the viewer and image was too direct - I would have liked to add some element of distortion or some other effect to distance the object. In this regard, the piece isn't unsettling enough and doesn't invoke a sense of mystery to the extent I was originally aiming for.

References

1. Marina Warner, Phantasmagoria (Oxford, 2006)

2. https://playground.arduino.cc/Code/Interrupts

3. https://hackaday.com/2015/12/09/embed-with-elliot-debounce-your-noisy-buttons-part-i/

4. Dennis Perevalov, Mastering openFrameworks: Creative Coding Demystified