WE DO NOT SEE UNTIL WE SEE

The flow is not perceived clearly until we see ourselves under its influence.

Produced by: Bingxin Feng

Demonstration video (Videographer/Editor: Bingxin Feng, Music: Prototype 02 (2000) by Alva Noto)

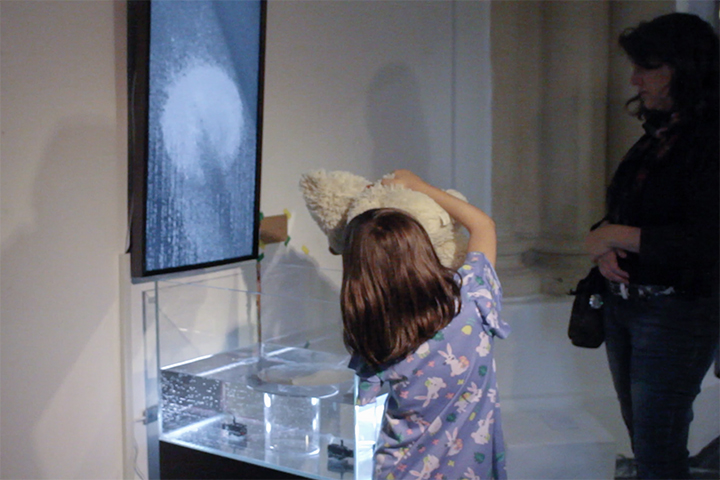

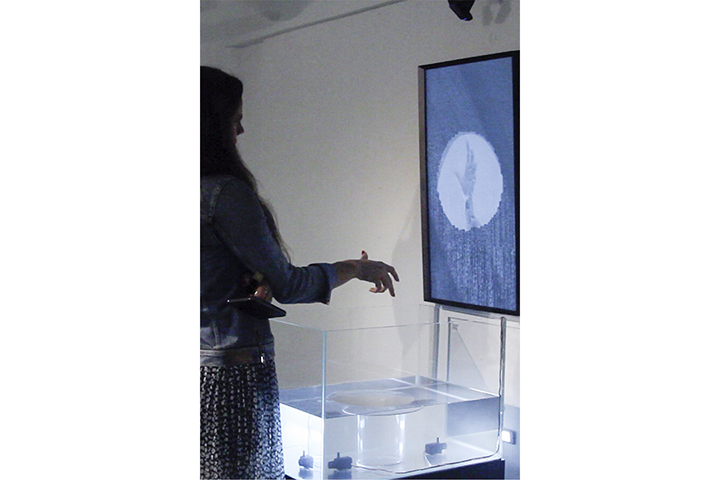

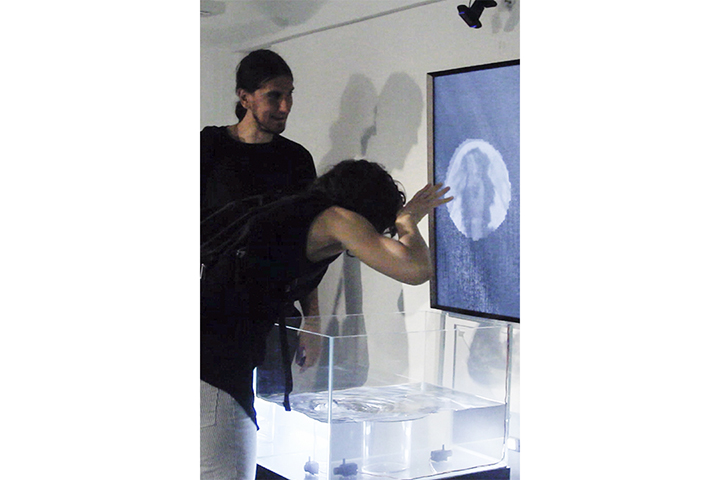

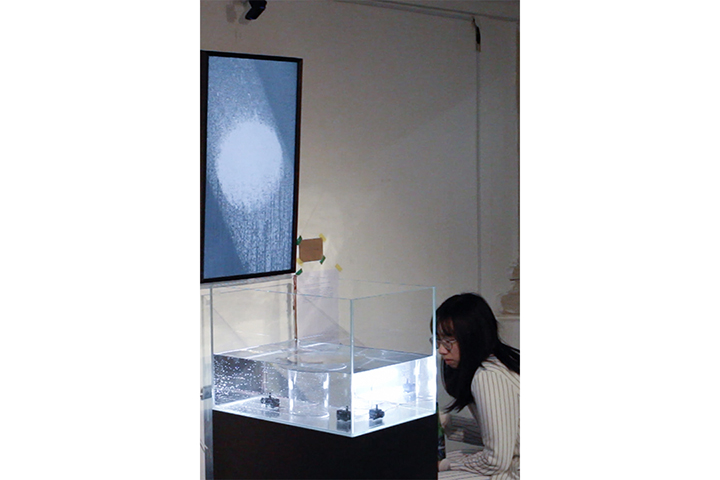

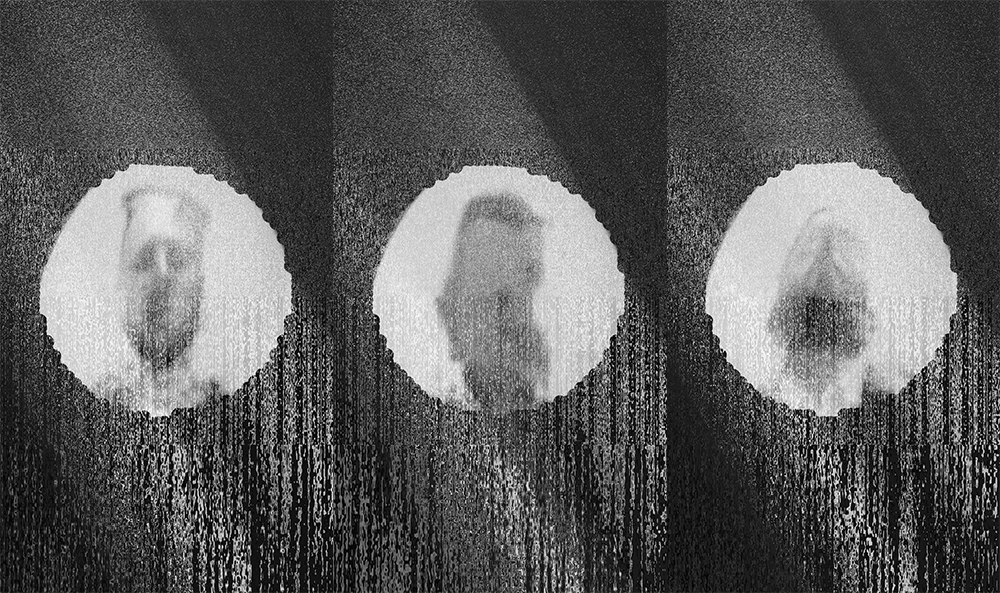

Selected photos from the exhibition

Selected screenshots from the exhibition

Introduction

WE DO NOT SEE UNTIL WE SEE (2019) is an interactive physical computing and video installation, an abstraction of how we perceive the matters around us. Pumps underneath generate minor waves and flows, so the water in the tank never stays still. Without attention, however, the waves seem subtle, even too minor to notice. When a viewer comes forward to look into the water, they see themselves in the mirror at the centre of the tank. While the viewer observes their reflection, the flow becomes more intense, and they see their self-image being disturbed by it. That is the moment when the viewer perceives the flow most clearly and strongly. If the viewer stops gazing at the reflection and leaves, the waves die down, back to the subtle state that is hard for those nearby to notice.

The image on the screen is a digital reflection of the water. While someone is gazing into the water, their reflection in the mirror emerges from the centre of the screen. This creates a collective experience: the other viewers in the surrounding space also perceive the wave, through the gazing viewer’s reflection on the screen. However, one cannot see both the physical and the digital self-reflection at the same time. The digital image is generated from the viewer’s interaction with the physical water, so when the viewer chooses to give more attention to the digital image, they lose it.

Concept and background

- Self image, Mirror, Camera

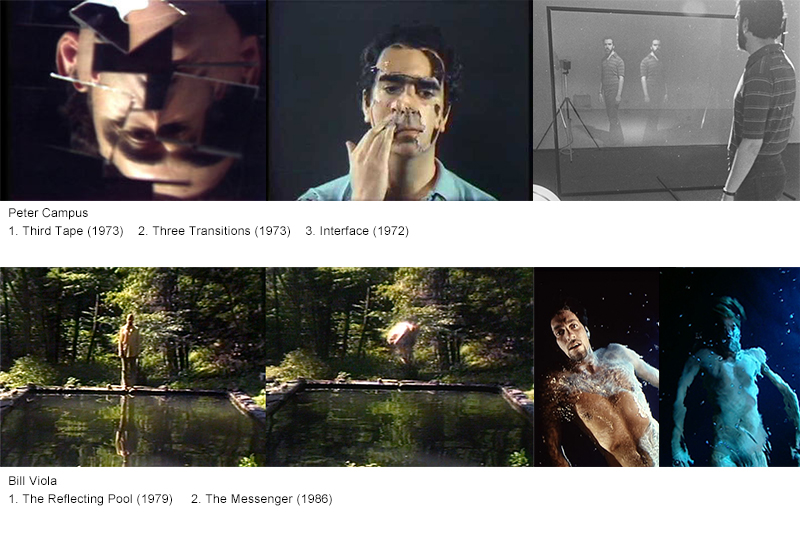

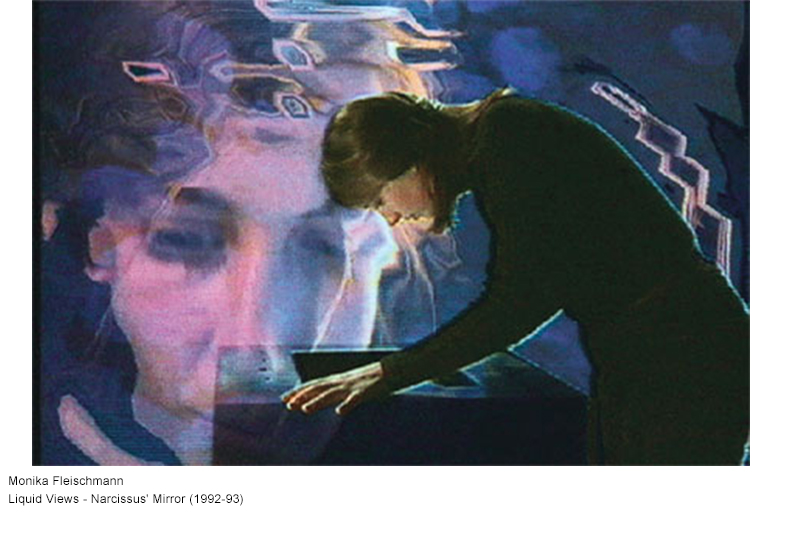

As a video artist with a research interest in how audiences engage with their viewing experience, I have made self-image, mirror, and camera the main research objects of my recent projects. Peter Campus and Bill Viola are the artists who have influenced me most in this realm. With simple video editing such as green screen, with reflections in mirror fragments, or just with a coloured background, Peter Campus made many videos of himself, and he was good at using very simple behaviour (performance/interaction) to make us think about what the self/ego is, and what the relation is between self and other, or between self and surroundings. Bill Viola’s videos were not of himself, but they were also about self-reflection. Water, reflection, and distortion are some of his favourite materials, or rather symbols, in his work. Today there are many “new media artworks” that use camera and self-image without a clear intention (more as a trend, or for fun). From the works of those early video art pioneers, however, I gained a deeper understanding of reflection and self-image in an artwork, and I was inspired to ask: What does it mean to see one’s own reflection? What is the difference between me and my reflection? Can I really see myself? Do I think about my ego or about the other when I see my reflection? What do we think while seeing another person’s reflection?

- Matter, Phenomenon, Experience

Olafur Eliasson is another significant artist who inspired my project. His works inspired me to use physical, natural material (like water) and shape it into a simple and pleasing aesthetic form, where the form itself invites people to experience and perceive it. His installations are like ineffable phenomena: the audience doesn’t need any instructions, guide, or tools. They just need to see the pieces, and they are drawn to them, step into them, and even become part of them. The audience is welcome simply to have fun, or to reflect on deeper thoughts. The material, the form, the design, the audience experience, the meanings delivered: I find it all greatly poetic, and that is what I would like to create in this project and in future ones.

Some people saw the immersiveness (engagement) of the dematerialized experience as an excuse for utopian escapism...But I was, on the contrary, hoping that dematerialization would actually allow you to evaluate a certain set of questions that would otherwise be hard to raise.

...I became very curious about how we might introduce the notion that the person perceiving is not a consumer passively taking in something but, in fact, a producer.

... there was a very strong sense of collective motion a lot of people being physically active in a very non-hierarchical way...I had not foreseen all of them, but the opportunity was still there for me to host the possibility of a recalibration of how body, muscle memory, and cognition actually work together.

Olafur Eliasson (2018)

Excerpt from More than Real, Art in the Digital Age, chapter ‘But Doesn’t the Body Matter?’

- Happening Right Now

The project was also inspired by watching the drastic protests in Hong Kong over the political conflicts between Hong Kong and the mainland Chinese government. I was born and grew up in Guangzhou, and my mother’s family has lived in Hong Kong for years. We speak the same language and share the same culture, so one could say that I have a bit more connection to this city than most people in mainland China. I had known that there were conflicts over political decisions between the two governments, and many conflicts of culture and values between the people. However, I was more of a spectator who was not really concerned about these matters. Only recently, when people’s anger brought violence and destruction to the streets and changes in government policy began to affect the lives of my family and friends there, did I start to really perceive these issues and look into these incidents. In the end, the more I look into it, the more I see that this is not “their matter there” but “our matter here”.

We might believe the wave is too minor to perceive until we come forward and look into it; seeing ourselves inside the water and affected by the wave, we actually perceive it. In fact, this behavioural concept could apply to many subjects beyond the Hong Kong-Mainland matters: politics, social and economic trends, ecosystems, climate, etc. If we don’t step forward and look into it, we see nothing, and we won’t understand what it is.

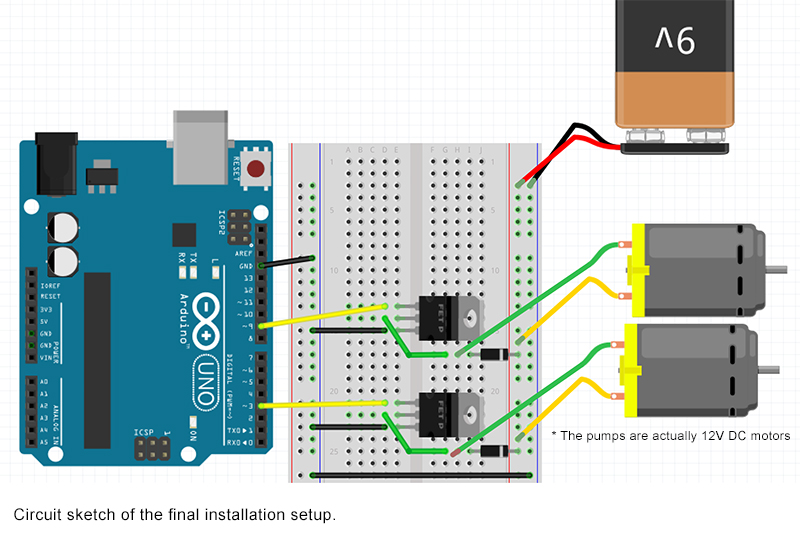

Technical

The project was built with openFrameworks and Arduino. The system has four parts: (1) Arduino-controlled PUMPS, which generate ripples/waves in the water; (2) a WEBCAM, which captures the motion of the water waves (using background differencing and a brightness calculation) and uses that data to affect the computer graphics on the display screen; (3) a DISPLAY SCREEN, which shows designed computer graphics combined with the webcam stream of the water and the audience; (4) the AUDIENCE, who react to the screen and/or the water (mirror). Those reactions (e.g. gazing at or paying attention to the work) are captured by the webcam and processed with a face tracker, and the resulting data changes the electric current that goes through the Arduino to the pumps, which controls how strongly the water waves are generated.

1 - The Mirror and The Camera

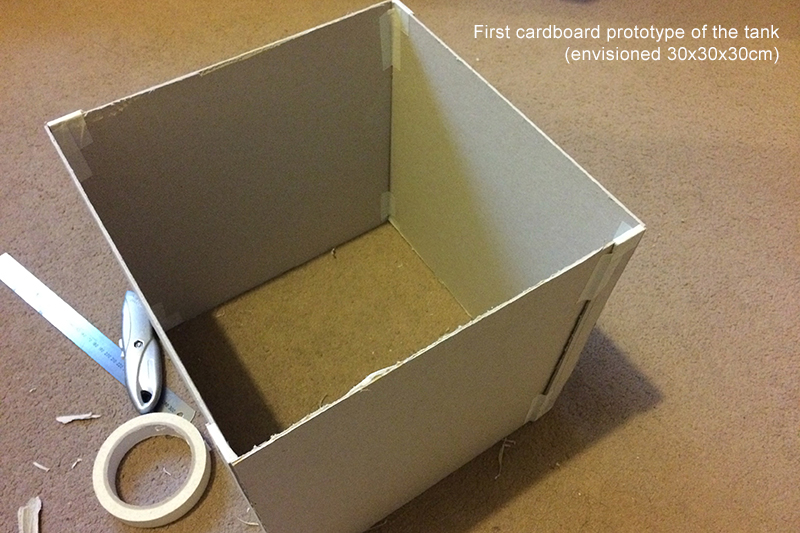

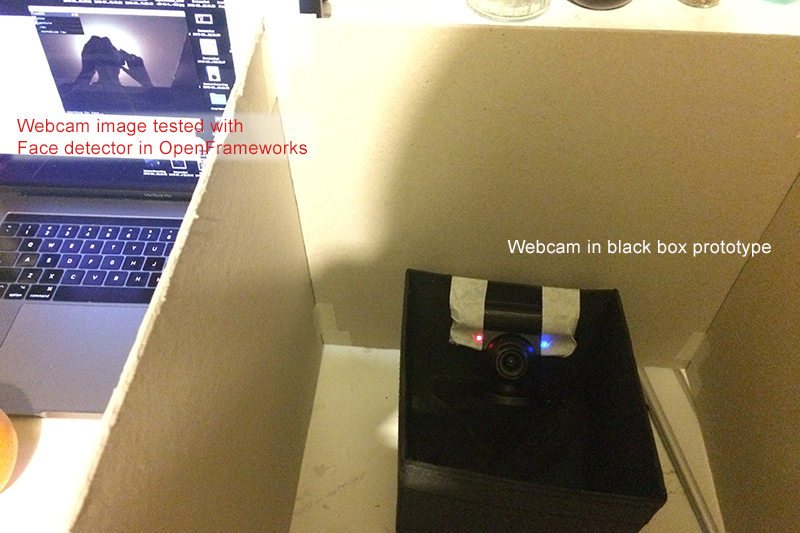

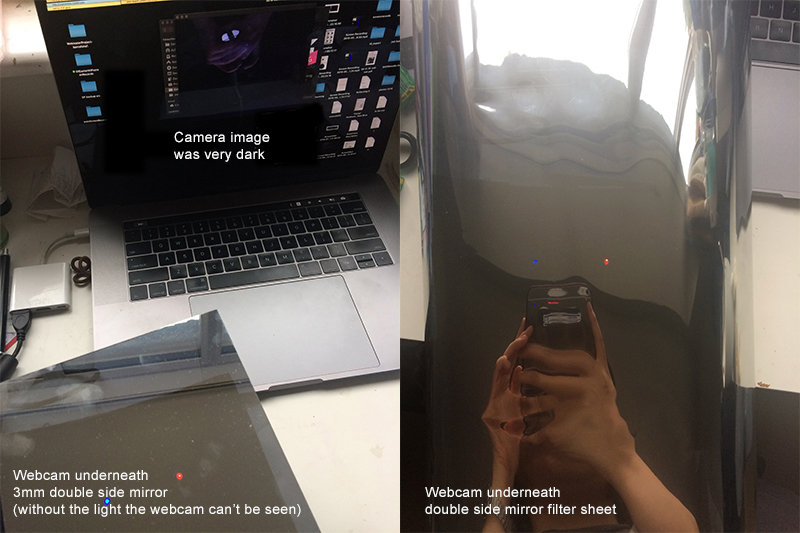

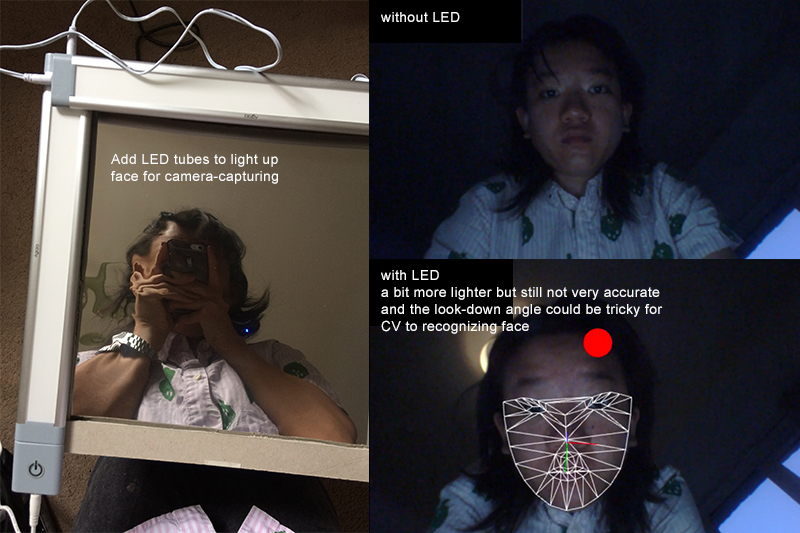

“The viewers notice it and look at it, but are not aware that they are part of the system before they get engaged in it, or while they are interacting with it. After the experience, they might (or might not) find out that they are part of the system.” To achieve this very first intention of the project, my first experimental goal was to “hide the camera”. At first, I experimented with a double-sided (two-way) mirror, where someone on the dark side can see through to the bright side without being seen. To test it, I placed the camera in a black box and covered the box with the two-way mirror. I tested both a 3mm mirror and a mirror filter sheet. But a see-through mirror is not the same as a transparent layer: both of them blocked quite a lot of light, and the camera image was too dark to extract information precisely. To solve the lighting problem, I tried placing LED light tubes around the mirror surface to light up the captured side, and I changed the webcam from a Sony PS3 Eye to a Logitech C920, whose camera settings can be preset with a piece of software called Webcam Settings. However, the result was still not very satisfying.

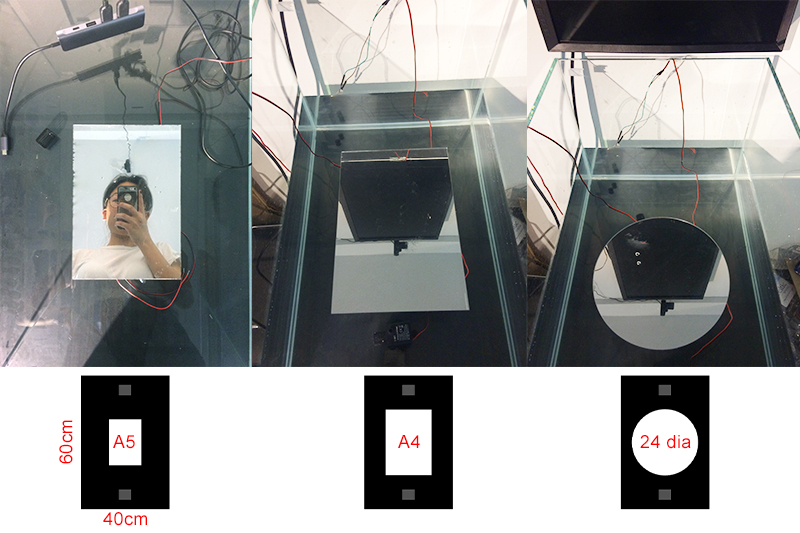

I then gave up on hiding the camera and decided to simply place the webcam above the water tank, on the ceiling or at the top of the screen, and to use algorithms such as face detection, face tracking, and YOLO object (person) classification to get information such as the number of viewers and their distance, in order to control the Arduino. Luckily, during this experiment I found that the webcam could detect a human face/person class in the mirror reflection, which made a good setup for “seeing the audience without being obviously seen”. So placing a mirror in the water tank and a webcam above the tank became the final plan.

2 - Challenges of Setting Up Physical Installation

The other major technical challenge was setting up the system “in the real world”. There were a few key points:

2.1. How to make sure the audience looks into the mirror at the right angle?

My initial plan was to cover the pumps with a full mirror surface (with holes in the mirror for the pumps, and with the mirror itself below the water surface). But from the feedback of peers and tutors, I realised that people are not that interested in such a big mirror: they can see it in the tank from a distance and pass by without coming forward to look into the tank. There is also a high chance that people would not look into the mirror from directly in front of the tank but from its left or right side, which would give the camera a face image at the wrong angle, and the face classification algorithm would not work with it. In addition, the face tracker (face classification) algorithm works best with one person’s face, but with a large mirror, viewers who come with company tend to look into it together rather than one by one. Therefore, after a few tests, I decided to use a small mirror in the centre of the tank, which more or less forces the viewer to step forward and look into it alone. As Brenda Laurel says in Don Norman’s The Design of Everyday Things: “What is represented in the interface is not only the environment and tools but the process of interaction”. As for the shape of the mirror, since the plinth, tank, and screen were all straight lines and angles, I decided to use a circular mirror to bring some curves into the setup and express the poetry of the installation in its form; the curve also corresponds to the softness of water.

2.2. The Weight of Water

The other issue that worried me a lot during the setup was the weight of the water, which the plinth might not have been able to support. (And from peers’ experience, if the tank is made of plastic rather than glass, it might leak or even break with too much water.) I filled the tank half full (45 L of water, i.e. about 45 kg, plus around 10 kg for the glass tank) and it turned out fine in the end. Still, I think it is worth mentioning as advice for people who would like to work with water: don’t forget to consider the weight of the water and the material that supports it. 1 L of water weighs about 1 kg.
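The arithmetic above can be written as a small check to run before filling a tank. This is only a sketch: the function names, the safety factor, and the plinth capacity in the usage note are hypothetical and do not come from the project; only the 45 L, 10 kg and "1 L is about 1 kg" figures are from the text.

```python
# Approximate density of fresh water at room temperature (about 1 kg per litre).
WATER_DENSITY_KG_PER_L = 1.0


def total_load_kg(water_litres: float, tank_kg: float) -> float:
    """Return the approximate weight the plinth has to carry, in kilograms."""
    return water_litres * WATER_DENSITY_KG_PER_L + tank_kg


def within_capacity(load_kg: float, rated_capacity_kg: float,
                    safety_factor: float = 2.0) -> bool:
    """Check the load against a support rating with a margin.

    rated_capacity_kg and safety_factor are assumptions for illustration;
    use the real rating of your own plinth or table.
    """
    return load_kg * safety_factor <= rated_capacity_kg


# Figures from the installation: 45 L of water plus a 10 kg glass tank.
load = total_load_kg(water_litres=45, tank_kg=10)
print(load)  # 55.0
```

For example, `within_capacity(load, rated_capacity_kg=120)` would accept a plinth rated for 120 kg under the assumed margin, while a 100 kg rating would be rejected.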

3 - Arduino and Pumps

To control the pumps with Arduino using webcam data from openFrameworks, my workflow was: (1) following an example from Easy arduino projects on YouTube, run the pumps with Arduino; (2) following an example from keitasumiya, test sending a gradually changing serial value from openFrameworks to Arduino with a simple LED; (3) replace the LED with the pumps in the circuit and add the face tracker code, using the boolean of whether a face is present as the trigger to increase/decrease the serial value that controls the electric current going through the Arduino.
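The logic of step (3) can be sketched in a few lines. This is a language-neutral illustration in Python, not the openFrameworks code of the installation: the function names, the step size, and the 40 to 255 range are assumptions (the lower bound is kept above zero only because the water is described as never fully still).

```python
from typing import Iterable, List


def next_pump_level(level: int, face_detected: bool, step: int = 5,
                    min_level: int = 40, max_level: int = 255) -> int:
    """Ramp the pump level up while a face is present, down otherwise.

    The result is clamped to [min_level, max_level]. All default values
    are hypothetical, chosen only for illustration.
    """
    target = level + step if face_detected else level - step
    return max(min_level, min(max_level, target))


def encode_for_serial(level: int) -> bytes:
    """Pack the level into a single byte, ready to be written to a serial port."""
    if not 0 <= level <= 255:
        raise ValueError("level must fit in one byte (0..255)")
    return bytes([level])


def simulate(face_flags: Iterable[bool], start_level: int = 40) -> List[int]:
    """Run the ramp over a sequence of per-frame face detections."""
    level = start_level
    history = []
    for face in face_flags:
        level = next_pump_level(level, face)
        history.append(level)
    return history


# A viewer looks into the mirror for three frames, then walks away.
print(simulate([True, True, True, False, False]))  # [45, 50, 55, 50, 45]
```

On the Arduino side, a byte like this would typically be read with `Serial.read()` and applied to a PWM pin with `analogWrite()`, although the actual circuit and sketch used in the installation are not described here.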

4 - Shader Computing Graphics

From the experience of my last Creative Coding workshop project, I believed that in openFrameworks it is necessary to compute the visuals on the GPU; otherwise it would be far too slow to run a complicated system and/or sophisticated visuals. So I learned to use shaders from Andy Lomas’s workshop resources and the openFrameworks examples. The final visual was created with two shaders.

The first one is the background layer, modified from @kyndinfo’s Scaled Noise. I changed some values to make it look like a streaming waterfall, but with a very computational and glitchy aesthetic. I also combined it with a texture of the live camera image to give the visuals a bit more lively variation. The speed at which this “waterfall” flows depended on how intensely the water was moving in the tank, which was captured by the webcam on top, processed with a background differencing algorithm, and calculated from the average brightness of the difference images. When the water was calm, the graphic streamed slowly; when the water waved intensely, it streamed faster.

The second shader is the circle layer. It is live webcam video captured from the top of the tank, cropped with the Webcam Settings software, then cropped again in openFrameworks with a circle-in-rectangle image mask so that only the circular mirror area is drawn. After that, I used this camera image as the texture of a noise distortion shader modified from @patriciogv’s Fractal Brownian Motion. I designed it to look more or less dissolved into the background and to become clearer only when a viewer stepped forward, looked into the mirror, and turned up the water waves, which was detected by the webcam background differencing.
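The measurement behind both layers (background differencing, average brightness, then a mapping to a visual parameter) can be sketched without any graphics library. The Python below is an illustration under stated assumptions, not the installation's openFrameworks code: frames are plain lists of grayscale values, the background is assumed to be a stored frame of calm water, and the speed range and all function names are hypothetical.

```python
from typing import List

Frame = List[List[int]]  # grayscale image, one value per pixel in 0..255


def motion_intensity(frame: Frame, background: Frame) -> float:
    """Mean absolute brightness difference between frame and background.

    The result is scaled to 0..1 (0 = identical, 1 = maximal difference).
    """
    total = 0
    count = 0
    for row, bg_row in zip(frame, background):
        for pixel, bg_pixel in zip(row, bg_row):
            total += abs(pixel - bg_pixel)
            count += 1
    return total / (count * 255) if count else 0.0


def flow_speed(intensity: float, min_speed: float = 0.2,
               max_speed: float = 2.0) -> float:
    """Map motion intensity linearly to a shader speed (range is assumed)."""
    intensity = max(0.0, min(1.0, intensity))
    return min_speed + (max_speed - min_speed) * intensity


def circular_mask(width: int, height: int, cx: float, cy: float,
                  radius: float) -> Frame:
    """Circle-in-rectangle mask: 1 inside the circle, 0 outside."""
    return [[1 if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2 else 0
             for x in range(width)]
            for y in range(height)]


# Calm water: the frame matches the stored background, so the flow is slowest.
calm = [[100, 100], [100, 100]]
print(flow_speed(motion_intensity(calm, calm)))  # 0.2
```

In a shader pipeline, a value like `flow_speed(...)` would usually be passed in as a uniform each frame, and the mask would be multiplied with the camera texture so that only the mirror area is drawn.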

Future Development

During the process, I spent a lot of time and money on making the plinth, managing the water in the tank, etc. The outcome did look as neat and clean as I expected, but if there were more time, rather than separating it into two parts, I would like to try making a single vessel from a material like cement. That way it would be aesthetically neater, the screen and the water surface would be more symmetrical, and people would only see the water and mirror when they come closer to the installation. The other thing I’d like to improve is to replace the live webcam video of the audience with previously stored or random audience video, so that people would not see themselves on the screen while they are looking in the mirror, but someone else instead. Maybe it could also play a video of someone looking into the mirror when no one is looking into the installation. That would present the concept of seeing/not seeing the self in a more sophisticated way.

Self-Evaluation

In general, I’m quite happy with the project. It’s my first proper installation work. My goal was to make it simple and nice: a clean and neat aesthetic, simple interaction, and polysemous meanings (of course I have my own interpretation, but different audiences can have different interpretations, and I believe that is a kind of poetry). From the exhibition feedback, I think this goal has been achieved. People from galleries mentioned that they liked the simple and clever design, and people from non-art backgrounds found it interesting to play with the relation between the camera and the mirror (surprisingly, it was quite popular to take selfies between the mirror under the waves and the screen image). Exploring the border / combination between digital and physical materials has been one of my main goals since I joined this course. The experience of this project definitely gave me more confidence to work with this subject in the future.

References

- Conceptual

Ólafur Elíasson, Godfrey, M., Tate Modern, Solomon R. Guggenheim Museum, 2019. Olafur Eliasson: in real life.

Barlow, M., 2004. Touch: Sensuous Theory and Multisensory Media, Laura U. Marks. Minneapolis: University of Minnesota Press, 2002, 259 pp. Canadian Journal of Film Studies 12, 126–129. https://doi.org/10.3138/cjfs.12.2.126

Birnbaum, D., Kuo, M.Y. (Eds.), 2018. More than real: art in the digital age: 2018 Verbier Art Summit. Koenig Books, London.

McCarthy, J., 2004. Technology as experience. MIT Press, Cambridge, Mass.

- Technical

DIY MINI FOUNTAIN SHOW LIKE DUBAI - ARDUINO, n.d.

Sumiya, K., 2017. keitasumiya/oF_arduino.

Shaders: Common ingredients for Fragment Shaders [WWW Document], n.d. URL https://learn.gold.ac.uk/mod/book/view.php?id=652211&chapterid=18156.

GLSL Shader Editor [WWW Document], n.d. The Book of Shaders. URL https://thebookofshaders.com/edit.php?log=161127200614.

GLSL Shader Editor [WWW Document], n.d. The Book of Shaders. URL https://thebookofshaders.com/edit.php#11/lava-lamp.frag.

ofBook - Introducing Shaders [WWW Document], n.d. URL https://openframeworks.cc/ofBook/chapters/shaders.html.

Thanks

Thanks to lecturers Theo Papatheodorou, Atau Tanaka, Rachel Falconer, Helen Pritchard, Andy Lomas, Evan Raskob and staff Anna Clow, Pete Mackenzie, Konstantin Leonenko, Erika and Joe for their feedback and support.

Thanks to my classmates Andi Wang, Ankita Anand, Eirini Kalaitzidi, Eddie Wong, James Tregaskis, Keita Ikeda, Luke Dash and Panja Göbel for their feedback and help.