Storm

Storm is a dance performance that explores the body as a vehicle for expression through the synthesis of sound, motion and spatial awareness.

produced by: Yewen Jin

Introduction

We first learned to perceive the world as a three-dimensional space not by looking, but by touching. By reaching out with their body and getting feedback such as obstacles, friction, heat, pain or even sound, a child learns to situate themselves in the world. Building on what we learn by moving and touching, our two eyes read light and our two ears read sound to locate objects in relation to us, and ourselves in relation to the environment. Eyes and ears are the aids, but the body is the fundamental instrument with which we interact with the world.

This project is a prototype, part of my ongoing exploration of the crossover between music/sound, space, touch and awareness of the body. Enabled by Kinect and the way it’s programmed, the piece combines choreography and music composition into a single process and uses the naturally synchronized flow of events to create a narrative.

Technical

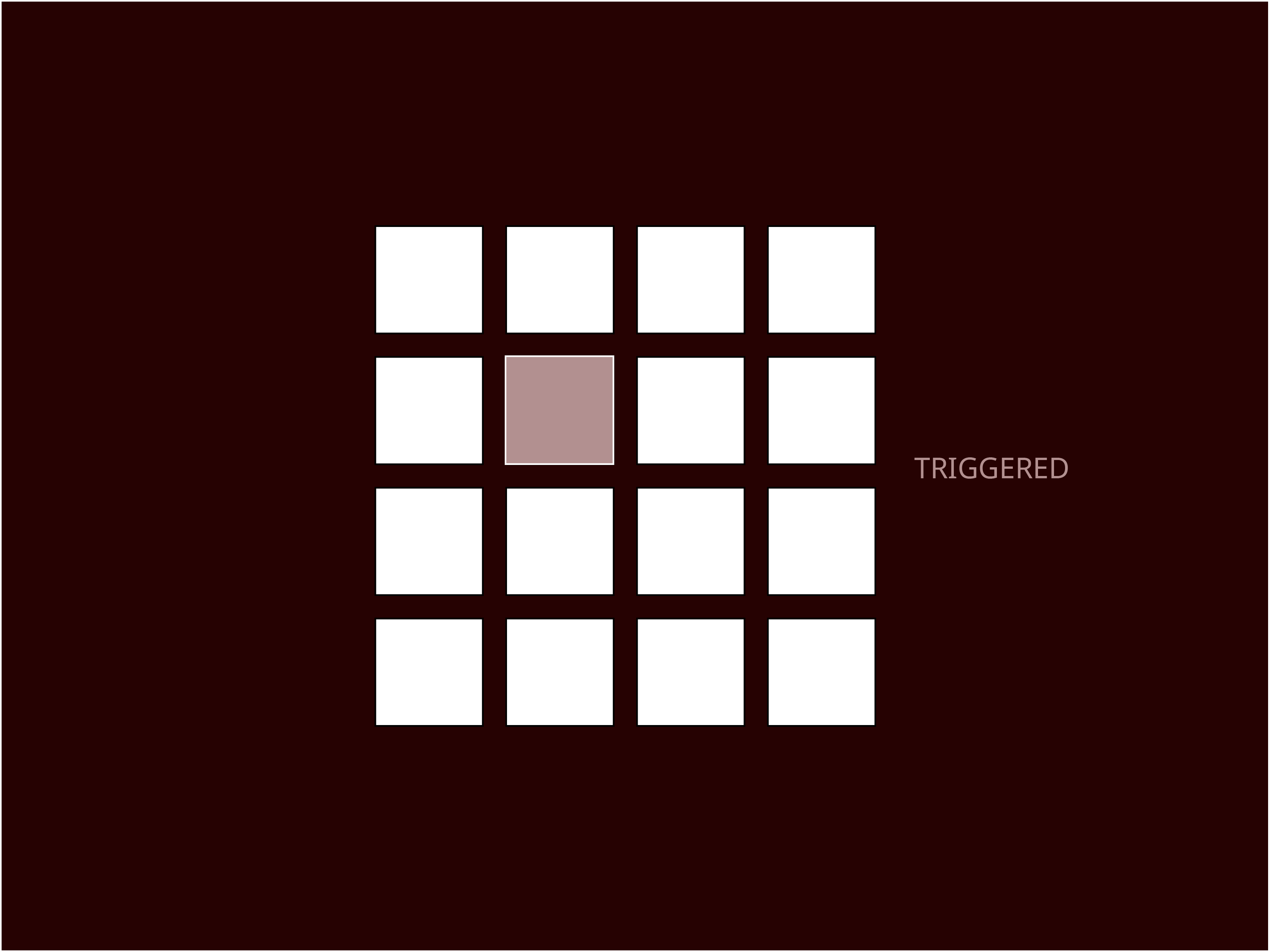

The performance uses Kinect as a spatially reactive instrument: it maps the body, as a physical object, into a virtual space that contains the triggers for sound. When a collision between the body and a trigger is detected in virtual space, a sample is played. As shown in the video, there are currently 16 pre-recorded samples laid out in a 4x4 grid, all placed roughly at the height of the top of my head.
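The collision test described above can be sketched in plain C++. This is only an illustration under stated assumptions, not the code used in the performance: the `Point3` and `TriggerBox` types, the `makeGrid` and `detectHits` functions, the box dimensions and the `minPoints` threshold are all hypothetical, and the point cloud is assumed to arrive as a list of 3D points in metres.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical types for illustration; not taken from the project's code.
struct Point3 { float x, y, z; };

// Axis-aligned box acting as one sound trigger in virtual space.
struct TriggerBox {
    Point3 min, max;
    bool contains(const Point3& p) const {
        return p.x >= min.x && p.x <= max.x &&
               p.y >= min.y && p.y <= max.y &&
               p.z >= min.z && p.z <= max.z;
    }
};

constexpr std::size_t kRows = 4;
constexpr std::size_t kCols = 4;
using Grid = std::array<TriggerBox, kRows * kCols>;
using Hits = std::array<bool, kRows * kCols>;

// Lay out a 4x4 grid of boxes over the floor plane (x, z), all centred at
// the same height (y). All sizes are assumed values in metres.
Grid makeGrid(float cellSize, float boxSize, float headHeight) {
    assert(cellSize > 0 && boxSize > 0 && boxSize <= cellSize);
    Grid grid{};
    const float half = boxSize / 2.0f;
    for (std::size_t r = 0; r < kRows; ++r) {
        for (std::size_t c = 0; c < kCols; ++c) {
            const float cx = (static_cast<float>(c) + 0.5f) * cellSize;
            const float cz = (static_cast<float>(r) + 0.5f) * cellSize;
            grid[r * kCols + c] = TriggerBox{
                {cx - half, headHeight - half, cz - half},
                {cx + half, headHeight + half, cz + half}};
        }
    }
    return grid;
}

// A box counts as hit once at least minPoints cloud points lie inside it,
// so that a few stray noisy points do not fire a sample.
Hits detectHits(const Grid& grid, const std::vector<Point3>& cloud,
                std::size_t minPoints) {
    std::array<std::size_t, kRows * kCols> counts{};
    for (const Point3& p : cloud) {
        for (std::size_t i = 0; i < grid.size(); ++i) {
            if (grid[i].contains(p)) ++counts[i];
        }
    }
    Hits hits{};
    for (std::size_t i = 0; i < grid.size(); ++i) {
        hits[i] = counts[i] >= minPoints;
    }
    return hits;
}
```

Calling `detectHits(makeGrid(1.0f, 0.4f, 1.7f), cloud, 2)` once per frame would give one flag per trigger, and each flag could then be used to start the matching sample. Requiring a minimum number of points per box is a design choice of this sketch that trades a little sensitivity for robustness against sensor noise.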

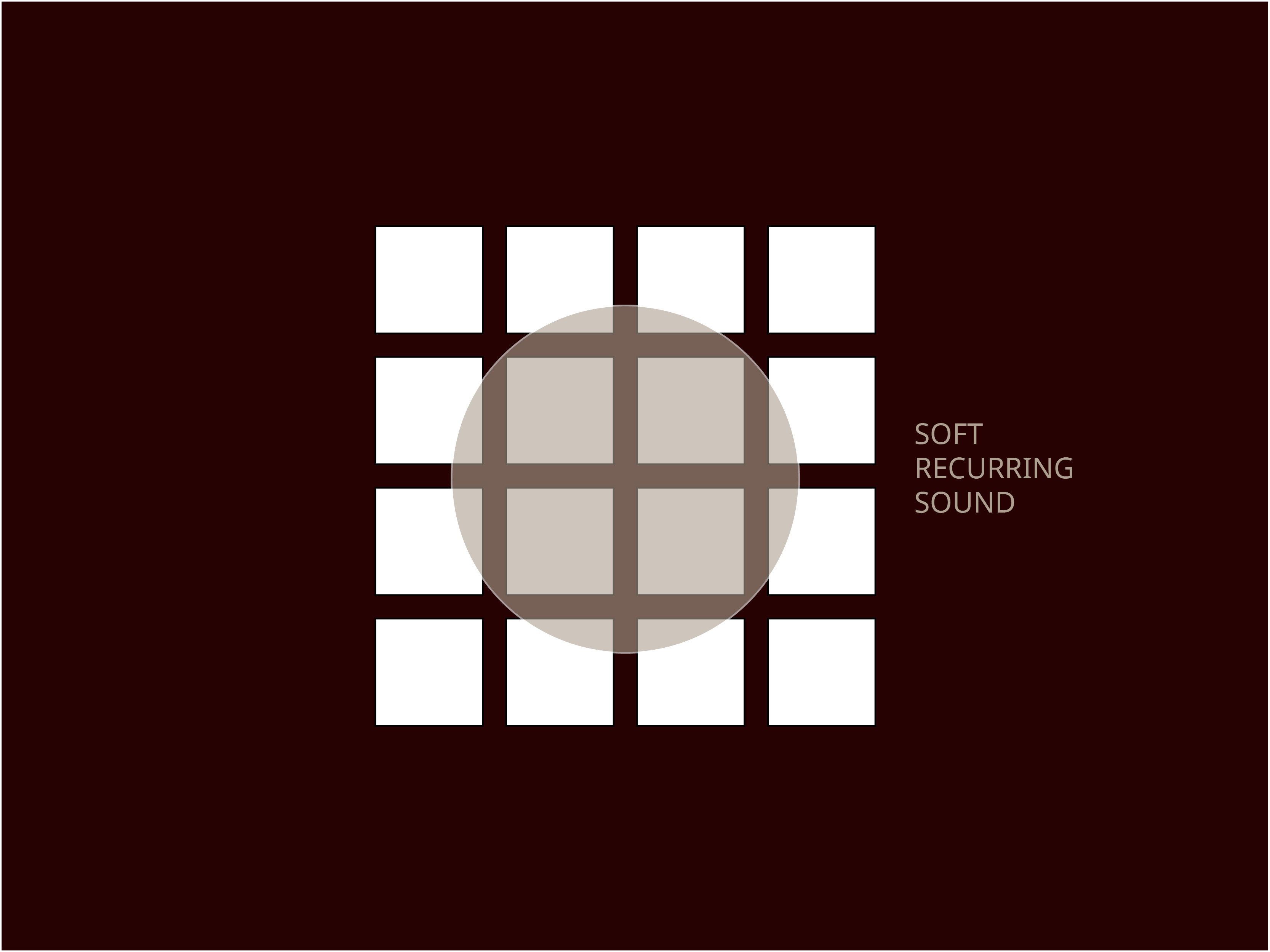

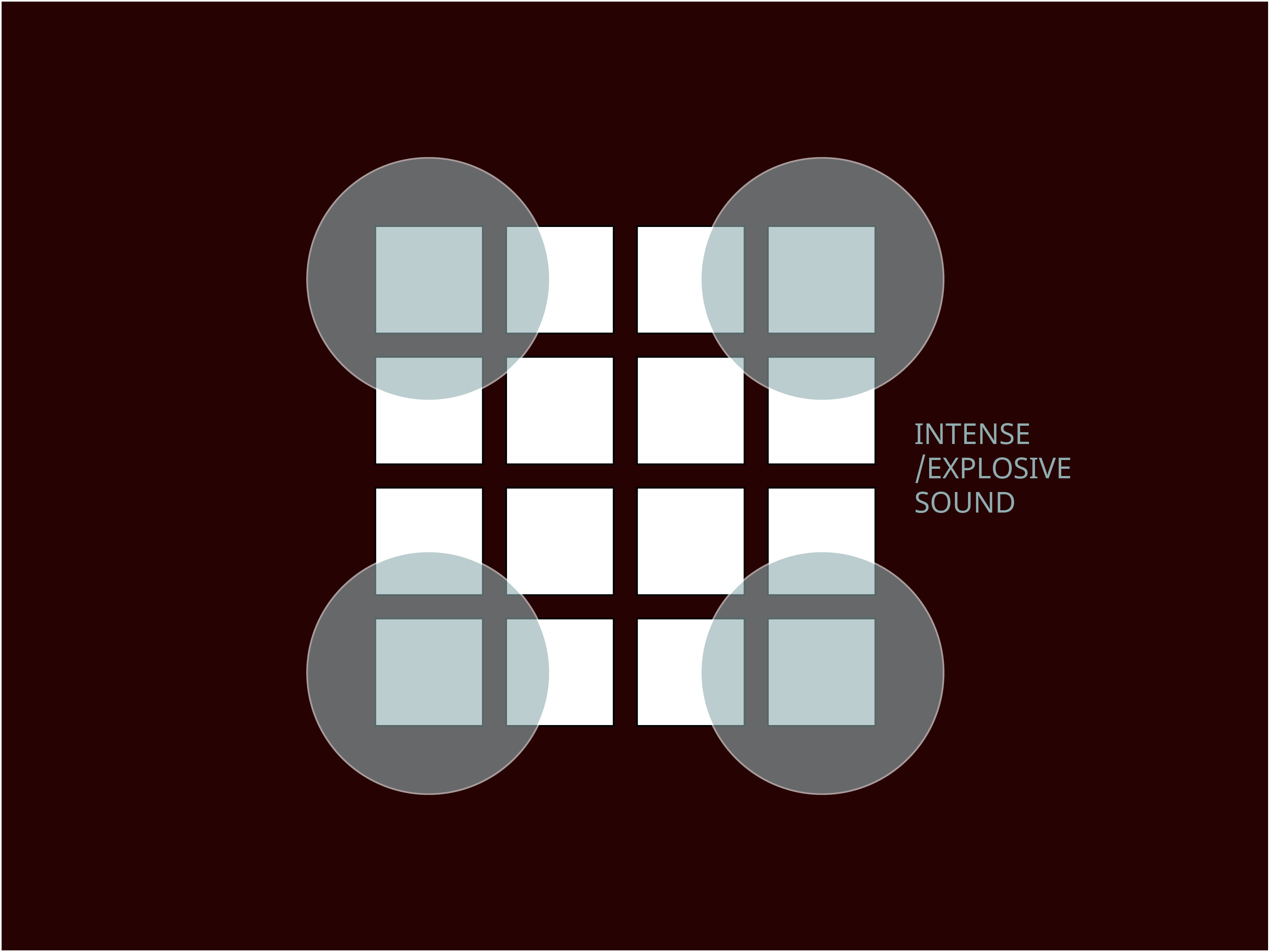

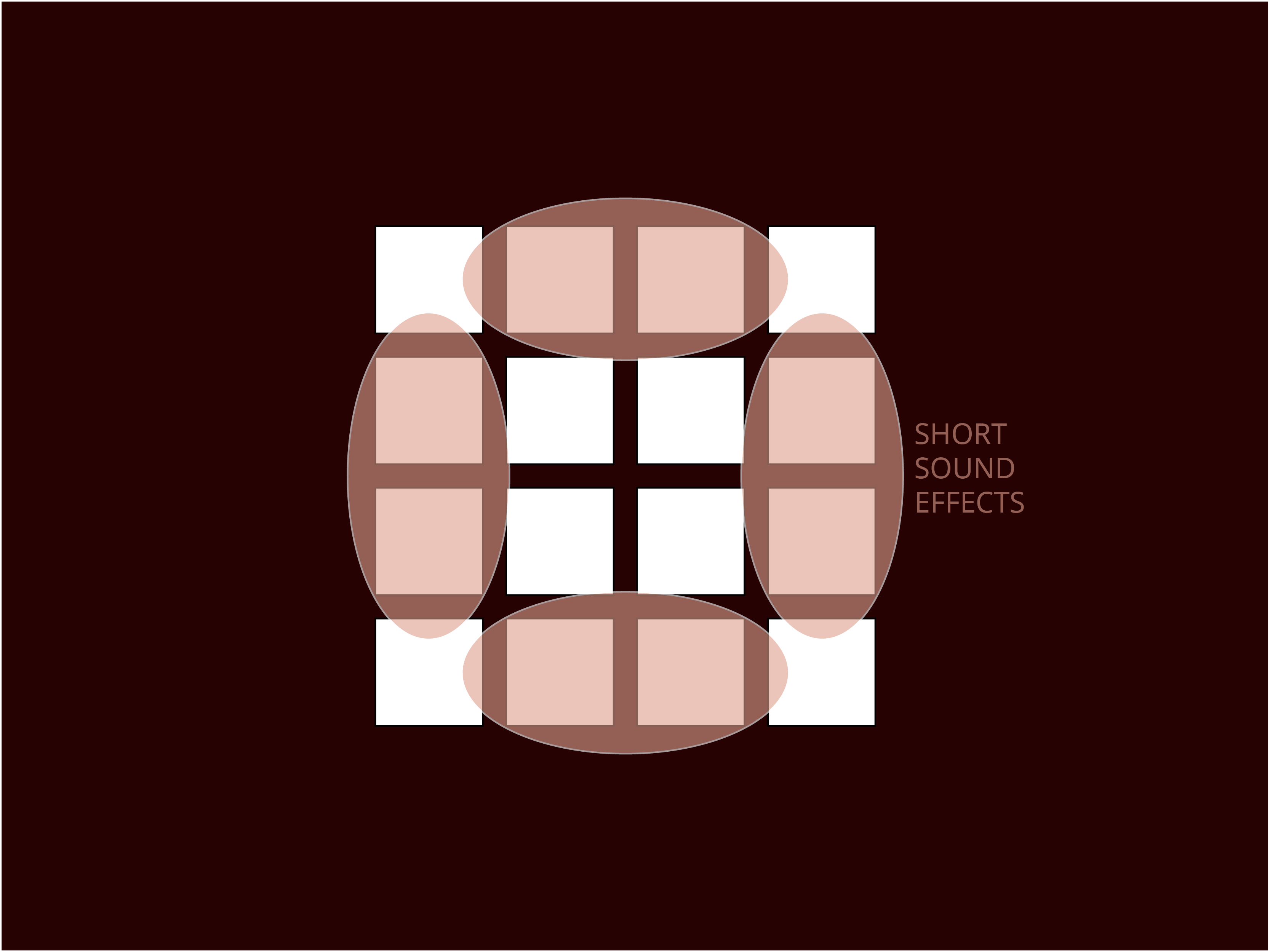

The grid layout creates a spatial framework for the arrangement of expressions. For sound composition, I put the samples into a few different categories, each used for a different effect (see diagrams). The more intense samples are triggered as larger events, and they’re meant to be played sequentially and not too frequently. These are placed in the four corners so they won’t be triggered all at once. Then there are the smooth ambient sound effects such as running water and bells, which come and go throughout the piece without creating disturbance. The rest of the samples are other sound effects such as percussion and bass. In a way, the arrangement is the most important part of the composition, and the combination and layout are the result of many rounds of testing.

Once the setup is ready, the performance works by building pace and tension through body movement. With spatial awareness of the sound locations as the backdrop, every dance move is made with the intention of setting up the next event, and as the sound plays, its feedback in turn affects the expression of the dance move.

Further Development

For this prototype I used the point cloud system to create object input, which means that it requires a large enough clear space to recognize the body properly, and any other object nearby could create interference. The next iteration could integrate skeleton rigging and motion capture to improve precision and distinguish between different body parts, which would open up many more possibilities for input control.

The device also has a straightforward input system where one collision corresponds to one sound. The benefit is simplicity of control, which makes it more intuitive to operate. The more complicated the control is, the more practice it takes to acquire muscle memory for it, and that matters in dance performance, where concentration is needed for the aesthetics of the body movement itself. However, I'd still like to push this further and perhaps bring in one or two extra effect controls, such as looping and sequencing, to enrich the musical composition aspect.
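One possible way to keep the "one collision, one sound" rule is to fire a sample only on the frame where the body enters a trigger, rather than on every frame it stays inside. The sketch below illustrates that idea. It is an assumption about how such a rule could be implemented, not a description of the prototype's code, and the `OneShotTriggers` class and `kTriggers` constant are hypothetical names.

```cpp
#include <array>
#include <cstddef>
#include <vector>

constexpr std::size_t kTriggers = 16;  // one per sample in the 4x4 grid

// Remembers last frame's hit state so each entry into a trigger
// fires exactly once (rising-edge detection).
class OneShotTriggers {
public:
    // hitNow[i] is true while the body overlaps trigger i this frame.
    // Returns the indices of triggers that were entered on this frame.
    std::vector<std::size_t> update(const std::array<bool, kTriggers>& hitNow) {
        std::vector<std::size_t> fired;
        for (std::size_t i = 0; i < kTriggers; ++i) {
            if (hitNow[i] && !wasHit_[i]) {
                fired.push_back(i);
            }
        }
        wasHit_ = hitNow;  // becomes "previous frame" for the next call
        return fired;
    }

private:
    std::array<bool, kTriggers> wasHit_{};  // all false at start
};
```

Each index returned by `update` would correspond to one sample to start. Holding a hand inside a trigger returns nothing on later frames, and the trigger re-arms as soon as the body leaves it. Extra controls such as looping could later be layered on top of the same per-trigger state.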

References:

The code is built from the point cloud system in the openFrameworks Kinect example. The prototype builds on the idea of the “air drum” from the Workshop for Creative Coding week 16 lab exercise.