Understanding Intelligence

'Understanding Intelligence' is an installation which embodies the 4 main dimensions of human intelligence as described in Yogic Philosophy through computational mediums.

produced by: Ankita Anand

Introduction

According to Yogic Philosophy there are 16 dimensions to human intelligence, 4 main ones being: Buddhi (Intellect), Manas (Memory), Ahankara (Identity) and Chitta (Cosmic Intelligence). Understanding Intelligence is the manifestation of these through computation. This installation combines physical computing, object-oriented programming and machine learning to embody the unique nature of each of these dimensions.

Concept and background research

This project is the result of my own personal experiences and findings through my yogic practices. By the time yoga reached the West, it had been skewed into many different shapes and forms, so it becomes even more important to re-emphasise its origins. The word ‘yoga’ essentially means ‘union’: union with the ultimate reality. It offers a way to experience the nature of existence as it is. In particular, I became fascinated by the yogic perspective on human intelligence. Amongst the 16 different dimensions of intelligence I chose to work with the 4 main ones: Buddhi (Intellect), Manas (Memory), Ahankara (Identity) and Chitta (Cosmic Intelligence). Each has its own nature and qualities, as described below:

Buddhi (Intellect)

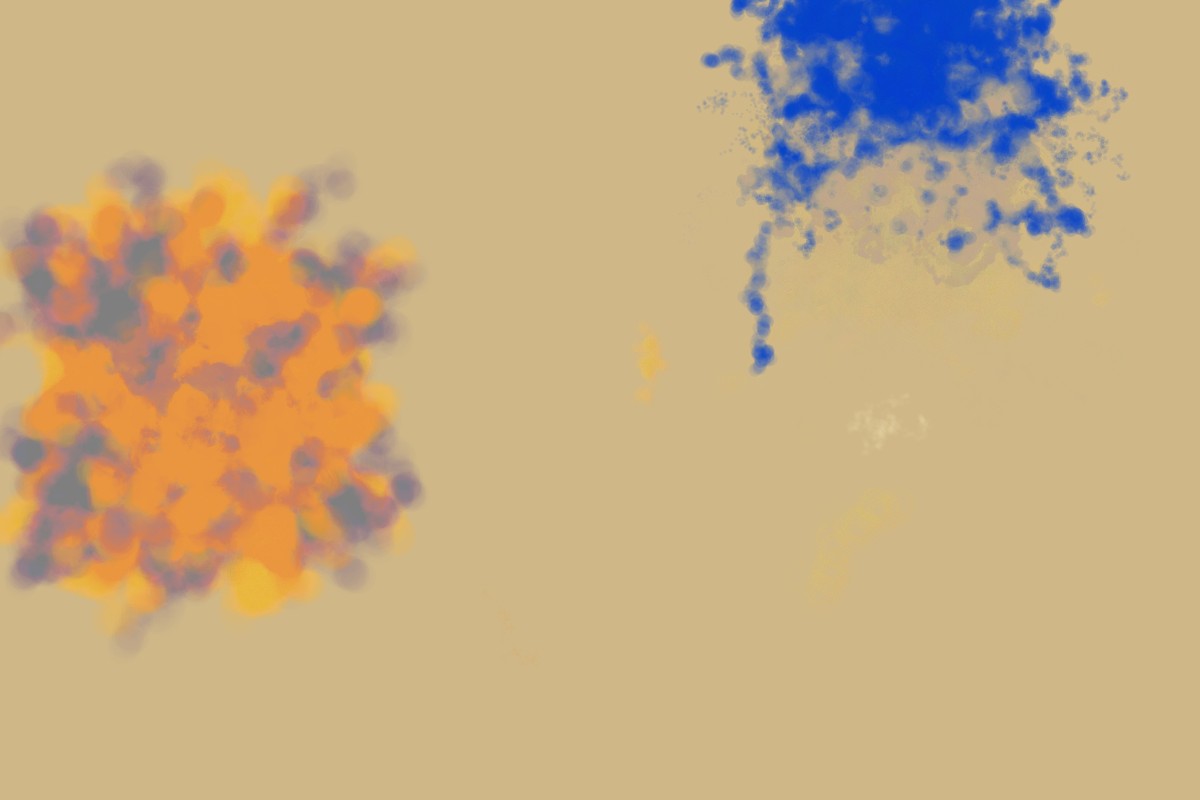

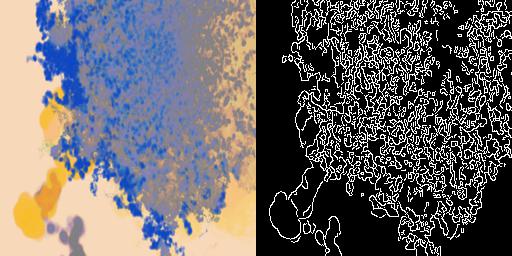

The intellect is like a knife: its purpose is to dissect and aid survival. It does not have the capacity to go beyond its own limitations. Hence I decided to represent Buddhi through clusters of colours that follow a certain set of rules and expand and grow onto each other.

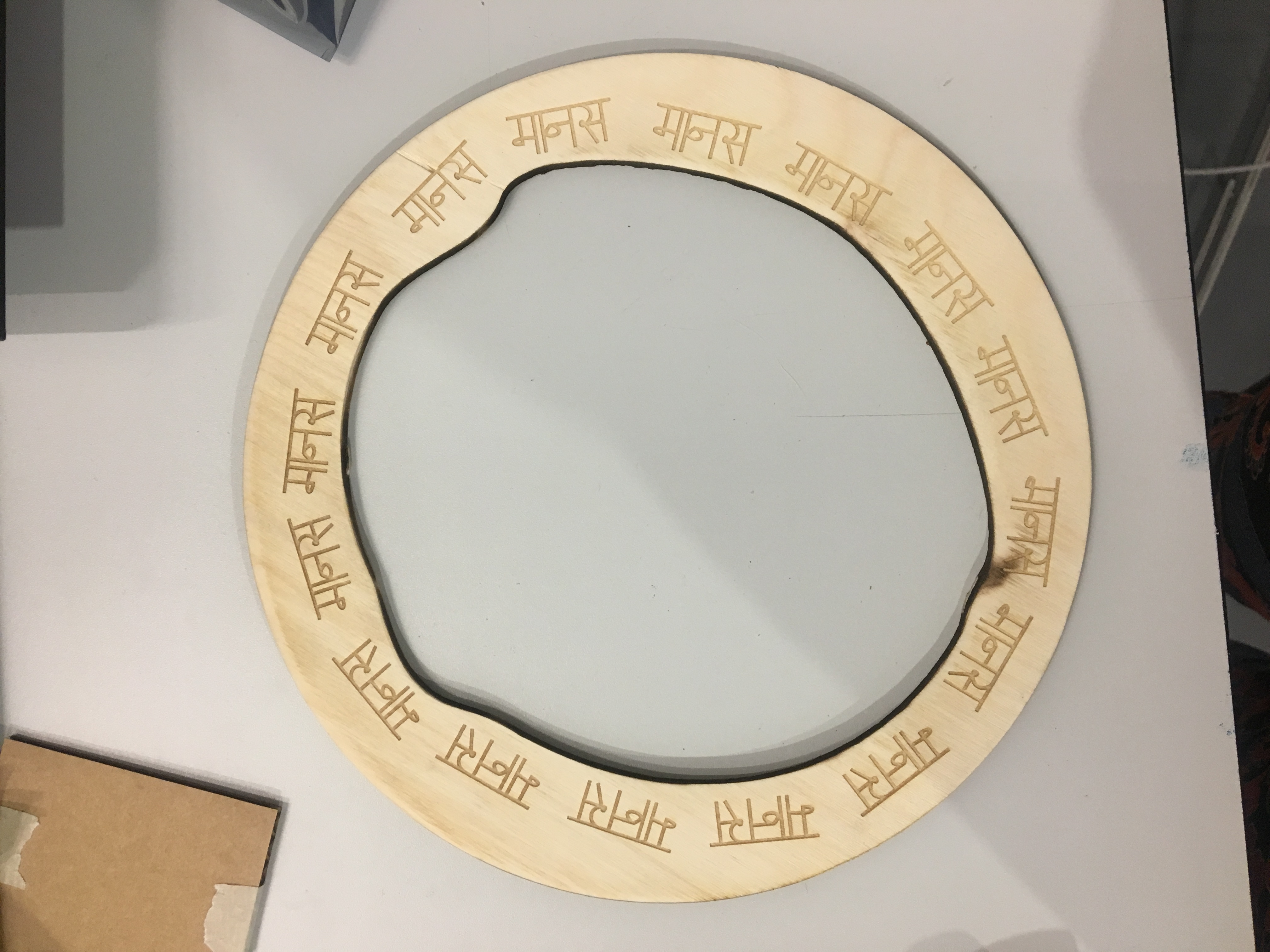

Manas (Memory)

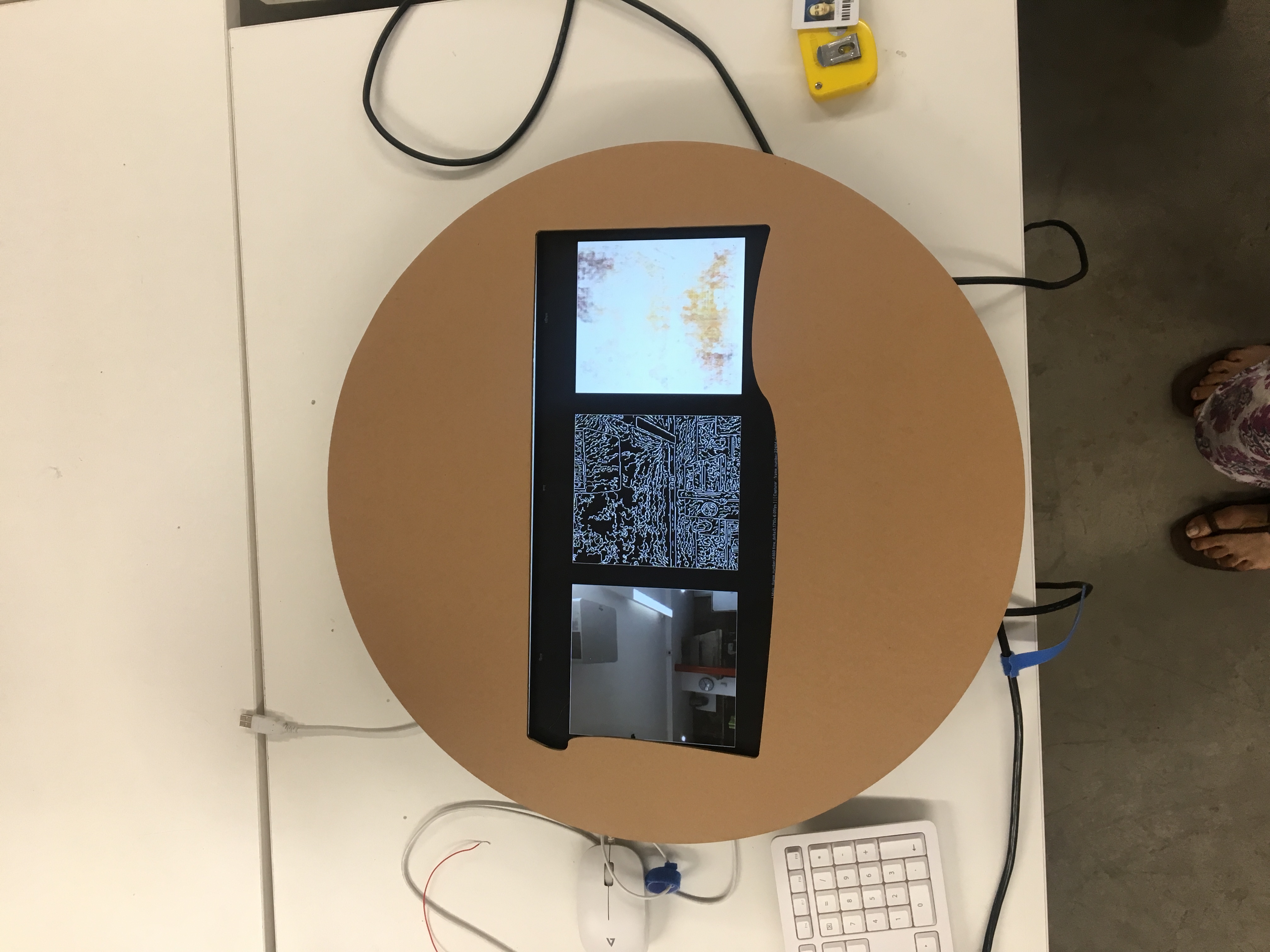

When we say memory in Yoga, it does not mean memory in our minds but memory held in every cell of our body (manomaya kosha), which is on a phenomenal scale. The nature of Manas is like a boundary, and it is also an input to the intellect. Hence Manas is embodied through three ultrasonic sensors placed under three heaps of soil.

Ahankara (Identity)

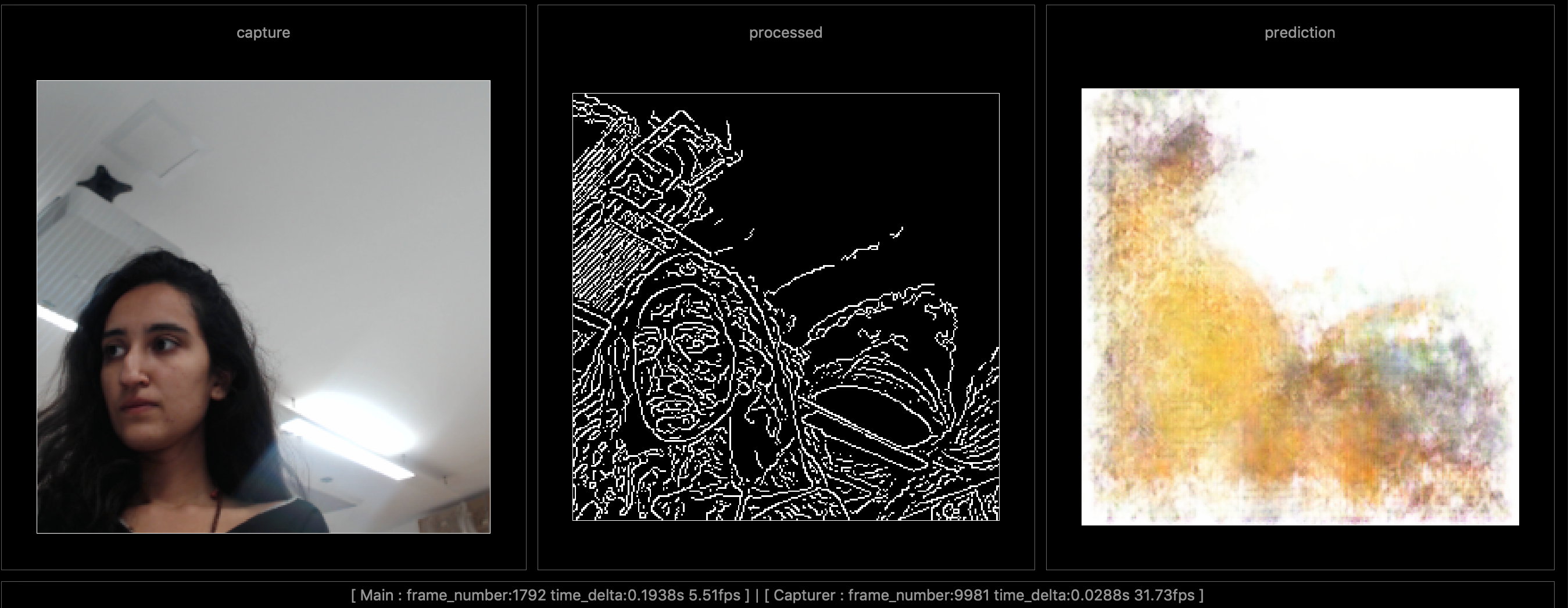

Ahankara gives a sense of identity: once you strongly identify with something, the intellect only functions in that context. Hence it is depicted by a deep learning model trained on the Buddhi. Because Ahankara is strongly identified with Buddhi, it starts to see everything as that.

Chitta (Cosmic Intelligence)

Chitta is pure intelligence, unsullied by memory, intellect and identification. It is beyond these other three dimensions; ever-present but rarely accessed. Chitta is embodied by a glowing orb.

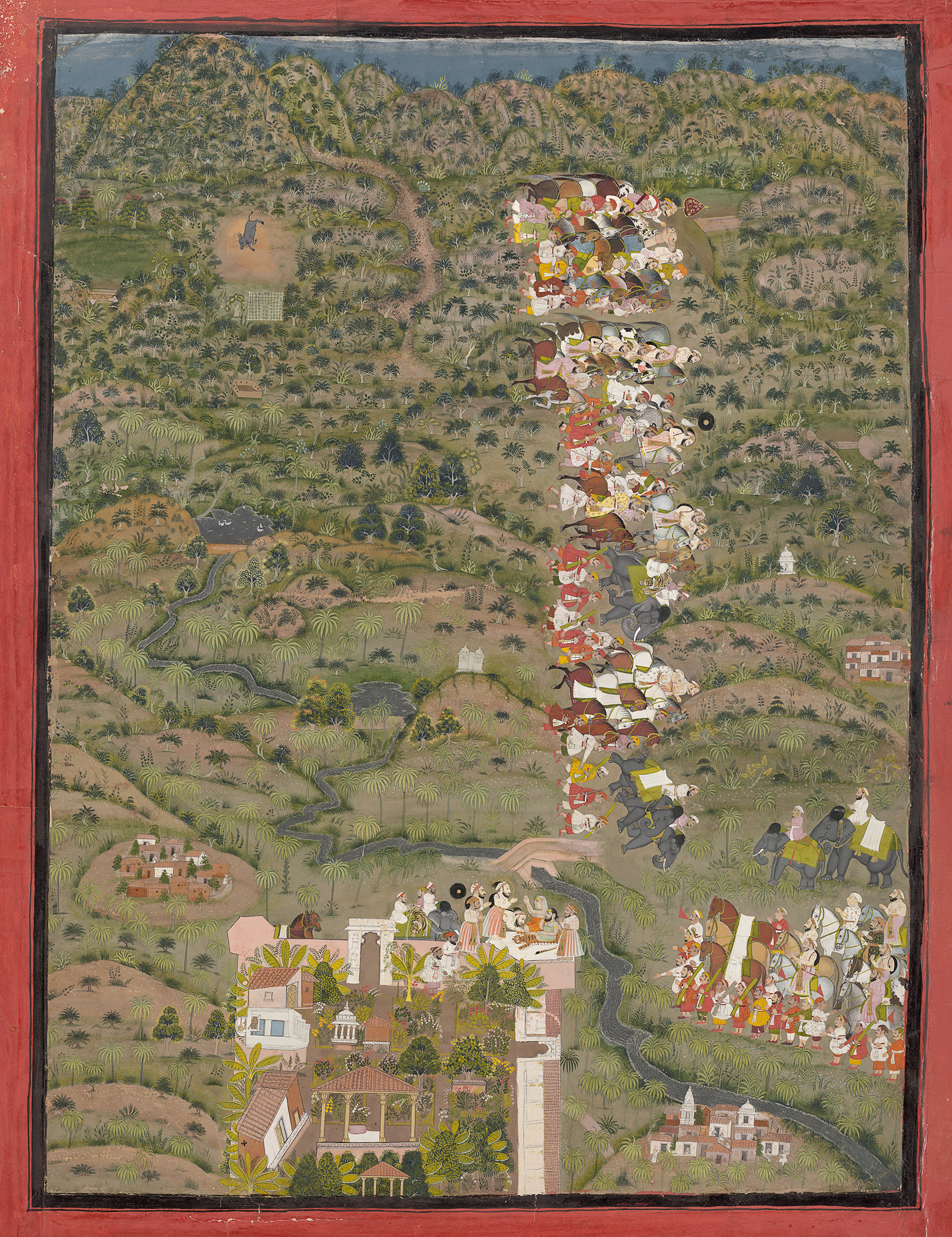

I was also heavily influenced by the representation of yoga in Indian visual art and culture. Throughout history, yoga has been documented in the form of sculpture, illustrated manuscripts, prints, photographs, books and film, which elucidate the key aspects of yoga as a practice as well as its esoteric aspects. My choice of colours and the Sanskrit engravings on the frames are all an attempt to belong to this ancient visual language.

Walter De Maria’s ‘The New York Earth Room’ is an interior earth sculpture: essentially 250 cubic yards of earth in a room. I was inspired by how it brings the outdoors indoors and re-emphasises nature.

Tabita Rezaire is a new media artist whose work I was influenced by. She tries to envision network sciences - organic, electronic and spiritual - as healing technologies. I am drawn to her work as she attempts to find spaces where spirituality and technology might overlap.

Technical

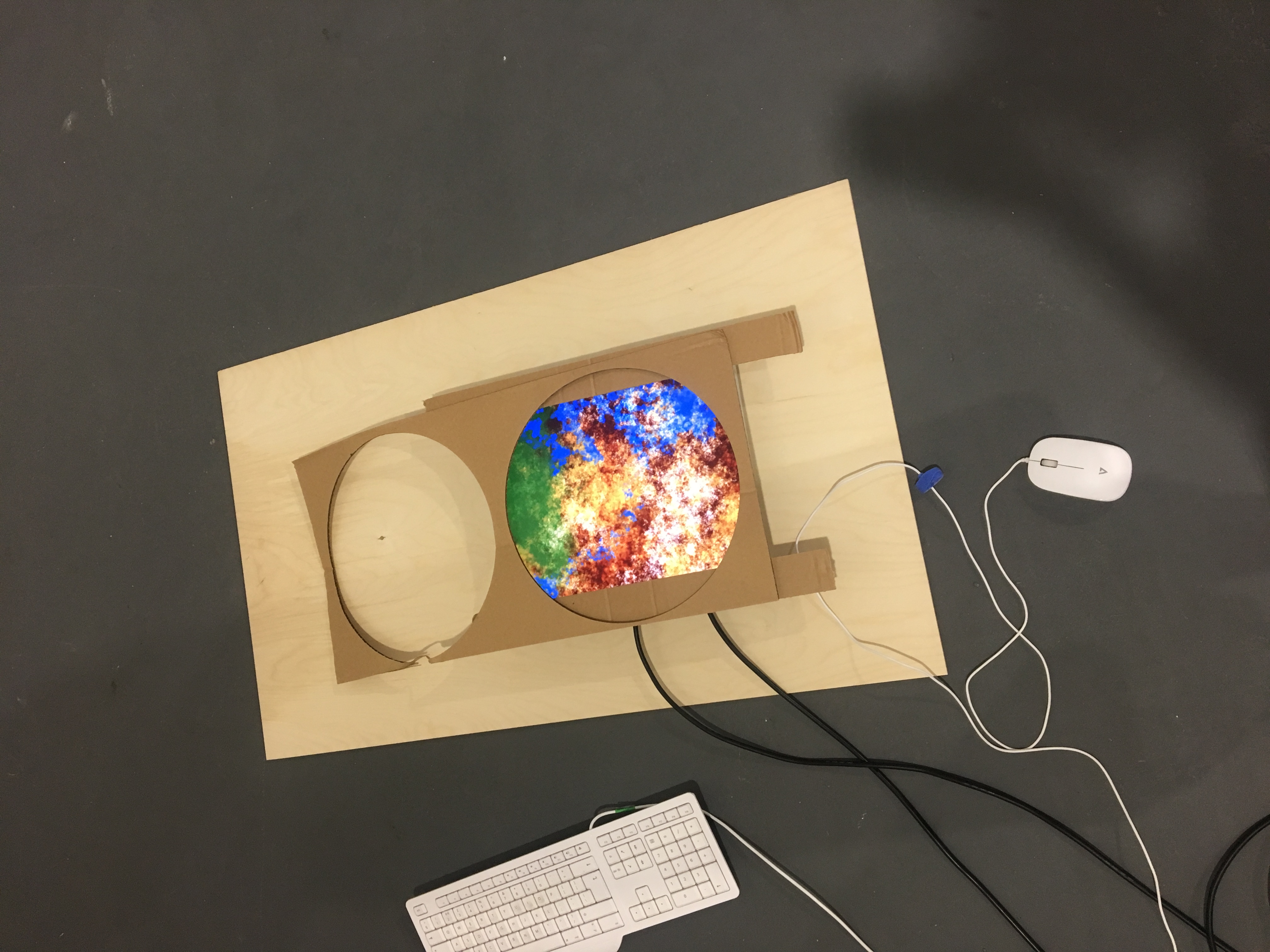

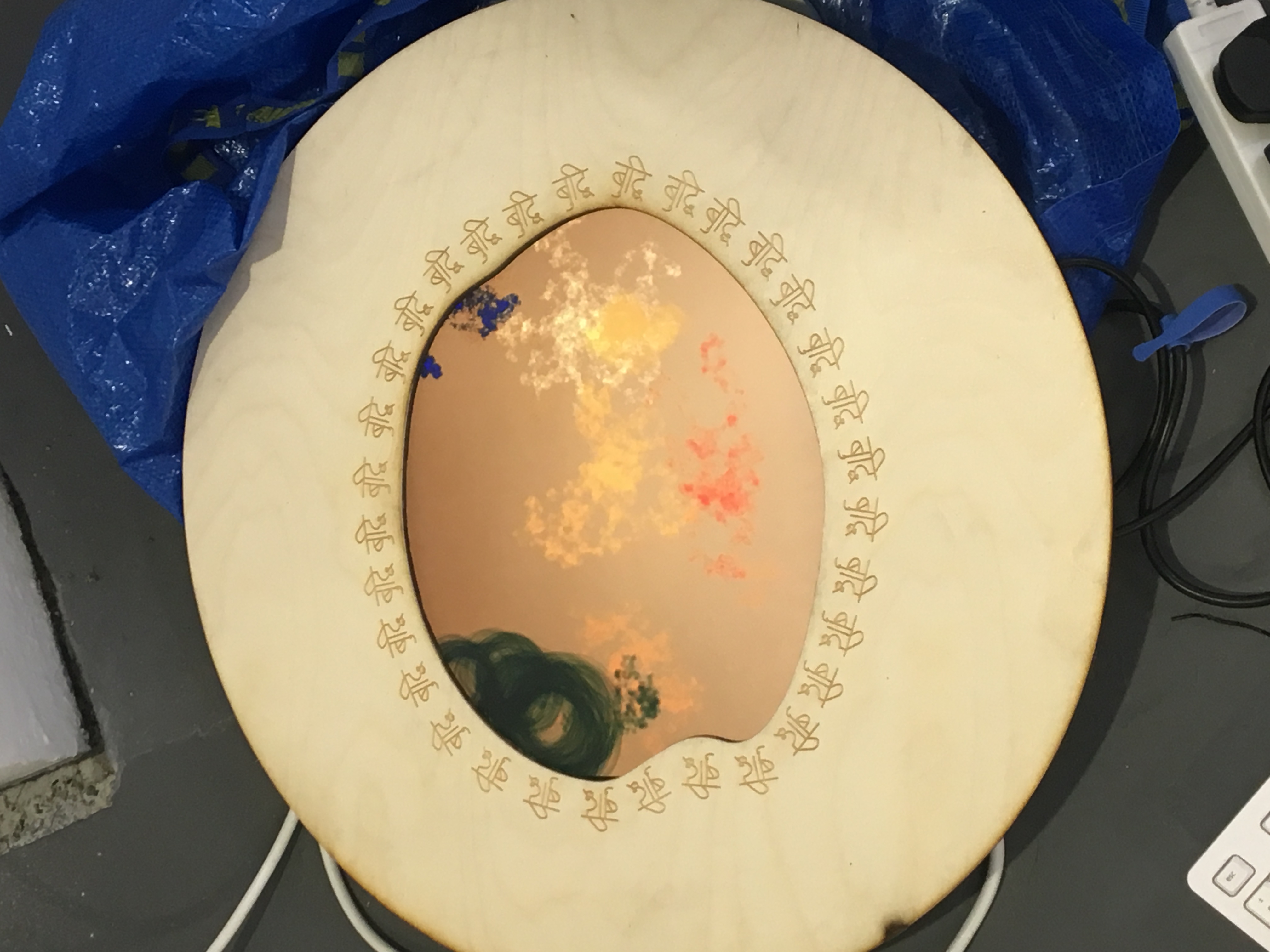

The technologies I used for this project were dictated by how well they could embody or represent each of the intelligences. I made wooden frames and feet which were laser cut and engraved with the name of each of the intelligences in Hindi.

When I started off with Buddhi (Intellect), my initial plan was to work with the Game of Life (cellular automata) in openFrameworks. However, I eventually decided to use clusters of cells that were random walkers, as they were more aesthetically pleasing and organic. I used object-oriented programming in Processing, so each cluster was essentially the same but had different features of colour, shape and fill. This successfully embodied the nature of the intellect, which is simply to follow rules. If we get enamoured by the intellect it feels as if it can take us somewhere; hence as the cells grow and overlap they start to look different. However, it is still limited to its own nature. This sketch ran on a Raspberry Pi, and I wrote a script that launched it automatically when the Pi was powered on. This proved very helpful, as getting access to the Pi from underneath the soil was cumbersome.
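As a rough illustration of the structure described above, here is a minimal Python sketch of clusters that share one behaviour but differ in colour, shape and fill. It is not the original Processing sketch: the class name `Cluster`, its fields, the four-direction step rule and the canvas size are all assumptions made for the example.

```python
import random


class Cluster:
    """A group of cells that random-walk outward from a shared origin."""

    # Assumed rule: each cell moves one grid step left, right, up or down.
    STEPS = [(-1, 0), (1, 0), (0, -1), (0, 1)]

    def __init__(self, origin, colour, shape, filled, n_cells, rng):
        # Visual features differ per cluster; the behaviour is shared.
        self.colour = colour
        self.shape = shape
        self.filled = filled
        self.rng = rng
        self.cells = [origin] * n_cells  # every cell starts at the origin

    def update(self, width, height):
        """Move every cell one random step, clamped to the canvas."""
        moved = []
        for x, y in self.cells:
            dx, dy = self.rng.choice(self.STEPS)
            moved.append((min(max(x + dx, 0), width - 1),
                          min(max(y + dy, 0), height - 1)))
        self.cells = moved


# Two clusters with the same rules but different (hypothetical) features.
rng = random.Random(1)
clusters = [
    Cluster((20, 20), "red", "circle", True, 30, rng),
    Cluster((60, 40), "blue", "square", False, 30, rng),
]
for _ in range(200):
    for cluster in clusters:
        cluster.update(width=80, height=60)
```

Because every cluster runs the same `update` rule, any visual variety comes only from the per-cluster features and from cells overlapping as they spread, which mirrors the idea that the intellect stays within its own rules.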

For Memory (Manas) I wanted to represent a boundary which people would observe but also be willing to cross. Memory in yoga is further divided into elemental, atomic, evolutionary, karmic, sensory, articulate and inarticulate memory. I wanted the physical soil circle to be articulate memory and the three ultrasonic sensors to be inarticulate memory: memory which is present but which you don’t consciously think about. The incense represented sensory memory.

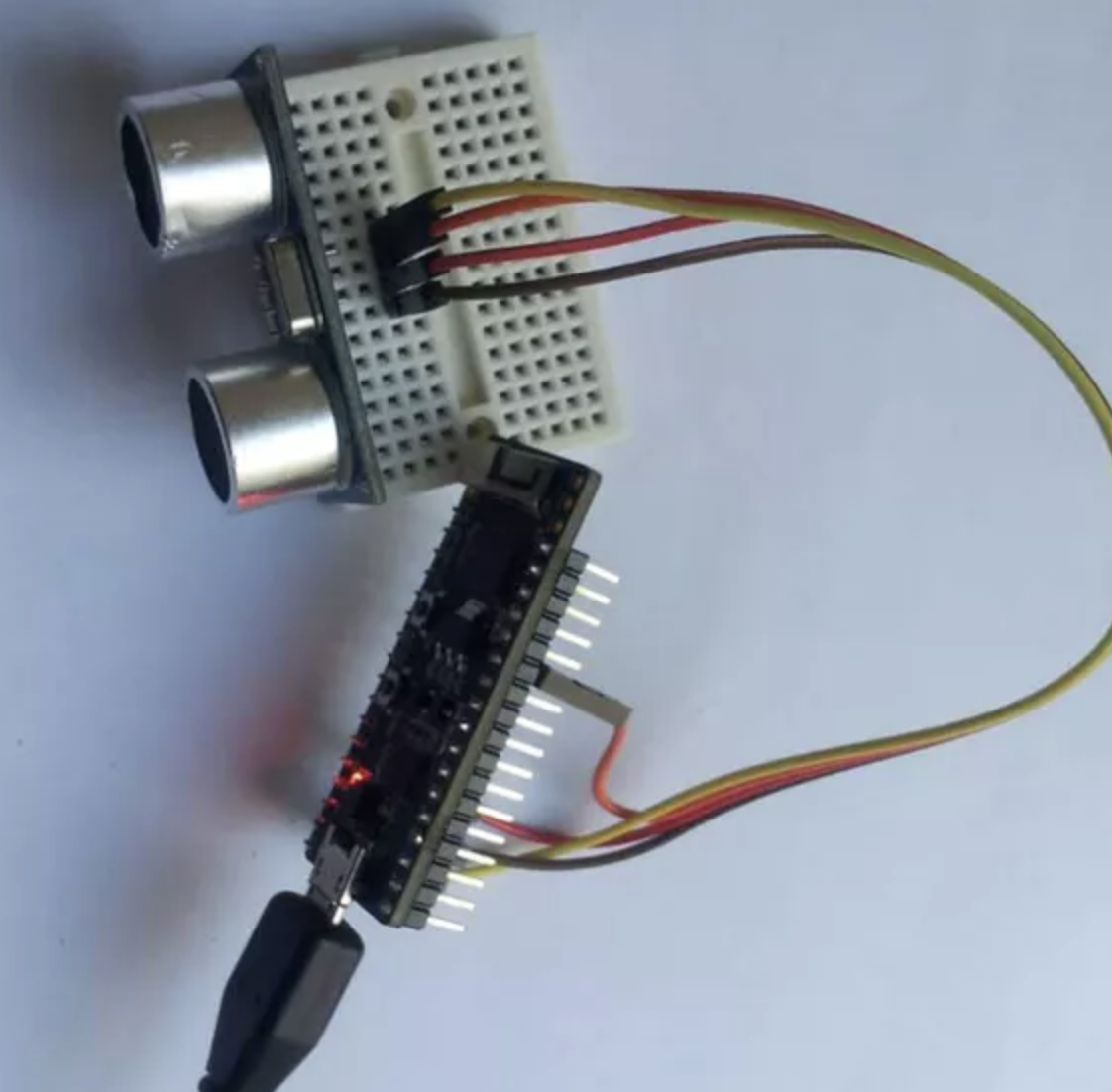

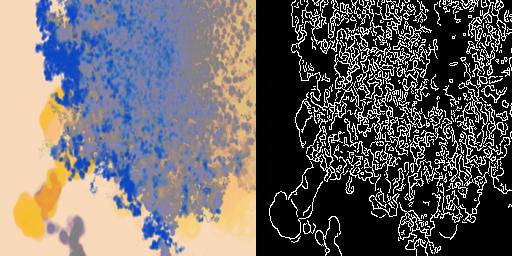

Since memory acts as an input to the intellect, the ultrasonic sensors send data over Wi-Fi via OSC messages to the Processing sketch. I used an ESP32 board as it was readily available and made it possible to send OSC wirelessly. When someone steps in front of a sensor, the Processing sketch adds more of that sensor's particular colour, so your presence affects the aesthetics of the intellect over time. I wanted this to be a subtle process, an interaction which is not visible instantly but rather over time. One of the challenges was how to detect more accurately whether people had crossed the boundary from all sides rather than just 3. I could perhaps have used computer vision with a camera to detect that, but I wanted the second dimension (memory) to be a physical manifestation, hence the sensors.
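To make the sensor-to-sketch link more concrete, here is a minimal Python sketch of how one distance reading could be packed into an OSC message and sent over UDP. It only illustrates the message layout: the installation itself used an ESP32 and Processing, and the address pattern `/manas/<index>`, the integer payload, the function names and the 100 cm presence threshold are all assumptions rather than details from the project.

```python
import socket
import struct


def osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def osc_message(address, value):
    """Build an OSC message carrying a single 32-bit big-endian integer."""
    return osc_string(address) + osc_string(",i") + struct.pack(">i", value)


def send_reading(sock, host, port, sensor_index, distance_cm):
    """Send one sensor reading to the sketch (hypothetical address scheme)."""
    packet = osc_message(f"/manas/{sensor_index}", distance_cm)
    sock.sendto(packet, (host, port))


def is_present(distance_cm, threshold_cm=100):
    """Assumed receiver-side rule: someone is near if the echo is short."""
    return 0 < distance_cm < threshold_cm
```

A sender would create a socket with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` and call `send_reading` once per measurement. On the receiving side, a rule like `is_present` could decide when to add cells of that sensor's colour.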

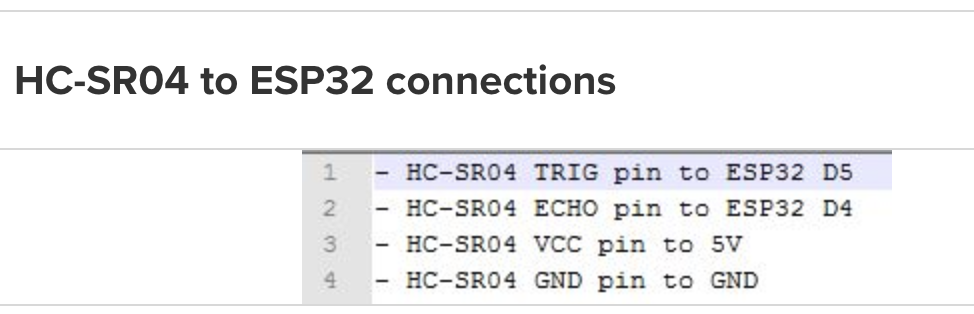

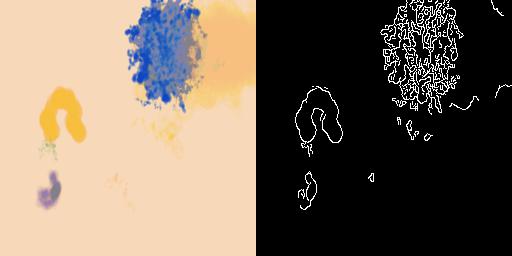

Machine learning felt incredibly appropriate for embodying something like Ahankara (identity). Technically, this was what I struggled with the most: I wanted to use a machine learning model that ran live on a camera feed. I spent a great deal of time trying to run different models and examples, only to realise later that I either did not meet the GPU requirements or could not install all the dependencies on my laptop. I then ventured into machine learning models built in openFrameworks using Gene Kogan’s examples. I managed to make some of them run, but the results were not as interesting as I would have liked. However, I did finally manage to get Memo Akten’s pix2pix model running on my MacBook Pro. I then went on to train a model on my own dataset using Google Colaboratory and replaced the model in Memo’s Python script. For the training I first had to prepare my dataset, which had to be in pairs: one side was the image and the other side was the outline. I used Memo's preprocess script to convert my images into this format, ready for training. After trying various training sets (large and small), I settled on a dataset of 100 images, which were screen grabs from my Processing sketch. Training took approximately 6 hours. For the input feed, the camera was placed at the top so that it overlooked the entire installation, and as people walked through it the pix2pix model started to adapt to it.
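The paired format described above can be sketched in a few lines of Python. This is not Memo's preprocess script: it is a hypothetical stand-in that treats an image as a nested list of grey values, derives a crude outline with a neighbour-difference threshold, and joins the two side by side. The function names, the threshold of 32 and the left/right order are assumptions.

```python
def outline(image, threshold=32):
    """Mark a pixel 255 where it differs sharply from its right or lower
    neighbour, and 0 elsewhere (a crude stand-in for edge detection)."""
    height, width = len(image), len(image[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            here = image[y][x]
            right = image[y][x + 1] if x + 1 < width else here
            below = image[y + 1][x] if y + 1 < height else here
            if abs(here - right) > threshold or abs(here - below) > threshold:
                edges[y][x] = 255
    return edges


def make_pair(image, threshold=32):
    """Join the image (left) and its outline (right), row by row."""
    edges = outline(image, threshold)
    return [img_row + edge_row for img_row, edge_row in zip(image, edges)]


# A tiny 4x4 "screen grab" with a bright square in the middle.
image = [
    [0, 0, 0, 0],
    [0, 200, 200, 0],
    [0, 200, 200, 0],
    [0, 0, 0, 0],
]
pair = make_pair(image)
```

Each row of `pair` is twice as wide as the input, so a pix2pix-style trainer can split every training example down the middle into an image half and an outline half.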

Conceptually, embodying Chitta was one of the most difficult tasks: how could I possibly represent something which meant the absence of any iota of memory, intellect, past experience or identification? I wanted to show how Chitta is everywhere but not accessed. I intentionally kept Chitta away from computation, as the other three dimensions can be done more efficiently by machines than by us, whereas Chitta is something intrinsic to life itself. Hence I decided to represent it with a glowing light inside a frame, which you noticed only when you looked up. It was set apart from the other three, but its shape and frame made it look like part of the same piece.

Future development

As soon as I looked at my final piece I had a feeling that it somehow belonged outdoors. However, I would have a number of outdoor factors to consider, such as how to protect the screen, cables, plugs and sensors from rain, wind and sunlight. Powering everything could also be challenging. I would also like to spend more time experimenting with the training set that I used for my ML implementation.

Ideally, as a longer project, I would attempt to embody all 16 dimensions instead of the 4 main ones and create a much bigger system whose parts interact and exist together. It would be beneficial for me to look into sourcing sustainable wood for my frames and to think about how I could make the entire installation more environmentally friendly.

Self evaluation

The overall natural, organic aesthetic was achieved, and all the elements looked like they belonged together. I would perhaps have liked the screens to be slightly larger, and to have more than 3 sensors in order to cover a bigger area of the circle or make the interaction more prominent for the audience. The boxes I designed for my sensors were not as soil-proof as I had imagined, so this is something I wish to work on further. I was happy to be able to implement the machine learning system; however, I would ideally have liked more time to experiment with training. A very big advantage was being in the space almost a month before the exhibition, which meant that I could design and fit my work according to the location. This was extremely helpful in deciding where the camera and Chitta were placed.

During the first day of the exhibition I realised that my Processing sketch slowed down considerably after 5 or 6 hours, as it was running on a Raspberry Pi. I worked on the code the next day so that it emptied my arrays frequently. Next time I will run my work for a long time before the exhibition starts, to look out for faults and mistakes. For the ML implementation I had to leave my laptop at the exhibition, as it would run considerably slower on any other device such as a Mac mini. I will look into NVIDIA's Jetson Nano and other ML-suited computing platforms, which would work better for long-term exhibits.
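A minimal Python sketch of one way to keep a long-running sketch from slowing down, assuming the slowdown came from arrays that grew without limit. Instead of emptying the arrays periodically, as was done in the installation, this alternative caps the history so the oldest entries drop off automatically. The class name `Trail` and the limit of 500 points are assumptions.

```python
from collections import deque


class Trail:
    """Stores only the most recent positions of a walker."""

    def __init__(self, max_points=500):
        # A deque with maxlen discards the oldest item once it is full.
        self.points = deque(maxlen=max_points)

    def add(self, point):
        self.points.append(point)


# Simulate a long run: memory use stays bounded however many frames pass.
trail = Trail(max_points=3)
for frame in range(10):
    trail.add((frame, frame))
```

With this approach the amount of drawing work per frame stays constant over a multi-hour run, at the cost of older marks no longer being redrawn.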

Looking back, I have learnt so many things that I would never have imagined experimenting with, and a big credit goes to my course mates, who were such a valuable asset to have.

References

Ugo Rondinone, Becoming Soil, 2016

Walter De Maria, The New York Earth Room. Available at: https://diaart.org/visit/visit/walter-de-maria-the-new-york-earth-room-new-york-united-states [Accessed: 10/08/2019]

Prabhu, H. R. Aravinda, and P. S. Bhat. 2013. ‘Mind and Consciousness in Yoga – Vedanta: A Comparative Analysis with Western Psychological Concepts’. Indian Journal of Psychiatry 55 (Suppl 2): S182–86. https://doi.org/10.4103/0019-5545.105524

Vasudev, Jaggi. 2016. Inner Engineering: A Yogi’s Guide to Joy. First Edition. New York: Spiegel & Grau.

Tabita Rezaire. Available at: http://tabitarezaire.com/index.html [Accessed: 13/09/2019]

Memo Akten's Webcam-pix2pix example. Available at: https://github.com/memo/webcam-pix2pix-tensorflow [Accessed: 4/09/2019]

'Learning Processing' by Daniel Shiffman. Available at: http://learningprocessing.com/examples/chp22/example-22-02-polymorphism [Accessed: 13/01/2019]

Gene Kogan's ML implementations. Available at: https://github.com/genekogan [Accessed: 13/07/2019]

Patañjali, Purohit, and W. B Yeats. 1987. Aphorisms of Yôga. London; Boston: Faber.

ESP32 examples from the Arduino add-on. Also available at: https://www.instructables.com/id/Pocket-Size-Ultrasonic-Measuring-Tool-With-ESP32/ [Accessed: 13/07/2019]

Yoga in Visual Art and Culture. Available at: http://etc.ancient.eu/interviews/yoga-as-reflected-in-indian-visual-art-and-culture/ [Accessed: 25/08/2019]

Thank you to all my inspiring tutors: Theo Papatheodorou, Rebecca Fiebrink, Lior Ben-gai, Evan Raskob, Helen Pritchard, Atau Tanaka and Andy Lomas.

A very special thanks to all my course mates, especially Keita Akeda, Julien Mercier, Eirini Kalaitzidi, Raphael Theolade, Harry Morley, Rachel Max and Bingxin Feng.